MIT engineers design an aerial microrobot that can fly as fast as a bumblebee

A time-lapse photo shows a flying microrobot performing a flip. Credit: Courtesy of the Soft and Micro Robotics Laboratory.

By Adam Zewe

In the future, tiny flying robots could be deployed to aid in the search for survivors trapped beneath the rubble after a devastating earthquake. Like real insects, these robots could flit through tight spaces larger robots can’t reach, while simultaneously dodging stationary obstacles and pieces of falling rubble.

So far, aerial microrobots have only been able to fly slowly along smooth trajectories, far from the swift, agile flight of real insects — until now.

MIT researchers have demonstrated aerial microrobots that can fly with speed and agility comparable to those of their biological counterparts. A collaborative team designed a new AI-based controller for the robotic bug that enabled it to follow gymnastic flight paths, such as executing continuous body flips.

With a two-part control scheme that combines high performance with computational efficiency, the robot’s speed and acceleration increased by about 450 percent and 250 percent, respectively, compared to the researchers’ best previous demonstrations.

The speedy robot was agile enough to complete 10 consecutive somersaults in 11 seconds, even when wind disturbances threatened to push it off course.

“We want to be able to use these robots in scenarios that more traditional quadcopter robots would have trouble flying into, but that insects could navigate. Now, with our bioinspired control framework, the flight performance of our robot is comparable to insects in terms of speed, acceleration, and the pitching angle. This is quite an exciting step toward that future goal,” says Kevin Chen, an associate professor in the Department of Electrical Engineering and Computer Science (EECS), head of the Soft and Micro Robotics Laboratory within the Research Laboratory of Electronics (RLE), and co-senior author of a paper on the robot.

Chen is joined on the paper by co-lead authors Yi-Hsuan Hsiao, an EECS MIT graduate student; Andrea Tagliabue PhD ’24; and Owen Matteson, a graduate student in the Department of Aeronautics and Astronautics (AeroAstro); as well as EECS graduate student Suhan Kim; Tong Zhao MEng ’23; and co-senior author Jonathan P. How, the Ford Professor of Engineering in the Department of Aeronautics and Astronautics and a principal investigator in the Laboratory for Information and Decision Systems (LIDS). The research appears today in Science Advances.

An AI controller

Chen’s group has been building robotic insects for more than five years.

They recently developed a more durable version of their tiny robot, a microcassette-sized device that weighs less than a paperclip. The new version utilizes larger, flapping wings that enable more agile movements. They are powered by a set of squishy artificial muscles that flap the wings at an extremely fast rate.

But the controller — the “brain” of the robot that determines its position and tells it where to fly — was hand-tuned by a human, limiting the robot’s performance.

For the robot to fly quickly and aggressively like a real insect, it needed a more robust controller that could account for uncertainty and perform complex optimizations quickly.

Such a controller would be too computationally intensive to be deployed in real time, especially with the complicated aerodynamics of the lightweight robot.

To overcome this challenge, Chen’s group joined forces with How’s team and, together, they crafted a two-step, AI-driven control scheme that provides the robustness necessary for complex, rapid maneuvers, and the computational efficiency needed for real-time deployment.

“The hardware advances pushed the controller so there was more we could do on the software side, but at the same time, as the controller developed, there was more they could do with the hardware. As Kevin’s team demonstrates new capabilities, we demonstrate that we can utilize them,” How says.

For the first step, the team built what is known as a model-predictive controller. This type of powerful controller uses a dynamic, mathematical model to predict the behavior of the robot and plan the optimal series of actions to safely follow a trajectory.

While computationally intensive, it can plan challenging maneuvers like aerial somersaults, rapid turns, and aggressive body tilting. This high-performance planner is also designed to consider constraints on the force and torque the robot could apply, which is essential for avoiding collisions.
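To make the idea concrete, here is a minimal sketch of a model-predictive controller, not the researchers' implementation. It assumes a toy one-dimensional point mass in place of the robot's real aerodynamics, and every number in it (time step, horizon, actuator limit, cost weights) is hypothetical. At each step it predicts the next 20 states from a model, finds the input sequence that best tracks a target while respecting a limit on the input, applies only the first input, and then replans.

```python
import numpy as np

DT = 0.05       # control period in seconds (assumed)
HORIZON = 20    # number of look-ahead steps (assumed)
U_MAX = 5.0     # hypothetical actuator limit on acceleration, m/s^2

# Toy model: 1D point mass, state = [position, velocity], input = acceleration.
A = np.array([[1.0, DT], [0.0, 1.0]])
B = np.array([0.5 * DT**2, DT])


def prediction_matrices(horizon):
    """Return Phi, Gamma so that the stacked future states are Phi @ x0 + Gamma @ U."""
    phi = np.zeros((2 * horizon, 2))
    gamma = np.zeros((2 * horizon, horizon))
    for k in range(horizon):
        phi[2 * k:2 * k + 2] = np.linalg.matrix_power(A, k + 1)
        for j in range(k + 1):
            gamma[2 * k:2 * k + 2, j] = np.linalg.matrix_power(A, k - j) @ B
    return phi, gamma


PHI, GAMMA = prediction_matrices(HORIZON)
Q = np.tile([1.0, 0.1], HORIZON)   # per-step weights on position and velocity error
R = 0.01                           # weight on control effort
HESSIAN = GAMMA.T @ (Q[:, None] * GAMMA) + R * np.eye(HORIZON)
STEP = 1.0 / np.linalg.eigvalsh(HESSIAN).max()   # safe gradient step size


def plan(x0, target, iterations=200):
    """Minimize the quadratic tracking cost by projected gradient descent, |u| <= U_MAX."""
    x_ref = np.tile([target, 0.0], HORIZON)
    u = np.zeros(HORIZON)
    for _ in range(iterations):
        error = PHI @ x0 + GAMMA @ u - x_ref
        grad = GAMMA.T @ (Q * error) + R * u
        u = np.clip(u - STEP * grad, -U_MAX, U_MAX)   # projection enforces the limit
    return u


def run_closed_loop(target=1.0, steps=100):
    """Receding horizon: replan at every step and apply only the first planned input."""
    x = np.zeros(2)
    states, controls = [x.copy()], []
    for _ in range(steps):
        u0 = plan(x, target)[0]
        x = A @ x + B * u0
        states.append(x.copy())
        controls.append(u0)
    return np.array(states), np.array(controls)
```

Calling `run_closed_loop()` returns the simulated trajectory and the inputs that were applied. Even in this toy case the planner solves an optimization problem at every control step, which hints at why a full version with realistic flapping-wing dynamics is costly to run in real time.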

For instance, to perform multiple flips in a row, the robot would need to decelerate in such a way that its initial conditions are exactly right for doing the flip again.

“If small errors creep in, and you try to repeat that flip 10 times with those small errors, the robot will just crash. We need to have robust flight control,” How says.

They use this expert planner to train a “policy” based on a deep-learning model, to control the robot in real time, through a process called imitation learning. A policy is the robot’s decision-making engine, which tells the robot where and how to fly.

Essentially, the imitation-learning process compresses the powerful controller into a computationally efficient AI model that can run very fast.
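The compression step can be illustrated with a minimal behavior-cloning sketch. This is an assumption-laden toy, not the team's training method: the "expert" below is a simple saturated feedback law standing in for the model-predictive controller, the "student" is a single layer of random tanh features fit by ridge regression rather than a deep network, and all gains, limits, and sample counts are hypothetical.

```python
import numpy as np

U_MAX = 5.0  # hypothetical actuator limit


def expert_controller(states, target=0.0):
    """Stand-in expert: a saturated PD law (the article's expert is a model-predictive controller)."""
    pos, vel = states[:, 0], states[:, 1]
    return np.clip(4.0 * (target - pos) - 2.0 * vel, -U_MAX, U_MAX)


def features(states, w, b):
    """Raw state, a random tanh hidden layer, and a bias term (simple stand-in for a deep network)."""
    hidden = np.tanh(states @ w + b)
    return np.hstack([states, hidden, np.ones((len(states), 1))])


def train_policy(n_samples=2000, n_hidden=64, ridge=1e-3, seed=0):
    """Collect expert demonstrations, then fit the student to imitate them."""
    rng = np.random.default_rng(seed)
    states = rng.uniform(-1.0, 1.0, size=(n_samples, 2))   # sampled [position, velocity]
    actions = expert_controller(states)                    # expert labels
    w = rng.normal(scale=2.0, size=(2, n_hidden))
    b = rng.normal(scale=1.0, size=n_hidden)
    phi = features(states, w, b)
    coef = np.linalg.solve(phi.T @ phi + ridge * np.eye(phi.shape[1]), phi.T @ actions)

    def policy(query_states):
        """Cheap student policy: one matrix product per query, no online optimization."""
        return features(np.atleast_2d(query_states), w, b) @ coef

    return policy


def imitation_error(policy, n_test=500, seed=1):
    """Mean squared difference between student and expert on held-out states."""
    rng = np.random.default_rng(seed)
    test_states = rng.uniform(-1.0, 1.0, size=(n_test, 2))
    return float(np.mean((policy(test_states) - expert_controller(test_states)) ** 2))
```

After `policy = train_policy()`, a call such as `policy([0.5, 0.0])` returns the student's command for that state, and `imitation_error(policy)` reports how closely it matches the expert on states it has not seen. The trade is the one the article describes: the expensive controller is queried offline to produce training data, and the learned policy that replaces it is cheap to evaluate.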

The key was having a smart way to create just enough training data, which would teach the policy everything it needs to know for aggressive maneuvers.

“The robust training method is the secret sauce of this technique,” How explains.

The AI-driven policy takes robot positions as inputs and outputs control commands in real time, such as thrust force and torques.

Insect-like performance

In their experiments, this two-step approach enabled the insect-scale robot to fly 447 percent faster while exhibiting a 255 percent increase in acceleration. The robot was able to complete 10 somersaults in 11 seconds, and the tiny robot never strayed more than 4 or 5 centimeters off its planned trajectory.
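A quick calculation helps put those percentages in perspective. The sketch below assumes "447 percent faster" and "a 255 percent increase" are both increases over the earlier baseline, so the new value is the old value times one plus the percentage. The average rotation rate is derived only from the 10 flips in 11 seconds figure and says nothing about the peak rate within a single flip.

```python
def increase_to_multiplier(percent_increase):
    """Convert 'X percent increase' into a multiplier on the baseline value."""
    return 1.0 + percent_increase / 100.0


speed_multiplier = increase_to_multiplier(447)   # about 5.5 times the earlier speed
accel_multiplier = increase_to_multiplier(255)   # about 3.6 times the earlier acceleration

flips, seconds = 10, 11
mean_rotation_rate_deg_s = flips * 360.0 / seconds   # about 327 degrees per second on average
```

Read this way, the robot flies roughly five and a half times as fast as in the group's earlier demonstrations, not four and a half times.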

“This work demonstrates that soft and microrobots, traditionally limited in speed, can now leverage advanced control algorithms to achieve agility approaching that of natural insects and larger robots, opening up new opportunities for multimodal locomotion,” says Hsiao.

The researchers were also able to demonstrate saccade movement, which occurs when insects pitch very aggressively, fly rapidly to a certain position, and then pitch the other way to stop. This rapid acceleration and deceleration help insects localize themselves and see clearly.
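The pitch, dash, and counter-pitch pattern can be approximated with a simple accelerate-then-brake model. This is a rough sketch under stated assumptions, not a description of the experiment: it assumes the vertical component of thrust balances gravity so that a pitch angle θ gives a horizontal acceleration of g·tan(θ), it ignores drag and the time needed to change pitch, and the distance and angle used in any call are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def saccade_profile(distance_m, pitch_deg):
    """Accelerate for half the distance at a fixed pitch, then brake at the opposite pitch."""
    accel = G * math.tan(math.radians(pitch_deg))
    t_switch = math.sqrt(distance_m / accel)   # time at which the pitch reverses
    return {
        "accel": accel,
        "t_switch": t_switch,
        "duration": 2.0 * t_switch,
        "peak_speed": accel * t_switch,
    }


def simulate_saccade(distance_m, pitch_deg, dt=1e-4):
    """Integrate the profile numerically; returns final position and velocity."""
    profile = saccade_profile(distance_m, pitch_deg)
    steps = round(profile["duration"] / dt)
    pos, vel = 0.0, 0.0
    for i in range(steps):
        a = profile["accel"] if i * dt < profile["t_switch"] else -profile["accel"]
        vel += a * dt
        pos += vel * dt
    return pos, vel
```

For example, `saccade_profile(1.0, 45.0)` gives the switch time, total duration, and peak speed for a one-meter dash at a 45-degree pitch under these assumptions, and `simulate_saccade(1.0, 45.0)` confirms that the robot ends near the target with almost no residual velocity.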

“This bio-mimicking flight behavior could help us in the future when we start putting cameras and sensors on board the robot,” Chen says.

Adding sensors and cameras so the microrobots can fly outdoors, without being attached to a complex motion capture system, will be a major area of future work.

The researchers also want to study how onboard sensors could help the robots avoid colliding with one another or coordinate navigation.

“For the micro-robotics community, I hope this paper signals a paradigm shift by showing that we can develop a new control architecture that is high-performing and efficient at the same time,” says Chen.

“This work is especially impressive because these robots still perform precise flips and fast turns despite the large uncertainties that come from relatively large fabrication tolerances in small-scale manufacturing, wind gusts of more than 1 meter per second, and even its power tether wrapping around the robot as it performs repeated flips,” says Sarah Bergbreiter, a professor of mechanical engineering at Carnegie Mellon University, who was not involved with this work.

“Although the controller currently runs on an external computer rather than onboard the robot, the authors demonstrate that similar, but less precise, control policies may be feasible even with the more limited computation available on an insect-scale robot. This is exciting because it points toward future insect-scale robots with agility approaching that of their biological counterparts,” she adds.

This research is funded, in part, by the National Science Foundation (NSF), the Office of Naval Research, Air Force Office of Scientific Research, MathWorks, and the Zakhartchenko Fellowship.

From individuals to crews, AI brings teamwork into construction productivity analysis

2025 Top Article – Redefining industries with robotics and AI

New robotic skin lets humanoid robots sense pain and react instantly

Top Industrial Robots List

The origami wheel that could explore lunar caves

2025 Top Article – Purpose-Built, Specialized Robots are the Future

Robohub highlights 2025

Over the course of the year, we’ve had the pleasure of working with many talented researchers from across the globe. As 2025 draws to a close, we take a look back at some of the excellent blog posts, interviews and podcasts from our contributors.

Teaching robot policies without new demonstrations: interview with Jiahui Zhang and Jesse Zhang

Jiahui Zhang and Jesse Zhang tell us about their framework for learning robot manipulation tasks solely from language instructions without per-task demonstrations.

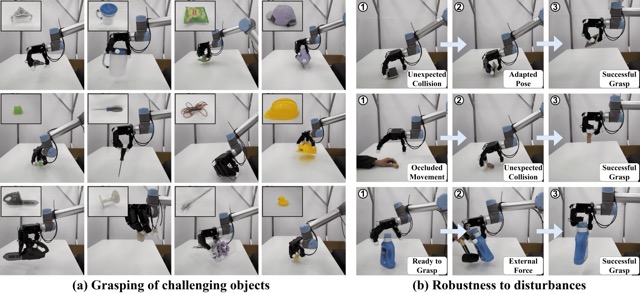

CoRL2025 – RobustDexGrasp: dexterous robot hand grasping of nearly any object

Hui Zhang writes about work presented at CoRL2025 on RobustDexGrasp, a novel framework that tackles different grasping challenges with targeted solutions.

Robot Talk Episode 133 – Creating sociable robot collaborators, with Heather Knight

Robot Talk host Claire Asher chatted to Heather Knight from Oregon State University about applying methods from the performing arts to robotics.

Generations in Dialogue: Human-robot interactions and social robotics with Professor Marynel Vázquez

In this podcast from AAAI, host Ella Lan asked Professor Marynel Vázquez about what inspired her research direction, how her perspective on human-robot interactions has changed over time, robots navigating the social world, and more.

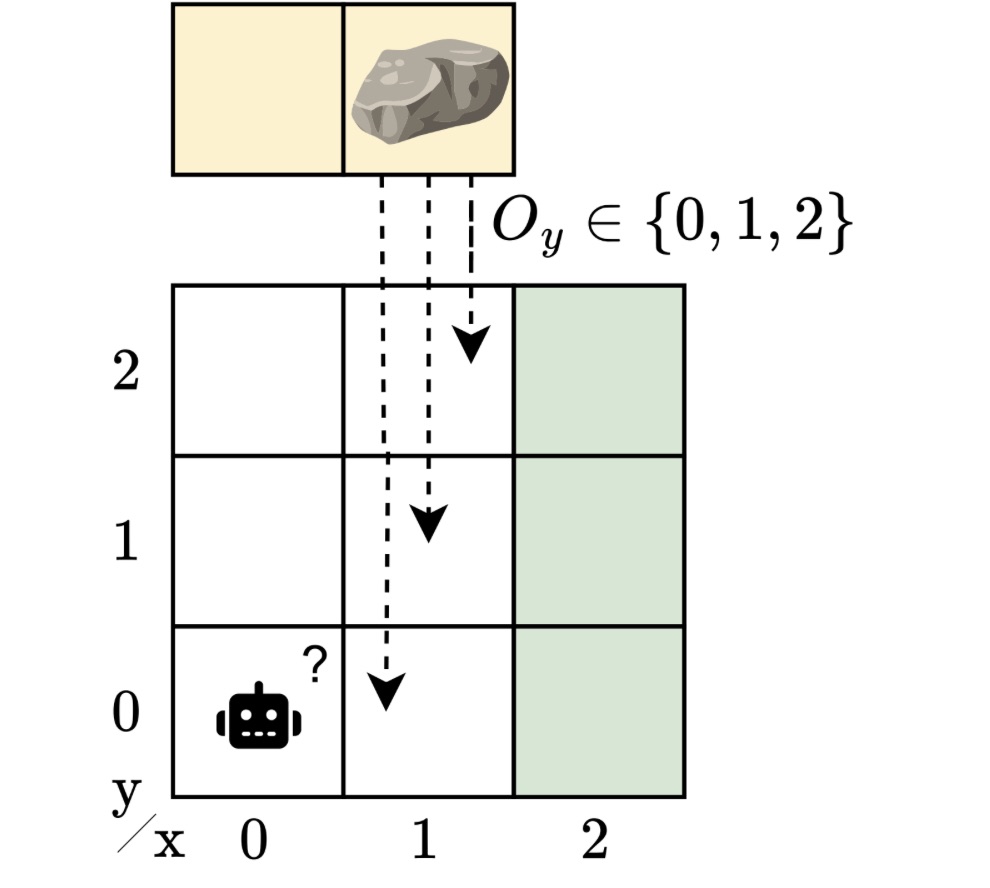

Learning robust controllers that work across many partially observable environments

In this blog post, Maris Galesloot summarizes work presented at IJCAI 2025, which explores designing controllers that perform reliably even when the environment may not be precisely known.

Robot Talk Episode 130 – Robots learning from humans, with Chad Jenkins

Claire Asher chatted to Chad Jenkins from University of Michigan about how robots can learn from people and assist us in our daily lives.

Interview with Zahra Ghorrati: developing frameworks for human activity recognition using wearable sensors

Zahra Ghorrati is pursuing her PhD at Purdue University, where her dissertation focuses on developing scalable and adaptive deep learning frameworks for human activity recognition (HAR) using wearable sensors.

Self-supervised learning for soccer ball detection and beyond: interview with winners of the RoboCup 2025 best paper award

We caught up with some of the authors of the RoboCup 2025 best paper award to find out more about the work, how their method can be transferred to applications beyond RoboCup, and their future plans for the competition.

#IJCAI2025 distinguished paper: Combining MORL with restraining bolts to learn normative behaviour

Agata Ciabattoni and Emery Neufeld introduce a framework for guiding reinforcement learning agents to comply with social, legal, and ethical norms.

Robot Talk Episode 114 – Reducing waste with robotics, with Josie Gotz

Claire Asher chatted to Josie Gotz from the Manufacturing Technology Centre about robotics for material recovery, reuse and recycling.

Multi-agent path finding in continuous environments

Kristýna Janovská and Pavel Surynek write about how a group of agents can minimise their journey length whilst avoiding collisions.

RoboCupRescue: an interview with Adam Jacoff

Find out what’s new in the RoboCupRescue League this year.

An interview with Nicolai Ommer: the RoboCup Soccer Small Size League

We caught up with Nicolai to find out more about the Small Size League, how the auto referees work, and how teams use AI.

Interview with Kate Candon: Leveraging explicit and implicit feedback in human-robot interactions

Hear from PhD student Kate about her work on human-robot interactions.

AIhub coffee corner: Agentic AI

The AIhub coffee corner captures the musings of AI experts over a short conversation.

Generations in Dialogue: Multi-agent systems and human-AI interaction with Professor Manuela Veloso

Host Ella Lan chats to Professor Manuela Veloso about her research journey and path into AI, the history and evolution of AI research, inter-generational collaborations, and more.

Preparing for kick-off at RoboCup2025: an interview with General Chair Marco Simões

We spoke to Marco Simões, one of the General Chairs of RoboCup 2025 and President of RoboCup Brazil.

Gearing up for RoboCupJunior: Interview with Ana Patrícia Magalhães

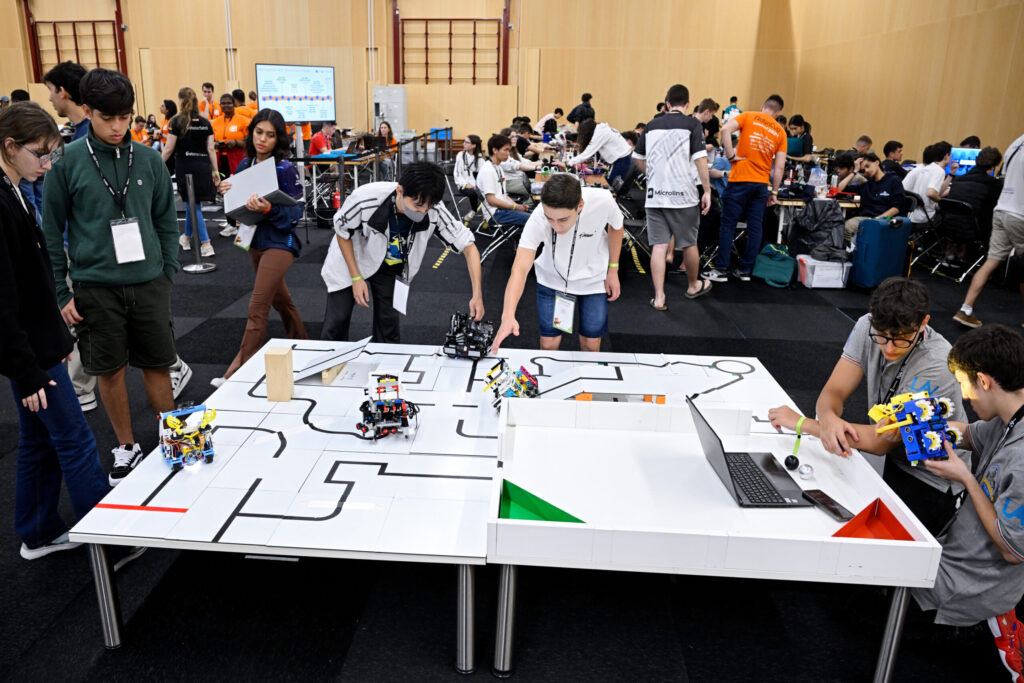

RoboCup Junior Rescue @ WK RoboCup 2024. Photo: RoboCup/Bart van Overbeeke

We heard from the organiser of RoboCupJunior 2025 and found out more about the event.

Top Ten Stories in AI Writing, Q4 2025

Shedding its ‘fun toy’ image, AI like ChatGPT was increasingly seen by the business community in Q4 as a must-have productivity tool destined to reward early adopters and punish Luddites.

ChatGPT’s maker, for example, released a study finding that everyday AI business users are saving at least 40 minutes a day on busy work – while power users are saving up to two hours a day.

Meanwhile, MIT released a report concluding that AI can currently eliminate 12% of all jobs, as more businesses were found issuing decrees along the lines of ‘Use AI or You’re Fired.’

Especially alarming for writers was a move by media outlet Business Insider, which started publishing news stories completely written by AI and carrying an AI byline.

Plus, photographers and graphic artists got their own dose of rubber-meets-road reality with new, back-to-back releases of extremely powerful new AI imaging tools built into Gemini and ChatGPT.

But despite the breakneck development, users also continued to report that AI agents – designed to automate multi-step tasks – are continuing to fail miserably.

Plus, many users continued to ‘forget’ that AI makes up facts, and that using AI responses without fact-checks can lead to major ‘egg-on-face’ moments.

The most gleaming ray of hope in all this: The Wall Street Journal reported that AI is considered so essential to U.S. defense, there’s a good chance the U.S. government will bail out the AI industry if the much-feared ‘AI Bubble’ bursts.

Here’s a full rundown of how those stories, and more, helped shape AI writing in Q4 2025:

*ChatGPT-Maker Study: The State of Enterprise AI: New research from OpenAI finds that everyday business users of AI are saving about 40 to 60 minutes a day when compared to working without the tool.

Even better, the heaviest AI users say they’re saving up to two hours a day with the tech.

*AI Can Already Eliminate 12% of U.S. Workforce: A new study from MIT finds that AI can already eliminate 12% of everyday jobs.

Dubbed the “Iceberg Index,” the study simulated AI’s ability to handle – or partially handle – nearly 1,000 occupations that are currently worked by more than 150 million people in the U.S.

Observes writer Megan Cerullo: “AI is also already doing some of the entry-level jobs that have historically been reserved for recent college graduates or relatively inexperienced workers.”

*Use AI or You’re Fired: In another sign that the days of ‘AI is Your Buddy’ are fading fast, increasing numbers of businesses have turned to strong-arming employees when it comes to AI.

Observes Wall Street Journal writer Lindsay Ellis: “Rank-and-file employees across corporate America have grown worried over the past few years about being replaced by AI.

“Something else is happening now: AI is costing workers their jobs if their bosses believe they aren’t embracing the technology fast enough.”

*Breaking News Gets an AI Byline at Business Insider: The next news story you read from Business Insider may be completely written by AI — and carry an AI byline.

The media outlet has announced a pilot test of a story writing algorithm that will grab a piece of breaking news and give it context by combining it with data drawn from stories in the Business Insider archive.

The only human involvement will be an editor, who will look over the finished product before it’s published.

*Study: AI Agents Virtually Useless at Completing Freelance Assignments: New research finds that much-ballyhooed AI agents are literally horrible at completing everyday assignments found on freelance brokerage sites like Fiverr and Upwork.

Observes writer Frank Landymore: “The top performer, they found, was an AI agent from the Chinese startup Manus with an automation rate of just 2.5 percent — meaning it was only able to complete 2.5 percent of the projects it was assigned at a level that would be acceptable as commissioned work in a real-world freelancing job, the researchers said.

“Second place was a tie, at 2.1 percent, between Elon Musk’s Grok 4 and Anthropic’s Claude Sonnet 4.5.”

*Oops, Sorry Australia, Here’s Your Money Back: Consulting firm Deloitte has agreed to refund the Australian government $440,000 for a study both agree was riddled with errors created by AI.

Observes writer Krishani Dhanji: “University of Sydney academic Dr. Christopher Rudge — who first highlighted the errors — said the report contained ‘hallucinations’ where AI models may fill in gaps, misinterpret data, or try to guess answers.”

Insult to injury: The near half-million-dollar payment is only a partial refund of what the Australian government actually paid for the flawed research.

*Forget Benchmarks: Put AI Through Your Own Tests Before You Commit: While benchmarks offer an indication of the AI solution you’re considering, you really need to put the AI through your own tests before you opt for anything, according to Ethan Mollick.

An associate professor of management at the Wharton School of the University of Pennsylvania, Mollick studies and teaches entrepreneurship, innovation and how AI is changing work and education.

Observes Mollick: “You need to know specifically what your AI is good at — not what AIs are good at on average.”

*AI Gets a Number One Country Hit: Well, it’s official: AI can now write and produce a country hit with the best of ’em.

“Walk My Walk,” a song credited to an AI artist named ‘Breaking Rust,’ has hugged the number one spot on Billboard’s Country Digital Song Sales chart for two weeks in a row.

The hit comes on the heels of another AI hit in another music genre, according to Billboard’s Adult R&B Airplay chart.

*Free AI from China Keeps U.S. Tech Titans on Their Toes: While still holding a slim lead, major AI players like ChatGPT, Gemini and Claude are feeling the nip-at-their-heels of ‘nearly as good’ – and free – AI alternatives from China.

Key Chinese players like DeepSeek and Qwen, for example, are within chomping distance of the U.S. market leaders, and are open source, or freely available for download and tinkering.

One caveat: Researchers have found AI code embedded in some Chinese AI that can be used to forward your data along to the Chinese Communist Party.

*Solution to AI Bubble Fears: U.S. Government?: The Wall Street Journal reports that AI is now considered so essential to U.S. defense, the U.S. government may step in to save the AI industry — should it implode from the irrational exuberance of investors.

Observes lead writer Sarah Myers West: “The federal government is already bailing out the AI industry with regulatory changes and public funds that will protect companies in the event of a private sector pullback.

“Despite the lukewarm market signals, the U.S. government seems intent on backstopping American AI — no matter what.”

–Joe Dysart is editor of RobotWritersAI.com and a tech journalist with 20+ years of experience. His work has appeared in 150+ publications, including The New York Times and the Financial Times of London.

The post Top Ten Stories in AI Writing, Q4 2025 appeared first on Robot Writers AI.

The Silicon Manhattan Project: China’s Atomic Gamble for AI Supremacy

Reports surfaced this week confirming what many industry observers had long suspected but feared to articulate: China has launched a “Manhattan Project” for semiconductors. This massive, state-backed initiative has a singular, existential goal—to reverse-engineer the ultra-complex lithography machines currently monopolized […]

The post The Silicon Manhattan Project: China’s Atomic Gamble for AI Supremacy appeared first on TechSpective.