Here’s my annual summary of the top stories of the prior year. This time the news was a strong mix of bad and good.

Read the text story on Forbes.com at Robocars 2022 year in review.

And see the video version here:

California has released the disengagement reports the law requires companies to file, and it’s a lot of data. Also worth noting is Waymo’s own blog post on their report, in which they say their miles per disengagement improved from 5,600 to 11,000.

Fortunately, some hard-working redditors and others have summarized the data, including this one from Last Driver’s Licence Holder. Most notable are Apple’s absolutely ridiculous number and the fact that only Waymo and Cruise have numbers suggesting real capability, with Zoox coming in from behind.

The problem, of course, is that “disengagements” is a messy statistic. Different teams report different things. Different disengagements have different importance. And it matters how complex the road you are driving is. (Cruise likes to make a big point of that.)

Safety drivers are trained to disengage if they feel at all uncomfortable. This means that they will often disengage when it is not actually needed. So it’s important to do what Waymo does, namely to play back the situation in a simulator to see what would have happened if the driver had not taken over. That playback can reveal if it was:

A real measurement involves a complex mix of all these, and I’ll be writing up more about how we could possibly score these.

We know the numbers for these events for humans thanks to “naturalistic” driving studies and other sources. Turns out that humans are making mistakes all the time. We’re constantly not paying attention to something on the road we should be looking at, but we get away with it. We constantly find ourselves drifting out of a lane, or find we must brake harder than we would want to. But mostly, nothing happens. Robots aren’t judged that way — any mistake is a serious issue. Robocars will have fewer crashes of the “somebody else was in the wrong place when I wasn’t looking” kind. Their crashes will often have causes that are foreign to humans.

In Waymo’s report you can actually see a few disengagements that happened because the perception system didn’t see something. That’s definitely something to investigate and fix, but humans fail to see things very frequently, and we still do tolerably well.

A summary of the numbers for humans on US roads:

The other very common type of disengagement is a software disengagement. Here, the software decides to disengage because it detects something is going wrong. These are quite often not safety incidents. Modern software is loaded with diagnostic tests, always checking if things are going as expected. When one fails, most software just logs a warning, or “throws an exception” to code that handles the problem. Most of the time, that code does indeed handle the problem, and there is no safety incident. But during testing, you want to disengage to be on the safe side. Once again, the team examines the warning/exception to find out the cause and tries to fix it and figure out how serious it would have been.
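The pattern described above (a diagnostic check raises an exception, a handler copes with it, but a test vehicle disengages anyway to be on the safe side) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any team’s actual code: the sensor-latency check, its 100 ms limit and the names `DiagnosticFailure`, `check_sensor_latency` and `run_cycle` are all hypothetical.

```python
import logging

logger = logging.getLogger("diagnostics")


class DiagnosticFailure(Exception):
    """Raised when a self-check finds something unexpected (hypothetical)."""


def check_sensor_latency(latency_ms: float, limit_ms: float = 100.0) -> None:
    # Hypothetical self-check: flag sensor data that arrives later than expected.
    if latency_ms > limit_ms:
        raise DiagnosticFailure(f"sensor latency {latency_ms} ms exceeds {limit_ms} ms")


def run_cycle(latency_ms: float, testing: bool) -> str:
    """Return the action for one control cycle: 'continue' or 'disengage'."""
    try:
        check_sensor_latency(latency_ms)
    except DiagnosticFailure as err:
        # The handler records the problem so the team can examine it later.
        logger.warning("diagnostic failed: %s", err)
        if testing:
            # During testing, hand control to the safety driver just in case.
            return "disengage"
        # Outside testing, assume the handler's fallback copes and keep driving.
        return "continue"
    return "continue"
```

The same warning produces different outcomes depending only on the `testing` flag, which is why many logged software disengagements turn out not to be safety incidents.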

That’s why Waymo’s 11,000 miles is a pretty good number. They have not published it in a long time, but their number of miles per “necessary intervention” is much higher than that. In fact, we can bet that in the Phoenix area, where they have authorized limited operations with no safety driver, it’s better than the numbers above.
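To see why the choice of what to count matters, here is a small Python sketch that computes miles per disengagement both ways. The log and its numbers are made up for illustration (110,000 miles and 10 takeovers, 2 of which simulator playback judged necessary); they are not Waymo’s data, and `Disengagement` and `miles_per_event` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Disengagement:
    reason: str       # hypothetical labels, e.g. "driver discomfort"
    necessary: bool   # True if playback showed trouble without the takeover


def miles_per_event(total_miles: float, events: list[Disengagement],
                    necessary_only: bool = False) -> float:
    """Miles driven per counted disengagement (inf if none were counted)."""
    counted = [e for e in events if e.necessary or not necessary_only]
    if not counted:
        return float("inf")
    return total_miles / len(counted)


# Hypothetical log: 10 takeovers over 110,000 test miles, 2 judged necessary.
log = (
    [Disengagement("driver discomfort", False)] * 6
    + [Disengagement("software warning", False)] * 2
    + [Disengagement("perception miss", True)] * 2
)

print(miles_per_event(110_000, log))                       # 11000.0
print(miles_per_event(110_000, log, necessary_only=True))  # 55000.0
```

The same driving record yields a figure five times better once only necessary interventions are counted, which is why raw disengagement numbers from different teams are hard to compare.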

My feet are aching, as usual, after 3 days on the CES show floor, and the question people always ask others there is “what have you seen that was interesting?”

I won’t say I didn’t see anything interesting, and I had a large number of rewarding conversations with all sorts of companies, making the trip very worthwhile, but I will say I saw less that was new and exciting than ever before. This may be a result of the show’s constant growth: even in 3 days I did not manage to get to 3 1/2 of its major rooms, since I put my focus on cars as I usually do.

A larger fraction of CES is not even remotely consumer electronics. In fact, the reason I go is largely the robocar-related companies, none of whom are selling to consumers at this time, and most of which never plan to. And yes, the amazing new TVs from Samsung, LG and others are always a draw — LG always creates an astonishing video wall, and all the companies were now displaying 8K TVs as a real product (even if a very expensive one for which no video sources are available, but which will be fantastic for still photos).

My favourite part of the show, Eureka Park, also disappointed, even though I gave it most of a day. The proportion of poorly thought-through products is always high, but you search for gems that might someday change the world. I saw few Eureka moments.

Several fields, such as IoT/smart home, were growing and maturing, with lots more companies, but at the same time, very little in smart home moves beyond “Oh, that sounds nice” into truly appealing and useful. I’ve had computer-controlled lights for decades. They are handy, and I would rather have them than not, but they aren’t essentials the way my phone and a dozen apps within it are, things I can’t do without any more.

There were, again, a ton of different LIDAR companies. What’s interesting about the LIDAR crowd is that they are almost all quite different, in many cases taking entirely different approaches to the technology — the output, the steering, the sensing, the band and even the core technology. I’ll have more coverage of that later. Of course these are not for consumers.

Other press were equally unexcited — I saw far less coverage of the “hot new thing” in the media this year as the show opened and progressed. This was the year of mostly incremental improvements.

Flying car style vehicles did generate excitement, but they were barely there. The most buzz was about Bell Helicopter’s “Nexus” tilt-rotor hybrid vehicle on display. It’s a high-end vehicle, with 6 giant ducted-fan tilt-rotors, and very much a prototype. Bell knows a lot about tilt-rotors from their work on the V-22 Osprey, a vehicle with a rather checkered history, so it’s not clear how this will go. The few other companies doing flying vehicles (for people) had tiny booths showing nothing.

The Nexus is a hybrid-hybrid — both multirotor and fixed-wing flight, and both electric batteries and gasoline powered generator for power.

There was some interesting battery technology, including a small startup named GBatteries which claimed that by pulsing the charge voltage they could charge at vastly faster speeds — 5C, or 10 minutes from empty to 80%, and 5 minutes to 50%, without any more heat or battery damage than today’s fast charging (which takes about an hour). Their demo was just on a drill, so it has yet to be proven in a car, but it would change some of the usability of battery devices and electric cars.
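The C-rate arithmetic behind these claims is simple: 1C is the current that would fill the whole battery in one hour, so at a constant rate of N·C, adding a fraction f of capacity takes f/N hours. The Python sketch below assumes a constant rate with no taper near full, which real chargers don’t do, so treat it as a rough check only. By that idealized measure, 80% at 5C takes about 10 minutes, while 50% in 5 minutes would need closer to 6C. The function name `minutes_to_charge` is hypothetical.

```python
def minutes_to_charge(fraction: float, c_rate: float) -> float:
    """Idealized minutes to add `fraction` of capacity at a constant C-rate.

    1C fills the battery in one hour, so hours = fraction / c_rate.
    Real chargers taper near full, so this underestimates real times.
    """
    return fraction / c_rate * 60.0


print(f"{minutes_to_charge(0.8, 5):.1f}")  # 9.6  (5C, empty to 80%)
print(f"{minutes_to_charge(0.5, 5):.1f}")  # 6.0  (5C, empty to 50%)
print(f"{minutes_to_charge(0.8, 1):.1f}")  # 48.0 (roughly today's ~1C fast charging)
```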

I saw other interesting battery tech, but there is some irony: because of all the investment made by Tesla and others in standard lithium-ion cells, these cells are now better than other, more innovative technologies, thanks to their mass production (driving down the cost) and heavy R&D (improving charge times and reducing battery degradation). The lithium-ion cell was created to be lightweight for laptops, where weight is absolutely crucial, but now it’s everywhere, from cars to scooters to even grid storage.

There was an uptick in mobility products beyond cars (or at least I was paying more attention to them), including scooters, delivery, bike riding and more.

A number of other summaries of 2018 in robocars have called it a bad year, the year it all went south, even the year the public realized that robocars will never come.

In fact, 2018 was the year the field reached a new level of maturity, as its warts began to show, and we saw the first missteps (minor and major) and the long anticipated popping of some of the hype.

As predicted by Gartner’s famous “hype cycle,” any highly hyped technology goes through a “trough of disillusionment” after the initial surge. I see several reasons why the trough is happening now:

In reality, the plan at Waymo and many other companies has always been to build a car that serves a limited set of streets — a small set of easy streets — at the start, and then starts growing the network of streets, and eventually towns with streets, as time goes by.

There is going to be a big surge once the technology reaches a level where the remaining problems are no longer technological but logistic. That is to say, when the barrier to expanding to new streets and cities is the detailed work of mapping those streets, learning the local rules and working with local governments. That’s when the “land rush” happens. The limiting factor there is time and talent more than money.

But none of that happens until the cars are ready for deployment, and until they are, they will be tested as prototypes with safety drivers in them. Even the first prototype services, like Waymo’s and Zoox’s and others, will have safety drivers in them.

No question the big story this year was the death of Elaine Herzberg as the result of a compound series of errors and bad practices at Uber. The story is notable for many reasons, including of course how it happened, but also the public’s reaction. For a long time, I’ve been assured by many skeptics that the first death would mean the end of the robocar dream. The public actually thinks the first deaths were in Teslas (they weren’t) and Tesla stock went up after they took place. The Uber fatality was real, and did teach us that teams are capable of more negligence than I had thought. While it did scale up public distrust, and Uber did shut down their program for at least a year, the overall effect still seems modest. (The larger effect will be much greater intolerance for the next fatality, the one that would have been the first.)

Here are some of my many posts on Uber this year:

Waymo remains the clear leader in the field, so the next top story has to be about them, but sadly it’s the story of their first miss — promising to launch in 2018 and feeling forced to do a “launch” that was really just a formalization of existing activity. I believe that Uber is partly to blame here, in that it did use up a lot of the public’s tolerance for errors, especially in the Phoenix area.

Waymo soft launches in Phoenix, but

The better story for Waymo, however, was their first totally unmanned operations earlier in the year. This also disappointed people because these unmanned operations were on a much more limited scale than people originally imagined, but it’s still a major milestone. It means Waymo’s team convinced the lawyers and board that the systems were good enough to take this risk, even if only in a limited area.

Waymo goes totally unmanned, arbitration and other news

This was also the year that “flying cars,” also known as e-VTOL aircraft, “took off.” It’s now clear the engineering problems are close to solved, though many social and logistic problems remain. These vehicles are at the stage robocars were at 10 years ago, and the excitement is building. Sebastian Thrun, the modern “father of self-driving cars” and the man who first got me excited about them, has switched his efforts to flying. I’ll be writing more on this in the coming year.

In chronological order, not order of importance

The main focus of this site is my essays on the issues and future of robocars. Here are the ones from this year I think you will find most valuable.

Waymo announced today they will begin commercial operations in the Phoenix area under the name “Waymo One.” Waymo has promised that it would happen this year, and it is a huge milestone, but I can’t avoid a small bit of disappointment.

Regular readers will know I am a huge booster of Waymo, not simply because I worked on that team in its early years, but because it is clearly the best by every metric we know. However, this pilot rollout is also quite a step down from what was anticipated, though for sensible reasons.

Other companies — including Waymo, Uber, Lyft and several others — have offered limited taxi services with safety drivers. This service is mainly different in its polish and level of development — or at least that’s all we have been told. They only say they “hope” to expand it to people outside the early rider program soon.

In other words, Waymo has missed the target it set of a real service in 2018. It was a big, hairy, audacious target, so there is no shame or surprise in missing it, and it may not be missed by much.

There is a good reason for missing the target. The Uber fatality, right in that very operation area, has everybody skittish. The public. Developers. Governments. It used up the tolerance the public would normally have for mistakes. Waymo can’t take the risk of a mistake, especially in Phoenix, especially now, and especially if it is seen to have come about because they tried to go too fast, or took new risks like dropping safety drivers.

I suspect at Waymo they had serious talks about not launching in Phoenix, in spite of the huge investment there. But in the end, changing towns may help, but not enough. Everybody is slowed down by this. Even an injury-free accident that could have had an injury will be problematic — and the truth is, as the volume of service increases, that’s coming.

It was terribly jarring for me to watch Waymo’s introduction video. I set it to play at one minute, where they do the big reveal and declare they are “Introducing the self driving service.”

The problem? The car is driving down N. Mill Avenue in Tempe, the road on which Uber killed Elaine Herzberg, about 1,100 feet from the site of her death. Waymo assures me that this was entirely unintentional — and those who live outside the area or who did not study the accident may not recognize it — but it soured the whole launch for me.

A recent Reuters story suggests Cruise is well behind schedule with one insider saying “nothing is on schedule” and various reports of problems not yet handled. This puts doubt into GM’s announced plan to have a commercial pilot without safety drivers in operation in San Francisco in 2019.

The problem for me, and everybody else, is that it’s very hard to judge the progress of a project from outside. This is because it’s “easy” to get a basic car together and do demo runs on various streets. Teams usually have something like that up and running within a year. Just 2 years in, Google had logged 100,000 miles on 1,000 different miles of road. Today, it’s even easier.

I love to talk about the coming robocar world. Over the next few decades, more and more trips will be made in robocars, and more and more people will reduce or give up car ownership to live the robotaxi life. This won’t be instantaneous, and it will happen in some places decades before it happens in others, but I think it’s coming.

But what of the driver of the regular car? What lies ahead for those who love driving and want to own a traditional car? I often see people declare that nobody will own cars in the future, and that human driving will even be banned. Is that realistic?

The transition to robocars must be gradual, one car at a time, at least in most of the world. That means lots of human driven cars on all the roads for decades to come.

Some people predict that human driving will quickly be banned. This won’t happen in most places simply because there will still be lots of places robocars don’t go because it’s not commercially viable to certify them there. In addition, there will be strong political opposition. At a rough guess, around a third of people never have a car accident in their lives. What is the justification for taking away their licences?

When I give talks on robocars, I usually get some people telling me they can’t imagine why anybody would drive or own a car in the future, and others declaring that only a minority will give up the fun, freedom and control of manual driving. The real answer will be a mix. Though to those who tell me that Americans love cars too much to ever give them up, I ask how it is that car-loving Californians can move to Manhattan and give up car ownership in 15 minutes.

We might see the rise of robocar-only lanes in certain places. There might be a special highway lane, where faster driving is allowed, and platooning takes place.

More dramatic would be the designation of certain downtown areas as robocar only at certain times or all the time. It’s not unusual for there to be driving restrictions in downtowns, particularly “old city” downtowns with small streets as found in Europe. Sometimes downtown streets are converted to pedestrian malls as well. It’s possible to imagine the robocars being deemed well behaved enough to go into these restricted areas.

We might also see robocars allowed to access, under certain rules, the private right-of-way used by transit lines, particularly bus rapid transit paths. It’s also possible rail lines and tunnels could get partially paved to allow the robocars to use them when the transit vehicle is not. They can be trusted not to interfere, and they can also drive reliably on thin strips of pavement — like rails for tires — if necessary.

This is not taking anything away from regular cars, but rather it’s giving the robocars a privilege the traditional cars never got.

More threatening to the human driver might be time restrictions or congestion restrictions. Once robocars provably cause less congestion, we might see congestion taxes on human drivers, or limitations on human driving during congested times, just as we sometimes see for trucks.

Many years down the road, cities might realize that when building new infrastructure, there are advantages in making it only for robocars, or for certain classes of robocars, such as lightweight electric single passenger cars. You can build very small tunnels for them, or much cheaper bridges and elevated roadways. They can drive reliably on a thin lane, saving a lot of money. Your old car won’t go there, but then again, it can’t go there now.

It’s also possible we might see housing neighbourhoods that take advantage of some things robocars can do, like serve the homes only with a single lane back alley, which comes with occasional wide spots for cars to do perfectly timed passing of one another on the short lanes.

Like many people, I think that robocars will be electric. As we get more electric cars, they might get privileges on the road you don’t get if you drive a manual gas guzzler. This even includes things like driving into buildings.

The vast majority of the changes due to robocars will be on urban streets and highways. Commuting roads. Even those who love to drive don’t tend to love the urban commute. The country roads, the scenic coastal and mountain routes — these will stay pretty much the same for a long time to come. In fact, you might like having a robocar drive you out of the city to places where it’s fun to drive, and then take the wheel (or switch to a sportscar) to enjoy those great drives.

Robocars must mix with regular cars, but they are able to do a better job at helping reduce traffic congestion by obeying on-the-fly rules about road use. They make use of “virtual infrastructure” — things like maps that show everything about the roads and their rules.

Drivers of regular cars can participate in this as well, with just a smartphone. If the day comes when cities want to meter or charge for roads using computer networks, the robocars will be ready to do it, but so will the ordinary drivers as long as they have that phone.

Robotaxis don’t need to park that much — they would rather be out working on another ride. Private robocars will drop their masters off at the door and then go find the cheapest parking nearby. That parking doesn’t have to be right next door; they will shop around in a way that human drivers can’t, and park themselves densely, valet style. So they won’t pay that much for parking. You’ll probably have to pay more, since you need to park your car right where you’re going while they don’t. You also take up more space.
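Shopping around for parking is a small optimization: the robocar weighs the parking fee against the cost of driving there empty and back. The Python sketch below is an illustration only; the lot names, prices and the assumed $0.10 per minute of empty driving are hypothetical, as are the names `Lot`, `total_cost` and `cheapest_lot`.

```python
from dataclasses import dataclass


@dataclass
class Lot:
    name: str
    hourly_price: float   # dollars per hour (hypothetical)
    drive_minutes: float  # one-way empty drive from the drop-off point


def total_cost(lot: Lot, hours: float, cost_per_minute: float) -> float:
    """Parking fee plus the cost of the empty drive there and back."""
    return lot.hourly_price * hours + 2 * lot.drive_minutes * cost_per_minute


def cheapest_lot(lots: list[Lot], hours: float, cost_per_minute: float = 0.10) -> Lot:
    """Pick the lot with the lowest total cost for a stay of `hours`."""
    return min(lots, key=lambda lot: total_cost(lot, hours, cost_per_minute))


lots = [
    Lot("garage at the door", hourly_price=8.0, drive_minutes=0),
    Lot("lot across town", hourly_price=2.0, drive_minutes=10),
]
print(cheapest_lot(lots, hours=4).name)     # lot across town
print(cheapest_lot(lots, hours=0.25).name)  # garage at the door
```

For a 4-hour stay the distant lot costs $10 against $32 at the door, while for a 15-minute errand the short drive no longer pays off. A human driver who must walk from the car rarely makes this trade so freely.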

Many parking spaces on the street might be restricted to robocars at certain times. That’s because robocars don’t park, they “stand,” able to move away at any time. A robocar can stand in front of a driveway or even a fire hydrant. And we might see important streets that only let robocars park on them as rush hour approaches, because they can be trusted to reliably clear the parking lanes and make them open for traffic. Robocars can also double park where there is room, because they can get out of the way if the “blocked” car wants to get out.
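A rule like the rush-hour curb lane above is the kind of thing “virtual infrastructure” could publish as map data with time windows. The Python sketch below is a hypothetical encoding, not any real map format: the `CurbRule` fields, the 3 pm to 7 pm windows, and the choice that a time with no published rule means ordinary parking are all assumptions.

```python
from dataclasses import dataclass
from datetime import time
from typing import Optional


@dataclass(frozen=True)
class CurbRule:
    start: time           # inclusive
    end: time             # exclusive
    parking_allowed: bool
    robocar_only: bool


# Hypothetical map entry: robocars may park as rush hour approaches,
# then the lane must be clear for traffic.
CURB_RULES = [
    CurbRule(time(15, 0), time(16, 0), parking_allowed=True, robocar_only=True),
    CurbRule(time(16, 0), time(19, 0), parking_allowed=False, robocar_only=False),
]


def rule_at(rules: list[CurbRule], now: time) -> Optional[CurbRule]:
    """Return the rule whose time window contains `now`, if any."""
    for rule in rules:
        if rule.start <= now < rule.end:
            return rule
    return None


def may_park(rules: list[CurbRule], now: time, is_robocar: bool) -> bool:
    rule = rule_at(rules, now)
    if rule is None:
        return True  # assumption: no published rule means ordinary parking
    if not rule.parking_allowed:
        return False
    return is_robocar or not rule.robocar_only
```

A human driver with a smartphone app could query the same data, so the rules need not be robocar-only in how they are published, only in whom they admit.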

The existence of cheap delivery robots might change how much people want trucks or vehicles with lots of cargo space. If you know that, when you want to move a big thing, you can easily call up a delivery robot, you might reduce your desire for cargo space. On the other hand, if you want a van with everything in it all the time, you might still go for that.

The price of insurance is based on how frequently people like you have accidents. Unless you think you will have more accidents, your insurance won’t go up. In fact, as the roads get safer and more orderly, you might have fewer accidents. And late model manually driven cars will still be loaded up with new accident prevention features, some of them a result of robocar research. That means your insurance will get a bit cheaper. Of course, the robocars will drive more safely than you, and pay even less for the cost of accidents. If not, they’re doing it wrong.

Kids are alive today who won’t get a licence due to robocars. That’s an easy prediction, because kids are alive today who are not getting licences due to transit and Uber. This will grow. As it grows, it will become more normal, which means more effort to accommodate them, and a bit more pressure against human driving in some areas and times. Parents will cut back on the idea of giving a teenager a car, preferring to offer them a “card” which provides robotaxi service. A time will come when preferring to drive manually will be seen as a touch odd, something for the older generation and the enthusiast.

As I outline in my timeline for deployment, robocars will roll out on very different schedules based on where you are. There will be cities that have heavy robotaxi penetration in the 2020s, while other cities have next to none. There will be countries which have no robocars even in the 2030s.

That’s good news for people who want to give up car ownership and sell their existing car. Even if they might have a hard time selling a used car in a city where car ownership is decreasing, there will still be plenty of places, both in the USA and around the world, where the car can be sold.

In addition, robotaxi service will not be available in rural areas for a very long time. Out there you will find only traditional cars and privately owned robocars. There will be many places to enjoy driving and sell cars.

Conversely, there is talk that countries like China, which have been known to do bold projects and are building brand-new cities, might declare a city to be all-robocars before too long. While that’s possible, it is not going to affect how you drive outside that city.

Privately owned robocars will have a steering wheel available, though if it’s for rare use it may be more like a video game wheel. You’ll need it to drive places robocars are not rated to go, like off-road or the dirt road to grandpa’s house — or just the many places the robocar doesn’t have maps and safety certification for. This probably won’t be “fun” sports driving, but it could be. Taxis won’t have these wheels (except for use by staff if the vehicle fails) but might drop you off next to a vehicle that has a wheel if that’s needed to complete your trip.

The biggest threat to the lover of driving is that while you won’t get any more dangerous, you will be more dangerous by comparison. You might not get away with things you could get away with before when everybody was dangerous.

It’s possible that you might lose your licence after one or two accidents. Perhaps even after just one DUI or a few serious tickets. Today, society doesn’t want to take away people’s licences because that destroys their mobility. In the future, it won’t — you will still be able to get around. So society may not tolerate you making big mistakes.

This also might happen more to people as they get older. Again, today we don’t want to take away a senior’s licence even when they are half-blind, because that ends their mobile life. Not any more.

As time passes, a new generation will grow up without learning how to drive. A couple of generations from now, manual driving may be mostly a sport or affectation. Still allowed, but uncommon. After all, people still ride horses a century after they stopped being used for transportation.

In this far away time, driving may be seen mostly as a sport, not a means of transportation. Racetracks and special roads will still exist, but for fun, not travel.

This week’s hot story was again from Amir at The Information and there is even more detail in the author’s Twitter thread.

The short summary: Amir was able to find a fair number of Waymo’s neighbours in Chandler, Arizona who are getting frustrated by the over-cautious drive patterns of the Waymo vans. Several used the words, “I hate them.”

A lot of the problems involve over-hesitation at an unprotected left turn near the Waymo HQ. The car is just not certain when it can turn. There is also additional confirmation of what I reported earlier, that operation with no safety driver is still very rare, and on limited streets.

Unprotected turns, especially left ones, have always been one of the more challenging elements of day-to-day driving. You must contend with oncoming traffic and pedestrians who may be crossing as well. You may have to do this against traffic that is moving at high speed.

While I am generally not surprised that these intersections can be a problem, I am a little surprised they are one for Waymo. Waymo is the only operating car which features a steerable, high-resolution, long-range LIDAR on board. This means it can see out 200 m or more (in a narrower field of view). This lets it get a good look at traffic coming at it in such situations. (Radar also sees such vehicles but with much less resolution.)

For Waymo, this is not a problem of sensors but one of being too timid. One reason they are operating in Phoenix is it’s a pretty easy area to be timid in. The instinct of all teams is to avoid early risks that lead to early accidents. That became even stronger in Arizona after the Uber fatality, which used up a lot of the public’s tolerance for such errors. As such, the “better safe than sorry” philosophy which was already present in Waymo and most teams has been strengthened.

The problem is, it needs to eventually be weakened. Timid drivers won’t make it in the real world. They won’t make it far at all in the non-tame places like Boston. Thus you look at a nasty trade-off:

That leaves only a few options. The other drivers must adapt better to a timid driver, or the public must get more tolerant of a slightly higher (but still better than human) accident risk.

Hype around self-driving cars has pushed some members of the public to expect computerized perfection. That’s not coming.

Believe it or not, it is not as impossible to change the behaviour of other drivers as you might think. In Manhattan, not that many years ago, two things were very common: endless honking and gridlock. Both were the result of well-ingrained aggressive habits of New York drivers. The city decided to crack down on both, and got aggressive with fines. It worked, and those things are both vastly reduced.

It’s less possible to adjust the patterns of pedestrians. Some recent articles have gotten a lot of attention from people suggesting this must happen. I think some of it will happen, but much less than hoped, and that will be the subject of another article.

A robocar should eventually get very good at driving decisions which involve physics, because computers are very good at physics. Unlike humans, who have difficulty precisely timing when an oncoming car will reach them, robots could in theory do it with frightening precision, making turns through gaps that seem tiny to humans. This would frighten other drivers and cause problems, so it’s not something we’ll see today.
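The physics of an unprotected turn reduces to comparing the oncoming car’s time to arrival with the time the turn needs plus a safety margin. A long-range sensor helps by supplying the distance early: a car 200 m away closing at 20 m/s is still 10 seconds out. The Python sketch below uses made-up numbers to show how the margin alone separates a timid driver from an assertive one; the function names and values are illustrative assumptions, not any team’s planner.

```python
def time_to_arrival(distance_m: float, speed_ms: float) -> float:
    """Seconds until an oncoming car reaches the conflict point."""
    if speed_ms <= 0:
        return float("inf")  # stopped or receding traffic never arrives
    return distance_m / speed_ms


def accept_gap(distance_m: float, speed_ms: float,
               turn_time_s: float, margin_s: float) -> bool:
    """Take the gap only if the turn finishes with `margin_s` seconds to spare."""
    return time_to_arrival(distance_m, speed_ms) >= turn_time_s + margin_s


# Oncoming car 60 m away at 15 m/s (54 km/h): it arrives in 4 s.
# The turn itself is assumed to take 3 s.
print(accept_gap(60, 15, turn_time_s=3.0, margin_s=3.0))  # False (timid)
print(accept_gap(60, 15, turn_time_s=3.0, margin_s=0.5))  # True (assertive)
```

The sensing and arithmetic are identical in both calls; only the chosen margin differs, which matches the point that the problem is timidity rather than sensors.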

This isn’t the last story we’ll see of robocars frustrating other drivers. That’s particularly true if we unwisely keep them at the speed limit. My long-term belief is that most of the traffic code should be eliminated for robocars and replaced by, “it’s legal if you can do it safely and without unfairly impeding traffic.” The whole idea of a traffic code only makes sense for humans. With robots, since there will never be more than a few dozen different software stacks on the road, you can just get all the designers together in a room and work out what should be done and what is safe. Traffic codes and other laws are there to deal with humans who can’t be trusted to know the rules or even obey the rules they know. While companies can’t be trusted to do anything but look after their own interests, you can easily make it in their interests to follow the rules.

The newsletter The Information reports Uber’s investors are pushing Uber to sell its self-drive division to some other large player. The division has, of course, been nothing but trouble for Uber, and as I have noted several times, Uber is one of the few large players in this space that doesn’t have to build their own tech. They have the #1 brand in selling rides, and selling rides is what the robotaxi business is all about.

At the same time, Uber recruited a great team (though it has lost many of them). First they recruited many of the best around CMU for their Pittsburgh ATC headquarters. CMU and Stanford are where most of the stars of the robocar world have come from. Then they “paid” $680M in stock for Otto, which was really an acqui-hire in many ways, at least if you believe court documents. Because that stock payout required certain milestones that weren’t met, the Otto stockholders did not get their money, but Uber had to make a large stock payout to Waymo as a result of the lawsuit over Anthony Levandowski’s actions.

So they have this team, but the team and the Uber name are tarnished by the fatality. But the right pickings from the team are still valuable to somebody who is falling behind, if you eliminate the factors that led to the fatality and take the Uber name off it.

At the same time, Uber gets a pledge that the car the team makes will be usable in the Uber network. They get guaranteed access to tech (if the new owner succeeds) though not the deep control that comes with owning it. They don’t need the deep control. In fact, it’s now better if they can stick another famous brand on it.

Aside from Starship (which I am involved in) the company to watch in the delivery space is Nuro. Nuro has started a delivery pilot in Scottsdale with Fry’s groceries. The pilot will be done with regular Prius and Leaf cars with Nuro’s self drive tech, and a safety driver, not with the small no-seat delivery pod Nuro is trying to build. That pod isn’t ready for the real streets, even the easy streets of Scottsdale.

Nuro is the company to watch in on-road delivery because its founders were among the top members of Google/Waymo’s team. I worked with them regularly when I was there and they are the best. They have also raised lots of money.

Driving on the road is much harder than the sidewalk, though. A road robot must be able to handle any situation on its own and get to a safe state if there is a problem no matter what. A sidewalk robot can stop in 30cm. If it encounters something it doesn’t understand, it can just stop and request help from HQ. Safety is very unlikely to be an issue, while a road robot could really hurt somebody in a mistake.
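The 30cm figure is ordinary physics: braking distance grows with the square of speed, v²/(2a). A minimal sketch, assuming a sidewalk robot at walking pace (6 km/h) that can brake at 5 m/s², and a car at 72 km/h (about 45 mph) braking at 7 m/s². These speeds and decelerations are illustrative assumptions, and reaction time is ignored unless supplied.

```python
def stopping_distance_m(speed_kmh: float, decel_mps2: float, reaction_s: float = 0.0) -> float:
    """Distance travelled from detecting a problem to standing still.

    Distance covered during the reaction time plus braking distance v^2 / (2a).
    The speeds and decelerations passed in are illustrative assumptions.
    """
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * reaction_s + v * v / (2.0 * decel_mps2)


# Hypothetical sidewalk robot at walking pace vs. a car at 72 km/h.
print(f"sidewalk robot: {stopping_distance_m(6, 5):.2f} m")   # about 0.28 m
print(f"road car:       {stopping_distance_m(72, 7):.1f} m")  # about 28.6 m
```

A speed 12 times higher gives, with these assumed decelerations, a braking distance roughly 100 times longer, which is why the sidewalk robot can simply stop and ask for help while the road robot must resolve the situation on its own.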

The $6 price for delivery in the pilot is strange, since that’s a common price for human driven delivery.

The Tempe police released a detailed report on their investigation of Uber’s fatality. I am on the road and have not had time to read it, but the big point, reported widely in the press, was that the safety driver was, according to logs from her phone accounts, watching the show “The Voice” via Hulu on her phone shortly before the incident.

This is at odds with earlier statements in the NTSB report, that she had been looking at the status console of the Uber self-drive system, and had not been using her phones. The report further said that Uber asked its safety drivers to observe the console and make notes on things seen on it. It appears the safety driver lied, and may have tried to implicate Uber in doing so.

Obviously attempting to watch a TV show while you are monitoring a car is unacceptable, presumably negligent behaviour. More interesting is what this means for Uber and other companies.

The first question — did Uber still instruct safety drivers to look at the monitors and make note of problems? That is a normal instruction for a software operator when there are two crew in the car, as most companies have. At first, we presumed that perhaps Uber had forgotten to alter this instruction when it went from 2 crew to 1. Perhaps the safety driver just used that as an excuse for her looking down since she felt she could not admit to watching TV. (She probably didn’t realize police would get logs from Hulu.)

If Uber still did that, it’s an error on their part, but now seems to play no role in this incident. That’s positive legal news for Uber.

It is true that if you had two people in the car, it’s highly unlikely the safety driver behind the wheel would be watching a TV show. It’s also true that if Uber had attention monitoring on the safety driver, it also would have made it harder to pull a stunt like that. Not all teams have attention monitoring, though after this incident I believe that most, including Uber, are putting it in. It might be argued that if Uber did require drivers to check the monitors, this might have somehow encouraged the safety driver’s negligent decision to watch TV, but that’s a stretch. I think any reasonable person is going to know this is not a job where you do that.

There may be some question regarding whether a person with such bad judgement should have been cleared to be a safety driver. Uber may face some scrutiny for that bad choice. They may also face scrutiny if their training and job evaluation process for the safety drivers was clearly negligent. On the other hand, human employees are human, and if there’s not a pattern, it is less likely to create legal trouble for Uber.

From the standpoint of the Robocar industry, it makes the incident no less tragic, but less informative about robocar accidents. Accidents are caused every day because people allow themselves ridiculously unsafe distractions on their phones. This one is still special, but less so than we thought. While the issue of whether today’s limited systems (like the Tesla) generate too much driver complacency is still there, this was somebody being paid not to be complacent. The lessons we already knew — have 2 drivers, have driver attention monitoring — are still the same.

A number of press stories on the event have said that Uber “disabled” the emergency braking, and this also played a role in the fatality. That’s partly true but is very misleading vocabulary. The reality appears to be that Uber doesn’t have a working emergency braking capability in their system, and as such it is not enabled. That’s different from the idea that they have one and disabled it, which sounds much more like an ill act.

Uber’s system, like all systems, sometimes decides suddenly that there is an obstacle in front of the car for which it should brake when that obstacle is not really there. This is called a “false positive” or “ghost.” When this happens well in advance, it’s OK to have the car apply the brakes in a modest way, and then release them when it becomes clear it’s a ghost. However, if the ghost is so close that it would require full-hard braking, this creates a problem. If a car frequently does full-hard braking for ghosts, it is not only jarring, it can be dangerous, both for occupants of the car, and for cars following a little too closely behind — which sadly is the reality of driving.

As such, an emergency braking decision algorithm which hard brakes for ghosts is not a working system. You can’t turn it on safely, and so you don’t. That is different from disabling it. While the Uber software did decide 2 seconds out that there was an obstacle that required a hard brake, it makes that decision out of the blue too often to be trusted with it. The decision is left to the safety driver — who should not be watching TV.

That does not mean Uber could not have done this much better. The car should still have done moderate braking, which would reduce the severity of any real accident and also wake up any inattentive safety driver. An audible alert should also have been present. Earlier, I speculated that if the driver was looking at the console, this sort of detection would very likely have appeared there, so it was odd she did not see it, but it turns out she was not looking there.
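The argument above suggests a middle path between ignoring a sudden detection and slamming on the brakes for it. Below is a minimal sketch of such a policy, assuming a detector that reports a range and a confidence score. The `Detection` type, the 2 m/s² “comfortable” deceleration and the 0.9 confidence cutoff for hard braking are illustrative assumptions, not Uber’s or any other team’s actual design.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    distance_m: float   # range to the possible obstacle
    confidence: float   # hypothetical detector confidence in [0, 1]


def required_decel(speed_mps: float, distance_m: float) -> float:
    """Constant deceleration (m/s^2) needed to stop just short of the obstacle: v^2 / (2d)."""
    return speed_mps ** 2 / (2.0 * max(distance_m, 0.1))  # clamp to avoid dividing by zero


def braking_response(speed_mps: float, det: Detection,
                     comfort_decel: float = 2.0, hard_conf: float = 0.9) -> tuple[float, bool]:
    """Return (deceleration command in m/s^2, whether to sound an alert).

    Illustrative policy only; the thresholds are assumptions.
    """
    need = required_decel(speed_mps, det.distance_m)
    if need <= comfort_decel:
        # Plenty of room: gentle braking is harmless even if this turns out to be a ghost.
        return need, False
    if det.confidence >= hard_conf:
        # Trusted detection: brake as hard as the physics demands.
        return need, True
    # Possible ghost that would need hard braking: brake moderately and wake the human.
    return comfort_decel, True


# A low-confidence object 25 m ahead at 17 m/s (about 38 mph) would need 5.78 m/s^2.
print(braking_response(17.0, Detection(distance_m=25.0, confidence=0.5)))  # (2.0, True)
```

Even when the system does not trust itself enough to brake hard, the moderate braking sheds speed and the alert gives the human a reason to look up.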

The Volvo also has an emergency braking system. That system was indeed disabled — it is normal for any ADAS functions built into the cars to be disabled when they are used as prototype robocars. You are building something better, and you can’t have them competing. The Volvo system does not brake too often for ghosts, but that’s because it also doesn’t brake for real things far too often for a robocar system. Any ADAS system will be tuned that way because the driver is still responsible for driving. Teslas have been notoriously plowing into road barriers and trucks due to this ADAS style of tuning. It’s why a real robocar is much harder than the Tesla autopilot.
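The tuning trade-off can be illustrated with a single braking threshold applied to detection scores. A minimal sketch, using made-up scores for real obstacles and for ghosts (not data from any real system): a high threshold, as an ADAS system would use, eliminates ghost braking but misses most real obstacles, while a lower threshold catches more real obstacles at the cost of some ghost braking.

```python
def count_errors(real_scores, ghost_scores, threshold):
    """Count (missed real obstacles, ghost brakes) when braking whenever score >= threshold."""
    missed = sum(1 for s in real_scores if s < threshold)
    ghost_brakes = sum(1 for s in ghost_scores if s >= threshold)
    return missed, ghost_brakes


# Hypothetical detector scores, for illustration only.
REAL = [0.95, 0.85, 0.70, 0.60, 0.40]   # things that really were in the road
GHOST = [0.10, 0.20, 0.30, 0.50, 0.65]  # false positives

for label, threshold in [("ADAS-style", 0.9), ("robocar-style", 0.5)]:
    missed, ghosts = count_errors(REAL, GHOST, threshold)
    print(f"{label:14s} threshold={threshold}: missed {missed}/5 real, braked for {ghosts}/5 ghosts")
```

With these scores no threshold drives both counts to zero. A robocar has to improve the perception itself so the two groups separate, which is the harder problem described above.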

I’ve been on the road, so I have not reported on it, but the general news has been quite impressive. In particular, Waymo announced the order of 63,000 Chrysler minivans of the type they use in their Phoenix area tests. They are going beyond a pilot project to real deployment, and soon. Nobody else is close. This will add to around 20,000 Jaguar electric vehicles presumably aimed at a more luxury ride — though I actually think the minivan with its big doors, large interior space and high ride may well be more pleasant for most trips. The electric Jaguar will be more efficient.

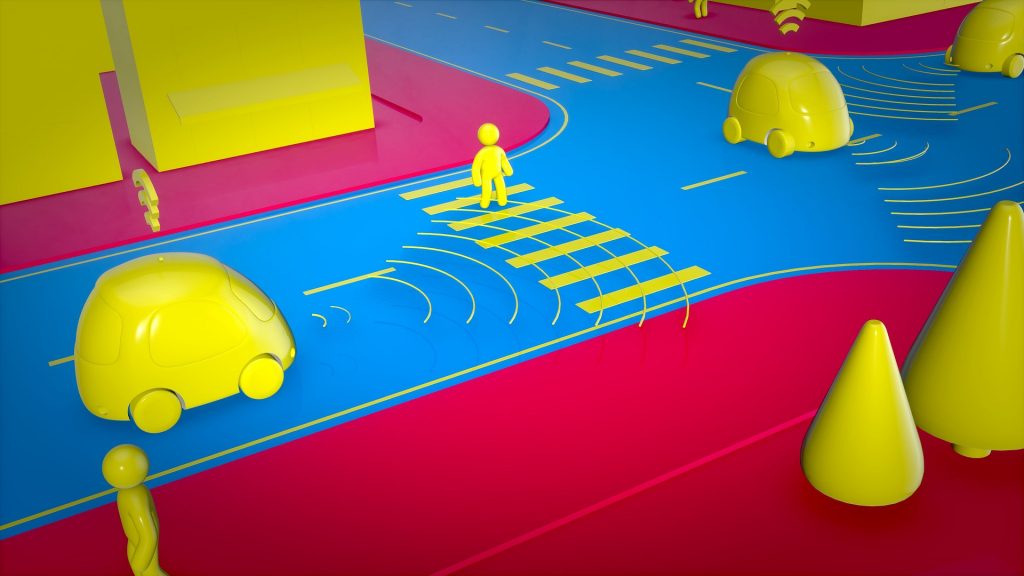

The NHTSA/SAE “levels” of robocars are not just incorrect. I now believe they are contributing to an attitude towards their “level 2” autopilots that plays a small, but real role in the recent Tesla fatalities.

Readers of this blog will know I have been critical of the NHTSA/SAE “levels” taxonomy for robocars since it was announced. My criticisms have mostly been that they are incorrect or misleading, and you might have enjoyed my satire of the levels, which questions the wisdom of defining the robocar based on the role the human being plays in driving it.

Recent events lead me to go further. I believe a case can be made that these levels are holding the industry back, and may play a minor role in the traffic fatalities we have seen with Tesla autopilot. As such I urge that the levels be renounced by NHTSA and the SAE and replaced by something better.

It’s true that in the early days, when Google was effectively the only company doing work on a full self-driving car for the roads, people were looking for some sort of taxonomy to describe the different types of potential cars. NHTSA’s first article laid one out as a series of levels numbered 0 to 4 which gave the appearance of an evolutionary progression.

Problem was, none of those stages existed. Even Google didn’t know what it wanted to build, and my most important contribution there probably was being one of those pushing it from the highway car with occasional human driving to the limited area urban taxi. Anthony Levandowski first wanted the highway car because it was the easiest thing to build and he’s always been eager to get out into reality as soon as possible.

The models were just ideas, and I don’t think the authors at NHTSA knew how much they would be carving them into public and industry thinking by releasing the idea of levels in an official document. They may not have known that once in people’s minds, they would affect product development, and also change ideas about regulation. To regulate something you must define it, and this was the only definition coming from government.

The central error of the levels was threefold. First, it defined vehicles according to the role the human being played in their operation. (In my satire, I compare that to how the “horseless carriage” was first defined by the role the horse played, or didn’t play in it.)

Second, by giving numbered levels and showing a chart of the future, it advanced the prediction that the levels were a progression: that they would be built in order, each level building on the work of the ones before it.

Worst of all, it cast into stone the guess that the driver assist (ADAS) technologies were closely related to, and the foundation of the robocar technologies. That they were just different levels of the same core idea.

(The ADAS technologies are things like adaptive cruise control, lanekeeping, forward collision avoidance, blindspot warning, anti-lock brakes and everything else that helps in driving or alerts or corrects driver errors.)

There certainly wasn’t consensus on that guess. When Google pushed Nevada to pass the first robocar regulations, the car companies came forward during the comment phase to make one thing very clear — this new law had nothing to do with them. This law was for crazy out-there projects like Google. The law specifically exempted all the ADAS projects car companies had, including and up to things like the Tesla Autopilot, from being governed by the law or considered self-driving car technology.

Many in the car companies, whose specialty was ADAS, loved the progression idea. It meant that they were already on the right course. That the huge expertise they had built in ADAS was the right decision, and would give them a lead into the future.

Outside the car companies, the idea was disregarded. Almost all companies went directly to full robocar projects, barely using existing ADAS tools and hardware if at all. The exceptions were companies in the middle like Tesla and MobilEye who had feet in both camps.

SAE, in their 2nd version of their standard, partly at my urging, added language to say that the fact that the levels were numbered was not to be taken as an ordering. Very good, but not enough.

In spite of the levels, the first vehicle to get commercial deployment was the Navia (now Navya) which is a low speed shuttle with no user controls inside. What would be called a “level 4.” Later, using entirely different technology, Tesla’s Autopilot was the first commercial offering of “level 2.” Recently, Audi has declared that given the constraints of operating in a traffic jam, they are selling “level 3.” While nobody sold it, car companies demonstrated autopilot technologies going back to 2006, and of course prototype “level 4” cars completed the DARPA grand challenge in 2005 and urban challenge in 2007.

In other words, no ordering at all. DARPA’s rules were so against human involvement that teams were not allowed to send any signal to their car other than “abort the race.”

The confusion between the two broad technological areas has extended out to the public. People routinely think of Tesla’s autopilot as actual self-driving car technology, or simply as primitive self-driving car technology, rather than as extra-smart ADAS.

Tesla’s messaging points the public in both directions. On the one hand, Tesla’s manuals and startup screen are very clear that the Autopilot is not a self-driving car. That it needs to be constantly watched, with the driver ready to take over at any time. In some areas, that’s obvious to drivers — the AutoPilot does not respond to stop signs or red lights, so anybody driving an urban street without looking would soon get in an accident. On the highway, though, it’s better, and some would say too good. It can cruise around long enough without intervention to lull drivers into a false sense of security.

To prevent that, Tesla takes one basic measure — it requires you to apply a little torque to the steering wheel every so often to indicate your hands are on it. Wait too long and you get a visual alert, wait longer and you get an audible alarm. This is the lowest level of driver attention monitoring out there. Some players have a camera actually watch the driver’s eyes to make sure they are on the road most of the time.
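The escalation described above amounts to a small timer-driven state machine. A minimal sketch, assuming hypothetical thresholds of 15 seconds to the visual alert and 30 seconds to the audible alarm; Tesla’s actual timings are not stated here.

```python
from enum import Enum


class Alert(Enum):
    NONE = 0
    VISUAL = 1
    AUDIBLE = 2


def alert_level(seconds_since_torque: float,
                visual_after: float = 15.0,
                audible_after: float = 30.0) -> Alert:
    """Escalating alert based only on time since the driver last applied torque to the wheel.

    Thresholds are hypothetical. This signal says nothing about where the
    driver's eyes are, which is why it is the weakest form of attention monitoring.
    """
    if seconds_since_torque >= audible_after:
        return Alert.AUDIBLE
    if seconds_since_torque >= visual_after:
        return Alert.VISUAL
    return Alert.NONE


for t in (5, 20, 45):
    print(t, alert_level(t).name)
```

A camera-based monitor could keep the same escalation logic and simply replace `seconds_since_torque` with the time since the driver’s gaze was last on the road.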

At the same time, Tesla likes to talk up the hope their AutoPilot is a stepping stone. When you order your new Tesla, you can order AutoPilot and you can also pay $5,000 for “full self driving.” It’s mostly clear that they are two different things. When you order the full self driving package, you don’t get it, because it doesn’t exist. Rather you get some extra sensors in the car, and Tesla’s promise that a new software release in the future will use those extra sensors to give you some form of full self driving. Elon Musk likes to make radically optimistic predictions of when Tesla will produce full robocars that can come to you empty or take you door to door.

NHTSA improved things in their later documents starting in 2016. In particular they clarified that it was very important to consider what roads and road conditions a robocar was rated to operate in. They called this the ODD (Operational Design Domain.) The SAE had already made this point when it added a “Level 5” to show that its Level 4 did not go everywhere. The Level 5 car that can go literally everywhere remains a science fiction goal for now — nobody knows how to do it or even truly plans to do it, because there are diminishing economic returns to handling and certifying safety on absolutely all roads, but it exists to remind people that the only meaningful level (4) does not go everywhere and is not science fiction. The 3rd level is effectively a car whose driving domain includes places where a human must take the controls to leave it.

NHTSA unified their levels with the SAE a few years in, but they are still called the NHTSA levels by most.

The recent fatalities involving Uber and Tesla have shown the level of confusion among the public is high. Indeed, there is confusion even among those more familiar with the industry. It has required press comments from some of the robocar companies to remind people, “very tragic about that Tesla crash, but realize that was not a self-driving car.” And indeed, there are still people in the industry who believe they will turn ADAS into robocars. I am not declaring them to be fools, but rather stating that we need people to be aware that is very far from a foregone conclusion.

Are the levels solely responsible for the confusion? Surely not — a great deal of the blame can be laid in many places, including on automakers who have been keen to be perceived as in the game even though their primary work is still in ADAS. Automakers were extremely frustrated back in 2010 when the press started writing that the true future of the car was in the hands of Google and other Silicon Valley companies, not with them. Many of them got to work on real robocar projects as well.

NHTSA and SAE’s levels may not be to blame for all the confusion, but they are to blame for not doing their best to counter it. They should renounce the levels and, if necessary, create a superior taxonomy which is both based on existing work and flexible enough to handle our lack of understanding of the future.

The world still hasn’t settled on a term, and the government and SAE documents have gone through a few themselves (Highly Automated Vehicles, Driving Automation for On-Road Vehicles, Automated Vehicles, Automated Driving Systems, etc.). I will use my preferred term here (robocars), but understand they will probably come up with something of their own. (As long as it’s not “driverless.”)

The Driver Assist systems would include traditional ADAS, as well as autopilots. There is no issue of the human’s role in this technology — it is always present and alert. These systems have been unregulated and may remain so, though there might be investigation into technologies to assure the drivers are remaining alert.

The robocar systems might be divided up by their operating domains. While this domain will be a map of specific streets, for the purposes of a taxonomy, people will be interested in types of roads and conditions. A rough guess at some categories would be “Highway,” “City-Fast” and “City-Slow.” Highway would be classified as roads that do not allow pedestrians and/or cyclists. The division between fast and slow will change with time, but today it’s probably at about 25mph. Delivery robots that run on roads will probably stick to the slow area. Subclassifications could include questions about the presence of snow, rain, crowds, animals and children.
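A minimal sketch of how such a domain-based classification could be applied to individual road segments. The `RoadSegment` fields, the reading of “pedestrians and/or cyclists” as “neither pedestrians nor cyclists allowed,” and the treatment of exactly 25 mph as slow are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoadSegment:
    speed_limit_mph: int
    pedestrians_allowed: bool
    cyclists_allowed: bool


def domain_class(seg: RoadSegment, slow_cutoff_mph: int = 25) -> str:
    """Classify a segment as "Highway", "City-Fast" or "City-Slow" (hypothetical rules)."""
    if not seg.pedestrians_allowed and not seg.cyclists_allowed:
        return "Highway"
    return "City-Slow" if seg.speed_limit_mph <= slow_cutoff_mph else "City-Fast"


def can_serve(rated_classes: set[str], route: list[RoadSegment]) -> bool:
    """A vehicle rated for some classes can drive a route only if every segment falls in them."""
    return all(domain_class(seg) in rated_classes for seg in route)


route = [RoadSegment(25, True, True), RoadSegment(40, True, True)]
print(can_serve({"City-Slow"}, route))               # False: the 40 mph street is City-Fast
print(can_serve({"City-Slow", "City-Fast"}, route))  # True
```

Subclassifications such as snow, rain, crowds, animals and children could be added as further fields and checked the same way.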

What is called level 3 — a robocar that needs a human on standby to take over in certain situations — adds some complexity. This is a transitional technology. It will only exist during the earliest phases of robocars as a “cheat” to get things going in places where the car’s domain is so limited that it’s forced to transition to human control while moving on short but not urgent notice.

Many people (including Waymo) think this is a bad idea — that it should never be made. It certainly should not be declared as one of the levels of a numbered progression. It is felt that a transition to human driving while moving at speed is a risky thing, exactly the sort of thing where failure is most common in other forms of automation.

Even so, car companies are building this, particularly for the traffic jam. While the first visions of a car with a human on standby mostly talked about a highway car with the human handling exit ramps and construction zones, an easier, more useful product is the traffic jam autopilot. This can drive safely with no human supervision in traffic jams. When the jam clears, the human needs to do the driving. This can be built without the need for takeover at speed, however. The takeover can be when stopped or at low speed, and if the human can’t take over, stopping is a reasonable option because the traffic was recently very slow.
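The handover logic for a traffic jam pilot can stay simple precisely because speeds are low. A minimal sketch, evaluated once per control cycle, assuming a hypothetical 10 mph ceiling for handing control back; the function and its inputs are illustrative, not any automaker’s design.

```python
def jam_pilot_step(jam_active: bool, speed_mph: float, driver_ready: bool,
                   max_handover_mph: float = 10.0) -> str:
    """Decide what a hypothetical traffic-jam pilot does on this control cycle."""
    if jam_active:
        return "drive"          # inside its domain: no human supervision needed
    if speed_mph > max_handover_mph:
        return "slow down"      # never hand over at speed
    if driver_ready:
        return "hand over"      # slow or stopped, and the human has confirmed takeover
    return "stop and wait"      # human unresponsive: stopping is safe since traffic was just crawling


print(jam_pilot_step(jam_active=False, speed_mph=5, driver_ready=False))  # stop and wait
```

Because the pilot never hands over above the ceiling, the risky takeover-at-speed case simply does not arise in this design.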

Generally, however, these standby driver cars will be a footnote of history, and don’t really deserve a place in the taxonomy. While all cars will have regions they don’t drive, they will also all be capable of coming to a stop near the border of such regions, allowing the human to take control while slow or stopped, which is safe.

Tesla knows it does not have a robocar, and warns its drivers about this regularly, though they ignore it. Some of that inattention may come from those drivers imagining they have “almost a robocar.” But even without that factor, the taxonomies create another problem. The public, told that the Tesla is just a lower level of robocar, sees the deaths of Tesla drivers as a sign that real robocar projects are more dangerous. The real projects do have dangers, but not the same dangers as the autopilots have. (Though clearly lack of driver attention is an issue both have on their plates.) A real robocar is not going to follow badly painted highway lines right into a crash barrier. They follow their maps, and the lane markers are just part of how they decide where they are and where to go.

But if the public says, “We need the government to slow down the robocar teams because of those Tesla crashes” or “I don’t trust getting in the Waymo car because of the Tesla crashes” then we’ve done something very wrong.

(If the public says they are worried about the Waymo car because of the Uber crash, they have a more valid point, though those teams are also very different from one another.)

For decades, roboticists used the word “autonomous” to refer to robots that took actions and made decisions without having to rely on an outside source (such as human guidance.) They never used it in the “self-ruling” sense it has politically, though that is the more common (but not only) definition in common parlance.

Unfortunately, one early figure in car experiments hated that the roboticists’ vocabulary didn’t match his narrow view of the meaning of the word, and he pushed with moderate success for the academic and governmental communities to use the word “automated.” To many people, unfortunately, “automated” means simple levels of automation. Your dishwasher is automated. Your teller machine is automated. To the roboticist, the robocar is autonomous — it can operate entirely without you. The autopilot is automated — it needs human guidance.

I suspect that the public might better understand the difference if these words were split in this fashion. The Waymo car is autonomous, the Tesla automated. Everybody in robotics knows they don’t use the word autonomous in the political sense. I expressed this in a joke many years ago, “A truly autonomous car is one that, if you tell it to take you to the office, says it would rather go to the beach instead.” Nobody is building that car. Yet.

I am not attempting to say that there are no commonalities between ADAS and robocars. In fact, as development of both technologies progresses, elements of each have slipped into the other, and will continue to do so. Robocars have always used radars created for ADAS work. Computer vision tools are being used in both systems. The small ultrasonic sensors for ADAS are used by some robocars for close in detection where their LIDARs don’t see.

Even so, the difference is big enough to be qualitative and not, as numbered levels imply, quantitative. A robocar is not just an ADAS autopilot that is 10 times or 100 times or even 1,000 times better. It’s such a large difference that it doesn’t happen by evolutionary improvement but through different ways of thinking.

There are people who don’t believe this, and Tesla is the most prominent of them.

As such, I am not declaring that the two goals are entirely disjoint, but rather that official taxonomies should declare them to be disjoint. Make sure that developers and the public know the difference and so modulate their expectations. It won’t forbid the cross pollination between the efforts. It won’t even stop those who disagree with all I have said from trying the evolutionary approach on their ADAS systems to create robocars.

SAE should release a replacement for its levels, and NHTSA should endorse this or do the same.

The Uber car and Tesla’s autopilot, both in the news for fatalities are really two very different things. This table outlines the difference. Also, see below for some new details on why the Tesla crashed and more.


| Uber ATG Test | Tesla Autopilot |
|---|---|
| A prototype full robocar capable of unmanned operations on city streets | A driver assist system for highways and expressways |
| Designed for taxi service | Designed for privately owned and driven cars |
| A full suite of high-end robocar sensors including LIDAR | Production automotive sensors: cameras and radar |
| 1 pedestrian fatality, other accidents unknown | Fatalities in Florida, China and California; other serious crashes without injury |
| Approximately 3 million miles of testing | Late 2016: 300M miles driven, 1.3B miles of data gathering |
| A prototype in testing which needs a human safety driver monitoring it | A production product overseen by the customer |
| Designed to handle everything it might encounter on the road | Designed to handle only certain situations. Users are expressly warned it doesn’t handle major things like cross traffic, stop signs and traffic lights. |
| Still in an early state, needing intervention every 13 miles on city streets | In production and needing intervention rarely on highways, but it would need it very frequently if you tried to drive it on city streets |
| Needs a state licence for testing, with rules requiring safety drivers | No government regulation needed, similar to the adaptive cruise control it is based on |
| Only Uber employees can get behind the wheel | Anybody can be behind the wheel |
| Vehicle failed in a manner outside its design constraints; it should have readily detected and stopped for the pedestrian | Vehicles had incidents in ways expected under their design constraints |
| Vehicle was trusted too much by the safety driver, who took eyes off the road for 5 seconds | Vehicles trusted too much by drivers, who took eyes off the road for 6 seconds or longer |
| Safety drivers get 3 weeks of training, fired if caught using a phone | No training or punishments for customers, though the manual and screen describe proper operating procedures |
| Safety driver recorded with camera, no warnings by software of inattention | Tesla drivers get visible, then audible alerts if they take hands off the wheel for too long |
| Criticism that the solo safety driver job is too hard, that inattention will happen | Criticism that drivers are overtrusting the system, regularly not looking at the road |
| Killed a bystander, though it had right of way | Killed customers who were ignoring monitoring requirements |
| NTSB investigating | NTSB investigating |

Each company faces a different challenge to fix its problems. For Uber, they need to improve the quality of their self-drive software so that such a basic failure as we saw here is extremely unlikely. Perhaps even more importantly, they need to revamp their safety driver system so that safety driver alertness is monitored and assured, including going back to two safety drivers in all situations. Further, they should consider some “safety driver assist” technology, such as the use of the system in the Volvo (or some other aftermarket system) to provide alerts to the safety drivers if it looks like something is going wrong. That’s not trivial — if the system beeps too much it gets ignored, but it can be done.

For Tesla, they face a more interesting challenge. Their claim is that in spite of the accidents, the autopilot is still a net win. That because people who drive properly with autopilot have half the accidents of people who drive without it, the total number of accidents is still lower, even if you include the accidents, including these fatalities, which come to those who disregard the warnings about how to properly use it.

That people disregard those warnings is obvious and hard to stop. Tesla argues, however, that turning off Autopilot because of them would make Tesla driving and the world less safe. For them, options exist to make people drive diligently with the autopilot, but they must not make the autopilot so much less pleasant that people decide not to use it even properly. That would actually make driving less safe if enough people did that.
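Tesla’s net-win argument is a weighted average over miles driven. A minimal sketch with made-up rates: manual driving at 2 crashes per million miles, proper Autopilot use at half that (following the claim above), and misuse at a hypothetical 4. Only the halving comes from the text; every number here is an assumption for illustration.

```python
def fleet_rate(proper_share: float, misuse_share: float,
               manual_rate: float = 2.0, proper_rate: float = 1.0,
               misuse_rate: float = 4.0) -> float:
    """Crashes per million miles across all driving, weighting each mode by its share of miles."""
    manual_share = 1.0 - proper_share - misuse_share
    return (manual_share * manual_rate
            + proper_share * proper_rate
            + misuse_share * misuse_rate)


print(f"no Autopilot:        {fleet_rate(0.0, 0.0):.2f}")    # 2.00
print(f"lenient monitoring:  {fleet_rate(0.5, 0.1):.2f}")    # 1.70
print(f"annoying monitoring: {fleet_rate(0.25, 0.02):.2f}")  # 1.79
```

In this toy example, monitoring strict enough to drive half of the careful users away cuts misuse sharply yet raises the overall rate, which is the trade-off described above. With other assumed rates the conclusion can flip.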

A theory, now given credence by some sample videos, suggests the Tesla was confused by the white lines which divide the road at an off-ramp, the expanding triangle known as the “gore.” As the triangle expands, a simple system might think they were the borders of a lane. Poor lane marking along the gore might make the vehicle even think the new “lane” is a continuation of the lane the car is in, making the car try to drive the lane — right into the barrier.
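The failure mode can be shown with a toy lane follower that steers toward the midpoint between the two lines it has accepted as lane borders. This is a hypothetical illustration, not Tesla’s algorithm: it assumes the gore’s two lines diverge symmetrically at 0.05 m of width per metre travelled, and that a lane is plausible between 2.5 m and 4.5 m wide.

```python
def gore_lines(x_m: float, spread: float = 0.05) -> tuple[float, float]:
    """Lateral positions (m) of the two gore lines x_m metres past the split, centred on y = 0."""
    half = spread * x_m / 2.0
    return -half, half


def naive_target(left_y: float, right_y: float) -> float:
    """Steer for the midpoint between whatever two lines were accepted as lane borders."""
    return (left_y + right_y) / 2.0


def looks_like_lane(left_y: float, right_y: float,
                    min_w: float = 2.5, max_w: float = 4.5) -> bool:
    """Crude plausibility check on the width of the supposed lane."""
    return min_w <= right_y - left_y <= max_w


for x in (20, 70, 120):
    left, right = gore_lines(x)
    print(x, naive_target(left, right), looks_like_lane(left, right))
```

The target never leaves the centre of the gore, which is where a barrier at the gore’s end would sit. Over the stretch where the triangle is lane-width the width check passes, so a follower relying on painted lines alone finds nothing wrong; a car following its map would notice it had left every mapped lane.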

This video, made by Tesla owners near Indiana, shows a Tesla doing this when the right line of the gore is very washed out compared to the left. At 85/101 (the recent Tesla crash) the lines are mostly stronger, but there is a 30-40 foot gap in the right line which perhaps could trick a car into entering and following the gore. The gore at 85/101 also lacks the chevron “do not drive here” stripes often found at these gores. The Autopilot is not good at stationary objects like the crumple barrier, but those warning stripes are something that should be in its classification database.

Once again, the Tesla is just a smart cruise control. It is going to make mistakes like this, which is why they tell you you have to keep watching. Perhaps crashes like this will make people do that.

The NTSB is angry that Tesla released any information. I was not aware they frowned on this. This may explain Uber’s silence during the NTSB investigation there.

The governor of Arizona has told Uber to “get an Uber” and stop testing in the state. With no instructions on how to come back.

Unlike the early positive statements from Tempe police, this letter is harsh and to the point. It’s even more bad news for Uber, and the bad news is not over. Uber has not released any log data that makes them look better, and the longer they take to do that, the more it seems that the data don’t tell a good story for them.

In other news, both MobilEye and Velodyne have issued releases that their systems would have seen the pedestrian. Waymo has said the same, and I believe that all of them are correct. Waymo has a big press event scheduled for this week in New York, rumoured to announce some new shuttle operations there. I wonder how much consideration they gave to delaying it, because in spite of their superior performance, a lot of the questions they will get at the press conference won’t be about their new project.

There are more signs that Uber’s self-driving project may receive the “death penalty,” or at the very least a very long and major setback. A long and major setback in a field where Uber thought “second place is first loser” to quote Anthony Levandowski.

Today I’m going to examine how you attain safety in a robocar, and outline a contradiction in the things that went wrong for Uber and their victim. Each thing that went wrong is both important and worthy of discussion, but at the same time unimportant. Almost everything that went wrong is something we want to prevent, but also something we must expect will go wrong sometimes, and plan for.

In particular, I want to consider how things operate in spite of the fact that people will jaywalk, illegal or not, car systems will suffer failures and safety drivers will sometimes not be looking.

First, an update on developments.

Uber has said it is cooperating fully, but we certainly haven’t heard anything more from them, or from the police. That’s because:

A new story in the New York Times is more damning for Uber. There we learn:

In time, either in this investigation or via lawsuits, we should see:

The law seems to be clear that the Uber had the right of way. The victim was tragically unwise to cross there without looking. The vehicle code may find no fault with Uber. In addition, as I will detail later, crosswalk rules exist for a reason, and both human drivers and robocars will treat crosswalks differently from non-crosswalks.

Even so, people will jaywalk, and robocars need to be able to handle that. Nobody can handle somebody leaping quickly off the sidewalk into your lane, but a person crossing 3.5 lanes of open road is something even the most basic cars should be able to handle, and all cars should be able to perceive and stop for a pedestrian standing in their lane on straight non-freeway road. (More on this in a future article.)
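Some rough arithmetic shows why a pedestrian crossing several open lanes should be an easy case. A minimal sketch, assuming 3.7 m (12 ft) lanes, a walking speed of 1.2 m/s and a car travelling at 40 mph; these are illustrative values, not measurements from the Tempe crash.

```python
MPH_TO_MPS = 0.44704  # exact conversion factor


def crossing_time_s(lanes: float, lane_width_m: float = 3.7, walk_mps: float = 1.2) -> float:
    """Seconds a pedestrian spends crossing the given number of lanes."""
    return lanes * lane_width_m / walk_mps


def distance_covered_m(speed_mph: float, seconds: float) -> float:
    """Metres a car covers at constant speed in the given time."""
    return speed_mph * MPH_TO_MPS * seconds


t = crossing_time_s(3.5)
print(f"time in the roadway: {t:.1f} s")                                        # about 10.8 s
print(f"car travel in that time at 40 mph: {distance_covered_m(40, t):.0f} m")  # about 193 m
```

Under these assumptions the pedestrian is in the roadway for around ten seconds, while the car covers far more distance than the roughly 23 m it needs to stop from 40 mph at a firm 7 m/s².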

The law says this as well. While the car has right of way, the law still puts a duty on the driver to do what they reasonably can to avoid hitting a jaywalker in the middle of the road.

We are, of course, very concerned about why the system failed. In particular, this sort of detect-and-stop is a very basic level of operation, expected of even the simplest early prototypes, and certainly of a vehicle from a well-funded team that’s logged a million miles.

At the same time, cars must be expected to have failures, even failures as bad as this. In the early days of robocars, even at the best teams, major system failures happened. I’ve been in cars that suddenly tried to drive off the road. It happens, and you have to plan for it. The main fallback is the safety driver, though now that the industry is slightly more mature, it is also possible to use simpler automated systems (like ADAS “forward collision warning” and “lanekeeping” tools) to guard against major failures.

We’re going to be very hard on Uber, and with justification, for having such a basic failure. “Spot a pedestrian in front of you and stop” has been moving into the “solved problem” category, particularly if you have a high-end LIDAR. But we should not forget there are lots of other things that can, and do, go wrong that are far from solved, and we must expect them to happen. These are prototypes. They are on the public roads because we know no other way to make them better, to find and solve these problems.

The safety driver clearly was not doing her job. The accident would have been avoided if she had been vigilant. But we must understand that safety drivers will sometimes look away, and miss things, and make mistakes.

That’s true for all of us when we drive, with our own lives and others’ at stake. Many of us do crazy things like send texts, but even the most diligent are sometimes not paying enough attention for short periods. We adjust controls, we look at passengers, we look behind us and (as we should) check blind spots. Yet the single largest cause of accidents is “not paying attention.” What that really means is that two things went wrong at once: something bad happened while we were looking somewhere else. For us, the probability of an accident is closely related to the product of those two probabilities.

The same is true for robocars with safety drivers. The cars will make mistakes. Sometimes the driver will not catch it. When both happen, an accident is possible. If the total probability of that is within the acceptable range (which is to say, the range for good human drivers) then testing is not putting the public at any extraordinary risk.

This means a team should properly have a sense of the capabilities of its car. If it’s needing interventions very frequently, as Uber was reported to, it needs highly reliable safety driving. In most cases, the answer is to have two safety drivers: two sets of eyes potentially able to spot problems. Or really more like 1.3 sets of eyes, because the second operator is, on most teams including Uber, mostly looking at a screen and only sometimes at the road. That is still better than just one pair.
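To make the “two things at once” arithmetic concrete, here is a minimal Python sketch. It assumes the car’s errors and each observer’s lapses are independent, and every number in it is a hypothetical placeholder, not data from Uber or any other team; `miss_probability` and `risk_per_mile` are names made up for illustration. The second operator is modeled as catching an error only 30% of the time, which is one way to read “1.3 sets of eyes.”

```python
def miss_probability(catch_probs):
    """Chance that every observer fails to catch one car error.

    Assumes observers are independent, so the error slips through only
    if each of them misses it.
    """
    p_miss = 1.0
    for p_catch in catch_probs:
        p_miss *= 1.0 - p_catch
    return p_miss


def risk_per_mile(errors_per_mile, catch_probs):
    """Uncaught car errors per mile: the "two things went wrong at once" case."""
    return errors_per_mile * miss_probability(catch_probs)


# Hypothetical placeholders, chosen only to show the arithmetic.
ERRORS_PER_MILE = 0.1          # a car that needs a save every 10 miles
ONE_DRIVER = [0.99]            # one driver catches 99% of errors
ONE_POINT_THREE = [0.99, 0.3]  # second operator catches only 30% (mostly on the screen)

if __name__ == "__main__":
    for label, crew in [("one driver", ONE_DRIVER), ("1.3 sets of eyes", ONE_POINT_THREE)]:
        print(f"{label}: {risk_per_mile(ERRORS_PER_MILE, crew):.5f} uncaught errors per mile")
```

With these made-up inputs, the mostly distracted second operator still cuts uncaught errors by 30%. Whether either figure is acceptable depends on the comparison with good human drivers, and the independence assumption is generous, since both operators share the same shift and the same boredom.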

At the same time, since the goal is to get to zero safety drivers, it is not inherently wrong to just have one. There has to be a point where a project graduates to needing only one. Uber’s fault is, possibly, graduating far, far too soon.

On top of all this, safety drivers, if the company is not careful, are probably more likely to tire and look away from the road than ordinary drivers in their own cars. After all, looking away really is safer in such a car than it is in your own. Tesla autopilot owners are also notoriously bad at this. Perversely, the lower the intervention rate, the more likely it is people will get tempted. Companies have to combat this.

If you’re a developer trying out some brand new and untrusted software, you safety drive with great care. You keep your hands near the wheel. Your feet near the pedals. Your eyes on the lookout. You don’t do it for very long, and you are “rewarded” by having to do an intervention often enough that you never tire. To consider the extreme view of that, think about driving with adaptive cruise control. You still have to steer, so there’s no way you take your eyes off the road even though your feet can probably relax.

Once your system gets to a high level (like Tesla’s autopilot in simple situations or Waymo’s car) you need to find other ways to maintain that vigilance. Some options include gaze-tracking systems that make sure eyes are on the road. I have also suggested that systems routinely simulate a failure by drifting out of their lane when it is safe to do so, and correcting it before it gets dangerous if for some reason the safety driver does not intervene. A safety driver who is grabbing the wheel 3 times an hour, and being scored on it, is much less likely to miss the one time a week they actually have to grab it for real.
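Here is a rough sketch of how such a deliberate-drift vigilance test might be scheduled and scored. Everything in it is hypothetical: the `VigilanceTester` class, the 3-per-hour rate (taken from the example above), the 2-second window before the car corrects itself, and the assumption that some other part of the vehicle decides when the road is clear and actually performs and undoes the drift.

```python
import math
import random
from dataclasses import dataclass, field


@dataclass
class VigilanceTester:
    """Hypothetical scheduler and scorekeeper for deliberate, harmless lane drifts.

    This class only decides when to start a test and records the driver's
    response; the vehicle controller is assumed to perform the drift and to
    undo it itself if the driver has not reacted within the window.
    """
    drifts_per_hour: float = 3.0
    self_correct_after_s: float = 2.0
    response_times_s: list = field(default_factory=list)
    misses: int = 0

    def should_start_drift(self, dt_s, road_is_clear, rng):
        """Randomly start a test during a dt_s time step, but only when it is safe."""
        if not road_is_clear:
            return False
        # Poisson arrivals: chance of at least one event in dt_s seconds.
        rate_per_s = self.drifts_per_hour / 3600.0
        return rng.random() < 1.0 - math.exp(-rate_per_s * dt_s)

    def record(self, response_s):
        """Log one test; None means the driver never reacted and the car corrected itself."""
        if response_s is None or response_s > self.self_correct_after_s:
            self.misses += 1
        else:
            self.response_times_s.append(response_s)

    def score(self):
        """Fraction of test drifts the driver caught in time (1.0 if none yet)."""
        total = len(self.response_times_s) + self.misses
        return 1.0 if total == 0 else len(self.response_times_s) / total


if __name__ == "__main__":
    rng = random.Random(42)
    tester = VigilanceTester()
    # Simulate one hour in 1-second steps on a road assumed clear throughout.
    started = sum(tester.should_start_drift(1.0, True, rng) for _ in range(3600))
    print(f"test drifts started in one simulated hour: {started}")
```

Random timing matters here: a driver who can predict when the test comes can stay alert only for it.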

While we don’t have final confirmation, reports suggest the vehicle did not slow at all. Even if study of the accident reveals a valid reason for not detecting the victim 1.4 seconds out (as needed to fully stop), there are just too many different technologies that are all, independently, able to detect her at a shorter distance, which should have at least triggered some braking and reduced severity.
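A little physics shows why even late braking matters. This minimal sketch assumes constant deceleration and ignores actuation delay; the speed and deceleration in the usage example are hypothetical values, not figures from the Uber crash.

```python
import math


def stopping_distance(v0_mps, decel_mps2):
    """Distance needed to stop fully from v0 at constant deceleration."""
    return v0_mps ** 2 / (2.0 * decel_mps2)


def impact_speed(v0_mps, decel_mps2, detect_distance_m):
    """Speed at impact if braking starts detect_distance_m before the obstacle.

    Uses v^2 = v0^2 - 2*a*d; returns 0.0 if the car can stop in time.
    """
    v_sq = v0_mps ** 2 - 2.0 * decel_mps2 * detect_distance_m
    return math.sqrt(v_sq) if v_sq > 0 else 0.0


if __name__ == "__main__":
    v0, a = 17.0, 7.0  # hypothetical speed (m/s) and braking deceleration (m/s^2)
    d_stop = stopping_distance(v0, a)
    for fraction in (1.0, 0.5, 0.25, 0.0):
        v = impact_speed(v0, a, fraction * d_stop)
        energy = (v / v0) ** 2  # crash energy scales with speed squared
        print(f"braking from {fraction:.0%} of stopping distance: "
              f"impact at {v:.1f} m/s, {energy:.0%} of the full crash energy")
```

Because crash energy scales with the square of speed, starting to brake at half the full stopping distance cuts the impact speed to about 71% of the original and halves the energy.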

The key word is independently. As explained above, failures happen. A proper system is designed to still do the best it can in the event of failures of independent components. Failure of the entire system should be extremely unlikely, because the entire system should not be a monolith. Even if the main perception system of the car fails for some reason (as may have happened here) that should result in alarm bells going off to alert the safety driver, and it should also result in independent safety systems kicking in to fire those alarms or even hit the brakes. The Volvo comes with such a system, but that system is presumably disabled. Where possible, a system like that should be enabled, but used only to beep warnings at the safety driver. There should be a “reptile brain” at the low level of the car which, in the event of complete failure of all high level systems, knows enough to look at raw radar, LIDAR or camera data and sound alarms or trigger braking if the main system can’t.
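A toy version of that “reptile brain” might look like the following. It is a sketch under loudly stated assumptions: the `SensorReturn` type, the lane-corridor width, the deceleration and warning thresholds, and the heartbeat (`main_alive`) and `main_braking` flags from the main system are all hypothetical, and a real system would also need to filter spurious returns, which this ignores. The `may_brake` switch reflects the option of running such a layer in warn-only mode.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"
    WARN = "warn"    # beep at the safety driver
    BRAKE = "brake"  # command braking directly


@dataclass
class SensorReturn:
    """One raw radar or LIDAR return in vehicle coordinates (hypothetical type)."""
    x_m: float  # distance ahead of the front bumper
    y_m: float  # lateral offset from the car's centerline


def reptile_brain(returns, speed_mps, main_alive, main_braking, *,
                  may_brake=True, lane_half_width_m=1.5,
                  decel_mps2=6.0, warn_margin_s=1.0):
    """Low-level check on raw returns that does not rely on the main perception stack.

    All thresholds are illustrative placeholders, not tuned values.
    """
    # Keep only returns ahead of us and inside our own lane corridor.
    ahead = [r.x_m for r in returns
             if r.x_m > 0 and abs(r.y_m) <= lane_half_width_m]

    action = Action.NONE
    if ahead:
        nearest = min(ahead)
        stop_dist = speed_mps ** 2 / (2.0 * decel_mps2)
        if nearest <= stop_dist and not main_braking:
            # Object inside stopping distance and nobody is braking: act now.
            action = Action.BRAKE if may_brake else Action.WARN
        elif nearest <= stop_dist + speed_mps * warn_margin_s:
            # Close enough that the driver should be looking at it.
            action = Action.WARN

    # A silent main system is itself an alarm, even with a clear road.
    if action is Action.NONE and not main_alive:
        action = Action.WARN
    return action
```

The point of the design is that nothing here depends on the main system’s object list or plan; it reads raw returns and only needs the main system to report whether it is alive and braking.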

All the classes of individual failures that happened to Uber could happen to a more sophisticated team in some fashion. In extreme bad luck they could even happen all at once. The system should be designed to make it very unlikely that they all happen at once, so that the probability of that is less than the probability of a human having a crash.
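Written as a formula, and assuming the layers fail independently, the requirement looks roughly like this. The notation is introduced here for illustration: λ is the rate per mile of hazards the main system mishandles, p_i is the chance that fallback layer i (safety driver, ADAS, low-level monitor and so on) also misses such a hazard, and r_human is the crash rate per mile for good human drivers.

```latex
\lambda \prod_{i=1}^{n} p_i \;<\; r_{\text{human}}
```

If the layers share a common cause of failure, the product understates the real risk, which is why the system should not be a monolith.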

So much to write here, so in the future look for thoughts on:

I have written a few times about the unusual nature of robocar accidents. Recently I was discussing this with a former student who is doing some research in the area. As a first step, she began looking at lists of all the reasons that humans cause accidents. (The majority of them, on police reports, are simply that one car was not in its proper right-of-way, which doesn’t reveal a lot.)

This led me, though, to the following declaration, which goes against most early intuitions.

Every human accident teaches us something about the way people have accidents. Every robocar accident teaches us about a way robocars will never have an accident again.

While this statement is not 100% true, it reveals the stark difference between the way people and robots drive. The whole field of actuarial science is devoted to understanding unusual events (with car accidents being the primary subject) and their patterns. When you notice a pattern, you can calculate probabilities that other people will do it, and actuaries use that to price insurance and even set policy.

When a robocar team discovers their car has made any mistake, and certainly caused any incident, their immediate move is to find and fix the cause of the mistake, and update the software. That particular mistake will generally never happen again. We have learned very little about the pattern of robocar accidents, though the teams have definitely learned something to fix in their software. Since for now, and probably forever, the circumstances of robocar accidents will be a matter of public record, all teams, and thus all cars, will also learn portions of the same thing. (More on that below.)

The rule won’t be entirely true. There are some patterns. There are patterns of software bugs too — every programmer knows the risk of off-by-one errors and memory allocation mistakes. We actually build our compilers, tools and even processors to help detect and prevent all the known common programming mistakes we can, and there’s an active field of research to develop AI able to do that. But that does not teach us a lot about what type of car accidents this might generate. We know robocars will suffer general software crashes and any good system has to be designed to robustly handle that with redundancies. There is a broad class of errors known as perception false negatives, where a system fails to see or understand something on the road, but usually learning about one such error does not teach us much about the others of its class. It is their consequences which will be similar, not their causes.

Perception errors are the easiest to analogize to human activity. Humans also don’t see things before an accident. This, however, is due to “not paying attention,” or “not looking in that direction,” something that robots won’t ever be guilty of. A robot’s perception failure would be like a human’s mini-stroke temporarily knocking out part of our visual cortex (i.e., not a real-world issue) or a flaw in the “design” of the human brain. In the robot, however, design flaws can usually be fixed.

There are some things that can’t be fixed, and thus teach us patterns. Some things are just plain hard, like seeing in fog or under snow, or seeing hidden vehicles/pedestrians. The fact that these things are hard can help you calculate probabilities of error.

This is much less true for the broad class of accidents where the vehicle perceives the world correctly, but decides the wrong thing to do. These are the mistakes that, once made, will probably never be made again.

There actually have been very few accidents involving robocars that were the fault of the robocar system. In fact, the record is surprisingly good. I am not including things like Tesla autopilot crashes — the Tesla autopilot is designed to be an incomplete system and they document explicitly what it won’t do.

Indeed, the only pattern I see from the few reported incidents is the obvious one: they happened in unusual driving situations. Merging with a bus when one wide lane is often used by 2 cars at once. Dealing with a lane-splitting motorcycle after aborting an attempt at a lane change. (Note that police ruled against the motorcyclist in this case, but it is being disputed in court.) Whatever faults occurred here have been fixed by now.

Developers know that unusual situations are an area of risk, so they go out searching for them, and use simulators and test tracks to let them work extensively with them. You may not learn patterns, but you can come up with probability estimates to measure what fraction of everyday driving involves extraordinary situations. This can give you insurance-style confidence, to know that even if you aren’t sure you handle every unusual situation, the overall total risk is low.

One place this can be useful is in dealing with equipment failures. Today, a car driving only with vision and radar is not safe enough; a LIDAR is needed for the full safety level. If the LIDAR fails, however, the car is not blind; it is just a bit less safe. While you would not drive for miles with the LIDAR off, you might judge that the risk of driving to a safe spot to pull off on just camera and radar is acceptable, simply because the amount of driving in that mode will be very small. We do the same thing with physical hardware: driving with a blown-out tire is riskier, but we can usually get off the road. We don’t insist every car have 5 wheels to handle that situation.
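The same exposure-weighted reasoning, for unusual situations or for degraded-sensor driving, can be sketched in a few lines: expected incidents are the sum, over driving modes, of miles in that mode times the incident rate in that mode. All rates and mileages below are hypothetical, including the assumption that camera-and-radar-only driving is ten times riskier per mile.

```python
def total_risk(exposures):
    """Expected incidents: sum of (miles in a mode) x (incidents per mile in that mode)."""
    return sum(miles * per_mile for miles, per_mile in exposures)


def added_share(exposures, index):
    """Fraction of the total expected risk contributed by one driving mode."""
    miles, per_mile = exposures[index]
    return miles * per_mile / total_risk(exposures)


# Hypothetical placeholders, not measured rates.
BASE_RATE = 1e-6  # incidents per mile with the full sensor suite
EXPOSURES = [
    (10_000.0, BASE_RATE),  # normal driving with LIDAR, camera and radar
    (0.5, 10 * BASE_RATE),  # LIDAR failed: half a mile to a safe spot on camera and radar
]

if __name__ == "__main__":
    print(f"expected incidents: {total_risk(EXPOSURES):.6f}")
    print(f"share from degraded driving: {added_share(EXPOSURES, 1):.3%}")
```

With these inputs the half-mile limp to the shoulder contributes about 0.05% of the expected risk, even though it is ten times riskier per mile.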

It is also worth noting that the human race is capable of learning from accidents. In a sense, every traffic safety rule, most road signs, and many elements of road engineering are the result of learning from accidents and improving safety. While the fact that one human misjudges a left turn doesn’t stop other humans from doing so, if it causes a “no left turn” sign to go up, it does. Ironically, the robot does not need the no left turn sign; it will never misjudge the speed of oncoming vehicles and make the turn at the wrong time.

Car design is also guided heavily by the lessons of past accidents. That’s everything from features like crumple zones, which don’t affect human behaviour, to the blind-spot warning system, which does.

There are a few teams who hope to make a car drive with only a neural network. That is to say, the neural network takes in sensor data and outputs steering controls. Such a system is more akin to humans in that a flaw found in that approach might be found again in other similarly designed vehicles. After an accident, such a car would have its network retrained so that it never made that precise mistake again (nor any of the other potential mistakes in its training library). This might, however, be a very narrow retraining.

This is one reason that only smaller teams are trying this approach. Larger teams like Waymo are making very extensive use of neural networks, but primarily to improve perception, not to make driving decisions. If a perception error is discovered, the network retraining to fix it will ideally be quite extensive, to avoid related errors. Neural network perception errors also tend to be intermittent: the network fails to see something in one frame, but sees it in a later frame. The QA effort is to make it see things sooner and more reliably.
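One hedged way to track “sooner and more reliably” is to score each labeled object by its latency to first detection, its per-frame hit rate, and how often the detection drops out after being acquired. This metric set, the `detection_metrics` name and the 10 Hz frame period are illustrative assumptions, not any team’s actual QA procedure.

```python
def detection_metrics(frames, frame_period_s=0.1):
    """Score one labeled object's per-frame detections.

    `frames` holds one boolean per sensor frame (True = detected),
    starting when the object first became visible. Returns
    (latency to first detection in seconds or None, per-frame hit rate,
    number of dropouts).
    """
    first = next((i for i, seen in enumerate(frames) if seen), None)
    latency_s = None if first is None else first * frame_period_s
    hit_rate = sum(frames) / len(frames) if frames else 0.0
    # A dropout is a frame where the object was seen in the previous frame but missed in this one.
    dropouts = sum(1 for prev, cur in zip(frames, frames[1:]) if prev and not cur)
    return latency_s, hit_rate, dropouts


if __name__ == "__main__":
    # Hypothetical log for one pedestrian: missed twice, seen, lost, then held.
    print(detection_metrics([False, False, True, False, True, True]))
```

Lower latency and fewer dropouts are the “sooner” and “more reliably” halves of the goal; tracking them separately shows which one a retraining actually improved.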

This raises the interesting question of sharing crash reports. Developers are now spending huge amounts of money racking up test miles on their cars. They want to encounter lots of strange situations and learn from them. Waymo’s 4 million miles of testing hardly came for free. This makes them and others highly reluctant to release to the public all that hard-won information.