Robohub highlights 2025

Over the course of the year, we’ve had the pleasure of working with many talented researchers from across the globe. As 2025 draws to a close, we take a look back at some of the excellent blog posts, interviews and podcasts from our contributors.

Teaching robot policies without new demonstrations: interview with Jiahui Zhang and Jesse Zhang

Jiahui Zhang and Jesse Zhang tell us about their framework for learning robot manipulation tasks solely from language instructions, without per-task demonstrations.

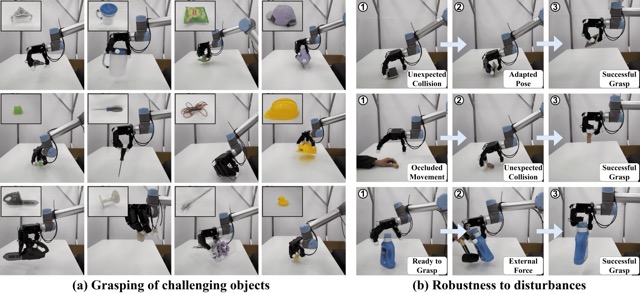

CoRL2025 – RobustDexGrasp: dexterous robot hand grasping of nearly any object

Hui Zhang writes about work presented at CoRL2025 on RobustDexGrasp, a novel framework that tackles different grasping challenges with targeted solutions.

Robot Talk Episode 133 – Creating sociable robot collaborators, with Heather Knight

Robot Talk host Claire Asher chatted to Heather Knight from Oregon State University about applying methods from the performing arts to robotics.

Generations in Dialogue: Human-robot interactions and social robotics with Professor Marynel Vázquez

In this podcast from AAAI, host Ella Lan asked Professor Marynel Vázquez about what inspired her research direction, how her perspective on human-robot interactions has changed over time, robots navigating the social world, and more.

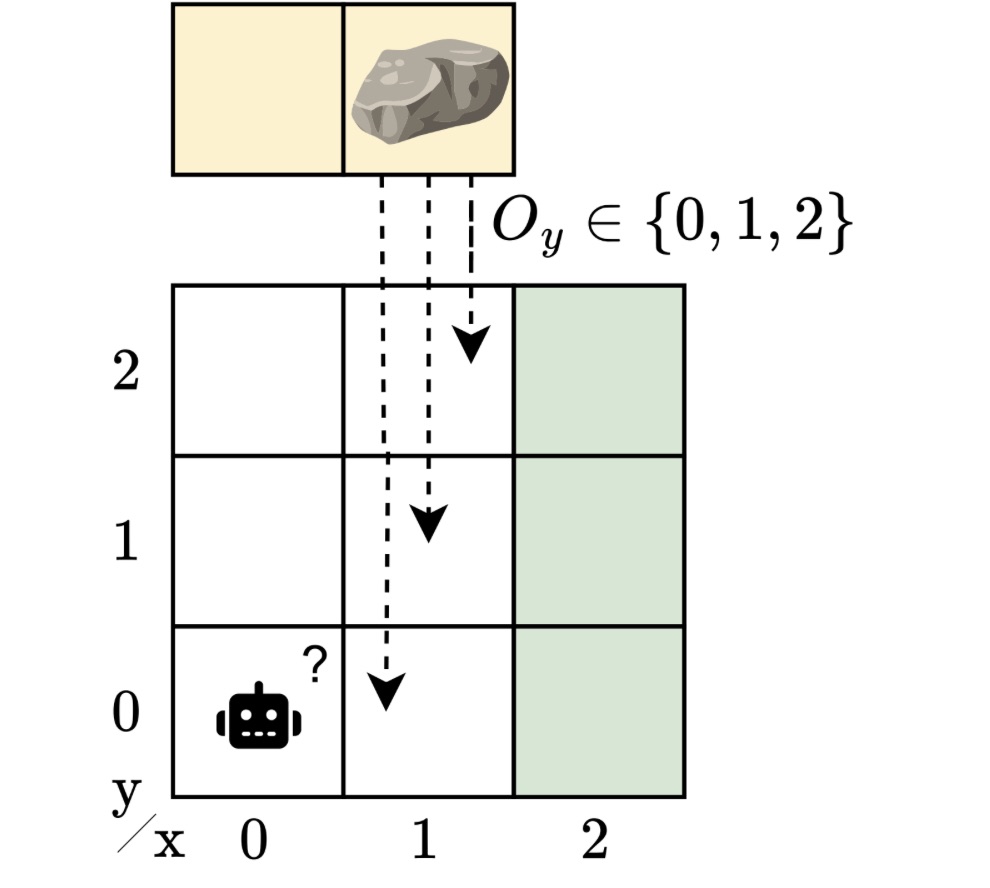

Learning robust controllers that work across many partially observable environments

In this blog post, Maris Galesloot summarizes work presented at IJCAI 2025, which explores designing controllers that perform reliably even when the environment may not be precisely known.

Robot Talk Episode 130 – Robots learning from humans, with Chad Jenkins

Claire Asher chatted to Chad Jenkins from University of Michigan about how robots can learn from people and assist us in our daily lives.

Interview with Zahra Ghorrati: developing frameworks for human activity recognition using wearable sensors

Zahra Ghorrati is pursuing her PhD at Purdue University, where her dissertation focuses on developing scalable and adaptive deep learning frameworks for human activity recognition (HAR) using wearable sensors.

Self-supervised learning for soccer ball detection and beyond: interview with winners of the RoboCup 2025 best paper award

We caught up with some of the authors of the RoboCup 2025 best paper award to find out more about the work, how their method can be transferred to applications beyond RoboCup, and their future plans for the competition.

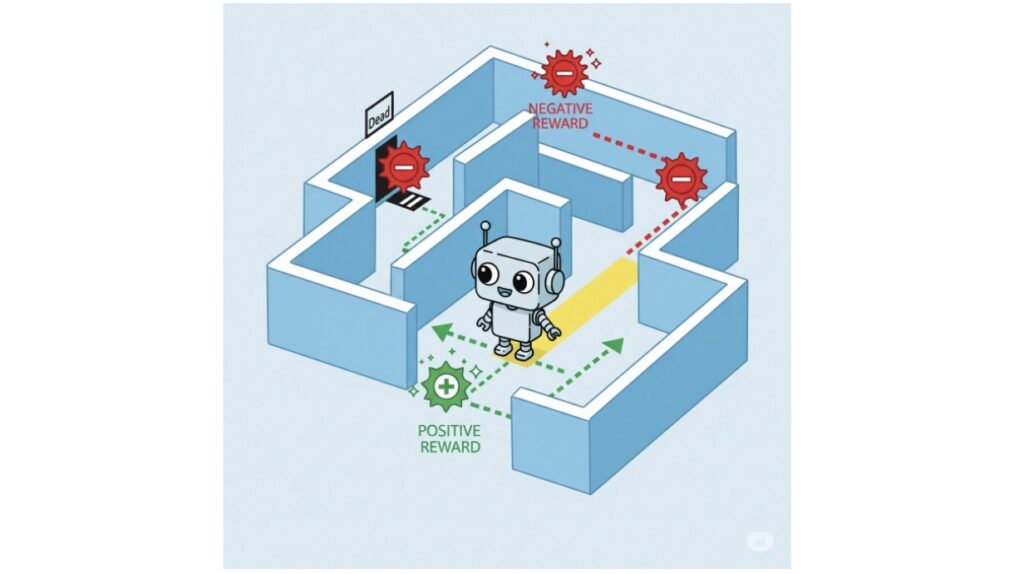

#IJCAI2025 distinguished paper: Combining MORL with restraining bolts to learn normative behaviour

Agata Ciabattoni and Emery Neufeld introduce a framework for guiding reinforcement learning agents to comply with social, legal, and ethical norms.

Robot Talk Episode 114 – Reducing waste with robotics, with Josie Gotz

Claire Asher chatted to Josie Gotz from the Manufacturing Technology Centre about robotics for material recovery, reuse and recycling.

Multi-agent path finding in continuous environments

Kristýna Janovská and Pavel Surynek write about how a group of agents can minimise their journey length whilst avoiding collisions.

RoboCupRescue: an interview with Adam Jacoff

Find out what’s new in the RoboCupRescue League this year.

An interview with Nicolai Ommer: the RoboCup Soccer Small Size League

We caught up with Nicolai to find out more about the Small Size League, how the auto referees work, and how teams use AI.

Interview with Kate Candon: Leveraging explicit and implicit feedback in human-robot interactions

Hear from PhD student Kate about her work on human-robot interactions.

AIhub coffee corner: Agentic AI

The AIhub coffee corner captures the musings of AI experts over a short conversation.

Generations in Dialogue: Multi-agent systems and human-AI interaction with Professor Manuela Veloso

Host Ella Lan chats to Professor Manuela Veloso about her research journey and path into AI, the history and evolution of AI research, inter-generational collaborations, and more.

Preparing for kick-off at RoboCup2025: an interview with General Chair Marco Simões

We spoke to Marco Simões, one of the General Chairs of RoboCup 2025 and President of RoboCup Brazil.

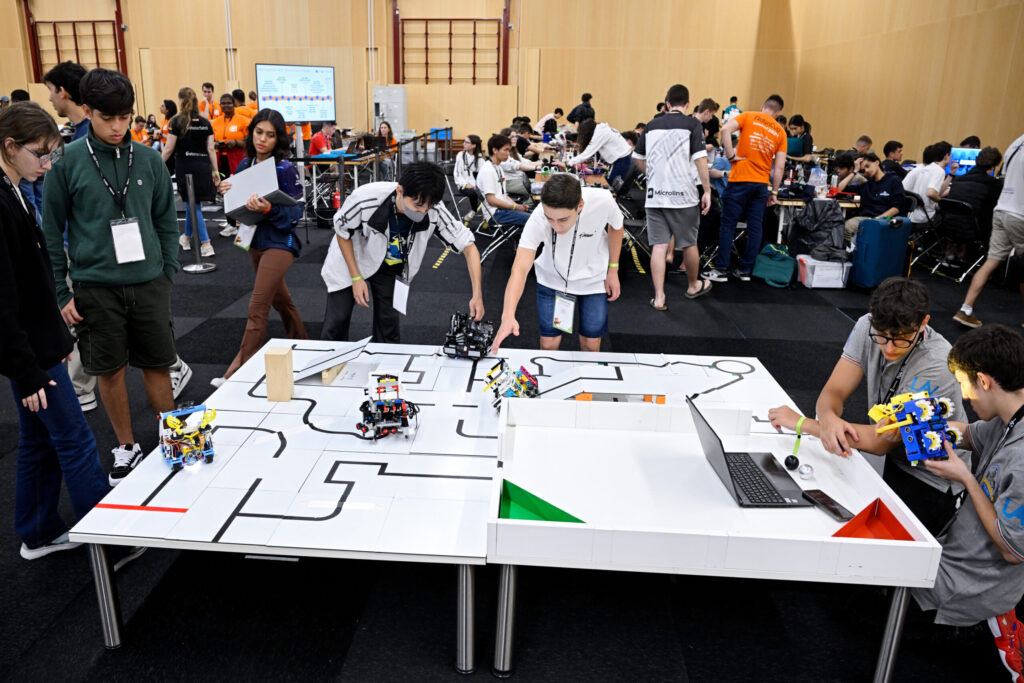

Gearing up for RoboCupJunior: Interview with Ana Patrícia Magalhães

RoboCup Junior Rescue @ WK RoboCup 2024. Photo: RoboCup/Bart van Overbeeke

We heard from the organiser of RoboCupJunior 2025 and found out more about the event.

Top Ten Stories in AI Writing, Q4 2025

Shedding its ‘fun toy’ image, AI like ChatGPT was increasingly seen by the business community in Q4 as a must-have productivity tool destined to reward early adopters and punish Luddites.

ChatGPT’s maker, for example, released a study finding that everyday AI business users are saving at least 40 minutes a day on busy work – while power users are saving up to two hours a day.

Meanwhile, MIT released a report concluding that AI can currently eliminate 12% of all jobs – as more businesses were found issuing decrees along the lines of ‘Use AI or You’re Fired.’

Especially alarming for writers was a move by media outlet Business Insider, which started publishing news stories completely written by AI and carrying an AI byline.

Plus, photographers and graphic artists got their own dose of rubber-meets-road reality with back-to-back releases of extremely powerful new AI imaging tools built into Gemini and ChatGPT.

But despite the breakneck development, users continued to report that AI agents – designed to automate multi-step tasks – still fail miserably.

Plus, many users continued to ‘forget’ that AI makes up facts, and that using AI responses without fact-checks can lead to major ‘egg-on-face’ moments.

The most gleaming ray of hope in all this: The Wall Street Journal reported that AI is considered so essential to U.S. defense, there’s a good chance the U.S. government will bail out the AI industry if the much-feared ‘AI Bubble’ bursts.

Here’s a full rundown of how those stories – and more – helped shape AI writing in Q4 2025:

*ChatGPT-Maker Study: The State of Enterprise AI: New research from OpenAI finds that everyday business users of AI are saving about 40-60 minutes a day when compared to working without the tool.

Even better, the heaviest AI users say they’re saving up to two hours a day with the tech.

*AI Can Already Eliminate 12% of U.S. Workforce: A new study from MIT finds that AI can already eliminate 12% of everyday jobs.

Dubbed the “Iceberg Index,” the study simulated AI’s ability to handle – or partially handle – nearly 1,000 occupations that currently employ more than 150 million people in the U.S.

Observes writer Megan Cerullo: “AI is also already doing some of the entry-level jobs that have historically been reserved for recent college graduates or relatively inexperienced workers.”

*Use AI or You’re Fired: In another sign that the days of ‘AI is Your Buddy’ are fading fast, increasing numbers of businesses have turned to strong-arming employees when it comes to AI.

Observes Wall Street Journal writer Lindsay Ellis: “Rank-and-file employees across corporate America have grown worried over the past few years about being replaced by AI.

“Something else is happening now: AI is costing workers their jobs if their bosses believe they aren’t embracing the technology fast enough.”

*Breaking News Gets an AI Byline at Business Insider: The next news story you read from Business Insider may be completely written by AI — and carry an AI byline.

The media outlet has announced a pilot test of a story writing algorithm that will grab a piece of breaking news and give it context by combining it with data drawn from stories in the Business Insider archive.

The only human involvement will be an editor, who will look over the finished product before it’s published.

*Study: AI Agents Virtually Useless at Completing Freelance Assignments: New research finds that much-ballyhooed AI agents are literally horrible at completing everyday assignments found on freelance brokerage sites like Fiverr and Upwork.

Observes writer Frank Landymore: “The top performer, they found, was an AI agent from the Chinese startup Manus with an automation rate of just 2.5 percent — meaning it was only able to complete 2.5 percent of the projects it was assigned at a level that would be acceptable as commissioned work in a real-world freelancing job, the researchers said.

“Second place was a tie, at 2.1 percent, between Elon Musk’s Grok 4 and Anthropic’s Claude Sonnet 4.5.”

*Oops, Sorry Australia, Here’s Your Money Back: Consulting firm Deloitte has agreed to refund the Australian government $440,000 for a study both agree was riddled with errors created by AI.

Observes writer Krishani Dhanji: “University of Sydney academic Dr. Christopher Rudge — who first highlighted the errors — said the report contained ‘hallucinations’ where AI models may fill in gaps, misinterpret data, or try to guess answers.”

Insult to injury: The near half-million-dollar payment is only a partial refund of what the Australian government actually paid for the flawed research.

*Forget Benchmarks: Put AI Through Your Own Tests Before You Commit: While benchmarks offer an indication of the AI solution you’re considering, you really need to put the AI through your own tests before you opt for anything, according to Ethan Mollick.

An associate professor of management at the Wharton School of the University of Pennsylvania, Mollick studies and teaches entrepreneurship, innovation and how AI is changing work and education.

Observes Mollick: “You need to know specifically what your AI is good at — not what AIs are good at on average.”

*AI Gets a Number One Country Hit: Well, it’s official: AI can now write and produce a country hit with the best of ’em.

“Walk My Walk,” a song credited to an AI artist named ‘Breaking Rust,’ has hugged the number one spot on Billboard’s Country Digital Song Sales chart for two weeks in a row.

The hit comes on the heels of another AI hit in another music genre, according to Billboard’s Adult R&B Airplay chart.

*Free AI from China Keeps U.S. Tech Titans on Their Toes: While still holding a slim lead, major AI players like ChatGPT, Gemini and Claude are feeling the nip-at-their-heels of ‘nearly as good’ – and free – AI alternatives from China.

Key Chinese players like DeepSeek and Qwen, for example, are within chomping distance of the U.S. market leaders – and are open source, or freely available for download and tinkering.

One caveat: Researchers have found AI code embedded in some Chinese AI that can be used to forward your data along to the Chinese Communist Party.

*Solution to AI Bubble Fears: U.S. Government?: The Wall Street Journal reports that AI is now considered so essential to U.S. defense, the U.S. government may step in to save the AI industry — should it implode from the irrational exuberance of investors.

Observes lead writer Sarah Myers West: “The federal government is already bailing out the AI industry with regulatory changes and public funds that will protect companies in the event of a private sector pullback.

“Despite the lukewarm market signals, the U.S. government seems intent on backstopping American AI — no matter what.”

Share a Link: Please consider sharing a link to https://RobotWritersAI.com from your blog, social media post, publication or emails. More links leading to RobotWritersAI.com help everyone interested in AI-generated writing.

–Joe Dysart is editor of RobotWritersAI.com and a tech journalist with 20+ years’ experience. His work has appeared in 150+ publications, including The New York Times and the Financial Times of London.

The post Top Ten Stories in AI Writing, Q4 2025 appeared first on Robot Writers AI.

The Silicon Manhattan Project: China’s Atomic Gamble for AI Supremacy

Reports surfaced this week confirming what many industry observers had long suspected but feared to articulate: China has launched a “Manhattan Project” for semiconductors. This massive, state-backed initiative has a singular, existential goal—to reverse-engineer the ultra-complex lithography machines currently monopolized […]

The post The Silicon Manhattan Project: China’s Atomic Gamble for AI Supremacy appeared first on TechSpective.

This tiny chip could change the future of quantum computing

2025 Top Article – The Future of Machining: Key Trends and Innovations

Researchers create world’s smallest programmable, autonomous robots

The 100-agent benchmark: why enterprise AI scale stalls and how to fix it

Most enterprises scaling agentic AI are overspending without knowing where the capital is going. This isn’t just a budget oversight. It points to deeper gaps in operational strategy. While building a single agent is a common starting point, the true enterprise challenge is managing quality, scaling use cases, and capturing measurable value across a fleet of 100+ agents.

Organizations treating AI as a collection of isolated experiments are hitting a “production wall.” In contrast, early movers are pulling ahead by building, operating, and governing a mission-critical digital agent workforce.

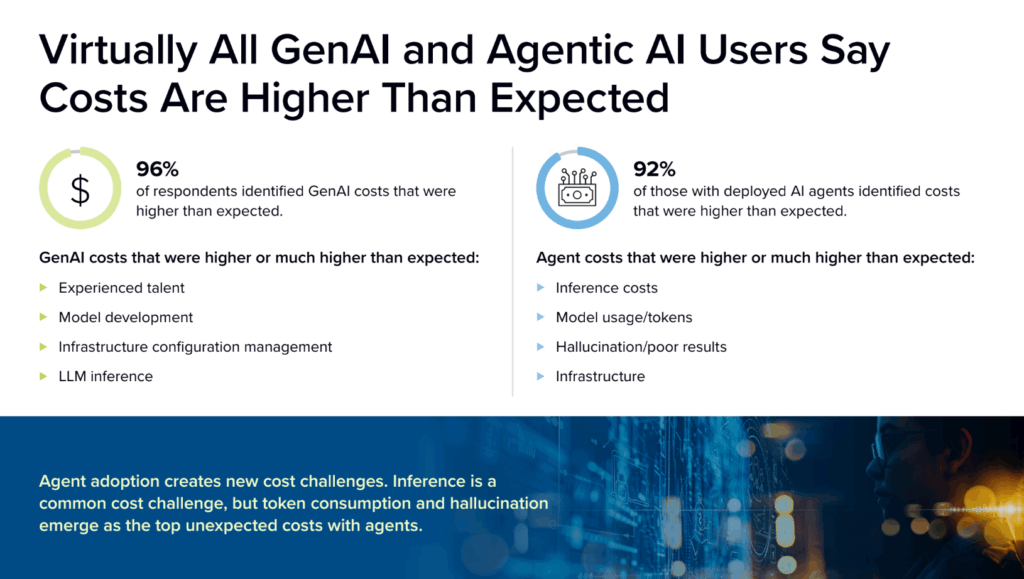

New IDC research reveals the stakes:

- 96% of organizations deploying generative AI report costs higher than expected.

- 71% admit they have little to no control over the source of those costs.

The competitive gap is no longer about build speed. It is about who can operate a safe, “Tier 0” service foundation in any environment.

The high cost of complexity: why pilots fail to scale

The “hidden AI tax” is not a one-time fee; it is a compounding financial drain that multiplies as you move from pilot to production. When you scale from 10 agents to 100, a lack of visibility and governance turns minor inefficiencies into an enterprise-wide cost crisis.

The true cost of AI is in the complexity of operation, not just the initial build. Costs compound at scale due to three specific operational gaps:

- Recursive loops: Without strict monitoring and AI-first governance, agents can enter infinite loops of re-reasoning. In a single night, one unmonitored agent can consume thousands of dollars in tokens.

- The integration tax: Scaling agentic AI often requires moving from a few vendors to a complex web of providers. Without a unified runtime, 48% of IT and development teams are bogged down in maintenance and “plumbing” rather than innovation (IDC).

- The hallucination remediator: Remediating hallucinations and poor results has emerged as a top unexpected cost. Without production-focused governance baked into the runtime, organizations are forced to retrofit guardrails onto systems that are already live and losing money.
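The recursive-loop risk in the list above can be illustrated with a minimal sketch of a per-run budget guard. This is not any vendor's runtime API: `RunBudget`, `BudgetExceeded`, `run_agent` and the `step_fn` callable are hypothetical names introduced here for illustration, and the limits are arbitrary.

```python
from dataclasses import dataclass


class BudgetExceeded(RuntimeError):
    """Raised when an agent run exceeds its step or token allowance."""


@dataclass
class RunBudget:
    """Hypothetical per-run ceiling on reasoning steps and tokens."""
    max_steps: int
    max_tokens: int
    steps_used: int = 0
    tokens_used: int = 0

    def charge(self, tokens: int) -> None:
        """Record one reasoning step and its token cost, halting on overrun."""
        self.steps_used += 1
        self.tokens_used += tokens
        if self.steps_used > self.max_steps:
            raise BudgetExceeded(f"step limit {self.max_steps} exceeded")
        if self.tokens_used > self.max_tokens:
            raise BudgetExceeded(f"token limit {self.max_tokens} exceeded")


def run_agent(step_fn, budget: RunBudget) -> RunBudget:
    """Call step_fn until it reports completion or the budget runs out.

    step_fn is an assumed callable returning (done, tokens_spent); a real
    agent framework would supply its own equivalent.
    """
    while True:
        done, tokens = step_fn()
        budget.charge(tokens)
        if done:
            return budget
```

With a 3,000-token ceiling and a step that spends 500 tokens each time without ever finishing, the run is stopped on the seventh step rather than continuing overnight. The point of the sketch is only that the limit lives in the loop that drives the agent, not in a report reviewed afterwards.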

The production wall: why agentic AI stalls in production

Moving from a pilot to production is a structural leap. Challenges that seem manageable in a small experiment compound exponentially at scale, leading to a production wall where technical debt and operational friction stall progress.

Production reliability

Teams face a hidden burden maintaining zero downtime in mission-critical environments. In high-stakes industries like manufacturing or healthcare, a single failure can stop production lines or cause a network outage.

Example: A manufacturing firm deploys an agent to autonomously adjust supply chain routing in response to real-time disruptions. A brief agent failure during peak operations causes incorrect routing decisions, forcing multiple production lines offline while teams manually intervene.

Deployment constraints

Cloud vendors typically lock organizations into specific environments, preventing deployment on-premises, at the edge, or in air-gapped sites. Enterprises need the ability to maintain AI ownership and comply with sovereign AI requirements that cloud vendors cannot always meet.

Example: A healthcare provider builds a diagnostic agent in a public cloud, only to find that new Sovereign AI compliance requirements demand data stay on-premises. Because their architecture is locked, they are forced to restart the entire project.

Infrastructure complexity

Teams are overwhelmed by “infrastructure plumbing” and struggle to validate or scale agents as models and tools constantly evolve. This unsustainable burden distracts from developing core business requirements that drive value.

Example: A retail giant attempts to scale customer service agents. Their engineering team spends weeks manually stitching together OAuth, identity controls, and model APIs, only to have the system fail when a tool update breaks the integration layer.

Inefficient operations

Connecting inference serving with runtimes is complex, often driving up compute costs and failing to meet strict latency requirements. Without efficient runtime orchestration, organizations struggle to balance performance and value in real time.

Example: A telecommunications firm deploys reasoning agents to optimize network traffic. Without efficient runtime orchestration, the agents suffer from high latency, causing service delays and driving up costs.

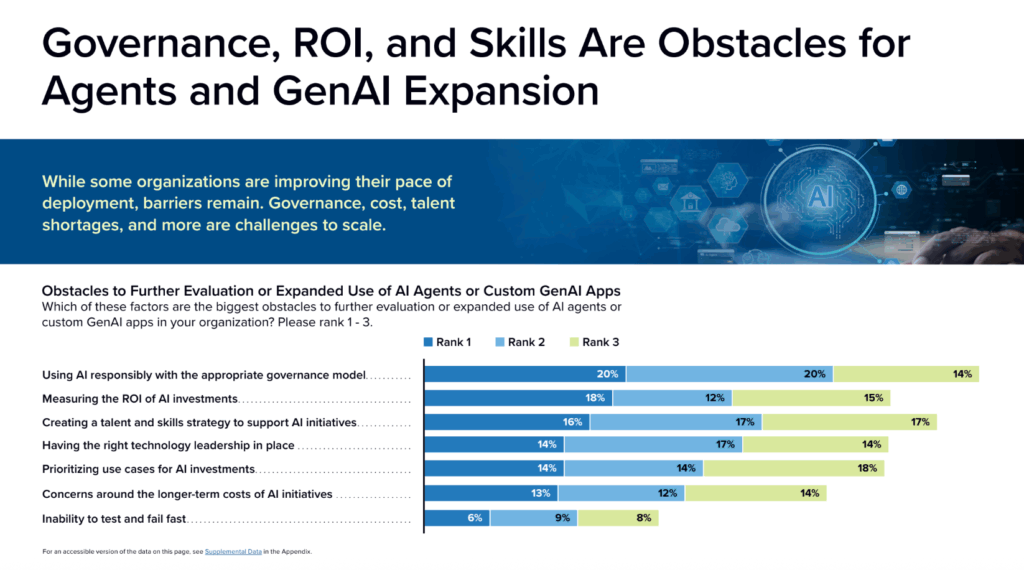

Governance: the constraint that determines whether agents scale

For 68% of organizations, clarifying risk and compliance implications is the top requirement for agent use. Without this clarity, governance becomes the single biggest obstacle to expanding AI.

Success is no longer defined by how fast you experiment, but by your ability to focus on productionizing an agentic workforce from the start. This requires AI-first governance that enforces policy, cost, and risk controls at the agent runtime level, rather than retrofitting guardrails after systems are already live.

Example: A company uses an agent for logistics. Without AI-first governance, the agent might trigger an expensive rush-shipping order through an external API after misinterpreting customer frustration. This results in a financial loss because the agent operated without a policy-based safeguard or a “human-in-the-loop” limit.
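As a rough illustration of the policy-based safeguard and “human-in-the-loop” limit mentioned in the example above, the sketch below checks every tool call against a policy before it runs. All names (`Policy`, `ToolCall`, `Decision`, the tool names and the 50-dollar limit) are assumptions made for this example and do not describe any particular platform.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


@dataclass(frozen=True)
class ToolCall:
    """A tool invocation the agent proposes, with its estimated cost."""
    tool: str
    cost_usd: float


@dataclass(frozen=True)
class Policy:
    """Hypothetical runtime policy: a tool allow-list plus a spending limit."""
    allowed_tools: frozenset
    auto_approve_limit_usd: float

    def evaluate(self, call: ToolCall) -> Decision:
        # Tools outside the allow-list are never executed.
        if call.tool not in self.allowed_tools:
            return Decision.DENY
        # Calls above the limit are routed to a human instead of running.
        if call.cost_usd > self.auto_approve_limit_usd:
            return Decision.NEEDS_APPROVAL
        return Decision.ALLOW


policy = Policy(
    allowed_tools=frozenset({"standard_shipping", "rush_shipping"}),
    auto_approve_limit_usd=50.0,
)
rush_order = ToolCall(tool="rush_shipping", cost_usd=400.0)
decision = policy.evaluate(rush_order)  # Decision.NEEDS_APPROVAL
```

Under these assumed values, the rush-shipping order from the example would be held for human approval rather than sent to the external API.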

This productionization-focused approach to governance highlights a key difference between platforms designed for agentic systems and those whose governance remains limited to the underlying data layer.

Building for the 100-agent benchmark

The 100-agent mark is where the gap between early movers and the rest of the market becomes a permanent competitive divide. Closing this gap requires a unified platform approach, not a fragmented stack of point tools.

Platforms built for managing an agentic workforce are designed to address the operational challenges that stall enterprise AI at scale. DataRobot’s Agent Workforce Platform reflects this approach by focusing on several foundational capabilities:

- Flexible deployment: Whether in the public cloud, private GPU cloud, on-premises, or air-gapped environments, ensure you can deploy consistently across all environments. This prevents vendor lock-in and ensures you maintain full ownership of your AI IP.

- Vendor-neutral and open architecture: Build a flexible layer between hardware, models, and governance rules that allows you to swap components as technology evolves. This future-proofs your digital workforce and reduces the time teams spend on manual validation and integration.

- Full lifecycle management: Managing an agentic workforce requires solving for the entire lifecycle — from Day 0 inception to Day 90 maintenance. This includes leveraging specialized tools like syftr for accurate, low-latency workflows and Covalent for efficient runtime orchestration to control inference costs and latency.

- Built-in AI-first governance: Unlike tools rooted purely in the data layer, DataRobot focuses on agent-specific risks like hallucination, drift, and responsible tool use. Ensure your agents are safe, always operational, and strictly governed from day one.
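One way to picture the “flexible layer” between models and agents described in the list above is an interface that agent code depends on, with providers plugged in behind it. The sketch below is a generic illustration and not DataRobot's API; `TextModel`, `EchoModel`, `UpperModel` and `Agent` are hypothetical names, and the two stand-in providers exist only so the example runs without any external service.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only surface the agent code is allowed to depend on."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for provider A (hypothetical, no network calls)."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UpperModel:
    """Stand-in for provider B (hypothetical, no network calls)."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class Agent:
    """Agent logic written against TextModel, not a specific vendor."""

    def __init__(self, model: TextModel) -> None:
        self.model = model

    def answer(self, question: str) -> str:
        return self.model.complete(question)


agent = Agent(EchoModel())
first = agent.answer("status?")
agent.model = UpperModel()  # swap the provider; the agent code is unchanged
second = agent.answer("status?")
```

The design choice being illustrated is that swapping a model touches one assignment instead of every agent, which is where the reduced integration effort would come from.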

The competitive gap is widening. Early movers who invest in a foundation of governance, unified tooling, and cost visibility from day one are already pulling ahead. By focusing on the digital agent workforce as a system rather than a collection of experiments, you can finally move beyond the pilot and deliver real business impact at scale.

Want to learn more? Download the research to discover why most AI pilots fail and how early movers are driving real ROI. Read the full IDC InfoBrief here.

The post The 100-agent benchmark: why enterprise AI scale stalls and how to fix it appeared first on DataRobot.

2025 Top Article – The Ultimate Guide to Depth Perception and 3D Imaging Technologies

The science of human touch – and why it’s so hard to replicate in robots

By Perla Maiolino, University of Oxford

Robots now see the world with an ease that once belonged only to science fiction. They can recognise objects, navigate cluttered spaces and sort thousands of parcels an hour. But ask a robot to touch something gently, safely or meaningfully, and the limits appear instantly.

As a researcher in soft robotics working on artificial skin and sensorised bodies, I’ve found that trying to give robots a sense of touch forces us to confront just how astonishingly sophisticated human touch really is.

My work began with the seemingly simple question of how robots might sense the world through their bodies. Develop tactile sensors, fully cover a machine with them, process the signals and, at first glance, you should get something like touch.

Except that human touch is nothing like a simple pressure map. Our skin contains several distinct types of mechanoreceptor, each tuned to different stimuli such as vibration, stretch or texture. Our spatial resolution is remarkably fine and, crucially, touch is active: we press, slide and adjust constantly, turning raw sensation into perception through dynamic interaction.

Engineers can sometimes mimic a fingertip-scale version of this, but reproducing it across an entire soft body, and giving a robot the ability to interpret this rich sensory flow, is a challenge of a completely different order.

Working on artificial skin also quickly reveals another insight: much of what we call “intelligence” doesn’t live solely in the brain. Biology offers striking examples – most famously, the octopus.

Octopuses distribute most of their neurons throughout their limbs. Studies of their motor behaviour show an octopus arm can generate and adapt movement patterns locally based on sensory input, with limited input from the brain.

Their soft, compliant bodies contribute directly to how they act in the world. And this kind of distributed, embodied intelligence, where behaviour emerges from the interplay of body, material and environment, is increasingly influential in robotics.

Touch also happens to be the first sense that humans develop in the womb. Developmental neuroscience shows tactile sensitivity emerging from around eight weeks of gestation, then spreading across the body during the second trimester. Long before sight or hearing function reliably, the foetus explores its surroundings through touch. This is thought to help shape how infants begin forming an understanding of weight, resistance and support – the basic physics of the world.

This distinction matters for robotics too. For decades, robots have relied heavily on cameras and lidars (a sensing method that uses pulses of light to measure distance) while avoiding physical contact. But we cannot expect machines to achieve human-level competence in the physical world if they rarely experience it through touch.

Simulation can teach a robot useful behaviour, but without real physical exploration, it risks merely deploying intelligence rather than developing it. To learn in the way humans do, robots need bodies that feel.

A ‘soft’ robot hand with tactile sensors, developed by the University of Oxford’s Soft Robotics Lab, gets to grips with an apple. Video: Oxford Robotics Institute.

One approach my group is exploring is giving robots a degree of “local intelligence” in their sensorised bodies. Humans benefit from the compliance of soft tissues: skin deforms in ways that increase grip, enhance friction and filter sensory signals before they even reach the brain. This is a form of intelligence embedded directly in the anatomy.

Research in soft robotics and morphological computation argues that the body can offload some of the brain’s workload. By building robots with soft structures and low-level processing, so they can adjust grip or posture based on tactile feedback without waiting for central commands, we hope to create machines that interact more safely and naturally with the physical world.

Healthcare is one area where this capability could make a profound difference. My group recently developed a robotic patient simulator for training occupational therapists (OTs). Students often practise on one another, which makes it difficult to learn the nuanced tactile skills involved in supporting someone safely. With real patients, trainees must balance functional and affective touch, respect personal boundaries and recognise subtle cues of pain or discomfort. Research on social and affective touch shows how important these cues are to human wellbeing.

To help trainees understand these interactions, our simulator, known as Mona, produces practical behavioural responses. For example, when an OT presses on a simulated pain point in the artificial skin, the robot reacts verbally and with a small physical “hitch” of the body to mimic discomfort.

Similarly, if the trainee tries to move a limb beyond what the simulated patient can tolerate, the robot tightens or resists, offering a realistic cue that the motion should stop. By capturing tactile interaction through artificial skin, our simulator provides feedback that has never previously been available in OT training.

Robots that care

In the future, robots with safe, sensitive bodies could help address growing pressures in social care. As populations age, many families suddenly find themselves lifting, repositioning or supporting relatives without formal training. “Care robots” would help with this, potentially meaning the family member could be cared for at home longer.

Surprisingly, progress in developing this type of robot has been much slower than early expectations suggested – even in Japan, which introduced some of the first care robot prototypes. One of the most advanced examples is Airec, a humanoid robot developed as part of the Japanese government’s Moonshot programme to assist in nursing and elderly-care tasks. This multifaceted programme, launched in 2019, seeks “ambitious R&D based on daring ideas” in order to build a “society in which human beings can be free from limitations of body, brain, space and time by 2050”.

Japan’s Airec care robot is one of the most advanced in development. Video by Global Update.

Throughout the world, though, translating research prototypes into regulated robots remains difficult. High development costs, strict safety requirements, and the absence of a clear commercial market have all slowed progress. But while the technical and regulatory barriers are substantial, they are steadily being addressed.

Robots that can safely share close physical space with people need to feel and modulate how they touch anything that comes into contact with their bodies. This whole-body sensitivity is what will distinguish the next generation of soft robots from today’s rigid machines.

We are still far from robots that can handle these intimate tasks independently. But building touch-enabled machines is already reshaping our understanding of touch. Every step toward robotic tactile intelligence highlights the extraordinary sophistication of our own bodies – and the deep connection between sensation, movement and what we call intelligence.

This article was commissioned in conjunction with the Professors’ Programme, part of Prototypes for Humanity, a global initiative that showcases and accelerates academic innovation to solve social and environmental challenges. The Conversation is the media partner of Prototypes for Humanity 2025.

Perla Maiolino, Associate Professor of Engineering Science, member of the Oxford Robotics Institute, University of Oxford

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Agentic AI and the Art of Asking Better Questions

I’ve had a lot of conversations about AI over the past couple years—some insightful, some overhyped, and a few that left me questioning whether we’re even talking about the same technology. But every now and then, I get the opportunity […]

The post Agentic AI and the Art of Asking Better Questions appeared first on TechSpective.