#339: High Capacity Ride Sharing, with Alex Wallar

In this episode, our interviewer Lilly speaks to Alex Wallar, co-founder and CTO of The Routing Company. Wallar shares his background in multi-robot path-planning and optimization, and his research on scheduling and routing algorithms for high-capacity ride-sharing. They discuss how The Routing Company helps cities meet the needs of their people, the technical ins and outs of their dispatcher and assignment system, and the importance of public transit to cities and their economics.

Alex Wallar

Alex Wallar is co-founder and CTO of The Routing Company, an on-demand vehicle routing and management platform that partners with cities to power the future of public transit. Previously, he was pursuing a PhD at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), conducting research on mathematical optimization for high-capacity ride-sharing.

Links

- Download mp3 (41.7 MB)

- Subscribe to Robohub using iTunes, RSS, or Spotify

- Support us on Patreon

50 women in robotics you need to know about 2021

It’s Ada Lovelace Day and once again we’re delighted to introduce you to “50 women in robotics you need to know about”! From the Afghanistan Girls Robotics Team to K.G. Engelhardt, who in 1989 founded and became the first Director of the Center for Human Service Robotics at Carnegie Mellon, these women showcase a wide range of roles in robotics. We hope these short bios will provide a world of inspiration in our ninth Women in Robotics list!

In 2021, we showcase women in robotics in Afghanistan, Australia, Canada, Denmark, Finland, France, Germany, Hong Kong, India, Iran, Ireland, Israel, Italy, Japan, New Zealand, Portugal, Russia, Singapore, South Africa, Spain, Switzerland, the United Kingdom and the United States. They are researchers, industry leaders, and artists. Some women are at the start of their careers, while others have literally written the book, the program or the standards.

It is, however, disturbing how hard it can be to find records of women who were an important part of the history of robotics, such as K.G. Engelhardt. Statistically speaking, women are far more likely to leave the workforce or change careers due to family pressures, and that contributes to this erasure. Last year, we talked about the importance of more equitable citation counts. The citation gap is expected to significantly disadvantage women and people of color, because the historical underrepresentation of women, compounded by the recent growth of large scientific teams, multiplies their exclusion.

A more dangerous form of erasure is happening today in Afghanistan. The Afghanistan Girls Robotics Team was forced to flee the country and was able to escape thanks to help from their support group, the Digital Citizen Fund. What steps must the international community take in support of a future for Afghan girls’ education? Hear from UN Deputy Secretary-General Amina Mohammed, Nobel Laureate and Malala Fund Co-Founder Malala Yousafzai, and Somaya Faruqi, Captain of the Afghan Dreamers Robotics Team, in this recent UN video.

You can support The Afghan Dreamers here, and soon you will be able to watch a documentary about the original team, which was filmed with the girls in Afghanistan in 2019 and 2020.

Meanwhile, UC Davis has developed an online digital backpack to keep academic credentials and school records safe and private. The Article 26 Backpack takes its name from Article 26 of the 1948 Universal Declaration of Human Rights, which sets out the right to education. At the moment, the Backpack is available to people aged 18 and over with a high school diploma or baccalaureate whose education has been affected by war, conflict or economic conditions.

On the good news front, the IEEE RAS Women in Engineering (WIE) Committee recently completed a multi-year study of gender representation in leadership roles at RAS-supported conferences. Individuals who hold these roles select organizing committees, choose speakers, and make final decisions on paper acceptances. In this video, the authors lead a discussion about the findings and the story behind the study. In addition to presenting detailed data and releasing anonymized datasets for further study, the authors suggested changes to help ensure a more diverse and representative robotics community where anyone can thrive. The paper “Gender Diversity of Conference Leadership” by Laura Graesser, Aleksandra Faust, Hadas Kress-Gazit, Lydia Tapia, and Risa Ulinski appeared in the June 2021 issue of IEEE Robotics and Automation Magazine. It was followed in September 2021 by Lydia Tapia’s “Retrospective on a Watershed Moment for IEEE Robotics and Automation Society Gender Diversity [Women in Engineering]”, which reports on gender diversity initiatives undertaken by the Robotics and Automation Society.

We publish this list because the lack of visibility of women in robotics leads to the unconscious perception that women aren’t making newsworthy contributions. We encourage you to use our lists to find women for keynotes, panels and interviews, to cite their research, and to include them in curricula. Tulane University has published a guide to help you calculate how much of your reading list includes female authors, as well as a citation guide, similar to the CiteHer campaign from BlackComputeher.org.

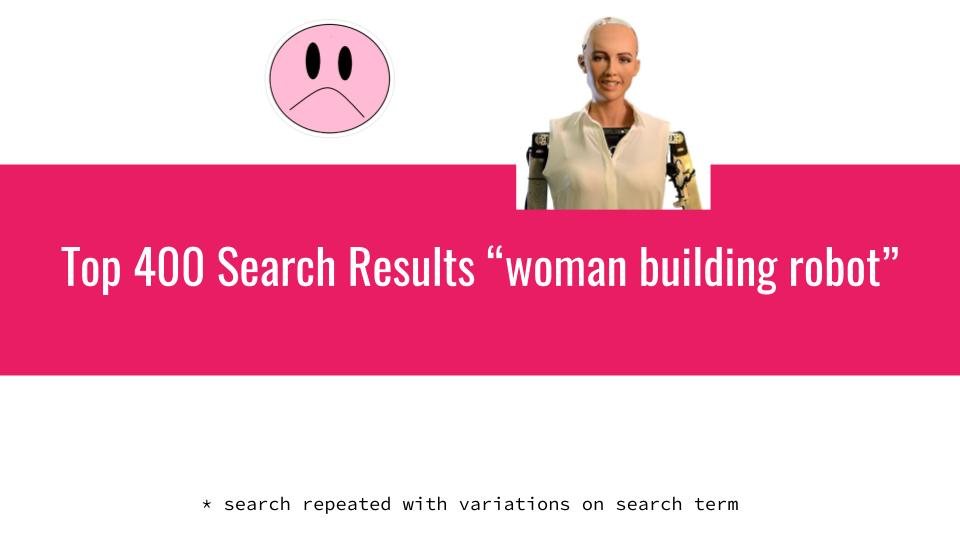

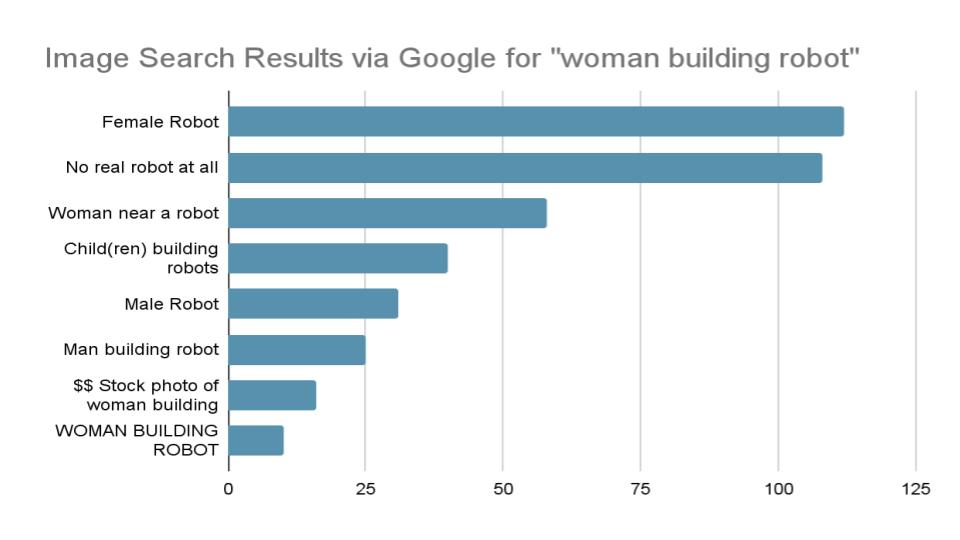

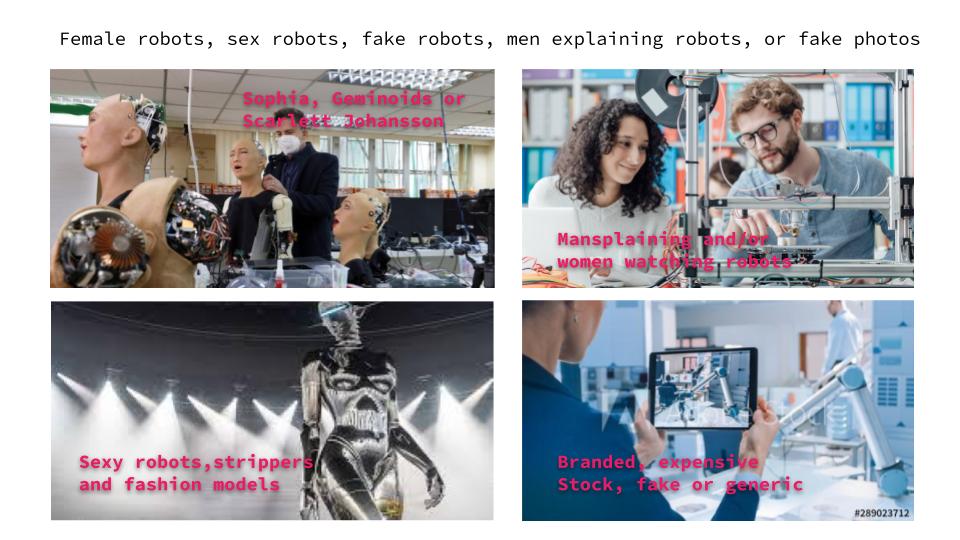

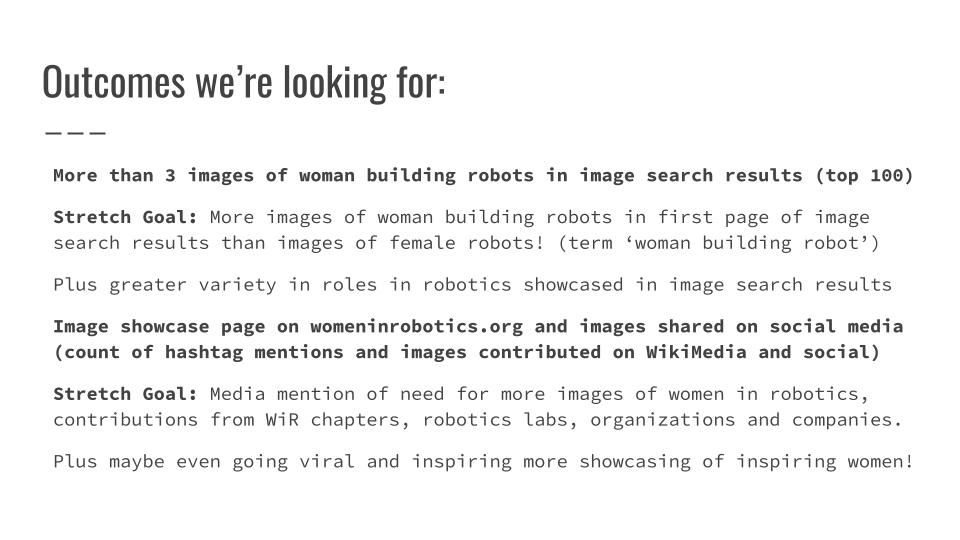

And Women in Robotics has just launched a Photo Challenge! We got so tired of seeing literally hundreds of images of female robots whenever we searched for images of women building robots, or images of men building robots while women watched. Let’s push Sophia out of the top search results and showcase real women building real robots instead!

We hope you are inspired by these profiles, and if you want to work in robotics too, please join us at Women in Robotics. We are now a 501(c)(3) non-profit organization, but even so, this post wouldn’t be possible without the hard work of our volunteers: Andra Keay, Fatemeh Pahlevan Aghababa, Jeana diNatale and Daniel Carrillo Zapata.

And we encourage #womeninrobotics and women who’d like to work in robotics to join our professional network at http://womeninrobotics.org. Want to keep reading? There are more than 200 other stories on our 2013 to 2020 lists (and their updates):

- 30 women in robotics you need to know about (2020)

- 30 women in robotics you need to know about (2019)

- 25 women in robotics you need to know about (2018)

- 25 women in robotics you need to know about (2017)

- 25 women in robotics you need to know about (2016)

- 25 women in robotics you need to know about (2015)

- 25 women in robotics you need to know about (2014)

- 25 women in robotics you need to know about (2013)

Why not nominate someone for inclusion next year?

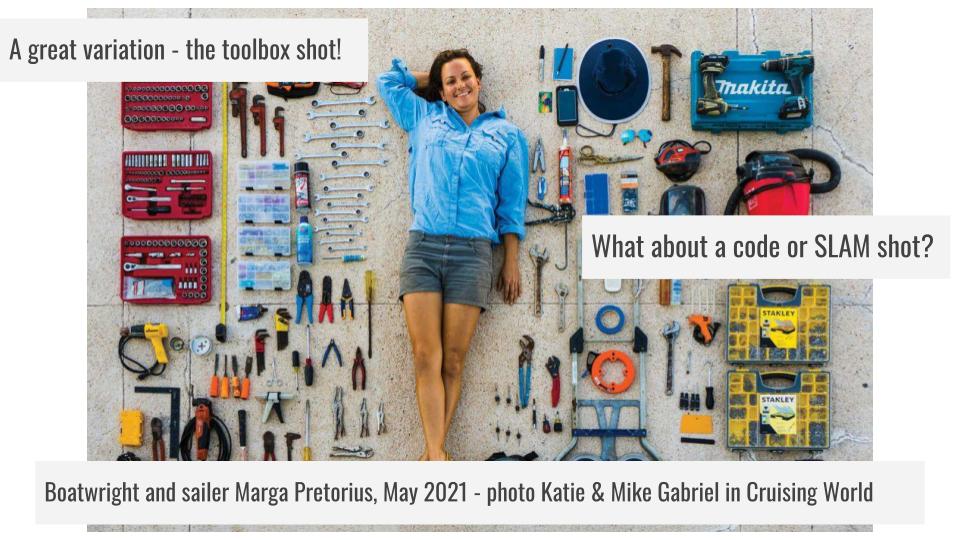

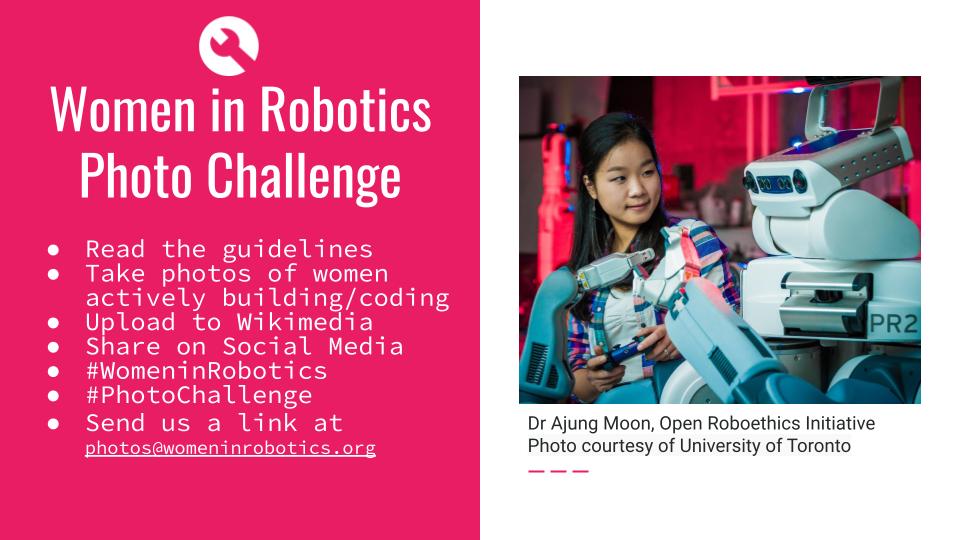

Join the Women in Robotics Photo Challenge

How can women feel as if they belong in robotics if we can’t see any pictures of women building or programming robots? As civil rights activist Marian Wright Edelman aptly said, “You can’t be what you can’t see.” We’d like you all to take photos of women building and coding robots and share them with us!

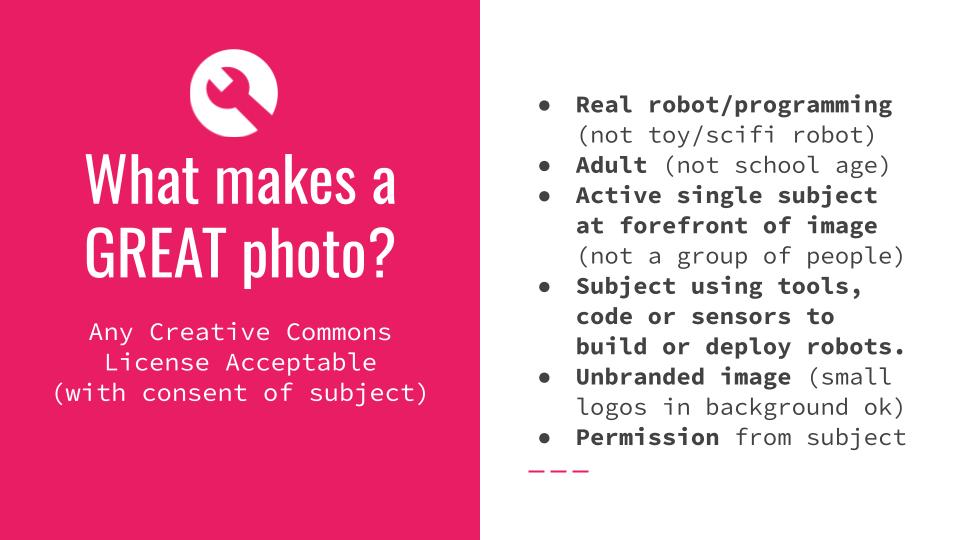

Here’s the handy guide to what a great photo looks like with some awesome examples. This is a great opportunity for research labs and robotics companies to showcase their talented women and other underrepresented groups.
