Three new helper robots at the Hsinchu National Taiwan University Hospital

ADATA Technology has collaborated with researchers at Hsinchu National Taiwan University Hospital (NTUH) to introduce the C-Rob Autonomous Mobile Robots. These robots use Artificial Intelligence (AI) to reduce the workload of healthcare workers as Taiwan continues to combat the Covid-19 pandemic.

Taiwan recently experienced a Covid-19 outbreak, and hospitals are prone to becoming hotspots for transmission. When Covid-infected patients arrive, whether for testing or for much-needed medical care, hospital staff often prioritize them and have less time for patients visiting for non-Covid reasons. On top of this, a clean environment must be maintained, with frequent disinfection to reduce the risk of transmission.

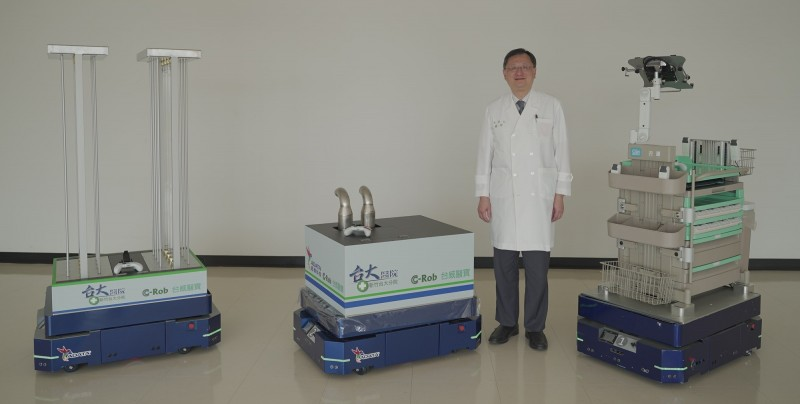

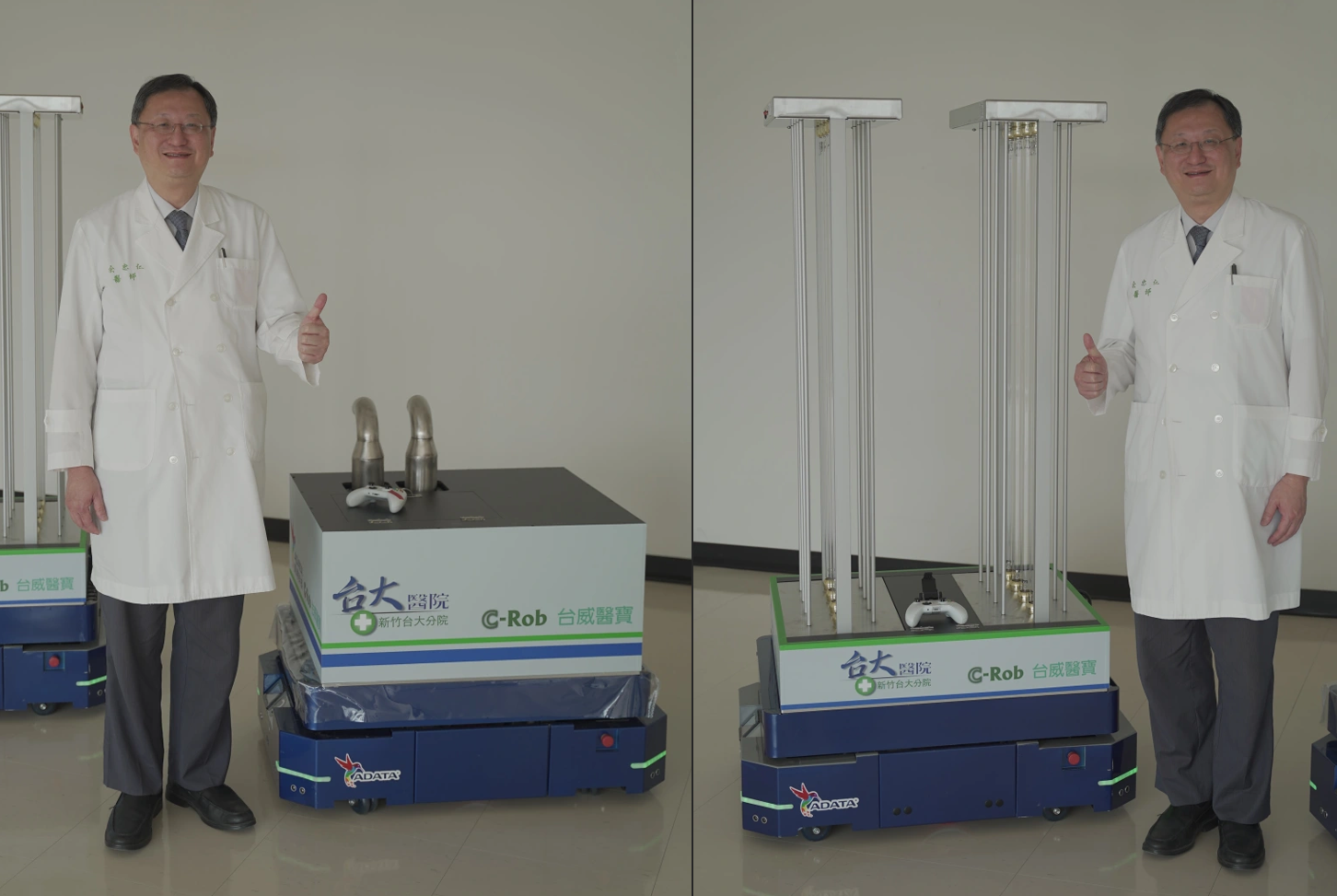

Recognizing this problem, researchers at ADATA Technology and NTUH created the C-Rob robots in the hope that they will assist hospital staff and help fight the pandemic. There are currently three C-Rob robots serving different purposes: two for disinfection and one for transporting goods. All three are equipped with smart navigation and obstacle avoidance, and can move accurately to a desired location.

The first robot utilizes large UV lights to disinfect large rooms quickly, while the second robot is equipped with two nozzles that can spray disinfectant as needed.

The load-carrying robot is fitted with small shelves and a tablet stand, giving hospital staff quick access to medical supplies or services.

The first priority of ADATA Technology and NTUH was to officially introduce the C-Rob robots into clinics to reduce the heavy workload of healthcare workers combating Covid-19 in Taiwan. The second phase of development will prioritize optimizing the AI algorithms and adding the ability to observe the behavior patterns of nursing staff (using smart detection) so that the robots can make smarter decisions.

The C-Rob robots can operate in hospitals and clinics and assist staff by carrying loads, reducing the burden on nursing staff so that more energy can be devoted to what should be prioritized: patients’ medical and physiological needs. The disinfectant-spraying and ultraviolet-sterilization robots clean healthcare facilities effectively; because staff no longer need to be assigned to disinfection, hospitals can reduce the number of staff members needed, further minimizing transmission risk.

ADATA and Hsinchu NTUH operate near the heart of Taiwan’s science and technology industry. Yu Zhong-Ren, Dean of Hsinchu NTUH, says that there are plans in place to “apply the technology of smart autonomous mobile robots to medical services through the connection of the medical industry and the technological industry.” ADATA and Hsinchu NTUH hope to use cross-field cooperation to drive the transformation and development of Taiwan’s medical industry. In the future, ADATA and Hsinchu NTUH plan on using the C-Rob robots to create hospital beds that can autonomously navigate and avoid obstacles, further decreasing the burden on hospital staff.

Swimming robot gives fresh insight into locomotion and neuroscience

Scientists at the Biorobotics Laboratory (BioRob) in EPFL’s School of Engineering are developing innovative robots in order to study locomotion in animals and, ultimately, gain a better understanding of the neuroscience behind the generation of movement. One such robot is AgnathaX, a swimming robot employed in an international study with researchers from EPFL as well as Tohoku University in Japan, Institut Mines-Télécom Atlantique in Nantes, France, and Université de Sherbrooke in Canada. The study has just been published in Science Robotics.

A long, undulating swimming robot

“Our goal with this robot was to examine how the nervous system processes sensory information so as to produce a given kind of movement,” says Prof. Auke Ijspeert, the head of BioRob and a member of the Rescue Robotics Grand Challenge at NCCR Robotics. “This mechanism is hard to study in living organisms because the different components of the central and peripheral nervous systems* are highly interconnected within the spinal cord. That makes it hard to understand their dynamics and the influence they have on each other.”

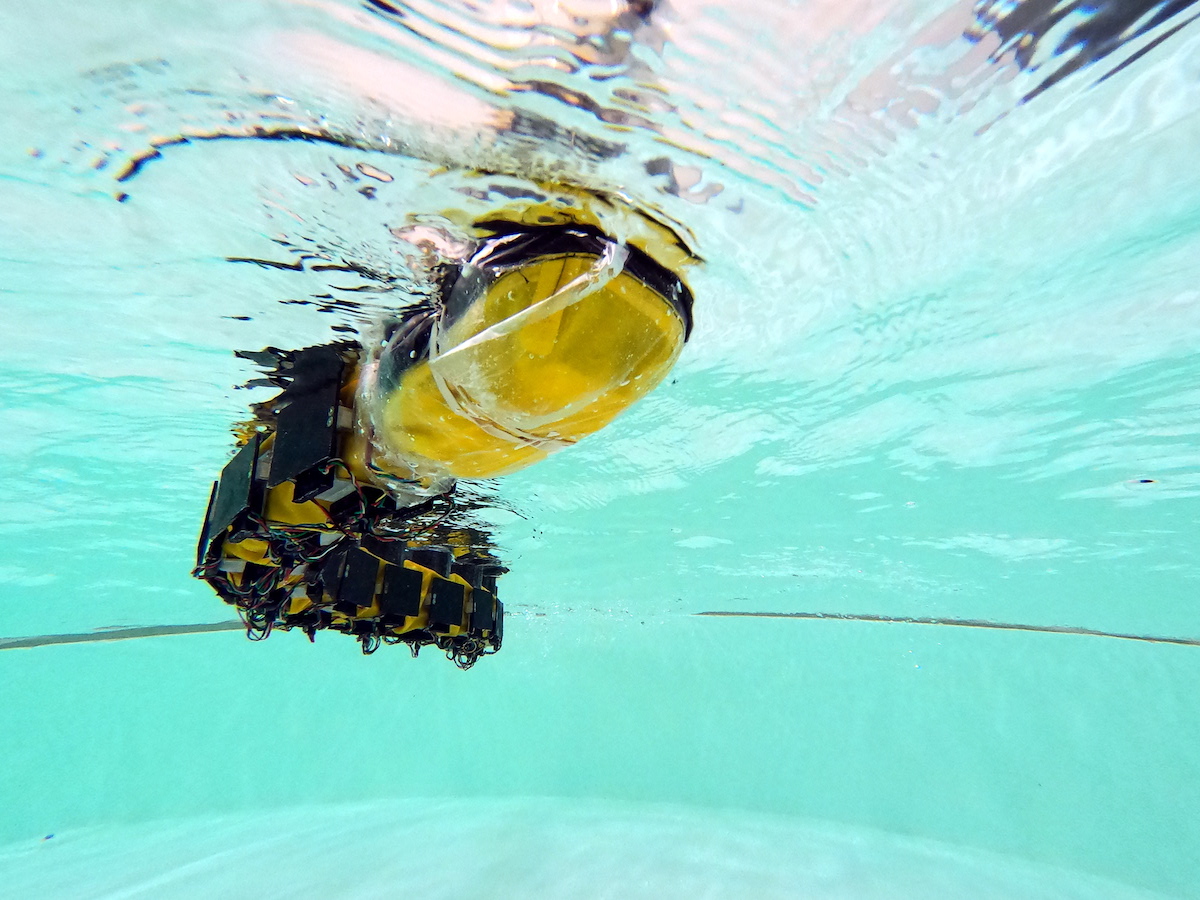

AgnathaX is a long, undulating swimming robot designed to mimic a lamprey, which is a primitive eel-like fish. It contains a series of motors that actuate the robot’s ten segments, which replicate the muscles along a lamprey’s body. The robot also has force sensors distributed laterally along its segments that work like the pressure-sensitive cells on a lamprey’s skin and detect the force of the water against the animal.

The research team ran mathematical models with their robot to simulate the different components of the nervous system and better understand its intricate dynamics. “We had AgnathaX swim in a pool equipped with a motion tracking system so that we could measure the robot’s movements,” says Laura Paez, a PhD student at BioRob. “As it swam, we selectively activated and deactivated the central and peripheral inputs and outputs of the nervous system at each segment, so that we could test our hypotheses about the neuroscience involved.”

Two systems working in tandem

The scientists found that both the central and peripheral nervous systems contribute to the generation of robust locomotion. The benefit of having the two systems work in tandem is increased resilience against neural disruptions, such as failures in the communication between body segments or muted sensing mechanisms. “In other words, by drawing on a combination of central and peripheral components, the robot could resist a larger number of neural disruptions and keep swimming at high speeds, as opposed to robots with only one kind of component,” says Kamilo Melo, a co-author of the study. “We also found that the force sensors in the skin of the robot, along with the physical interactions of the robot’s body and the water, provide useful signals for generating and synchronizing the rhythmic muscle activity necessary for locomotion.” Indeed, when the scientists cut communication between the different segments of the robot to simulate a spinal cord lesion, the signals from the sensors measuring the pressure of the water against the robot’s body were enough to maintain its undulating motion.
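To make the idea of "central" coupling between segments more concrete, here is a minimal sketch in Python. It is not the controller used in the study: it assumes a chain of ten simple phase oscillators with nearest-neighbour coupling, a common textbook abstraction of a spinal pattern generator, and it deliberately leaves out the water-force feedback that the article describes. The function name `simulate_chain` and all parameter values (`coupling`, `freq_hz`, `cut_after`) are hypothetical choices made for illustration.

```python
import math


def wrap(angle):
    """Wrap an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def simulate_chain(n_segments=10, coupling=10.0, freq_hz=1.0,
                   dt=0.001, steps=20000, cut_after=None):
    """Euler-integrate a chain of phase oscillators (illustrative only).

    Each segment is coupled to its neighbours so that the chain settles
    into a travelling wave with one full wavelength along the body.
    If cut_after is an index i, the link between segments i and i + 1 is
    removed, loosely mimicking a break in inter-segment communication.
    Returns the phase lag between each pair of adjacent segments.
    """
    target_lag = 2 * math.pi / n_segments  # assumed lag per segment
    omega = 2 * math.pi * freq_hz          # intrinsic frequency (rad/s)
    phases = [0.0] * n_segments            # start with no wave at all

    for _ in range(steps):
        rates = []
        for i in range(n_segments):
            rate = omega
            # Coupling from the segment closer to the head.
            if i > 0 and cut_after != i - 1:
                rate += coupling * math.sin(
                    phases[i - 1] - phases[i] - target_lag)
            # Coupling from the segment closer to the tail.
            if i < n_segments - 1 and cut_after != i:
                rate += coupling * math.sin(
                    phases[i + 1] - phases[i] + target_lag)
            rates.append(rate)
        phases = [p + dt * r for p, r in zip(phases, rates)]

    return [wrap(phases[i] - phases[i + 1]) for i in range(n_segments - 1)]


if __name__ == "__main__":
    print("intact:", [round(lag, 3) for lag in simulate_chain()])
    print("cut   :", [round(lag, 3) for lag in simulate_chain(cut_after=4)])
```

In this toy model the intact chain settles into a uniform lag between neighbouring segments, while cutting one link leaves the two halves oscillating without the intended lag across the cut. That is the situation in which, according to the study, local force sensing was able to restore coordination; modelling that feedback would require a description of the body and water interaction, which this sketch does not attempt.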

These findings can be used to design more effective swimming robots for search and rescue missions and environmental monitoring. For instance, the controllers and force sensors developed by the scientists can help such robots navigate through flow perturbations and better withstand damage to their technical components. The study also has ramifications in the field of neuroscience. It confirms that peripheral mechanisms provide an important function which is possibly being overshadowed by the well-known central mechanisms. “These peripheral mechanisms could play an important role in the recovery of motor function after spinal cord injury, because, in principle, no connections between different parts of the spinal cord are needed to maintain a traveling wave along the body,” says Robin Thandiackal, a co-author of the study. “That could explain why some vertebrates are able to retain their locomotor capabilities after a spinal cord lesion.”

Literature