Responding to the Market Need for Quality

Why soft skills could power the rise of robot leaders

How to use Docker containers and Docker Compose for Deep Learning applications

Robots Partnering With Humans: at FPT Industrial Factory 4.0 is Already a Reality Thanks to Collaboration With Comau

Ultra-sensitive and resilient sensor for soft robotic systems

By Leah Burrows / SEAS communications

Newly engineered slinky-like strain sensors for textiles and soft robotic systems survive the washing machine, cars and hammers.

Think about your favorite t-shirt, the one you’ve worn a hundred times, and all the abuse you’ve put it through. You’ve washed it more times than you can remember, spilled on it, stretched it, crumpled it up, maybe even singed it leaning over the stove once. We put our clothes through a lot and if the smart textiles of the future are going to survive all that we throw at them, their components are going to need to be resilient.

Now, researchers from the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) and the Wyss Institute for Biologically Inspired Engineering have developed an ultra-sensitive, seriously resilient strain sensor that can be embedded in textiles and soft robotic systems. The research is published in Nature.

“Current soft strain gauges are really sensitive but also really fragile,” said Oluwaseun Araromi, Ph.D., a Research Associate in Materials Science and Mechanical Engineering at SEAS and the Wyss Institute and first author of the paper. “The problem is that we’re working in an oxymoronic paradigm: highly sensitive sensors are usually very fragile and very strong sensors aren’t usually very sensitive. So, we needed to find mechanisms that could give us enough of each property.”

In the end, the researchers created a design that looks and behaves very much like a Slinky.

“A Slinky is a solid cylinder of rigid metal but if you pattern it into this spiral shape, it becomes stretchable,” said Araromi. “That is essentially what we did here. We started with a rigid bulk material, in this case carbon fiber, and patterned it in such a way that the material becomes stretchable.”

The pattern is known as a serpentine meander, because its sharp ups and downs resemble the slithering of a snake. The patterned conductive carbon fibers are then sandwiched between two pre-strained elastic substrates. The overall electrical conductivity of the sensor changes as the edges of the patterned carbon fiber come out of contact with each other, similar to the way the individual spirals of a slinky come out of contact with each other when you pull both ends. This process happens even with small amounts of strain, which is the key to the sensor’s high sensitivity.

Unlike current highly sensitive stretchable sensors, which rely on exotic materials such as silicon or gold nanowires, this sensor doesn’t require special manufacturing techniques or even a clean room. It could be made using any conductive material.

The researchers tested the resiliency of the sensor by stabbing it with a scalpel, hitting it with a hammer, running it over with a car, and throwing it in a washing machine ten times. The sensor emerged from each test unscathed. To demonstrate its sensitivity, the researchers embedded the sensor in a fabric arm sleeve and asked a participant to make different gestures with their hand, including a fist, open palm, and pinching motion. The sensors detected the small changes in the subject’s forearm muscle through the fabric and a machine learning algorithm was able to successfully classify these gestures.

“These features of resilience and the mechanical robustness put this sensor in a whole new camp,” said Araromi.

Such a sleeve could be used in everything from virtual reality simulations and sportswear to clinical diagnostics for neurodegenerative diseases like Parkinson’s Disease. Harvard’s Office of Technology Development has filed to protect the intellectual property associated with this project.

“The combination of high sensitivity and resilience are clear benefits of this type of sensor,” said senior author Robert Wood, Ph.D., Associate Faculty member at the Wyss Institute, and the Charles River Professor of Engineering and Applied Sciences at SEAS. “But another aspect that differentiates this technology is the low cost of the constituent materials and assembly methods. This will hopefully reduce the barriers to get this technology widespread in smart textiles and beyond.”

“We are currently exploring how this sensor can be integrated into apparel due to the intimate interface to the human body it provides,” says co-author and Wyss Associate Faculty member Conor Walsh, Ph.D., who also is the Paul A. Maeder Professor of Engineering and Applied Sciences at SEAS. “This will enable exciting new applications by being able to make biomechanical and physiological measurements throughout a person’s day, not possible with current approaches.”

The research was co-authored by Moritz A. Graule, Kristen L. Dorsey, Sam Castellanos, Jonathan R. Foster, Wen-Hao Hsu, Arthur E. Passy, James C. Weaver, Senior Staff Scientist at SEAS and Joost J. Vlassak, the Abbott and James Lawrence Professor of Materials Engineering at SEAS. It was funded through the university’s strategic research alliance with Tata. The 6-year, $8.4M alliance was established in 2016 to advance Harvard innovation in fields including robotics, wearable technologies, and the internet of things (IoT).

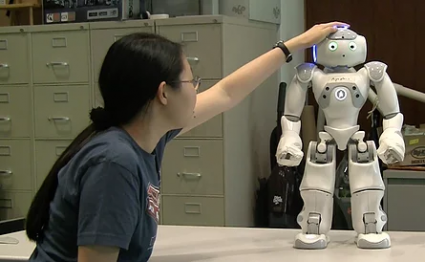

#324: Embodied Interactions: from Robotics to Dance, with Kim Baraka

In this episode, our interviewer Lauren Klein speaks with Kim Baraka about his PhD research to enable robots to engage in social interactions, including interactions with children with Autism Spectrum Disorder. Baraka discusses how robots can plan their actions across multiple modalities when interacting with humans, and how models from psychology can inform this process. He also tells us about his passion for dance, and how dance may serve as a testbed for embodied intelligence within Human-Robot Interaction.

Kim Baraka

Kim Baraka is a postdoctoral researcher in the Socially Intelligent Machines Lab at the University of Texas at Austin, and an upcoming Assistant Professor in the Department of Computer Science at Vrije Universiteit Amsterdam, where he will be part of the Social Artificial Intelligence Group. Baraka recently graduated with a dual PhD in Robotics from Carnegie Mellon University (CMU) in Pittsburgh, USA, and the Instituto Superior Técnico (IST) in Lisbon, Portugal. At CMU, Baraka was part of the Robotics Institute and was advised by Prof. Manuela Veloso. At IST, he was part of the Group on AI for People and Society (GAIPS), and was advised by Prof. Francisco Melo.

Dr. Baraka’s research focuses on computational methods that inform artificial intelligence within Human-Robot Interaction. He develops approaches for knowledge transfer between humans and robots in order to support mutual and beneficial relationships between the robot and human. Specifically, he has conducted research in assistive interactions where the robot or human helps their partner to achieve a goal, and in teaching interactions. Baraka is also a contemporary dancer, with an interest in leveraging lessons from dance to inform advances in robotics, or vice versa.

- Download mp3 (13.7 MB)

- Subscribe to Robohub using iTunes, RSS, or Spotify

- Support us on Patreon

PS. If you enjoy listening to experts in robotics and asking them questions, we recommend that you check out Talking Robotics. They have a virtual seminar on Dec 11 where they will be discussing how to conduct remote research for Human-Robot Interaction; something that is very relevant to researchers working from home due to COVID-19.

Inertial Navigation Solution in Delivery Robots & Drones

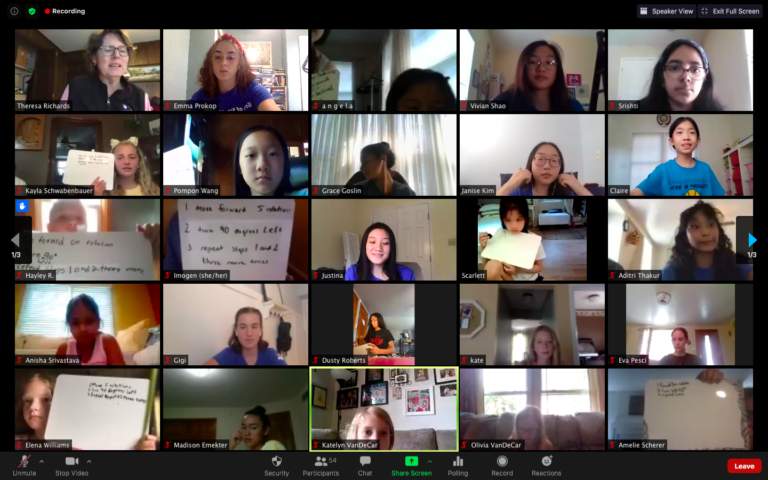

Women in Robotics Update: Girls Of Steel

Girls of Steel Robotics (featured 2014) was founded in 2010 at Carnegie Mellon University’s Field Robotics Center as FRC Team 3504. The organization now serves multiple FIRST robotics teams offering STEM opportunities for people of all ages.

Since 2019, Girls of Steel also organizes FIRST Ladies, an online community for anyone involved in FIRST robotics programs who supports girls and women in STEM. Their mission statement reflects their commitment to empowering everyone for success in STEM: “Girls of Steel empowers everyone, especially women and girls, to believe they are capable of success in STEM.”

Girls of Steel celebrated their 10th year in FIRST robotics with a Virtual Gala in May 2020 featuring a panel of four Girls of Steel alumni showcasing a range of STEM opportunities. One is a PhD student in Robotics at CMU, two are working as engineers, and one is a computer science teacher. Girls of Steel are extremely proud of their alumni, of whom 80% are studying or working in STEM fields.

In August 2020, Girls of Steel successfully organized 3 weeks of virtual summer camps and were also able to run 4 teams in a virtual FIRST LEGO League program from September 2020. Girls of Steel also restructured their FIRST team and launched two new sub teams, Advocacy and Diversity, Equity, and Inclusion (DEI), which focus on continuing their efforts to advocate for after-school STEM programs and on creating an inclusive environment that welcomes all Girls of Steel members. The DEI sub team manages a suggestion box where members can anonymously post ideas for team improvements.

In 2016, Robohub published a follow up on the Girls of Steel and their achievements.

In 2017, Girls of Steel won the 2017 Engineering Inspiration award (Greater Pittsburgh Regional), which “celebrates outstanding success in advancing respect and appreciation for engineering within a team’s school and community.”

In 2018, Girls of Steel won the 2018 Regional Chairman’s Award (Greater Pittsburgh Regional), the most prestigious award at FIRST, which honors the team that best represents a model for other teams to emulate and best embodies the purpose and goals of FIRST.

In 2019, Girls of Steel won the 2019 Gracious Professionalism Award (Greater Pittsburgh Regional), which celebrates the outstanding demonstration of FIRST Core Values such as continuous Gracious Professionalism and working together both on and off the playing field.

And in 2020, Girls of Steel members Anna N. and Norah O. received 2020 Dean’s List Finalist Awards (Greater Pittsburgh Regional), which reflect their ability to lead their teams and communities to increased awareness for FIRST and its mission while achieving personal technical expertise and accomplishment.

Clearly, all the Girls of Steel over the last ten years are winners. Many women in robotics today point to an early experience in a robotics competition as the turning point when they decided that STEM, particularly robotics, was going to be in their future. We want to thank all the Girls of Steel for being such great role models, and sharing the joy and fun of building robots with other girls/women. It’s working! (And it’s worth it!)

Want to keep reading? There are 180 more stories on our 2013 to 2020 lists. Why not nominate someone for inclusion next year?

And we encourage #womeninrobotics and women who’d like to work in robotics to join our professional network at http://womeninrobotics.org

New system optimizes the shape of robots for traversing various terrain types

Energy-generating synthetic skin for affordable prosthetic limbs and touch-sensitive robots

James Bruton focus series #1: openDog, Mini Robot Dog & openDog V2

What if you could ride your own giant LEGO electric skateboard, make a synthesizer that you can play with a barcode reader, or build a strong robot dog based on the Boston Dynamics dog robot? Today sees the start of a new series of videos that focuses on James Bruton’s open source robot projects.

James Bruton is a former toy designer, current YouTube maker and general robotics, electrical and mechanical engineer. He has a reputation for building robot dogs and building Iron Man inspired cosplays. He uses 3D printing, CNC and sometimes welding to build all sorts of robotics related creations. Highlights include building Mark Rober’s auto-strike bowling ball and working with Colin Furze to build a life-sized Iron Man Hulkbuster for an official eBay and Marvel promo. He also built a life-sized Bumblebee Transformer for Paramount to promote the release of the Bumblebee movie.

I discovered James’ impressive work in this episode of Ricardo Tellez’s ROS Developers Podcast on The Construct, which I highly recommend. Whether you enjoy getting your hands dirty with CAD files, 3D-printed parts, Arduinos, motors and code, or you like learning about the full research & development (R&D) process of a robotics project, you will have hours of fun following this series.

Today I brought one of James’ coolest and most successful open source projects: openDog and its different versions. In James’ own words, “if you want your very own four-legged friend to play fetch with and go on long walks then this is the perfect project for you.” You can access all the CAD files and code here. And without further ado, here’s the full YouTube playlist of the first version of openDog:

James also released another series of videos developing an affordable version of openDog: Mini Robot Dog. This robot is half the size of openDog, and its mechanical components and 3D-printed parts are much cheaper than those of the original robot, without sacrificing compliance. You can see the full development in the playlist below, and access the open source files of version 1 and version 2.

Based on the insight gained through the R&D of openDog, Mini Robot Dog and these test dogs, James built the ultimate robot dog: openDog V2. For this improved version of openDog, he used brushless motors which can be back-driven to increase compliance. And by adding an Inertial Measurement Unit, he improved the balance of the robot. CAD files and code are available here. If you want to find out whether the robot is able to walk, check out the openDog V2 video series:

If you like James Bruton’s projects, you can check out his website for more resources, updates and support options. See you in the next episode of our focus series!

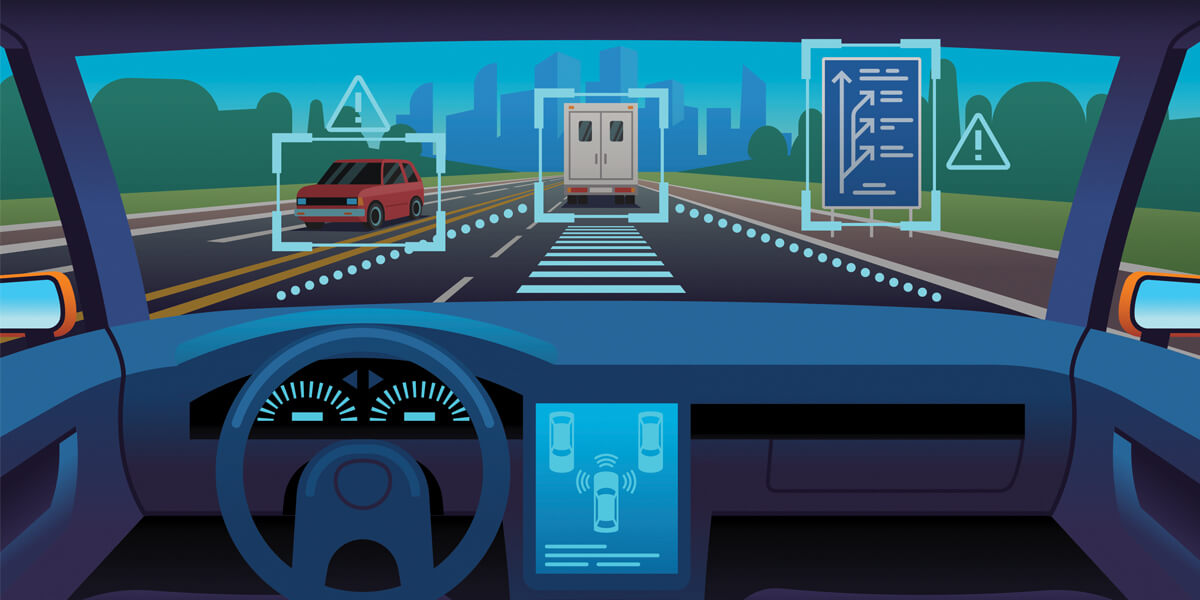

Showing robots how to drive a car… in just a few easy lessons

By Caitlin Dawson

USC researchers have developed a method that could allow robots to learn new tasks, like setting a table or driving a car, from observing a small number of demonstrations.

Imagine if robots could learn from watching demonstrations: you could show a domestic robot how to do routine chores or set a dinner table. In the workplace, you could train robots like new employees, showing them how to perform many duties. On the road, your self-driving car could learn how to drive safely by watching you drive around your neighborhood.

Making progress on that vision, USC researchers have designed a system that lets robots autonomously learn complicated tasks from a very small number of demonstrations—even imperfect ones. The paper, titled Learning from Demonstrations Using Signal Temporal Logic, was presented at the Conference on Robot Learning (CoRL), Nov. 18.

The researchers’ system works by evaluating the quality of each demonstration, so it learns from the mistakes it sees, as well as the successes. While current state-of-the-art methods need at least 100 demonstrations to nail a specific task, this new method allows robots to learn from only a handful of demonstrations. It also allows robots to learn more intuitively, the way humans learn from each other: you watch someone execute a task, even imperfectly, then try yourself. It doesn’t have to be a “perfect” demonstration for humans to glean knowledge from watching each other.

“Many machine learning and reinforcement learning systems require large amounts of data and hundreds of demonstrations; you need a human to demonstrate over and over again, which is not feasible,” said lead author Aniruddh Puranic, a Ph.D. student in computer science at the USC Viterbi School of Engineering.

“Also, most people don’t have programming knowledge to explicitly state what the robot needs to do, and a human cannot possibly demonstrate everything that a robot needs to know. What if the robot encounters something it hasn’t seen before? This is a key challenge.”

Above: Using the USC researchers’ method, an autonomous driving system would still be able to learn safe driving skills from “watching” imperfect demonstrations, such as this driving demonstration on a racetrack. Source credits: Driver demonstrations were provided through the Udacity Self-Driving Car Simulator.

Learning from demonstrations

Learning from demonstrations is becoming increasingly popular in obtaining effective robot control policies — which control the robot’s movements — for complex tasks. But it is susceptible to imperfections in demonstrations and also raises safety concerns as robots may learn unsafe or undesirable actions.

Also, not all demonstrations are equal: some demonstrations are a better indicator of desired behavior than others and the quality of the demonstrations often depends on the expertise of the user providing the demonstrations.

To address these issues, the researchers integrated “signal temporal logic” or STL to evaluate the quality of demonstrations and automatically rank them to create inherent rewards.

In other words, even if some parts of the demonstrations do not make any sense based on the logic requirements, using this method, the robot can still learn from the imperfect parts. In a way, the system is coming to its own conclusion about the accuracy or success of a demonstration.

“Let’s say robots learn from different types of demonstrations — it could be a hands-on demonstration, videos, or simulations — if I do something that is very unsafe, standard approaches will do one of two things: either, they will completely disregard it, or even worse, the robot will learn the wrong thing,” said co-author Stefanos Nikolaidis, a USC Viterbi assistant professor of computer science.

“In contrast, in a very intelligent way, this work uses some common sense reasoning in the form of logic to understand which parts of the demonstration are good and which parts are not. In essence, this is exactly what also humans do.”

Take, for example, a driving demonstration where someone skips a stop sign. This would be ranked lower by the system than a demonstration of a good driver. But, if during this demonstration, the driver does something intelligent — for instance, applies their brakes to avoid a crash — the robot will still learn from this smart action.
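The ranking idea can be illustrated with a minimal Python sketch. This is not the authors’ algorithm: the `Step` fields, the `SPEED_LIMIT` and `SAFE_GAP` thresholds, and the two demonstrations are hypothetical, chosen only to show how a logic-style requirement can score whole demonstrations while still giving credit to the good steps inside a poor one.

```python
from typing import Dict, List, NamedTuple


class Step(NamedTuple):
    speed: float  # vehicle speed in m/s (hypothetical units)
    gap: float    # distance to the nearest obstacle in m


SPEED_LIMIT = 15.0  # assumed requirement: stay below this speed
SAFE_GAP = 2.0      # assumed requirement: keep at least this gap


def step_margin(step: Step) -> float:
    """Signed margin for one step; positive means both requirements hold."""
    return min(SPEED_LIMIT - step.speed, step.gap - SAFE_GAP)


def demo_score(demo: List[Step]) -> float:
    """Score for 'always satisfy both requirements': the worst step margin."""
    return min(step_margin(s) for s in demo)


def rank_demos(demos: Dict[str, List[Step]]) -> List[str]:
    """Order demonstration names from best to worst score."""
    return sorted(demos, key=lambda name: demo_score(demos[name]), reverse=True)


def step_rewards(demo: List[Step]) -> List[float]:
    """Per-step rewards, so good moments in a bad demonstration still count."""
    return [step_margin(s) for s in demo]


demos = {
    "sloppy": [Step(14.0, 6.0), Step(18.0, 5.0), Step(9.0, 6.0)],
    "careful": [Step(10.0, 8.0), Step(12.0, 7.0), Step(11.0, 9.0)],
}

print(rank_demos(demos))              # best demonstration first
print(step_rewards(demos["sloppy"]))  # the speeding step is negative, the braking step positive
```

In this toy setup the sloppy demonstration ranks below the careful one because of its speeding step, yet its final step, where the driver slows down, still receives a positive reward.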

Adapting to human preferences

Signal temporal logic is an expressive mathematical symbolic language that enables robotic reasoning about current and future outcomes. While previous research in this area has used “linear temporal logic”, STL is preferable in this case, said Jyo Deshmukh, a former Toyota engineer and USC Viterbi assistant professor of computer science.

“When we go into the world of cyber physical systems, like robots and self-driving cars, where time is crucial, linear temporal logic becomes a bit cumbersome, because it reasons about sequences of true/false values for variables, while STL allows reasoning about physical signals.”
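The distinction between true/false values and physical signals can be made concrete with a small sketch. It assumes a single hypothetical requirement, “speed always stays below a limit”, and uses the usual quantitative reading of STL in which “always” takes the minimum margin over time. The function names and sample signals are illustrative, not taken from the paper.

```python
from typing import Sequence


def always_below_bool(signal: Sequence[float], limit: float) -> bool:
    """True/false view: did every sample stay below the limit?"""
    return all(x < limit for x in signal)


def always_below_robustness(signal: Sequence[float], limit: float) -> float:
    """Quantitative view: the smallest margin to the limit over the whole signal.

    Positive means the requirement holds, and larger means it holds more comfortably.
    """
    return min(limit - x for x in signal)


LIMIT = 15.0  # hypothetical speed limit in m/s
near_limit = [10.0, 12.0, 14.5]
well_below = [5.0, 6.0, 7.0]

for name, sig in [("near_limit", near_limit), ("well_below", well_below)]:
    print(name, always_below_bool(sig, LIMIT), always_below_robustness(sig, LIMIT))
```

Both signals satisfy the requirement in the true/false view, but the quantitative value separates the run that came within 0.5 m/s of the limit from the one that stayed 8 m/s clear of it, which is the kind of information a ranking over demonstrations can use.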

Puranic, who is advised by Deshmukh, came up with the idea after taking a hands-on robotics class with Nikolaidis, who has been working on developing robots to learn from YouTube videos. The trio decided to test it out. All three said they were surprised by the extent of the system’s success and the professors both credit Puranic for his hard work.

“Compared to a state-of-the-art algorithm, being used extensively in many robotics applications, you see an order of magnitude difference in how many demonstrations are required,” said Nikolaidis.

The system was tested using a Minecraft-style game simulator, but the researchers said the system could also learn from driving simulators and eventually even videos. Next, the researchers hope to try it out on real robots. They said this approach is well suited for applications where maps are known beforehand but there are dynamic obstacles in the map: robots in household environments, warehouses or even space exploration rovers.

“If we want robots to be good teammates and help people, first they need to learn and adapt to human preference very efficiently,” said Nikolaidis. “Our method provides that.”

“I’m excited to integrate this approach into robotic systems to help them efficiently learn from demonstrations, but also effectively help human teammates in a collaborative task.”

Why we need a robot registry

Robots are rolling out into the real world, and we need to meet the emerging challenges responsibly without blocking innovation. At the recent ARM Developers Summit 2020, I shared my suggestions for five practical steps that we could undertake at a regional, national or global level as part of the Five Laws of Robotics presentation (below).

The Five Laws of Robotics are drawn from the EPSRC Principles of Robotics, first developed in 2010 and a living document workshopped by experts across many relevant disciplines. These five principles are practical and concise, embracing the majority of principles expressed across a wide range of ethics documents. I will explain in more detail.

- There should be no killer robots.

- Robots should (be designed to) obey the law.

- Robots should (be designed to) be good products.

- Robots should be transparent in operation.

- Robots should be identifiable.

EPSRC says that robots are multi-use tools. Robots should not be designed solely or primarily to kill or harm humans, except in the interests of national security. More information is at the Campaign to Stop Killer Robots.

Humans, not robots, are the responsible agents. Robots should be designed and operated as far as is practicable to comply with existing laws and fundamental rights and freedoms, including privacy.

Robots are products. They should be designed using processes which assure their safety and security. Quality guidelines, processes and standards already exist.

Robots are manufactured artefacts. They should not be designed in a deceptive way to exploit users, instead their machine nature should be made transparent.

It should be possible to find out who is responsible for any robot. My suggestion here is that robots in public spaces require a license plate; a clear identification of robot and the responsible organization.

As well as speaking about Five Laws of Robotics, I introduced five practical proposals to help us respond at a regional, national and global level.

- Robot Registry (license plates, access to database of owners/operators)

- Algorithmic Transparency (via Model Cards and Testing Benchmarks)

- Independent Ethical Review Boards (as in biotech industry)

- Robot Ombudspeople to liaise between public and policy makers

- Rewarding Good Robots (design awards and case studies)

Silicon Valley Robotics is about to announce the first winners of our inaugural Robotics Industry Awards. The SVR Industry Awards consider responsible design as well as technological innovation and commercial success. There are also some ethical checkmark or certification initiatives under preparation, but like the development of new standards, these can take a long time to do properly, whereas awards, endorsements and case studies can be available immediately to foster the discussion of what constitutes good robots and what social challenges robotics needs to solve.

In fact, the robot registry suggestion was picked up recently by Stacey Higginbotham in the IEEE Spectrum. Silicon Valley Robotics is putting together these policy suggestions for the new White House administration.