An inventory of robotics roadmaps to better inform policy and investment

Much excellent work has been done, by many organizations, to develop ‘Roadmaps for Robotics’ in order to steer government policy, innovation investment, and the development of standards and commercialization. However, unless you took part in the roadmapping activity, these resources can be very hard to find. Silicon Valley Robotics, in partnership with the Industrial Activities Board of the IEEE Robotics and Automation Society, is compiling an up-to-date resource list of robotics, AIS and AI roadmaps, national or otherwise. This initiative will give us all access to the best robotics commercialization advice from around the world, let us compare and contrast various initiatives and their regional effectiveness, and provide guidance for countries and companies without their own robotics roadmaps.

Another issue making it harder to find recent robotics roadmaps is the subsumption of robotics into the AI landscape, at least in some national directives. Robotics may also appear under the label ‘AIS’, standing for Autonomous Intelligent Systems, as in the work of OCEANIS, the Open Community for Ethics in Autonomous aNd Intelligent Systems, which hosts a global standards repository. Finally, there are subcategories of robotics, such as autonomous vehicles, self-driving cars, drones and surgical robotics, all of which may have their own roadmaps. This is not an exhaustive list, but with your help we can continue to evolve it.

Global Roadmaps

- IEEE RAS 2050Robotics.org

- OCEANIS, Open Community for Ethics of Autonomous and Intelligent Systems

- OCEANIS, Global Standards Repository

- OECD.ai Policy Observatory

- OECD.ai Stakeholder Initiatives

- OECD.ai National AI Strategies

- Mapping the Evolution of the Robotics Industry: A Cross-Country Comparison

- BCG Robotics Outlook 2030

- 2021 World Bank Review of National AI Strategies and Policies

US Roadmaps

- 2020 US National Robotics Roadmap

- ASME: Robotics & Covid 19

- ASME: Accelerating US Robotics for American Prosperity and Security

- 2020 NASA Technology Taxonomy

- 2017 Automated Vehicles 3.0: Preparing for the Future of Transportation

- NITRD Supplement to the President’s FY2021 Budget

- American AI Initiative

Canada Roadmaps

Latam Roadmaps

- AI for Social Good in Latin America and the Caribbean

- Mexico Agenda National for Artificial Intelligence

- Brazil Industry 4.0

- Brazil E-Digital

- Colombia National Policy for Digital Transformation and Artificial Intelligence

- Chile National Artificial Intelligence Policy

- Argentina Agenda Digital 2030

- Uruguay Artificial Intelligence Strategy for the Public Administration

UK & EU Roadmaps

- AI in the UK: No Room for Complacency

- UK’s Industrial Strategy

- SPARC: Robotics 2020 Multi-Annual Roadmap for Robotics in Europe

- SRIDA: AI, Data and Robotics Partnership

- EC: Communication on Artificial Intelligence

- Sweden National Approach for Artificial Intelligence

- Spain RDI Strategy in Artificial Intelligence

- Strategy for the Development of Artificial Intelligence in the Republic of Serbia for the period 2020-2025

- AI Portugal 2030

- Assumptions for the AI strategy in Poland

- Norway National Strategy for Artificial Intelligence

- The Declaration on AI in the Nordic-Baltic Region

- Malta the Ultimate AI Launchpad: A Strategy and Vision for Artificial Intelligence in Malta 2030

- Artificial Intelligence: a strategic vision for Luxembourg

- Lithuanian Artificial Intelligence Strategy: A vision of the future

- Italy National Strategy on Artificial Intelligence

- Made in Germany: Artificial Intelligence Strategy

- Meaningful Artificial Intelligence: Towards a French and European Strategy

- Finland’s Age of Artificial Intelligence

- Finland Leading the Way into the Age of Artificial Intelligence

- Denmark National Strategy for Artificial Intelligence

- Czech National Artificial Intelligence Strategy

- Cyprus National Strategy AI

- AI 4 Belgium

- Austria AIM AT 2030

Russia Roadmaps

Africa & MENA Roadmaps

- PIDA Closing the Infrastructure Gap Vital for Africa’s Transformation

- Africa50

- AIDA Accelerated Industrial Development for Africa

- UAE Strategy for Artificial Intelligence

- UAE Artificial Intelligence Strategy 2031

- Saudi Arabia Vision 2030

- National AI Strategy: Unlocking Tunisia’s capabilities potential

Asia & SE Asia Roadmaps

China Roadmaps

Japan Roadmaps

Australia Roadmaps

New Zealand & Pacifica Roadmaps

Non Terrestrial Roadmaps

Do you know of robotics roadmaps not yet included? Please share them with us.

Robots can be companions, caregivers, collaborators — and social influencers

Robots and artificial intelligence are poised to increase their influence in our everyday lives. (Shutterstock)

By Shane Saunderson

In the mid-1990s, research was under way at Stanford University that would change the way we think about computers. The Media Equation experiments were simple: participants were asked to interact for a few minutes with a computer that acted socially, after which they were asked to give feedback about the interaction.

Participants would provide this feedback either on the same computer (No. 1) they had just been working on or on another computer (No. 2) across the room. The study found that participants responding on computer No. 2 were far more critical of computer No. 1 than those responding on the same machine they’d worked on.

People responding on the first computer seemed to not want to hurt the computer’s feelings to its face, but had no problem talking about it behind its back. This phenomenon became known as the computers as social actors (CASA) paradigm because it showed that people are hardwired to respond socially to technology that presents itself as even vaguely social.

The CASA phenomenon continues to be explored, particularly as our technologies have become more social. As a researcher, lecturer and all-around lover of robotics, I observe this phenomenon in my work every time someone thanks a robot, assigns it a gender or tries to justify its behaviour using human, or anthropomorphic, rationales.

What I’ve witnessed during my research is that while few are under any delusions that robots are people, we tend to defer to them just like we would another person.

Social tendencies

While this may sound like the beginnings of a Black Mirror episode, this tendency is precisely what allows us to enjoy social interactions with robots and place them in caregiver, collaborator or companion roles.

The positive aspects of treating a robot like a person are precisely why roboticists design them as such: we like interacting with people. As these technologies become more human-like, they become more capable of influencing us. However, if we continue to follow the current path of robot and AI deployment, these technologies could emerge as far more dystopian than utopian.

The Sophia robot, manufactured by Hanson Robotics, has been on 60 Minutes, received honorary citizenship from Saudi Arabia, holds a title from the United Nations and has gone on a date with actor Will Smith. While Sophia undoubtedly highlights many technological advancements, few surpass Hanson’s achievements in marketing. If Sophia truly were a person, we would acknowledge its role as an influencer.

Worse than robots or AI being sociopathic agents (goal-oriented without morality or human judgment), however, is the prospect of these technologies becoming tools of mass influence for whichever organization or individual controls them.

If you thought the Cambridge Analytica scandal was bad, imagine what Facebook’s algorithms of influence could do if they had an accompanying, human-like face. Or a thousand faces. Or a million. The true value of a persuasive technology is not in its cold, calculated efficiency, but in its scale.

Seeing through intent

Recent scandals and exposures in the tech world have left many of us feeling helpless against these corporate giants. Fortunately, many of these issues can be solved through transparency.

There are fundamental questions that are important for social technologies to answer because we would expect the same answers when interacting with another person, albeit often implicitly. Who owns or sets the mandate of this technology? What are its objectives? What approaches can it use? What data can it access?

Since robots could soon leverage superhuman capabilities, enact the will of an unseen owner, and do so without showing verbal or non-verbal cues that shed light on their intent, we must demand that these types of questions be answered explicitly.

As a roboticist, I get asked the question, “When will robots take over the world?” so often that I’ve developed a stock answer: “As soon as I tell them to.” However, my joke is underpinned by an important lesson: don’t scapegoat machines for decisions made by humans.

I consider myself a robot sympathizer because I think robots get unfairly blamed for many human decisions and errors. It is important that we periodically remind ourselves that a robot is not your friend, your enemy or anything in between. A robot is a tool, wielded by a person (however far removed), and increasingly used to influence us.

Shane receives funding from the Natural Sciences and Engineering Research Council of Canada (NSERC). He is affiliated with the Human Futures Institute, a Toronto-based think tank.

This article appeared in The Conversation.

Interview with Tao Chen, Jie Xu and Pulkit Agrawal: CoRL 2021 best paper award winners

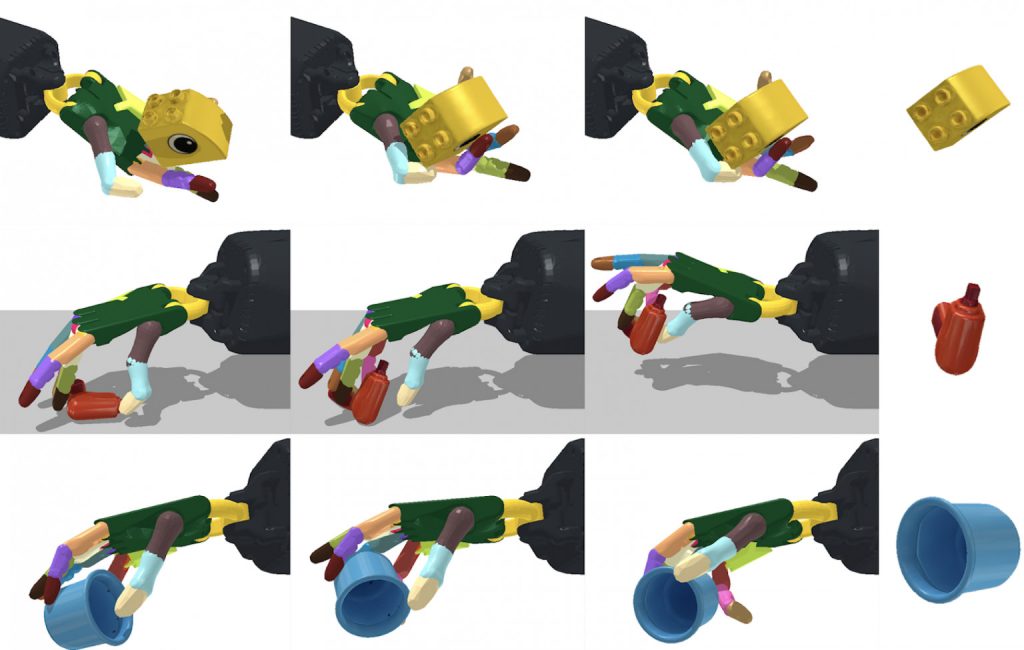

Congratulations to Tao Chen, Jie Xu and Pulkit Agrawal who have won the CoRL 2021 best paper award!

Their work, A system for general in-hand object re-orientation, was highly praised by the judging committee who commented that “the sheer scope and variation across objects tested with this method, and the range of different policy architectures and approaches tested makes this paper extremely thorough in its analysis of this reorientation task”.

Below, the authors tell us more about their work, the methodology, and what they are planning next.

What is the topic of the research in your paper?

We present a system for reorienting novel objects using an anthropomorphic robotic hand with any configuration, with the hand facing both upwards and downwards. We demonstrate the capability of reorienting over 2000 geometrically different objects in both cases. The learned controller can also reorient novel unseen objects.

Could you tell us about the implications of your research and why it is an interesting area for study?

Our learned skill (in-hand object reorientation) can enable fast pick-and-place of objects in desired orientations and locations. For example, in logistics and manufacturing, it is a common demand to pack objects into slots for kitting. Currently, this is usually achieved via a two-stage process involving re-grasping. Our system will be able to achieve it in one step, which can substantially improve the packing speed and boost the manufacturing efficiency.

Another application is enabling robots to operate a wider variety of tools. The most common end-effector in industrial robots is a parallel-jaw gripper, partially due to its simplicity in control. However, such an end-effector is physically unable to handle many tools we see in our daily life. For example, even using pliers is difficult for such a gripper as it cannot dexterously move one handle back and forth. Our system will allow a multi-fingered hand to dexterously manipulate such tools, which opens up a new area for robotics applications.

Could you explain your methodology?

We use a model-free reinforcement learning algorithm to train the controller for reorienting objects. In-hand object reorientation is a challenging contact-rich task. It requires a tremendous amount of training. To speed up the learning process, we first train the policy with privileged state information such as object velocities. Using the privileged state information drastically improves the learning speed. Other than this, we also found that providing a good initialization on the hand and object pose is critical for training the controller to reorient objects when the hand faces downward. In addition, we develop a technique to facilitate the training by building a curriculum on gravitational acceleration. We call this technique “gravity curriculum”.
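The “gravity curriculum” idea can be illustrated with a minimal sketch. The authors only say that they build a curriculum on gravitational acceleration; the starting value, step size, and success threshold below are hypothetical, as is the rule of advancing whenever a recent success rate clears the threshold.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GravityCurriculum:
    """Raise gravity magnitude toward Earth gravity as the policy improves.

    All default values are illustrative assumptions, not the authors' settings.
    """
    g: float = 1.0                  # current magnitude in m/s^2 (assumed start)
    g_target: float = 9.81          # full Earth gravity
    step: float = 0.5               # hypothetical increment per stage
    success_threshold: float = 0.8  # hypothetical success rate needed to advance

    def update(self, success_rate: float) -> float:
        """Advance one stage if the success rate clears the threshold."""
        if success_rate >= self.success_threshold:
            # Never overshoot the target gravity.
            self.g = min(self.g + self.step, self.g_target)
        return self.g


def run_demo() -> List[float]:
    """Feed made-up evaluation success rates through the curriculum."""
    curriculum = GravityCurriculum()
    rates = [0.5, 0.85, 0.9, 0.6, 0.95]  # hypothetical evaluation results
    return [curriculum.update(rate) for rate in rates]


if __name__ == "__main__":
    print(run_demo())
```

In a real training loop, the value returned by `update` would be passed to the simulator before the next batch of episodes, so that a downward-facing hand first learns under weak gravity and only later faces the full task.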

With these techniques, we are able to train a controller that can reorient many objects even with a downward-facing hand. However, a practical concern with the learned controller is that it makes use of privileged state information, which can be nontrivial to obtain in the real world. For example, it is hard to measure the object’s velocity in the real world. To ensure that we can deploy a controller reliably in the real world, we use teacher-student training. We use the controller trained with the privileged state information as the teacher. Then we train a second controller (the student) that does not rely on any privileged state information and hence has the potential to be deployed reliably in the real world. This student controller is trained to imitate the teacher controller using imitation learning. The training of the student controller becomes a supervised learning problem and is therefore sample-efficient. At deployment time, we only need the student controller.
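The teacher-student setup can be sketched on a toy one-dimensional problem. This is not the authors' implementation: the linear teacher, its gains `KP` and `KD`, the unit time step, and the random sampling of states (rather than collecting them from rollouts) are all simplifying assumptions. The teacher uses a privileged velocity; the student sees only the current and previous positions and is fitted to the teacher's actions by plain supervised regression.

```python
import random
from typing import Tuple

KP, KD = 2.0, 0.5  # hypothetical teacher gains


def teacher_action(pos: float, vel: float) -> float:
    """Teacher policy: relies on privileged velocity information."""
    return -KP * pos - KD * vel


def student_action(w: Tuple[float, float], pos: float, prev_pos: float) -> float:
    """Student policy: linear in the current and previous positions only."""
    return w[0] * pos + w[1] * prev_pos


def distill(steps: int = 5000, lr: float = 0.1, seed: int = 0) -> Tuple[float, float]:
    """Fit the student to the teacher's actions with stochastic gradient descent."""
    rng = random.Random(seed)
    w0, w1 = 0.0, 0.0
    for _ in range(steps):
        # Sample a state; with a unit time step, prev_pos = pos - vel.
        pos = rng.uniform(-1.0, 1.0)
        vel = rng.uniform(-1.0, 1.0)
        prev_pos = pos - vel
        # The teacher's action is the supervised label.
        target = teacher_action(pos, vel)
        error = student_action((w0, w1), pos, prev_pos) - target
        # Gradient step on the squared imitation error 0.5 * error**2.
        w0 -= lr * error * pos
        w1 -= lr * error * prev_pos
    return w0, w1


if __name__ == "__main__":
    print(distill())
```

Because velocity equals `pos - prev_pos` in this toy setting, the student can represent the teacher exactly, and its weights should approach `-(KP + KD)` and `KD`. On a real hand the student would be a neural network over sensor histories, and the match would only be approximate.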

What were your main findings?

We developed a general system that can be used to train controllers that can reorient objects with either the robotic hand facing upward or downward. The same system can also be used to train controllers that use external support such as a supporting surface for object re-orientation. Such controllers learned in our system are robust and can also reorient unseen novel objects. We also identified several techniques that are important for training a controller to reorient objects with a downward-facing hand.

A priori one might believe that it is important for the robot to know about object shape in order to manipulate new shapes. Surprisingly, we find that the robot can manipulate new objects without knowing their shape. It suggests that robust control strategies mitigate the need for complex perceptual processing. In other words, we might need much simpler perceptual processing strategies than previously thought for complex manipulation tasks.

What further work are you planning in this area?

Our immediate next step is to achieve such manipulation skills on a real robotic hand. To achieve this, we will need to tackle many challenges. We will investigate overcoming the sim-to-real gap such that the simulation results can be transferred to the real world. We also plan to design new robotic hand hardware through collaboration such that the entire robotic system can be dexterous and low-cost.

About the authors

Tao Chen is a Ph.D. student in the Improbable AI Lab at MIT CSAIL, advised by Professor Pulkit Agrawal. His research interests revolve around the intersection of robot learning, manipulation, locomotion, and navigation. More recently, he has been focusing on dexterous manipulation. His research papers have been published in top AI and robotics conferences. He received his master’s degree, advised by Professor Abhinav Gupta, from the Robotics Institute at CMU, and his bachelor’s degree from Shanghai Jiao Tong University.

Jie Xu is a Ph.D. student at MIT CSAIL, advised by Professor Wojciech Matusik in the Computational Design and Fabrication Group (CDFG). He obtained a bachelor’s degree with honours from the Department of Computer Science and Technology at Tsinghua University in 2016. During his undergraduate period, he worked with Professor Shi-Min Hu in the Tsinghua Graphics & Geometric Computing Group. His research mainly focuses on the intersection of robotics, simulation, and machine learning. Specifically, he is interested in the following topics: robotics control, reinforcement learning, differentiable physics-based simulation, robotics control and design co-optimization, and sim-to-real.

Dr Pulkit Agrawal is the Steven and Renee Finn Chair Professor in the Department of Electrical Engineering and Computer Science at MIT. He earned his Ph.D. from UC Berkeley and co-founded SafelyYou Inc. His research interests span robotics, deep learning, computer vision and reinforcement learning. Pulkit completed his bachelor’s at IIT Kanpur and was awarded the Director’s Gold Medal. He is a recipient of the Sony Faculty Research Award, Salesforce Research Award, Amazon Machine Learning Research Award, Signatures Fellow Award, Fulbright Science and Technology Award, Goldman Sachs Global Leadership Award, OPJEMS, and Sridhar Memorial Prize, among others.

Find out more

- Read the paper on arXiv.

- The videos of the learned policies are available here, as is a video of the authors’ presentation at CoRL.

- Read more about the winning and shortlisted papers for the CoRL awards here.