Making industrial robots smarter and more versatile

A philosophy of automation – TM Robotics and Evershed help Ricoh automate its manufacturing process

A catalog of robotics-related books (+ call for holiday season suggestions)

The Women in Robotics network is putting together an open catalog of books related to robotics and technology. Whether you would like to share your own collection with the community, or you just want to find your next read or the perfect holiday season gift (let’s be honest, we all love robots, and we want everyone to love robots), this is a great place to start.

Current titles in the catalog include Frankenstein (Mary Shelley), Uncanny Valley: A Memoir (Anna Wiener) and Sex, Race, and Robots – How to Be Human in the Age of AI (Ayanna Howard). You can access the catalog through this link. And if you would like to contribute a book suggestion, you can use this form to send it.

Meet the robotics community champions in the SVR Good Robot Industry Awards

If robotics is the technology of the 21st century, rather than biotech, then we have some serious work to do. This week marks the ‘beginning of the end’ of the coronavirus pandemic as a vaccine is deployed in the US. The Wall Street Journal recently profiled the incredible effort of Pfizer and BioNTech, who pioneered a novel messenger RNA (mRNA) approach and got it into production in a tenth to a quarter of the normal vaccine development time. It undoubtedly takes a team, but the WSJ article “How Pfizer delivered a COVID vaccine in record time” highlights the efforts of two men, CEO Albert Bourla and manufacturing chief Mike McDermott, and one woman, head of Pfizer’s vaccine research Dr Kathrin Jansen, in this achievement. And the WSJ feature makes more fuss about CEO Albert Bourla’s Greek heritage than about Dr Kathrin Jansen’s femaleness. Now the WSJ isn’t exactly a left-wing propaganda machine, so this reflects the strides that the biological sciences have taken in diversity in the last fifty years. Given the growing shortage of professionals in computer science, robotics and AI occupations, including basic manufacturing, and given that equal access to opportunity is a basic human right, it is clear that systemic inequity is still at work in some new technology fields like robotics. Biotech shows that hardware and innovation are doable. Sadly, Silicon Valley shows that equity is harder than hardware.

Silicon Valley Robotics announces its inaugural ‘Good Robot’ Industry Awards this week, celebrating Innovation in products, Vision in action, and the Commercialization of new technologies that offer us the chance to address global challenges. The computer age did not usher in the increases in productivity that were anticipated. Unlike the advent of tractors or electricity, productivity due to technology has largely stagnated since shortly after the Second World War. This is in spite of large amounts of public research and development funding in advanced computing technologies like robotics and AI. In the meantime, the negative impacts of many technology advances (like plastics) continue to wage war on the planet. But there is no point in promoting a Luddite view of a technology-free era (of high infant mortality, short average lifespan and child labor); instead, we can use technology wisely to address areas where the gain to society will be great.

We want to also recognize the work of robotics community champions who do all sorts of (often unsung) work that advances the science and technology of robotics, from research, to production and employment. These awardees serve as great examples of how providing support for robotics supports all of us.

Community Champion Award:

Companies:

NASA Intelligent Robotics Group

Open Robotics

PickNik Robotics

Robohub

SICK

Willow Garage (best to see the Red Hat series How to Start a Robot Revolution)

Individuals:

Alex Padilla

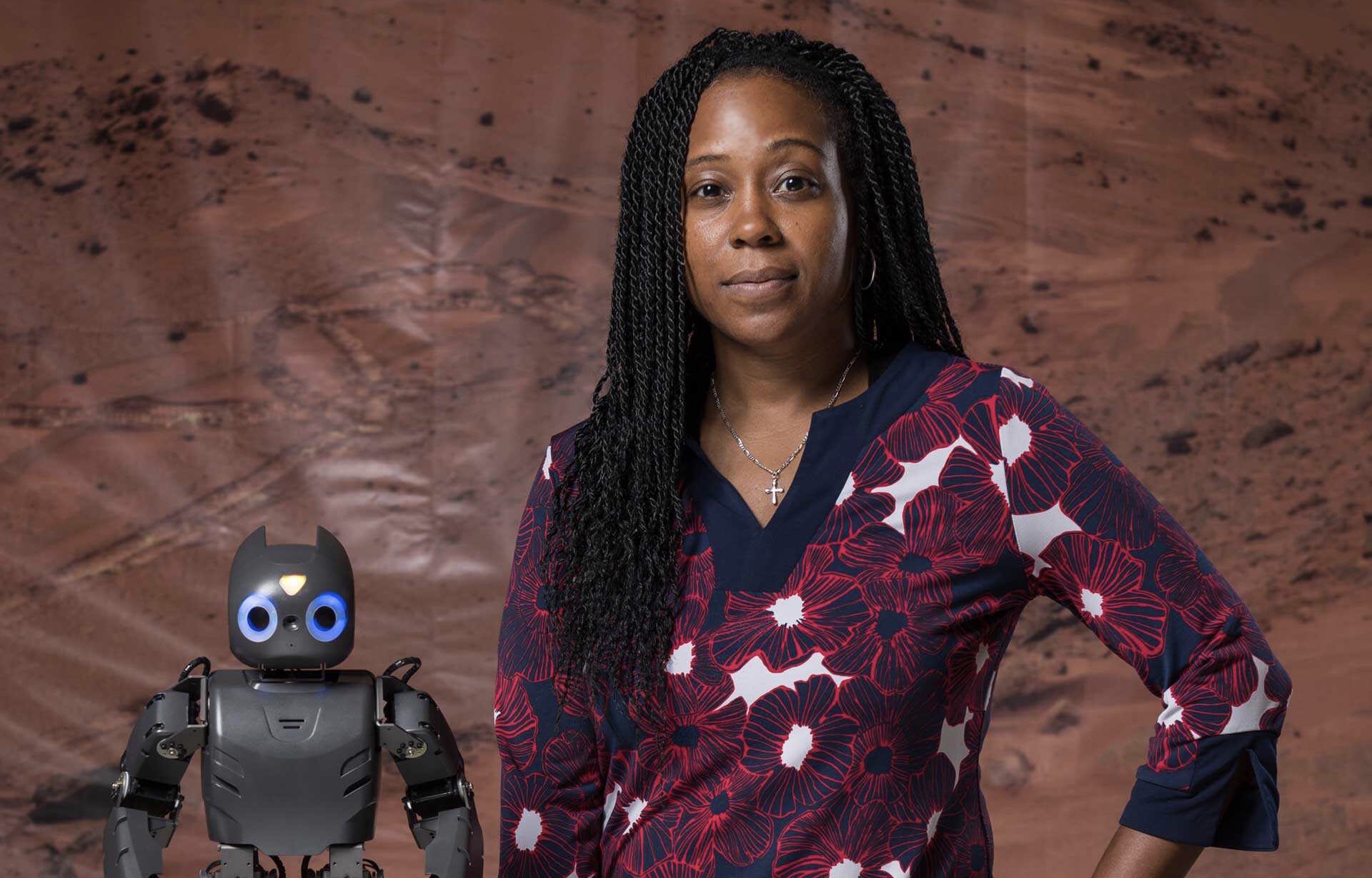

Ayanna Howard

Evan Ackerman

Frank Tobe

Henrik Christensen

Joy Buolamwini

Katherine Scott

Khari Johnson

Louise Poubel

Mark Martin

Rodney Brooks

Rumman Chowdhury

Timnit Gebru

Silicon Valley Robotics appreciates the contributions made by all of our inaugural Community Champions! And we look forward to next year, because there are many of you out there who are making not just good robots, but a better robotics industry. One of the things I love most about robotics is being around so many people who are passionate about using technology to improve the world. It can be frustrating that the world is so resistant to change sometimes, but in ten short years, the robotics industry has gone from insignificant (in Silicon Valley terms) to unicorns. We all have an opportunity to be part of changing the world for the better, like our Community Champions.

Make no mistake, this is not an issue for women or black & brown people to solve. Without an accurate reflection of the society in which our technologies will be used we will not produce the best technologies and we will not be attractive or competitive as an industry that is fighting for the best talent. Diversity and equity in robotics should be worrying everybody in robotics. We need robots to solve our greatest global challenges. And we need global talent to do this.

I dream of seeing a Silicon Valley Robotics industry cluster and robotics education program in every country, hand in hand with non-profit programs like Women in Robotics and Black in Robotics to support workers entering what is still not an equitable work environment.

*The Silicon Valley Robotics Board is incredibly supportive; however, this commentary is entirely my own opinion, and I put my Ruth Bader Ginsburg socks on today. I highly recommend them.

About Silicon Valley Robotics

Silicon Valley Robotics (SVR) supports the innovation and commercialization of robotics technologies, as a non-profit industry association. Our first strategic plan focused on connecting startups with investment, and since our founding in 2010, our membership has grown tenfold, reflecting our success in increasing investment into robotics. We believe that with robotics, we can improve productivity, meet labor shortages, get rid of jobs that treat humans like robots and finally create precision, personalized food, mobility, housing and health technologies. For more information, please visit https://svrobo.org

SOURCE: Silicon Valley Robotics (SVR)

CONTACT: Andra Keay andra@svrobo.org

Introduction to Kubernetes with Google Cloud: Deploy your Deep Learning model effortlessly

Butler on wheels, robot cutting salad: How COVID-19 sped automation

#325: The Advantage of Fins, with Benjamin Pietro Filardo

Abate interviews Benjamin “Pietro” Filardo, CEO and founder of Pliant Energy Systems (PES). At PES, they developed a novel form of actuation using two undulating fins on a robot. These fins offer multiple benefits over traditional propeller systems, including excellent energy efficiency, low water turbulence, and an ability to maneuver in water and on land and ice. Aside from the fins’ benefits on a robot, Pietro also talks about their advantages for harnessing energy from moving water.

Benjamin “Pietro” Filardo

After several years in the architectural profession, Pietro founded Pliant Energy Systems to explore renewable energy concepts he first pondered while earning his first degree in marine biology and oceanography. With funding from four federal agencies he has broadened the application of these concepts into marine propulsion and a highly novel robotics platform.

Links

- Download mp3 (16.1 MB)

- Subscribe to Robohub using iTunes, RSS, or Spotify

- Support us on Patreon

Call for robot holiday videos 2020

That’s right! You better not run, you better not hide, you better watch out for brand new robot holiday videos on Robohub!

Drop your submissions down our chimney at daniel.carrillozapata@robohub.org and share the spirit of the season.

For inspiration, here is our first submission:

Robohub wins Champion Award in SVR ‘Good Robot’ Industry Awards

President: Sabine Hauert

Founded: 2012

HQ: Switzerland

Robohub is an online platform and non-profit that brings together leading communicators in robotics research, start-ups, business, and education from around the world, focused on connecting the robotics community to the public. It can be difficult for the public to find free, high-quality information about robotics. At Robohub, we enable roboticists to share their stories in their own words by providing them with a social media platform and editorial guidance. This means that our readers get to learn about the latest research and business news, events and opinions, directly from the experts.

Since 2012, Robohub and its international community of volunteers have published over 300 Robohub Podcasts, 7000 blog posts, videos and more, reaching 1M pageviews every year and more than 30k followers on social media. You can follow Robohub on Twitter at @robohub.

Challenges of Developing a Safety System for an Autonomous Work Site

Celebrating the good robots!

OAKLAND, California, Dec. 14, 2020 /Press Release/ – Silicon Valley Robotics, the world’s largest cluster of innovation in robotics, announces the inaugural ‘Good Robot’ Industry Awards, celebrating the robotics, automation and Artificial Intelligence (AI) that will help us solve global challenges. These 52 companies and individuals have all contributed to innovation that will improve the quality of our lives, whether it’s weed-free, pesticide-free farming, like FarmWise or Iron Ox; helping health workers and the elderly manage health care treatment regimes, like Catalia Health or Multiply Labs; or reimagining the logistics industry so that the transfer of physical goods becomes as efficient as the transfer of information, like Cruise, Embark, Matternet and Zipline.

The categories Innovation, Vision and Commercialization represent the stages robotics companies go through: first with an innovative technology or product, then with a vision to change the world (and occasionally the investment to match), and finally with real evidence of customer traction. The criterion for our Commercialization Award is achieving $1 million in revenue, which is a huge milestone for a startup building a new invention.

Tessa Lau, Founder and CEO of Dusty Robotics, an Innovation Awardee, said, “We’re almost there. $1 million in revenue is our next goal.” Dusty Robotics’ FieldPrinter automates the painstaking, time-consuming process of marking building plans in the field, replacing a traditional process using measuring tape and chalk lines that hasn’t changed in 5000 years. The company’s vision of creating robot-powered tools for the modern construction workforce resonates strongly with commercial construction companies. Dusty’s robot fleet is now in production, producing highly accurate layouts in record time on every floor of two multi-family residential towers going up in San Francisco.

The SVR ‘Good Robot’ Industry Awards also highlight diverse robotics companies. In our Visionary Category, Zoox is the first billion dollar company led by an African-American woman, Aicha Evans, and Robust AI shows diversity at every level of the organization. Diversity of thought will be critical as Robust AI tackles the challenge of building a cognitive engine for robotics that incorporates common sense reasoning.

“Robotics and AI will shape the next century in the same way the Industrial Revolution shaped the 20th century. To create a future that amplifies human potential, the full spectrum of human perspective needs to contribute to the design process. This is fundamental to how we are building Robust.AI,” explains COO Anthony Jules.

We also wanted to highlight the community in robotics with our Champion Award, because as Silicon Valley Robotics Managing Director Andra Keay says, “In robotics, we’re always standing on the shoulders of giants. And many of these contributions go unrecognized outside of the robotics community. We depend on a strong community to help startups cross the chasm to commercialization, and so we want to recognize our Champions, starting with Willow Garage, which was the Fairchild of the 21st century.”

Willow Garage had a massive impact on robotics in seven short years, from 2006 to 2013. Not only did they produce ROS, now maintained by Open Robotics, but alumni of Willow Garage can be found in the core teams of almost every groundbreaking new robotics company. Some, like Fetch Robotics, a pioneer in autonomous mobile robots for logistics, achieved the full cycle from startup to groundbreaking industry leader in only five short years. By 2018, Fetch Robotics had been named a Technology Pioneer by the World Economic Forum, and it is a winner of our Award for Overall Excellence along with Ambidextrous.

Ambidextrous looks likely to follow the same successful trajectory as Fetch, but focused on manipulation instead of autonomous mobility. Ambidextrous is spinning out research from the University of California, Berkeley, that uses AI and simulation to solve the ‘pick problem’ for handling real-world items. At only two years old, Ambidextrous is already piloting solutions for significant customers. Two other UC Berkeley startups made our inaugural award list, Squishy Robotics and Covariant.ai, highlighting the large amount of robotics research happening in the Bay Area, in more than fifty robotics research labs.

Hopefully, this will lead to more good robots solving global challenges. As one of our Champions, Dr Ayanna Howard, says, “I believe that every engineer has a responsibility to make the world a better place. We are gifted with an amazing power to take people’s wishes and make them a reality.”

The full details of the 52 Awardees in the 2020 Silicon Valley Robotics ‘Good Robot’ Industry Awards can be seen at https://svrobo.org/awards .

Overall Excellence Award:

Ambidextrous

Fetch Robotics

Innovation Award:

Eve – Halodi

FHA-C with Integrated Servo Drive – Harmonic Drive LLC

FieldPrinter – Dusty Robotics

Inception Drive – SRI International

nanoScan3 – SICK

QRB5 – Qualcomm

Stretch – Hello Robot

Tensegrity Robots – Squishy Robotics

Titan – FarmWise

Velabit – Velodyne Lidar Inc.

Visionary Award:

Agility Robotics

Built Robotics

Covariant.ai

Cruise

Dishcraft Robotics

Embark

Formant

Iron Ox

Robust.ai

Zipline

Zoox

Commercialization Award:

Canvas

Catalia Health

Haddington Dynamics

Kindred.ai

Matternet

Multiply Labs

OhmniLabs

Simbe Robotics

Ubiquity Robotics

Entrepreneurship Award:

Community Champion Award:

Companies:

NASA Intelligent Robotics Group

Open Robotics

PickNik Robotics

Robohub

SICK

Willow Garage (best to see the Red Hat series How to Start a Robot Revolution)

Individuals:

Alex Padilla

Ayanna Howard

Evan Ackerman

Frank Tobe

Henrik Christensen

Joy Buolamwini

Katherine Scott

Khari Johnson

Louise Poubel

Mark Martin

Rodney Brooks

Rumman Chowdhury

Timnit Gebru

About Silicon Valley Robotics

Silicon Valley Robotics (SVR) supports the innovation and commercialization of robotics technologies, as a non-profit industry association. Our first strategic plan focused on connecting startups with investment, and since our founding in 2010, our membership has grown tenfold, reflecting our success in increasing investment into robotics. We believe that with robotics, we can improve productivity, meet labor shortages, get rid of jobs that treat humans like robots and finally create precision, personalized food, mobility, housing and health technologies. For more information, please visit https://svrobo.org

SOURCE: Silicon Valley Robotics (SVR)

PRESS CONTACT: Andra Keay andra@svrobo.org