
Robo-Insight #3

Source: OpenAI’s DALL·E 2 with prompt “a hyperrealistic picture of a robot reading the news on a laptop at a coffee shop”

Welcome to the third edition of Robo-Insight, a biweekly robotics news update! In this post, we are excited to share a range of new advancements in the field and highlight progress in areas like motion, unfamiliar navigation, dynamic control, digging, agriculture, surgery, and food sorting.

A bioinspired robot masters 8 modes of motion for adaptive maneuvering

In a world of constant motion, a newly developed robot named M4 (Multi-Modal Mobility Morphobot) has demonstrated the ability to switch between eight different modes of motion, including rolling, flying, and walking. Designed by researchers from Caltech’s Center for Autonomous Systems and Technologies (CAST) and Northeastern University, the robot can autonomously adapt its movement strategy based on its environment. Created by engineers Mory Gharib and Alireza Ramezani, the M4 project aims to enhance robot locomotion by utilizing a combination of adaptable components and artificial intelligence. The potential applications of this innovation range from medical transport to planetary exploration.

The robot switches from its driving to its walking state. Source.

New navigation approach for robots aiding visually impaired individuals

Speaking of movement, researchers from the Hamburg University of Applied Sciences have presented an innovative navigation algorithm for a mobile robot assistance system based on OpenStreetMap data. The algorithm addresses the challenges faced by visually impaired individuals in navigating unfamiliar routes. By employing a three-stage process involving map verification, augmentation, and generation of a navigable graph, the algorithm optimizes navigation for this user group. The study highlights the potential of OpenStreetMap data to enhance navigation applications for visually impaired individuals, carrying implications for the advancement of robotics solutions that can cater to specific user requirements through data verification and augmentation.

This autonomous vehicle aims to guide visually impaired individuals. Source.
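The three stages described above (verification, augmentation, and generation of a navigable graph) can be sketched on toy data. This is only an illustration under stated assumptions: the segment list, the attribute names, the gravel penalty factor, and all function names are hypothetical, and Dijkstra's algorithm is used as a stand-in planner because the summary does not say which one the paper uses.

```python
import heapq

# Hypothetical toy segments: (start, end, length in metres, attributes).
# Real OpenStreetMap data is far richer; these fields are assumptions.
SEGMENTS = [
    ("A", "B", 100.0, {"sidewalk": True, "surface": "paved"}),
    ("B", "D", 100.0, {"sidewalk": True, "surface": "gravel"}),
    ("A", "C", 150.0, {"sidewalk": True, "surface": "paved"}),
    ("C", "D", 100.0, {"sidewalk": True, "surface": "paved"}),
    ("A", "D", 120.0, {"sidewalk": None, "surface": "paved"}),  # unverified
]


def verify(segments):
    """Stage 1: keep only segments whose sidewalk attribute is confirmed."""
    return [s for s in segments if s[3].get("sidewalk") is True]


def augment(segments, gravel_penalty=2.0):
    """Stage 2: attach a traversal cost; gravel is penalised by an assumed factor."""
    costed = []
    for a, b, length, attrs in segments:
        factor = gravel_penalty if attrs.get("surface") == "gravel" else 1.0
        costed.append((a, b, length * factor))
    return costed


def build_graph(costed):
    """Stage 3: build an undirected adjacency list from the costed segments."""
    graph = {}
    for a, b, cost in costed:
        graph.setdefault(a, []).append((b, cost))
        graph.setdefault(b, []).append((a, cost))
    return graph


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm; returns (cost, path) or (inf, []) if unreachable."""
    queue = [(0.0, start, [start])]
    seen = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in seen:
            continue
        seen.add(node)
        for nxt, step in graph.get(node, []):
            if nxt not in seen:
                heapq.heappush(queue, (cost + step, nxt, path + [nxt]))
    return float("inf"), []


if __name__ == "__main__":
    graph = build_graph(augment(verify(SEGMENTS)))
    print(shortest_path(graph, "A", "D"))
```

In this toy example the direct A to D segment is dropped because its sidewalk attribute is unverified, and the route through the gravel segment is penalised, so the planner prefers the longer but fully paved route through C.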

A unique technique enhances robot control in dynamic environments

Along the same lines as new environments, researchers from MIT and Stanford University have developed a novel machine-learning technique that enhances the control of robots, such as drones and autonomous vehicles, in rapidly changing environments. The approach leverages insights from control theory to create effective control strategies for complex dynamics, like wind impacts on flying vehicles. This technique holds potential for a range of applications, from enabling autonomous vehicles to adapt to slippery road conditions to improving the performance of drones in challenging wind conditions. By integrating learned dynamics and control-oriented structures, the researchers’ approach offers a more efficient and effective method for controlling robots, with implications for various types of dynamical systems in robotics.

Robot that could have improved control in different environments. Source.

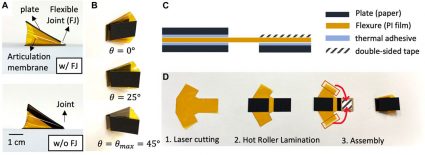

Burrowing robots with origami feet

Robots have been improving above ground for a while and are now also advancing in underground spaces. Researchers from the University of California, Berkeley and the University of California, Santa Cruz have unveiled a new robotics approach that uses origami-inspired foldable feet to navigate granular environments. Drawing inspiration from biological systems and their anisotropic forces, this approach harnesses reciprocating burrowing techniques for precise directional motion. By employing simple linear actuators and leveraging passive anisotropic force responses, the study paves the way for streamlined robotic burrowing and points to simplified yet effective underground exploration and navigation. This integration of origami principles into robotics opens the door to enhanced subterranean applications.

The prototype for the foot and its method for fabrication. Source.

Innovative processes in agricultural robotics

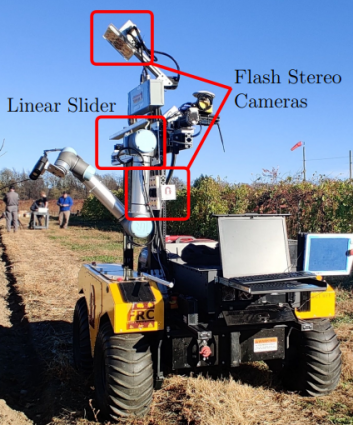

In the world of agriculture, a researcher from Carnegie Mellon University recently explored the synergy between scientific phenotyping and agricultural robotics in a Master’s Thesis. Their study delved into the vital role of accurate plant trait measurement in developing improved plant varieties, while also highlighting the promising realm of robotic plant manipulation in agriculture. Envisioning advanced farming practices, the researcher emphasizes tasks like pruning, pollination, and harvesting carried out by robots. By proposing innovative methods such as 3D cloud assessment for seed counting and vine segmentation, the study aims to streamline data collection for agricultural robotics. Additionally, the creation and use of 3D skeletal vine models exhibit the potential for optimizing grape quality and yield, paving the way for more efficient agricultural practices.

A robotic data capture platform that was introduced. Source.

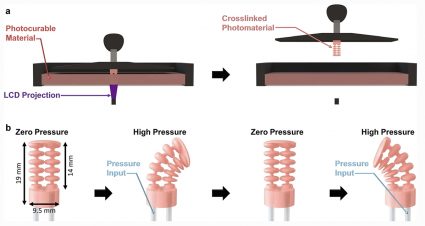

Soft robotic catheters could help improve minimally invasive surgery

Shifting our focus to surgery, a team of mechanical engineers and medical researchers from the University of Maryland, Johns Hopkins University, and the University of Maryland Medical School has developed a pneumatically actuated soft robotic catheter system to enhance control during minimally invasive surgeries. The system allows surgeons to simultaneously insert and bend the catheter tip with high accuracy, potentially improving outcomes in procedures that require navigating narrow and complex body spaces. The researchers’ approach simplifies the mechanical and control architecture through pneumatic actuation, enabling intuitive control of both bending and insertion without manual channel pressurization. The system has shown promise in accurately reaching cylindrical targets in tests, benefiting both novice and skilled surgeons.

Figure showing manufacturing and operation of soft robotic catheter tip using printing process for actuator and pneumatic pressurization to control catheter bending. Source.

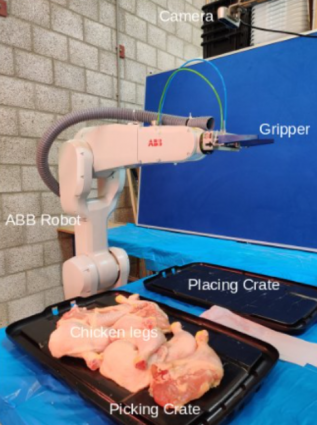

Robotic system enhances poultry handling efficiency

Finally, in the food world, researchers have introduced an innovative robotic system designed to efficiently pick and place deformable poultry pieces from cluttered bins. The architecture integrates multiple modules, enabling precise manipulation of delicate poultry items. A comprehensive evaluation approach is proposed to assess the system’s performance across various modules, shedding light on successes and challenges. This advancement holds the potential to revolutionize meat processing and the broader food industry, addressing demands for increased automation.

An experimental setup. Source.

This array of recent developments spanning various fields shows the versatile and ever-evolving character of robotics technology, unveiling fresh avenues for its integration across different sectors. The steady evolution in robotics exemplifies the ongoing endeavors and the potential ramifications these advancements could have in the times ahead.

Sources:

- New Bioinspired Robot Flies, Rolls, Walks, and More. (2023, June 27). Center for Autonomous Systems and Technologies. Caltech.

- Application of Path Planning for a Mobile Robot Assistance System Based on OpenStreetMap Data. Stahr, P., Maaß, J., & Gärtner, H. (2023). Robotics, 12(4), 113.

- A simpler method for learning to control a robot. (2023, July 26). MIT News | Massachusetts Institute of Technology.

- Efficient reciprocating burrowing with anisotropic origami feet. Kim, S., Treers, L. K., Huh, T. M., & Stuart, H. S. (2023, July 3). Frontiers.

- Phenotyping and Skeletonization for Agricultural Robotics. The Robotics Institute Carnegie Mellon University. (n.d.). Retrieved August 10, 2023.

- Pneumatically controlled soft robotic catheters offer accuracy, flexibility. (n.d.). Retrieved August 10, 2023.

- Advanced Robotic System for Efficient Pick-and-Place of Deformable Poultry in Cluttered Bin: A Comprehensive Evaluation Approach. Raja, R., Burusa, A. K., Kootstra, G., & van Henten, E. (2023, August 7). TechRxiv.

Mobile robots get a leg up from a more-is-better communications principle

Getting a leg up from mobile robots comes down to getting a bunch of legs. Georgia Institute of Technology

By Baxi Chong (Postdoctoral Fellow, School of Physics, Georgia Institute of Technology)

Adding legs to robots that have minimal awareness of the environment around them can help the robots operate more effectively in difficult terrain, my colleagues and I found.

We were inspired by mathematician and engineer Claude Shannon’s communication theory about how to transmit signals over distance. Instead of spending a huge amount of money to build the perfect wire, Shannon illustrated that it is good enough to use redundancy to reliably convey information over noisy communication channels. We wondered if we could do the same thing for transporting cargo via robots. That is, if we want to transport cargo over “noisy” terrain, say fallen trees and large rocks, in a reasonable amount of time, could we do it by just adding legs to the robot carrying the cargo and do so without sensors and cameras on the robot?
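The redundancy idea can be made concrete with the simplest textbook scheme, a repetition code with majority voting. This is an illustration of Shannon-style redundancy only, not the authors' locomotion model: the independent flip probability `p` and the function name `majority_error` are assumptions introduced here.

```python
from math import comb


def majority_error(n: int, p: float) -> float:
    """Probability that a majority vote over n independent copies is wrong,
    when each copy is corrupted independently with probability p.

    n must be odd so that the vote cannot tie.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd so the vote cannot tie")
    # The vote fails when more than half of the copies are corrupted.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


if __name__ == "__main__":
    # Assumed per-copy corruption probability of 20%.
    for n in (1, 3, 5, 9, 15):
        print(n, majority_error(n, 0.2))
```

With each copy unreliable on its own, the chance that the majority is wrong falls as copies are added. This is loosely analogous to the question posed above: whether adding unreliable legs, rather than perfecting any single one, can make transport reliable.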

Most mobile robots use inertial sensors to gain an awareness of how they are moving through space. Our key idea is to forget about inertia and replace it with the simple function of repeatedly making steps. In doing so, our theoretical analysis confirms our hypothesis of reliable and predictable robot locomotion – and hence cargo transport – without additional sensing and control.

To verify our hypothesis, we built robots inspired by centipedes. We discovered that the more legs we added, the better the robot could move across uneven surfaces without any additional sensing or control technology. Specifically, we conducted a series of experiments where we built terrain to mimic an inconsistent natural environment. We evaluated the robot locomotion performance by gradually increasing the number of legs in increments of two, beginning with six legs and eventually reaching a total of 16 legs.

Navigating rough terrain can be as simple as taking it a step at a time, at least if you have a lot of legs.

As the number of legs increased, we observed that the robot exhibited enhanced agility in traversing the terrain, even in the absence of sensors. To further assess its capabilities, we conducted outdoor tests on real terrain to evaluate its performance in more realistic conditions, where it performed just as well. There is potential to use many-legged robots for agriculture, space exploration and search and rescue.

Why it matters

Transporting things – food, fuel, building materials, medical supplies – is essential to modern societies, and effective goods exchange is the cornerstone of commercial activity. For centuries, transporting material on land has required building roads and tracks. However, roads and tracks are not available everywhere. Places such as hilly countryside have had limited access to cargo. Robots might be a way to transport payloads in these regions.

What other research is being done in this field

Other researchers have been developing humanoid robots and robot dogs, which have become increasingly agile in recent years. These robots rely on accurate sensors to know where they are and what is in front of them, and then make decisions on how to navigate.

However, their strong dependence on environmental awareness limits them in unpredictable environments. For example, in search-and-rescue tasks, sensors can be damaged and environments can change.

What’s next

My colleagues and I have taken valuable insights from our research and applied them to the field of crop farming. We have founded a company that uses these robots to efficiently weed farmland. As we continue to advance this technology, we are focused on refining the robot’s design and functionality.

While we understand the functional aspects of the centipede robot framework, our ongoing efforts are aimed at determining the optimal number of legs required for motion without relying on external sensing. Our goal is to strike a balance between cost-effectiveness and retaining the benefits of the system. Currently, we have shown that 12 is the minimum number of legs for these robots to be effective, but we are still investigating the ideal number.

The Research Brief is a short take on interesting academic work.

The author has received funding from the NSF-Simons Southeast Center for Mathematics and Biology (Simons Foundation SFARI 594594), Georgia Research Alliance (GRA.VL22.B12), Army Research Office (ARO) MURI program, Army Research Office Grant W911NF-11-1-0514 and a Dunn Family Professorship.

The author and his colleagues have one or more pending patent applications related to the research covered in this article.

The author and his colleagues have established a start-up company, Ground Control Robotics, Inc., partially based on this work.

This article is republished from The Conversation under a Creative Commons license. Read the original article.


The Strange: Scifi Mars robots meet real-world bounded rationality

Even with the addition of a strange mineral, robots still obey the principle of bounded rationality in artificial intelligence set forth by Herb Simon.

I cover bounded rationality in my Science Robotics review (image courtesy of @SciRobotics) but I am adding some more details here.

Did you like the Western True Grit? Classic scifi like The Martian Chronicles? Scifi horror like Annihilation? Steampunk? How about robots? If yes to any or all of the above, The Strange by Nathan Ballingrud is for you! It’s a captivating book. And as a bonus, it’s a great example of the real-world principle of bounded rationality.

First off, let’s talk about the book. The Strange is set in a counterfactual Confederate States of America colony on Mars circa the 1930s, evocative of Ray Bradbury’s The Martian Chronicles. The colony makes money by mining the Strange, a green mineral that amplifies the sapience and sentience of the steampunk robots called Engines. The planet is capable of supporting human life, though conditions are tenuous. Although the colony is self-sufficient, all communication with Earth has suddenly stopped without explanation, and its long-term survival is now in question. The novel’s protagonist is Annabel Crisp, a delightfully frank and unfiltered 13-year-old heroine straight out of Charles Portis’ classic Western novel, True Grit. She and Watson, the dishwashing Engine from her parents’ small restaurant, embark on a dangerous trek to recover stolen property and right a plethora of wrongs. Along the way, they deal with increasingly unfriendly humans and Engines.

It’s China Miéville’s New Weird meets the Wild West.

Really.

What makes The Strange different from other horror novels is that the Engines (and humans) don’t exceed their intrinsic capabilities; rather, the mineral focuses or concentrates their existing capabilities. In humans it amplifies the deepest aspects of character: a coward becomes more clever at being a coward, and a person determined to return to Earth will go to unheard-of extremes. Yet the human will not do anything they weren’t already capable of. In robots, it adds a veneer of personality and the ability to converse through natural language, but Engines are still limited by physical capabilities and intrinsic software functionality.

The novel indirectly illustrates important real-world concepts in machine intelligence and bounded rationality:

- One is that intelligence in robotics is tailored to the task it is designed for. While the public assumes an artificial general intelligence that can be universally applied to any task or work domain, robotics focuses on developing the forms of intelligence needed to accomplish specific tasks. For example, a factory robot may need to learn to mimic (and improve on) how a person performs a function, but it doesn’t need to be like Sophia and speak to audiences about the role of artificial intelligence in society.

- Another important distinction is that the public often conflates four related but separate concepts: sapience (intelligence, expertise), sentience (feelings, self-awareness), autonomy (ability to adapt how it executes a task or mission), and initiative (ability to modify or discard the task or mission to meet the objectives). In sci-fi, a robot may have all four, but in real life robots typically have very narrow sapience, no sentience, autonomy limited to the tasks they are designed for, and no initiative.

These concepts fall under a larger idea, bounded rationality, first proposed in the 1950s by Herb Simon, a Nobel Prize winner in economics and a founder of the field of artificial intelligence. Bounded rationality states that all decision-making agents, be they human or machine, have limits imposed by their computational resources (IQ, compute hardware, etc.), time, and information (either too much or too little). For AI and robots, the limits include the algorithms, that is, the core programming. Even humans with high IQs make dumb decisions when they are tired, hungry, misinformed, or stressed. And no matter how smart, they stay within the constraints of their innate abilities. Only in fiction do people suddenly supersede their innate capabilities, and usually that takes something like a radioactive spider bite.

What would our real-world robots grow into if they were suddenly smarter? Would they be obsessive about a task, ignoring humans altogether, possibly injuring or even killing them as the robots went about their tasks, in effect committing OSHA violations? Would they hunt us down and kill us in one of the myriad ways detailed in Robopocalypse? Or would they deliver inventory, meals, and medicine with the kindly charm of an old-fashioned mailman? Would the social manipulation in healthcare and tutoring robots become real sentience, real caring?

Bounded rationality says it depends on us. The robots will simply do whatever we programmed them for within the limits of their hardware and the situation. Of course, that’s the problem: our programming is imperfect and we have trouble anticipating consequences. But for now, even if there were the Strange on Mars, Curiosity and Perseverance would keep on keeping on. And a big shout-out to NASA: it’s hard to imagine how they could work better than they already do.

Pick up a copy of The Strange; it’s a great read. Pick up Herb Simon’s Sciences of the Artificial too. And don’t forget my books! You can learn more about bounded rationality in science fiction here, in my textbook Introduction to AI Robotics, and find more science fiction that illustrates how robots work in Robotics Through Science Fiction and Learn AI and Human-Robot Interaction through Asimov’s I, Robot Stories.
