Mastering Stratego, the classic game of imperfect information

Caregiving simulator advances research in assistive robotics

San Francisco will allow police to deploy robots that kill

Making ‘transport’ robots smarter

When it comes to delivery drones, the government is selling us a pipe dream. Experts explain the real costs

New Ideas, Collaboration Help Brenton Drive Four Major Product Handling Upgrades to KW Container

Touch sensing: An important tool for mobile robot navigation

In mammals, the touch modality develops earlier than the other senses, yet it is less studied than vision and hearing. It not only allows interaction with the environment but also serves as an effective defense mechanism.

Figure 1: Rat using the whiskers to interact with environment via touch

The role of touch in mobile robot navigation has not been explored in detail. However, touch appears to play an important role in obstacle avoidance and pathfinding for mobile robots. The region immediately around the robot is often a blind spot for long-range sensors such as cameras and lidars, and touch sensors could serve as a complementary modality there.

Overall, touch appears to be a promising modality for mobile robot navigation. However, more research is needed to fully understand the role of touch in mobile robot navigation.

Role of touch in nature

The touch modality is paramount for many organisms. It plays an important role in perception, exploration, and navigation, and animals use it extensively to explore their surroundings. Rodents, pinnipeds, cats, dogs, and fish use touch differently than humans do. While humans primarily use the sense of touch for prehensile manipulation, mammals with poor vision, such as rats and shrews, rely on touch sensing through their vibrissae (whiskers) for exploration and navigation. The vibrissal system is essential for short-range sensing and works in tandem with the visual system.

Artificial touch sensors for robots

Artificial touch sensor design has evolved over the last four decades. However, these sensors are not as widely used in mobile robot systems as cameras and lidars. Mobile robots usually employ such long-range sensors, while short-range sensing receives relatively little attention.

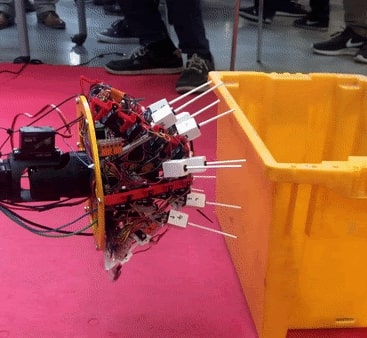

When designing artificial touch sensors for mobile robot navigation, we typically draw inspiration from nature: biological whiskers serve as the model for bio-inspired artificial whiskers. One such early prototype is shown in Figure 2.

Figure 2: Bioinspired artificial rat whisker array prototype V1.0

However, there is no reason to limit design innovation to exact imitation of biological whiskers. While some researchers are attempting to perfect the tapering of whiskers [1], we are currently investigating abstract mathematical models that can further inspire a whole array of touch sensors [2].

Challenges with designing touch sensors for robots

There are many challenges when designing touch sensors for mobile robots. One key challenge is the trade-off between weight, size, and power consumption. The power consumption of the sensors can be significant, which can limit their applicability in mobile robot applications.

Another challenge is to find the right trade-off between touch sensitivity and robustness. The sensors need to be sensitive enough to detect small changes in the environment, yet robust enough to handle the dynamic and harsh conditions in most mobile robot applications.

Future directions

There is a need for more systematic studies to understand the role of touch in mobile robot navigation. The current studies are mostly limited to specific applications and scenarios geared towards dexterous manipulation and grasping. We need to understand the challenges and limitations of using touch sensors for mobile robot navigation. We also need to develop more robust and power-efficient touch sensors for mobile robots.

Logistically, another factor that limits the use of touch sensors is the lack of openly available, off-the-shelf touch sensors. A few research groups around the world are developing their own touch sensor prototypes, biomimetic or otherwise, but such designs are closed and extremely hard to replicate and improve.

References

- [1] Williams, Christopher M., and Eric M. Kramer. “The advantages of a tapered whisker.” PLoS ONE 5.1 (2010): e8806.

- [2] Tiwari, Kshitij, et al. “Visibility-Inspired Models of Touch Sensors for Navigation.” 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2022.

Golfing robot uses physics-based model to train its AI system

Steerable soft robots could enhance medical applications

Flexiv’s Rizon Robot Used in Avant-Garde Art Installation

Study: Automation drives income inequality

A newly published paper quantifies the extent to which automation has contributed to income inequality in the U.S., simply by replacing workers with technology — whether self-checkout machines, call-center systems, assembly-line technology, or other devices. Image: Jose-Luis Olivares, MIT

By Peter Dizikes

When you use self-checkout machines in supermarkets and drugstores, you are probably not — with all due respect — doing a better job of bagging your purchases than checkout clerks once did. Automation just makes bagging less expensive for large retail chains.

“If you introduce self-checkout kiosks, it’s not going to change productivity all that much,” says MIT economist Daron Acemoglu. However, in terms of lost wages for employees, he adds, “It’s going to have fairly large distributional effects, especially for low-skill service workers. It’s a labor-shifting device, rather than a productivity-increasing device.”

A newly published study co-authored by Acemoglu quantifies the extent to which automation has contributed to income inequality in the U.S., simply by replacing workers with technology — whether self-checkout machines, call-center systems, assembly-line technology, or other devices. Over the last four decades, the income gap between more- and less-educated workers has grown significantly; the study finds that automation accounts for more than half of that increase.

“This single one variable … explains 50 to 70 percent of the changes or variation between group inequality from 1980 to about 2016,” Acemoglu says.

The paper, “Tasks, Automation, and the Rise in U.S. Wage Inequality,” is being published in Econometrica. The authors are Acemoglu, who is an Institute Professor at MIT, and Pascual Restrepo PhD ’16, an assistant professor of economics at Boston University.

So much “so-so automation”

Since 1980 in the U.S., inflation-adjusted incomes of those with college and postgraduate degrees have risen substantially, while inflation-adjusted earnings of men without high school degrees have dropped by 15 percent.

How much of this change is due to automation? Growing income inequality could also stem from, among other things, the declining prevalence of labor unions, market concentration begetting a lack of competition for labor, or other types of technological change.

To conduct the study, Acemoglu and Restrepo used U.S. Bureau of Economic Analysis statistics on the extent to which human labor was used in 49 industries from 1987 to 2016, as well as data on machinery and software adopted in that time. The scholars also used data they had previously compiled about the adoption of robots in the U.S. from 1993 to 2014. In previous studies, Acemoglu and Restrepo have found that robots have by themselves replaced a substantial number of workers in the U.S., helped some firms dominate their industries, and contributed to inequality.

At the same time, the scholars used U.S. Census Bureau metrics, including its American Community Survey data, to track worker outcomes during this time for roughly 500 demographic subgroups, broken out by gender, education, age, race and ethnicity, and immigration status, while looking at employment, inflation-adjusted hourly wages, and more, from 1980 to 2016. By examining the links between changes in business practices and changes in labor market outcomes, the study can estimate what impact automation has had on workers.

Ultimately, Acemoglu and Restrepo conclude that the effects have been profound. Since 1980, for instance, they estimate that automation has reduced the wages of men without a high school degree by 8.8 percent and women without a high school degree by 2.3 percent, adjusted for inflation.

A central conceptual point, Acemoglu says, is that automation should be regarded differently from other forms of innovation, with its own distinct effects in workplaces, and not just lumped in as part of a broader trend toward the implementation of technology in everyday life generally.

Consider again those self-checkout kiosks. Acemoglu calls these types of tools “so-so technology,” or “so-so automation,” because of the tradeoffs they contain: Such innovations are good for the corporate bottom line, bad for service-industry employees, and not hugely important in terms of overall productivity gains, the real marker of an innovation that may improve our overall quality of life.

“Technological change that creates or increases industry productivity, or productivity of one type of labor, creates [those] large productivity gains but does not have huge distributional effects,” Acemoglu says. “In contrast, automation creates very large distributional effects and may not have big productivity effects.”

A new perspective on the big picture

The results occupy a distinctive place in the literature on automation and jobs. Some popular accounts of technology have forecast a near-total wipeout of jobs in the future. Alternatively, many scholars have developed a more nuanced picture, in which technology disproportionately benefits highly educated workers but also produces significant complementarities between high-tech tools and labor.

The current study differs, at least by degree, from this latter picture. It presents a starker outlook in which automation reduces earnings power for workers and potentially reduces the extent to which policy solutions (more bargaining power for workers, less market concentration) could mitigate the detrimental effects of automation on wages.

“These are controversial findings in the sense that they imply a much bigger effect for automation than anyone else has thought, and they also imply less explanatory power for other [factors],” Acemoglu says.

Still, he adds, in the effort to identify drivers of income inequality, the study “does not obviate other nontechnological theories completely. Moreover, the pace of automation is often influenced by various institutional factors, including labor’s bargaining power.”

Labor economists say the study is an important addition to the literature on automation, work, and inequality, and should be reckoned with in future discussions of these issues.

“Acemoglu and Restrepo’s paper proposes an elegant new theoretical framework for understanding the potentially complex effects of technical change on the aggregate structure of wages,” says Patrick Kline, a professor of economics at the University of California, Berkeley. “Their empirical finding that automation has been the dominant factor driving U.S. wage dispersion since 1980 is intriguing and seems certain to reignite debate over the relative roles of technical change and labor market institutions in generating wage inequality.”

For their part, in the paper Acemoglu and Restrepo identify multiple directions for future research. These include investigating the reaction over time by both business and labor to the increase in automation; the quantitative effects of technologies that do create jobs; and the industry competition between firms that quickly adopted automation and those that did not.

The research was supported in part by Google, the Hewlett Foundation, Microsoft, the National Science Foundation, Schmidt Sciences, the Sloan Foundation, and the Smith Richardson Foundation.

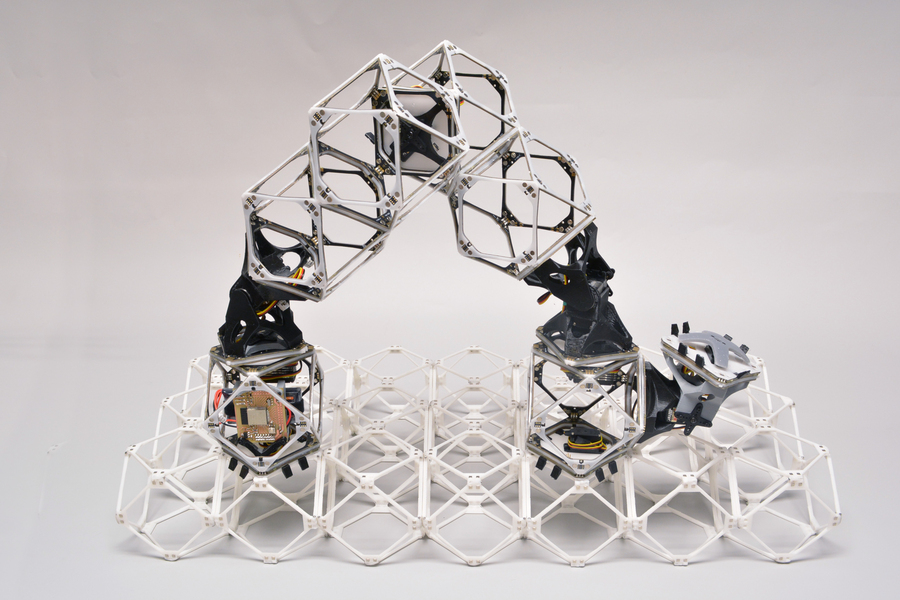

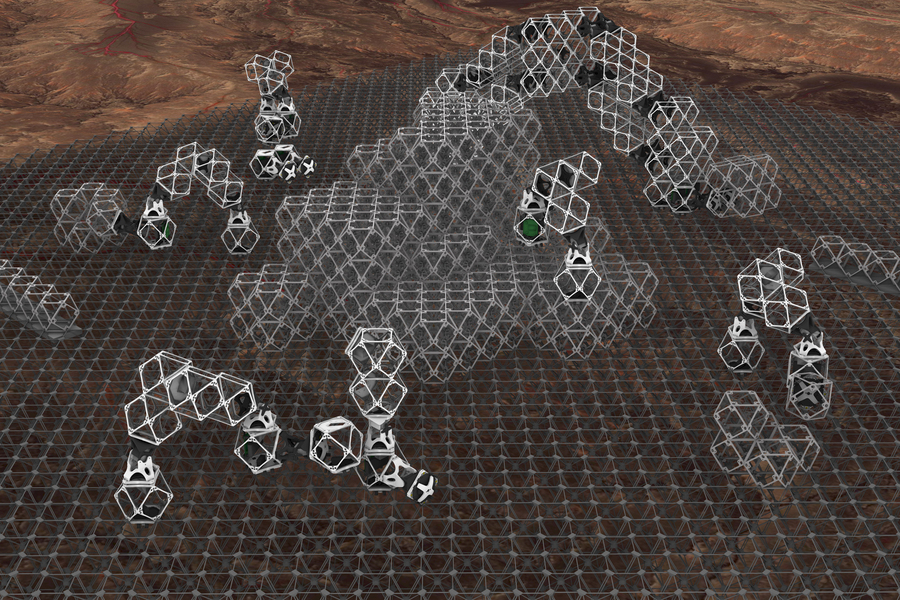

Flocks of assembler robots show potential for making larger structures

Researchers at MIT have made significant steps toward creating robots that could practically and economically assemble nearly anything, including things much larger than themselves, from vehicles to buildings to larger robots. The new system involves large, usable structures built from an array of tiny identical subunits called voxels (the volumetric equivalent of a 2-D pixel). Courtesy of the researchers.

By David L. Chandler

Researchers at MIT have made significant steps toward creating robots that could practically and economically assemble nearly anything, including things much larger than themselves, from vehicles to buildings to larger robots.

The new work, from MIT’s Center for Bits and Atoms (CBA), builds on years of research, including recent studies demonstrating that objects such as a deformable airplane wing and a functional racing car could be assembled from tiny identical lightweight pieces — and that robotic devices could be built to carry out some of this assembly work. Now, the team has shown that both the assembler bots and the components of the structure being built can all be made of the same subunits, and the robots can move independently in large numbers to accomplish large-scale assemblies quickly.

The new work is reported in the journal Nature Communications Engineering, in a paper by CBA doctoral student Amira Abdel-Rahman, Professor and CBA Director Neil Gershenfeld, and three others.

A fully autonomous self-replicating robot assembly system capable of both assembling larger structures, including larger robots, and planning the best construction sequence is still years away, Gershenfeld says. But the new work makes important strides toward that goal, including working out the complex tasks of when to build more robots and how big to make them, as well as how to organize swarms of bots of different sizes to build a structure efficiently without crashing into each other.

As in previous experiments, the new system involves large, usable structures built from an array of tiny identical subunits called voxels (the volumetric equivalent of a 2-D pixel). But while earlier voxels were purely mechanical structural pieces, the team has now developed complex voxels that each can carry both power and data from one unit to the next. This could enable the building of structures that can not only bear loads but also carry out work, such as lifting, moving and manipulating materials — including the voxels themselves.

“When we’re building these structures, you have to build in intelligence,” Gershenfeld says. While earlier versions of assembler bots were connected by bundles of wires to their power source and control systems, “what emerged was the idea of structural electronics — of making voxels that transmit power and data as well as force.” Looking at the new system in operation, he points out, “There’s no wires. There’s just the structure.”

The robots themselves consist of a string of several voxels joined end-to-end. These can grab another voxel using attachment points on one end, then move inchworm-like to the desired position, where the voxel can be attached to the growing structure and released there.

Gershenfeld explains that while the earlier system demonstrated by members of his group could in principle build arbitrarily large structures, as the size of those structures reached a certain point in relation to the size of the assembler robot, the process would become increasingly inefficient because of the ever-longer paths each bot would have to travel to bring each piece to its destination. At that point, with the new system, the bots could decide it was time to build a larger version of themselves that could reach longer distances and reduce the travel time. An even bigger structure might require yet another such step, with the new larger robots creating yet larger ones, while parts of a structure that include lots of fine detail may require more of the smallest robots.

Credit: Amira Abdel-Rahman/MIT Center for Bits and Atoms

As these robotic devices work on assembling something, Abdel-Rahman says, they face choices at every step along the way: “It could build a structure, or it could build another robot of the same size, or it could build a bigger robot.” Part of the work the researchers have been focusing on is creating the algorithms for such decision-making.
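The choice between continuing to build and first building a bigger robot can be illustrated with a toy cost model. The sketch below is not the researchers' algorithm; the function names, the `stride` and `scale` parameters, the round-trip step count, and the `build_cost` figure are all assumptions made for illustration.

```python
import math


def trips_cost(distances, stride):
    """Total inchworm steps to deliver every part, counting a round trip per part.

    `distances` are path lengths in voxels; `stride` is how many voxels the
    robot advances per step (hypothetical model: a longer robot strides further).
    """
    return sum(2 * math.ceil(d / stride) for d in distances)


def should_build_larger(distances, stride, scale=2, build_cost=0):
    """Return True if building a robot `scale` times longer pays for itself.

    `build_cost` is the assumed number of steps spent assembling the larger
    robot before it can start delivering parts.
    """
    small = trips_cost(distances, stride)
    large = build_cost + trips_cost(distances, stride * scale)
    return large < small


# Long delivery paths: the larger robot wins despite its build cost.
print(should_build_larger([10, 20, 30], stride=1, scale=2, build_cost=40))
# Short delivery paths: building the larger robot is not worth it.
print(should_build_larger([2, 3], stride=1, scale=2, build_cost=40))
```

In this toy model the larger robot only pays off once the remaining delivery paths are long relative to the small robot's stride, which mirrors the trade-off Gershenfeld describes.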

“For example, if you want to build a cone or a half-sphere,” she says, “how do you start the path planning, and how do you divide this shape” into different areas that different bots can work on? The software they developed allows someone to input a shape and get an output that shows where to place the first block, and each one after that, based on the distances that need to be traversed.
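One simple way to turn a target shape into a placement sequence based on distance is a breadth-first search from the first block. The sketch below is an assumption-laden illustration, not the team's software: the function name `build_order`, the integer voxel coordinates, and the choice of breadth-first ordering are all hypothetical.

```python
from collections import deque

# The six face-adjacent neighbor offsets on a cubic voxel grid.
STEPS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def build_order(voxels, seed):
    """Order the voxels of a target shape by walking distance from `seed`.

    Distance is measured through the shape itself, so every voxel in the
    returned list touches a voxel placed before it. Voxels that cannot be
    reached from the seed are left out.
    """
    voxels = set(voxels)
    if seed not in voxels:
        raise ValueError("seed must be part of the target shape")
    dist = {seed: 0}
    queue = deque([seed])
    while queue:
        x, y, z = queue.popleft()
        for dx, dy, dz in STEPS:
            nxt = (x + dx, y + dy, z + dz)
            if nxt in voxels and nxt not in dist:
                dist[nxt] = dist[(x, y, z)] + 1
                queue.append(nxt)
    # Sort by distance first, then by coordinate for a deterministic order.
    return sorted(dist, key=lambda v: (dist[v], v))


shape = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (0, 1, 0)]
print(build_order(shape, seed=(0, 0, 0)))
# → [(0, 0, 0), (0, 1, 0), (1, 0, 0), (2, 0, 0)]
```

Dividing the shape among several bots would need an additional partitioning step, which this sketch leaves out.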

There are thousands of papers published on route-planning for robots, Gershenfeld says. “But the step after that, of the robot having to make the decision to build another robot or a different kind of robot — that’s new. There’s really nothing prior on that.”

While the experimental system can carry out the assembly and includes the power and data links, in the current versions the connectors between the tiny subunits are not strong enough to bear the necessary loads. The team, including graduate student Miana Smith, is now focusing on developing stronger connectors. “These robots can walk and can place parts,” Gershenfeld says, “but we are almost — but not quite — at the point where one of these robots makes another one and it walks away. And that’s down to fine-tuning of things, like the force of actuators and the strength of joints. … But it’s far enough along that these are the parts that will lead to it.”

Ultimately, such systems might be used to construct a wide variety of large, high-value structures. For example, currently the way airplanes are built involves huge factories with gantries much larger than the components they build, and then “when you make a jumbo jet, you need jumbo jets to carry the parts of the jumbo jet to make it,” Gershenfeld says. With a system like this built up from tiny components assembled by tiny robots, “The final assembly of the airplane is the only assembly.”

Similarly, in producing a new car, “you can spend a year on tooling” before the first car actually gets built, he says. The new system would bypass that whole process. Such potential efficiencies are why Gershenfeld and his students have been working closely with car companies, aviation companies, and NASA. But even the relatively low-tech building construction industry could benefit.

While there has been increasing interest in 3-D-printed houses, today those require printing machinery as large as or larger than the house being built. Again, the potential for such structures to instead be assembled by swarms of tiny robots could provide benefits. And the Defense Advanced Research Projects Agency is also interested in the work for the possibility of building structures for coastal protection against erosion and sea level rise.

The new study shows that both the assembler bots and the components of the structure being built can all be made of the same subunits, and the robots can move independently in large numbers to accomplish large-scale assemblies quickly. Courtesy of the researchers.

Aaron Becker, an associate professor of electrical and computer engineering at the University of Houston, who was not associated with this research, calls this paper “a home run — [offering] an innovative hardware system, a new way to think about scaling a swarm, and rigorous algorithms.”

Becker adds: “This paper examines a critical area of reconfigurable systems: how to quickly scale up a robotic workforce and use it to efficiently assemble materials into a desired structure. … This is the first work I’ve seen that attacks the problem from a radically new perspective — using a raw set of robot parts to build a suite of robots whose sizes are optimized to build the desired structure (and other robots) as fast as possible.”

The research team also included MIT-CBA student Benjamin Jenett and Christopher Cameron, who is now at the U.S. Army Research Laboratory. The work was supported by NASA, the U.S. Army Research Laboratory, and CBA consortia funding.