Spiders are truly amazing creatures. They have evolved over more than 200 million years and can be found in almost every corner of our planet; they are among the most successful animals. No less impressive are their webs: highly intricate structures that evolution has optimised over approximately 100 million years for the ultimate purpose of catching prey.

Interestingly, the closer you look at spiders’ webs, the more details you can observe, and the structures turn out to be much more complicated than one would expect from a simple snare. They are made of several different types of silk, use water droplets to maintain tension [4], and the structure is highly dynamic [4]. Spiders’ webs have far more morphological complexity than would be needed simply to catch flies.

Since nature rarely wastes resources, the question arises: why are spiders’ webs so complex? Might they have other functions besides being a simple trap? One of the most interesting answers to this question is that spiders might use their webs as computational devices.

How does the spider use the web as a computer?

Although most spiders have many eyes (the majority have eight), many species have poor eyesight. To understand what is going on in their webs, they use mechanoreceptors in their legs (called lyriform organs) to “listen” to vibrations in the web. Different species prefer different places to sit and observe: some sit right at the centre of the web, while others prefer to sit outside the web itself and listen to a single thread. It is quite remarkable that, based only on the information arriving through this single thread, the spider seems able to deduce what is happening in its web and where.

For example, a spider needs to know whether prey, such as a fly, is entangled in its web, or whether the vibrations come from a dangerous insect, such as a wasp, that it should avoid. The web is also used to communicate with potential mates, and the spider even excites the web itself and listens to the echo. This might be a way for the spider to check whether threads are broken or whether the tension in the web needs to be increased.

From a computational point of view, the spider needs to classify different vibration patterns (e.g., prey vs predator vs mate) and to locate their origin (i.e., where the vibration started).

One way to understand how a spider’s web could carry out this computational function is the concept of morphological computation [1, 2, 3]. The term captures the observation that mechanical structures throughout nature carry out useful computations. For example, they help to stabilise running, facilitate sensory data processing, and help animals and plants to interact with complex and unpredictable environments.

One could say that computation is outsourced from the brain to the physical body.

From this point of view, the spider’s web can be seen as a nonlinear dynamic filter: a kind of pre-processing unit that makes the vibration signals easier for the animal to interpret. The web’s dynamic properties and its complex morphological structure mix vibration signals in a nonlinear fashion. It even has some memory, which is easy to see by pinching the web: it keeps vibrating for a few moments after the impact, echoing the original input. The web can also damp unwanted frequencies, which is crucial for getting rid of noise. At the same time, it might even amplify signals at other frequencies that carry more relevant information about the events taking place on the web.
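
To make the idea of a “nonlinear filter with memory” more concrete, here is a minimal Python sketch that treats a single thread as one nonlinear mass-spring-damper (a Duffing-type oscillator with a cubic stiffening term). This is an illustrative assumption, not a model of real silk: the helper names `simulate_thread`, `peak` and `sine` and every parameter value (mass, stiffnesses, damping, time step, forces) are hypothetical and chosen only so the effects are easy to see. It reproduces two of the properties described above: after a brief “pinch” the thread keeps ringing and the echo slowly fades (memory), and a drive near the thread’s resonance produces a much larger response than an equally strong drive far above it (frequency filtering).

```python
import math


def simulate_thread(force, dt=1e-3, m=1.0, k=100.0, k3=100.0, c=1.0):
    """Toy model of one silk thread (hypothetical parameters): a single mass on
    a nonlinear spring (linear + cubic stiffening, i.e. Duffing-type) with
    viscous damping, driven by an external force signal sampled every dt."""
    x, v, xs = 0.0, 0.0, []
    for f in force:
        a = (f - k * x - k3 * x ** 3 - c * v) / m  # spring, stiffening, damping
        v += a * dt                                # semi-implicit Euler step
        x += v * dt
        xs.append(x)
    return xs


def peak(xs, t0, t1, dt=1e-3):
    """Largest absolute displacement between times t0 and t1 (in seconds)."""
    return max(abs(x) for x in xs[int(t0 / dt):int(t1 / dt)])


dt, n = 1e-3, 30_000  # 30 s of simulated time

# Memory: a 10 ms "pinch", then no input at all. The thread keeps ringing,
# echoing the impact, and the echo fades over time.
pinch = [100.0 if i < 10 else 0.0 for i in range(n)]
ring = simulate_thread(pinch)
early, late = peak(ring, 1.0, 2.0), peak(ring, 15.0, 20.0)


def sine(w):
    """Unit-amplitude sinusoidal force at angular frequency w (rad/s)."""
    return [math.sin(w * i * dt) for i in range(n)]


# Filtering: equally strong drives near resonance (10 rad/s) and far above it.
near = peak(simulate_thread(sine(10.0)), 20.0, 30.0)
far = peak(simulate_thread(sine(50.0)), 20.0, 30.0)
print(f"echo: {early:.4f} -> {late:.6f}; near vs far resonance: {near:.4f} vs {far:.6f}")
```

With these made-up parameters the resonance sits at about sqrt(k/m) = 10 rad/s, and the damping coefficient `c` sets how long the echo lasts. A real web is a network of many coupled threads of different silks, so its response would be far richer than this single oscillator.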

These are all useful computations, and they make it easier for the spider to “read” and understand the vibration patterns. As a result, the animal’s brain has less work to do and can concentrate on other tasks. In effect, the spider seems to devolve computation to the web. This might also be why spiders tend their webs so intensively: they constantly monitor them, adjust the tension when it changes (e.g. due to a change in humidity), and repair a thread as soon as it breaks.
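
In the morphological computation literature, this division of labour is often modelled as a network of nonlinear mass-spring-damper elements (the body) whose state is read out by a simple, trainable linear combination (the “brain”), as in the compliant-body models of [1]. The sketch below follows that idea under clearly stated assumptions: a hypothetical chain of five masses stands in for a web, it is driven by a random force at one end, and a least-squares linear readout of the masses’ displacements is trained to recall the input force from 50 ms earlier, a task that requires memory. All names (`simulate_chain`, `fit_linear_readout`) and parameter values are illustrative; the code only checks whether the readout beats a constant baseline, not how well a real web would perform.

```python
import random


def simulate_chain(u, n=5, dt=1e-3, m=1.0, k=100.0, k3=1000.0, c=2.0):
    """Hypothetical stand-in for a web: n masses in a row, joined by nonlinear
    springs (linear + cubic term) and damped, with both ends anchored.
    The input force u[t] acts on mass 0. Returns the displacements of all
    masses at every time step: the 'body state' the readout is allowed to see."""
    x = [0.0] * n
    v = [0.0] * n
    states = []
    for f in u:
        acc = []
        for i in range(n):
            left = x[i - 1] if i > 0 else 0.0       # left anchor for mass 0
            right = x[i + 1] if i < n - 1 else 0.0  # right anchor for last mass
            force = f if i == 0 else 0.0
            for d in (left - x[i], right - x[i]):
                force += k * d + k3 * d ** 3        # nonlinear spring to each neighbour
            force -= c * v[i]                        # viscous damping
            acc.append(force / m)
        for i in range(n):                           # semi-implicit Euler step
            v[i] += acc[i] * dt
            x[i] += v[i] * dt
        states.append(list(x))
    return states


def fit_linear_readout(features, targets, ridge=1e-6):
    """Least-squares weights via the normal equations (with a tiny ridge term
    for numerical safety), solved by Gaussian elimination with pivoting."""
    d = len(features[0])
    a = [[0.0] * d for _ in range(d)]
    b = [0.0] * d
    for phi, y in zip(features, targets):
        for i in range(d):
            b[i] += phi[i] * y
            for j in range(d):
                a[i][j] += phi[i] * phi[j]
    for i in range(d):
        a[i][i] += ridge
    for col in range(d):
        piv = max(range(col, d), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, d):
            factor = a[r][col] / a[col][col]
            for j in range(col, d):
                a[r][j] -= factor * a[col][j]
            b[r] -= factor * b[col]
    w = [0.0] * d
    for i in reversed(range(d)):
        w[i] = (b[i] - sum(a[i][j] * w[j] for j in range(i + 1, d))) / a[i][i]
    return w


# Random piecewise-constant force: 50 levels, each held for 200 steps (0.2 s).
rng = random.Random(0)
levels = [10.0 * rng.uniform(-1.0, 1.0) for _ in range(50)]
u = [level for level in levels for _ in range(200)]
states = simulate_chain(u)

# Task: from the current body state alone, recall the input 50 steps ago.
delay, washout = 50, 200
feats = [[1.0] + states[t] for t in range(washout, len(u))]  # bias + displacements
ys = [u[t - delay] for t in range(washout, len(u))]
w = fit_linear_readout(feats, ys)

pred = [sum(wi * pi for wi, pi in zip(w, phi)) for phi in feats]
readout_mse = sum((p - y) ** 2 for p, y in zip(pred, ys)) / len(ys)
mean_y = sum(ys) / len(ys)
baseline_mse = sum((mean_y - y) ** 2 for y in ys) / len(ys)
print(f"readout MSE {readout_mse:.3f} vs constant baseline {baseline_mse:.3f}")
```

The point of this design is that the readout itself has neither memory nor nonlinearity: it only sees the instantaneous displacements, so any information it recovers about past inputs must be carried by the dynamics of the “body”. Reference [1] gives a theoretical treatment of what such mass-spring networks can compute with only a linear readout.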

From spider webs to sensors

People have speculated for a while that spider webs might have additional functions. A great article discussing this is “The Thoughts of a Spiderweb”.

However, nobody has yet systematically investigated the actual computational capabilities of the web. This is about to change. We recently started a Leverhulme Trust Research project that will investigate naturally spun webs of different spider species to understand what kind of computation might take place in these structures and how. Beyond uncovering the underlying computational principles, the project will also develop sensor technology based on morphological computation to measure flow and vibrations.

The project combines our research expertise in Morphological Computation at the University of Bristol and the expertise on spider webs at the Silk Group in Oxford.

In our experimental setups we will use solenoids and laser Doppler vibrometers to measure vibrations in the web with very high precision. The goal is to understand how computation is carried out: we will systematically investigate how filtering, memory, and signal integration can arise in such structures. In parallel, we will develop a general simulation environment for vibrating structures and use it to ask specific questions about how shapes and materials other than spider webs and silk can help to carry out computations. In addition, we will develop real prototypes of vibration and flow sensors inspired by these findings. They will very likely look different from spider webs and use various types of materials.

Such sensors could be used in various applications. For example, flow sensors based on morphological computation could detect anomalies in the flow through tubes. Vibration sensors placed at strategic points on buildings could detect earthquakes or structural failure. Highly dynamic machines, such as wind turbines, could also be monitored by such sensors to predict failures.

Ultimately, the project will not only provide a new technology for building sensors; we also hope it will give us a fundamental understanding of how spiders use their webs for computation.

References

[1] Hauser, H.; Ijspeert, A.; Füchslin, R.; Pfeifer, R. & Maass, W. “Towards a theoretical foundation for morphological computation with compliant bodies”. Biological Cybernetics, Springer Berlin / Heidelberg, 2011, 105, 355-370.

[2] Hauser, H.; Ijspeert, A.; Füchslin, R.; Pfeifer, R. & Maass, W. “The role of feedback in morphological computation with compliant bodies”. Biological Cybernetics, Springer Berlin / Heidelberg, 2012, 106, 595-613.

[3] Hauser, H.; Füchslin, R.M. & Nakajima, K. “Morphological Computation – The Physical Body as a Computational Resource”. In: Opinions and Outlooks on Morphological Computation, editors Hauser, H.; Füchslin, R.M. & Pfeifer, R., Chapter 20, pp. 226-244, 2014, ISBN 978-3-033-04515-6.

[4] Mortimer, B.; Gordon, S.D.; Holland, C.; Siviour, C.R.; Vollrath, F. & Windmill, J.F.C. “The Speed of Sound in Silk: Linking Material Performance to Biological Function”. Advanced Materials, 2014, 26, 5179-5183. doi:10.1002/adma.201401027

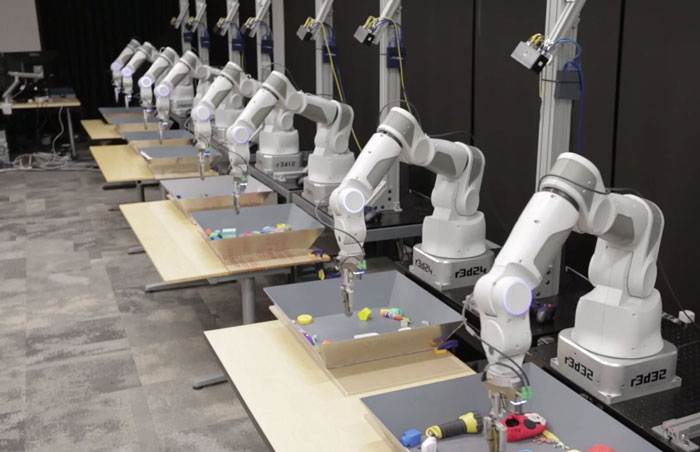

Sergey Levine is an assistant professor at UC Berkeley. His research focuses on robotics and machine learning. In his PhD thesis, he developed a novel guided policy search algorithm for learning complex neural network control policies, which was later applied to enable a range of robotic tasks, including end-to-end training of policies for perception and control. He has also developed algorithms for learning from demonstration, inverse reinforcement learning, efficient training of stochastic neural networks, computer vision, and data-driven character animation.

The second panel featured talks on sector-based solutions, starting with the International Federation of the Red Cross (IFRC). The Federation (Aarathi) spoke about their joint project with WeRobotics, looking at cross-sectoral needs for various robotics solutions in the South Pacific. IFRC is exploring the possibility of launching a South Pacific Flying Labs with a strong focus on women and girls. Pix4D (Lorenzo) addressed the role of aerial robotics in agriculture, giving concrete examples of successful applications while providing guidance to our Flying Labs Coordinators.

Panel number three addressed the transformation of transportation. UNICEF (Judith) highlighted the field tests they have been carrying out in Malawi, using cargo robotics to transport HIV samples in order to accelerate HIV testing and thus treatment. UNICEF has also launched an air corridor in Malawi to enable further field-testing of flying robots. MSF (Oriol) shared their approach to cargo delivery using aerial robotics. They shared examples from Papua New Guinea