Kyrgyzstan to King’s Cross: the star baker cooking up code

Dynamic language understanding: adaptation to new knowledge in parametric and semi-parametric models

Interactive design pipeline enables anyone to create their own customized robotic hand

The art of making robots – #ICRA2022 Day 2 interviews and video digest

Every year, ICRA gathers an astonishing number of robot makers. With a quick look at the exhibitors, one can already perceive the immense creativity and inventiveness that creators put in their robots. To my eyes, creating a robot is an art: how does a robot should look like? What would it be good for? Should we go for legs or wheels? There are too many questions!

Every robot maker probably has a different answer to all these questions, and that is great. We are in the infancy of the consumer robotics industry and that lets us be open to all possibilities. It is an exciting moment to see how the large and diverse ecosystem of robots develops. Many companies are already driving this evolution of robotics systems, often committed to make better, safer, and more efficient robots.

This is the case of Clearpath Robotics, producers of state-of-the-art multi-purpose mobile robot platforms, and PAL Robotics, producers of robots that aim to enhance people’s quality of life. I met Bryan Webb, President of Clearpath, and Carlos Vivas, Business Manager of PAL Robotics, and I asked them about their thoughts on the art of making robots. Below some excerpts from their answers.

Bryan Webb

Q1. What comes to your mind if I say that making robots is an art?

People need to be able to connect with the robots. There need to be ergonomic and aesthetic features that make it easy for people to use and connect with a robot. Some people call that art, because you cannot reduce it very well to a science.

Q2. How is the process that gets you from ‘I want to make a robot’ to ‘I have a robot’ and, later, to ‘I am selling a robot’?

We talk a lot with costumers about their needs. That is what drives our innovation. There are two streams that we look at there: how can we improve our existing products and make them better?; and, are there any gaps in the market where people are trying to do research and where there is no solution yet?

Q3. What does it mean for you and for your company to be in ICRA 2022?

It is so important. It is just very good to be able to connect again. One just gets a lot of information by sharing and talking with costumers and other exhibitors.

Carlos Vivas

Q1. What comes to your mind if I say that making robots is an art?

Making robots can be an art. There are a lot of processes that require inspiration, creativity, and thrive, but they also need functionality. It is a nice mix. Probably, it is an art to make it work all together.

Q2. How is the process that gets you from ‘I want to make a robot’ to ‘I have a robot’ and, later, to ‘I am selling a robot’?

For us, everything starts because we want to solve a problem, or we want to help a community. After that, we do it so that it is useful for the people.

Q3. What does it mean for you and for your company to be in ICRA 2022?

This year is very special because, after the pandemic, is great to see a lot of good friends again. We love being part of the robotics research community. I am happy to be here.

Finally, do not miss the most funky robots in #ICRA2022.

Tiny robotic crab is smallest-ever remote-controlled walking robot

Efforts to deliver the first drone-based, mobile quantum network

Automate 2022 Product Preview

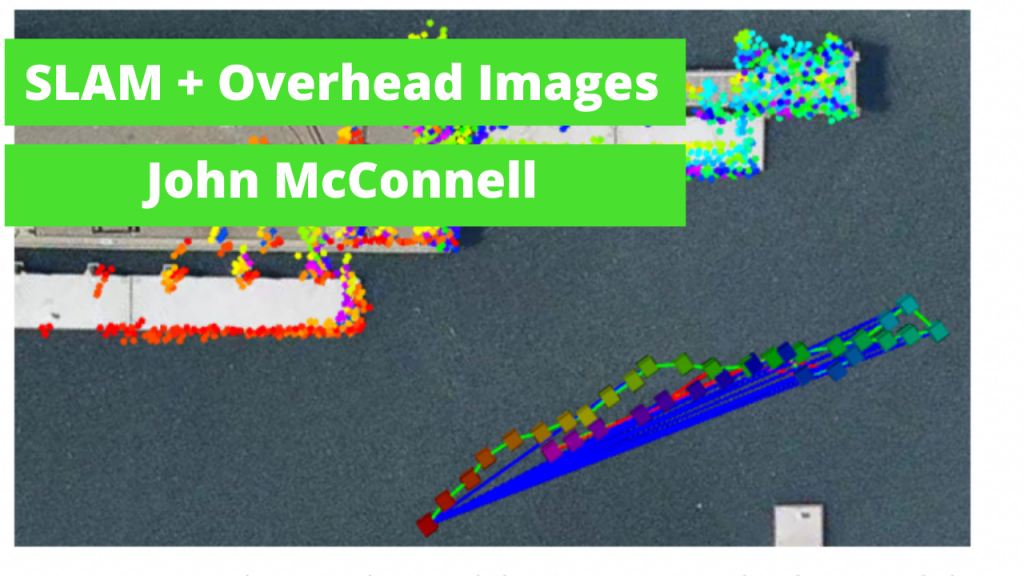

ep.355: SLAM fused with Satellite Imagery (ICRA 2022), with John McConnell

Underwater Autonomous Vehicles face challenging environments where GPS Navigation is rarely possible. John McConnell discusses his research, presented at ICRA 2022, into fusing overhead imagery with traditional SLAM algorithms. This research results in a more robust localization and mapping, with reduced drift commonly seen in SLAM algorithms.

Satellite imagery can be obtained for free or low cost through Google or Mapbox, creating an easily deployable framework for companies in industry to implement.

Links

- Download mp3 (12.2 MB)

- Sonar SLAM opensource repo

- Research Paper

- Subscribe to Robohub using iTunes, RSS, or Spotify

- Support us on Patreon

#ICRA2022, the great robotics scicommer – Day 1 video digest

The IEEE International Conference on Automation and Robotics, ICRA, is the itinerant flagship conference of the IEEE Robotics and Automation Society, RAS. In its 39th edition, ICRA is being held in the Pennsylvania Convention Center, in Philadelphia, PA, USA, between May 23 and 27, 2022.

ICRA started just after the birth of the IEEE Robotics and Automation Society (formerly IEEE Robotics and Automation Council) in 1983. The first edition was held in Atlanta, GA, USA, in 1984. During its first years, the conference showed the growing interest of researchers and industry leaders in the emergent field of robotics. The 1986 edition, San Francisco, CA, USA, gathered 800 attendees from 20 countries. In 2022, and the conference has grown to be one of the biggest robotics events.

The numbers of ICRA 2022 speak for themselves: over 7.000 participants (4.460 in-person); 95 countries; 3.344 paper submissions and 1.498 paper presentations; 12 keynote speakers; 96 partners, sponsors and exhibitors; 10 major competitions; 6 forums; 56 workshops; 10 networking events; and a group of 101 amazing student volunteers that help to coordinate this reunion.

The talented people that share robotics advances in ICRA make this conference one of the greatest Robotics & Science Communication events. In its extension, the conference allows the attendees to get a taste of a large and diverse range of robotics fields: localization and navigation; assistive robotics; robotics learning; collective construction; you name it, ICRA has it! Unfortunately, it can only be a taste—it is practically impossible to follow all the events.

For the academics, Monday 23rd and Friday 27th have dedicated and specialized satellite workshops organized within the framework of the conference. Besides the Keynotes, the main track of the conference, between May 24th and May 26th, is divided into topic sessions that are followed by poster presentations in which speakers can address possible questions. On the same days, the exhibitors are showcasing their new technologies and the trends in the robotics market.

For the most enthusiastic like me, and because we want to see robots in action, there are robotics competitions during the entire week. Also, the technical tours to the Signh Center for Nanotechnology, Penn Medicine, and the Penn GRASP Lab are a great opportunity to see robotics demos and presentations from local researchers. The art and robotics exhibitions scheduled between Monday 23rd and Wednesday 25th might give you a different perspective on how we can interact with a robot.

Quite important!: the virtual access to the conference is open to everyone at no cost! And also, if you want to explore a little and interact with researchers in Philly, a group of telepresence robots are available for curious virtual attendees.

If you have little time in your agenda but you still wish to follow the highlights of the conference, follow the official social media accounts (Twitter, Facebook, LinkedIn), or join the Twitter List of the Sci Communicators that will cover this amazing robotics festival.

Dan O’Mara: Turning Robotics Education on its Head | Sense Think Act Podcast #19

In this episode, Audrow Nash speaks to Dan O’Mara, who is the founder and COO of Circuit Launch and Mechlabs. Circuit Launch is a space for hardware entrepreneurs to work in Oakland, California, and Mechlabs is a project-based course to learn robotics. This interview is mostly about Mechlabs, but talks about the origins of Circuit Launch, including how it is not a maker or coworking space and its business model. For Mechlabs, we talk about several of its aspects that make it different than a university education in robotics, including how there are mentors not instructors, how projects are scoped, and how people are invited to work on what is most interesting to them. We also talk about the future of Mechlabs and how it fits with current universities.

Episode Links

Podcast info

Aerial imaging technique improves ability to detect and track moving targets through thick foliage

The IEEE International Conference on Robotics and Automation (ICRA) kicks off with the largest in-person participation and number of represented countries ever

Photo credit: Wise Owl Multimedia

The IEEE International Conference on Robotics and Automation (ICRA), taking place simultaneously at the Pennsylvania Convention Center in Philadelphia and virtually, has just kicked off. ICRA 2022 brings together the world’s top researchers and most important companies to share ideas and advances in the fields of robotics and automation.

This is the first time the ICRA community is reunited after the pandemic, resulting in record breaking attendance with over 7,000 registrations and 95 countries represented. As the ICRA 2022 Co-Chair Vijay Kumar (University of Pennsylvania, USA) states, “we could not be happier to host the largest robotics conference in the world in Philadelphia, and the beginning of the re-emergence from the pandemic after a three year hiatus. We are back!”

Many important developments in robotics and automation have historically been first presented at ICRA, and in its 39th year, ICRA 2022 promises to take this trend one step further. As the practical and socio-economic impact of our field continues to expand, robotics and automation are increasingly taking the center stage in our lives and will play an important role in the Future of Work and Society, the theme of this year’s conference. Indeed, the Future of Work Forum Session being held on Thursday May 26th will specifically address the impact of automation on the future of employment, featuring panelists Jeff Burnstein (A3), Erik Brynjolfssonn (Stanford), Moshe Vardi (Rice University), Michael Lotito (Littler), Bernd Liepert (EuRobotics), Cecilia Laschi (NUS), and Ioana Marinescu (University of Pennsylvania). Five more Forums will be happening from Tuesday to Thursday, including an Industry Forum on Tuesday May 24th, or a Startup Forum on Wednesday May 25th.

The conference will also feature Plenaries and Keynotes by world-renowned roboticists on topics such as Robot Ethics, Legged Robots for Industrial and Search & Rescue Applications, Robot Farming, Autonomous Logistics or Smart Sensing, as well as 1500 Technical Talks on the state-of-the-art in robotics. A total of 39 researchers have been nominated to the 13 awards that ICRA 2022 is giving on Thursday, for their outstanding research contributions in categories such as Automation, Coordination, Interaction, Learning, Locomotion, Manipulation, Navigation or Planning, among others. As the ICRA 2022 Program Chair Hadas Kress-Gazit (Cornell University, USA) comments, “we are so excited to see the latest and greatest in robotics research and meet old and new friends!”.

Furthermore, a robot exhibition hall has been prepared with nearly 100 confirmed Exhibitors offering robot displays and demos from companies like Dyson, Motional, Built Robotics, NVIDIA, Technology Innovation Institute, Boston Dynamics, Pal-Robotics, KUKA Robotics, Toyota Research Institute, Tesla or Waymo, among others.

Competitions are also a big part of ICRA 2022. A total of 10 exciting Competitions will be taking place from Monday May 23rd to Friday May 27th, on the following topics: Autonomous Ground & Aerial Robots Navigation, Localization and Mapping, Robot Manipulation, Autonomous Racing, Roboethics, and LEGO League for 12th grade students.

To complete the program of the largest worldwide robotics conference, there will also be several Industry Tech Huddles led by industry experts, Technical Tours to the Singh Center for Nanotechnology, Penn Medicine and the General Robotics, Automation, Sensing and Perception (GRASP) Laboratory, and several Networking Events.

ICRA 2022 is also proud to partner with the RAD Lab and several Philadelphia-based art galleries to offer a central space for art in its program. Building on the previous ICRA robotic art programs, this year’s installment explores aesthetic and creative influences on robot motion through interactive, expressive, and meditative robotic art installations. The exhibition and the associated workshop will provide new perspectives on imagining new technology futures.

“This is a very special conference for the majority of ICRA attendees,” ICRA 2022 General Co-Chair George J. Pappas (University of Pennsylvania, USA) comments. “The reason? 64% of all registrants and 56% of all in-person registrants are attending ICRA for the very first time! Given this influx of fantastic talent to our field, the future of ICRA is as bright as it has ever been.”

Not everyone can attend ICRA in person. That’s why the ICRA Organizing Committee, the IEEE Robotics and Automation Society and OhmniLabs have teamed up to offer access to the OhmiLab’s telepresence robots that will be on site. Three OhmniBots will be in the main exhibition hall (with all the other robots) from opening to closing on Tuesday May 24th, Wednesday May 25th and Thursday May 26th, with time slots aligning with Poster Sessions, networking breaks and Expo Hall hours.

No matter where you are, we hope you enjoy the conference either in person or virtually!

We would like to thank ICRA 2022 Partners, which have also supported us in record numbers this year.