Education and healthcare are set for a high-tech boost

Robotics and AI are poised to fundamentally change the future of healthcare. © Elnur, Shutterstock

In a Swiss classroom, two children are engrossed in navigating an intricate maze with the help of a small, rather cute, robot. The interaction is easy and playful – it is also providing researchers with valuable information on how children learn and the conditions in which information is most effectively absorbed.

Rapid improvements in intuitive human-machine interactions (HMI) are poised to kick off big changes in society. In particular, two European research projects give a sense of how these trends could influence two core areas: education and healthcare.

Child learning

In EU-funded ANIMATAS, a cross-border network of universities and industrial partners is exploring if, and how, robots and artificial intelligence (AI) can help us learn more effectively. One idea is around making mistakes: children can learn by spotting and correcting others’ errors – and having a robot make them might be useful.

‘A teacher can’t make mistakes,’ said project coordinator Professor Mohamed Chetouani of the Sorbonne University in Paris, France. ‘But a robot? They could. And mistakes are very useful in education.’

According to Prof Chetouani, it is simplistic to ask questions like ‘can robots help children learn better’ because learning is such a complex concept. He said that, for example, any automatic assumption that pupils who concentrate on lessons are learning more isn’t necessarily true.

That’s why, from the start, the project set out to ask smarter, more specific questions that would help identify just how robots could be useful in classrooms.

ANIMATAS is made up of sub-projects each led by an early-stage researcher. One of the sub-project goals was to better understand the learning process in children and analyse what types of interaction best help them to retain information.

“Mistakes are very useful in education.”

– Professor Mohamed Chetouani, ANIMATAS

Robot roles

An experiment set up to investigate this question invited children to team up with the aptly named QTRobot to find the most efficient route around a map.

During the exercise, the robot reacts interactively with the children to offer tips and suggestions. It is also carefully measuring various indicators in the children’s body language such as eye contact and direction, tone of voice and facial expression.

As hoped, researchers did indeed find that certain patterns of interaction corresponded with improved learning. With this information, they will be better able to evaluate how well children are engaging with educational material and, in the longer term, develop strategies to maximise such engagement – thereby boosting learning potential.

Future steps will include looking at how to adapt this robot-enhanced learning to children with special educational needs.

‘We believe that it could be really important in this context,’ said Prof Chetouani.

Help at hand

Aki Härmä, a researcher at Philips Research Eindhoven in the Netherlands, believes that robotics and AI are going to fundamentally change healthcare.

“Healthcare can be 24/7.”

– Aki Härmä, PhilHumans

In the EU-funded PhilHumans project that he is coordinating, early-stage researchers from five universities across Europe work with two commercial partners – R2M Solution in Spain and Philips Electronics in the Netherlands – to learn how innovative technologies can improve people’s health.

AI makes new services possible and ‘it means healthcare can be 24/7,’ Härmä said.

He points to the vast potential for technology to help people manage their own health from home: apps able to track a person’s mental and physical state and spot problems early on, chatbots that can give advice and propose diagnoses, and algorithms for robots to navigate safely around abodes.

Empathetic bots

The project, which started in 2019 and will run until late 2023, is made up of eight sub-projects, each led by a doctoral student.

One sub-project, supervised by Philips researcher Rim Helaoui, is looking at how the specific skills of mental-health practitioners – such as empathy and open-ended questioning – may be encoded into an AI-powered chatbot. This could mean that people with mental-health conditions would be able to access relevant support from home, potentially at a lower cost.

The team quickly realised that replicating the full range of psychotherapeutic skills in a chatbot would involve challenges that could not be solved all at once. It focused instead on one key challenge: how to generate a bot that displayed empathy.

‘This is the essential first step to get people to feel they can open up and share,’ said Helaoui.

As a starting point, the team produced an algorithm able to respond with the appropriate tone and content to convey empathy. The technology has yet to be converted into an app or product, but provides a building block that could be used in many different applications.

Rapid advances

PhilHumans is also exploring other possibilities for the application of AI in healthcare. An algorithm is being developed that can use ‘camera vision’ to understand the tasks that a person is trying to carry out and analyse the surrounding environment.

The ultimate goal would be to use this algorithm in a home-assistant robot to help people with cognitive decline complete everyday tasks successfully.

One thing that has helped the project overall, said Härmä, is the speed with which other organisations have been developing natural language processors with impressive capabilities, like GPT-3 from OpenAI. The project expects to be able to harness the unexpectedly rapid improvements in these and other areas to advance faster.

Both ANIMATAS and PhilHumans are actively working on expanding the limits of intuitive HMI.

In doing so, they have provided a valuable training ground for young researchers and given them important exposure to the commercial world. Overall, the two projects are ensuring that a new generation of highly skilled researchers is equipped to lead the way forward in HMI and its potential applications.

Research in this article was funded via the EU’s Marie Skłodowska-Curie Actions (MSCA).

This article was originally published in Horizon, the EU Research and Innovation magazine.

Cartesian robots with the right design upgrades can take over manual transfer operations with ease

12 Ways Companies Are Automating Workflows With Mobile Robots

Robotic system offers hidden window into collective bee behavior

Understanding the Role of Machine Vision in Industry 4.0

One million robots work in car industry worldwide – new record

The automotive industry has the largest number of robots working in factories around the world: Operational stock hit a new record of about one million units. This represents about one third of the total number installed across all industries.

“The automotive industry effectively invented automated manufacturing,” says Marina Bill, President of the International Federation of Robotics. “Today, robots are playing a vital role in enabling this industry’s transition from combustion engines to electric power. Robotic automation helps car manufacturers manage the wholesale changes to long-established manufacturing methods and technologies.”

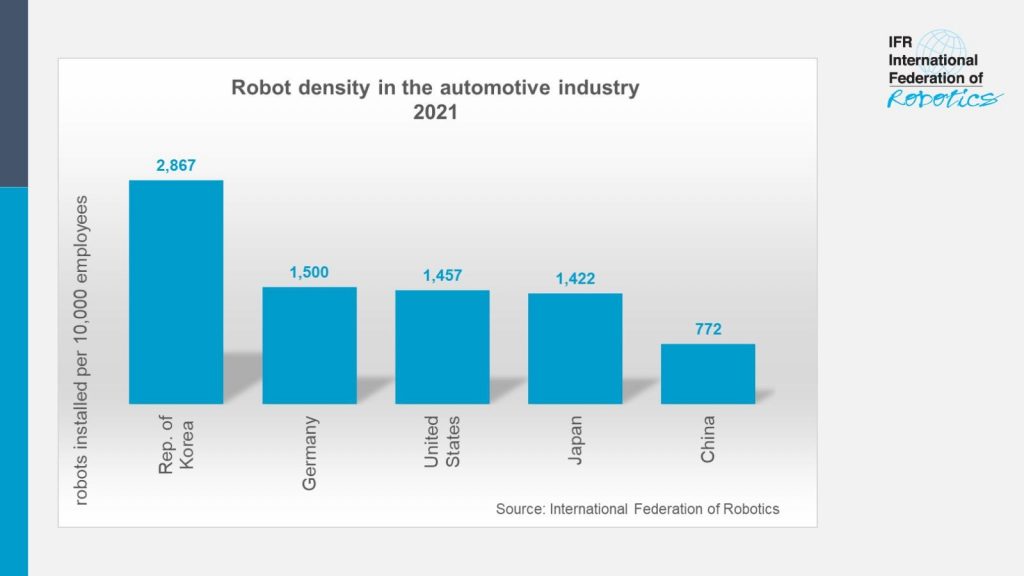

Robot density in automotive

Robot density is a key indicator which illustrates the current level of automation in the top car producing economies: In the Republic of Korea, 2,867 industrial robots per 10,000 employees were in operation in 2021. Germany ranks in second place with 1,500 units followed by the United States counting 1,457 units and Japan with 1,422 units per 10,000 workers.
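Robot density, as used here, is the number of operational industrial robots normalised per 10,000 employees. A minimal sketch of that calculation, and of how the 2021 automotive figures quoted in this article compare, might look as follows. The function names and the example plant are illustrative assumptions, not IFR data.

```python
def robot_density(robots: int, employees: int) -> float:
    """Return industrial robots per 10,000 employees."""
    if employees <= 0:
        raise ValueError("employees must be positive")
    return robots * 10_000 / employees


# 2021 automotive robot densities quoted in the article
# (units per 10,000 employees).
DENSITY_2021 = {
    "Republic of Korea": 2867,
    "Germany": 1500,
    "United States": 1457,
    "Japan": 1422,
    "China": 772,
}


def ratio_to(country: str, baseline: str) -> float:
    """How many times denser `country` is than `baseline`."""
    return DENSITY_2021[country] / DENSITY_2021[baseline]


if __name__ == "__main__":
    # Hypothetical plant, for illustration only: 300 robots, 2,000 employees.
    print(robot_density(300, 2_000))
    # Rank the quoted economies from most to least automated.
    for name in sorted(DENSITY_2021, key=DENSITY_2021.get, reverse=True):
        print(f"{name}: {DENSITY_2021[name]}")
```

On these figures, the Republic of Korea's automotive sector is a little under twice as robot-dense as Germany's.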

The world's biggest car manufacturer, China, has a robot density of 772 units, but is catching up fast: within a year, new robot installations in the Chinese automotive industry almost doubled to 61,598 units in 2021, accounting for 52% of the total 119,405 units installed in factories around the world.
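The 52% share follows directly from the two installation counts quoted above. As a quick check, using only those two numbers (the variable and function names are illustrative):

```python
# 2021 automotive robot installations quoted in the article.
CHINA_INSTALLS_2021 = 61_598
WORLD_INSTALLS_2021 = 119_405


def share_percent(part: int, total: int) -> float:
    """Return `part` as a percentage of `total`."""
    return 100 * part / total


china_share = share_percent(CHINA_INSTALLS_2021, WORLD_INSTALLS_2021)
rest_of_world = WORLD_INSTALLS_2021 - CHINA_INSTALLS_2021

if __name__ == "__main__":
    print(f"China's share of installations: {china_share:.1f}%")
    print(f"Installed outside China: {rest_of_world:,} units")
```

The share works out to roughly 51.6%, which rounds to the 52% cited. About 57,800 units were installed in the rest of the world combined.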

Electric vehicles drive automation

Ambitious political targets for electric vehicles are forcing the car industry to invest. The European Union has announced plans to end the sale of air-polluting vehicles by 2035. The US government aims to reach a voluntary goal of a 50% market share for electric vehicle sales by 2030. In China, all new vehicles sold must be powered by “new energy” by 2035: half of them must be electric, fuel cell, or plug-in hybrid, and the remaining 50% hybrid vehicles.

Most automotive manufacturers who have already invested in traditional “caged” industrial robots for basic assembly are now also investing in collaborative applications for final assembly and finishing tasks. Tier-two automotive parts suppliers, many of which are SMEs, are slower to automate fully. Yet, as robots become smaller, more adaptable, easier to program, and less capital-intensive, this is expected to change.

Robot Talk Episode 42 – Thom Kirwan-Evans

Claire chatted to Thom Kirwan-Evans from Origami Labs all about computer vision, machine learning, and robots in industry.


Thom Kirwan-Evans is a co-founder at Origami Labs where he applies the latest AI research to solve complex real world problems. Thom started as a physicist at Dstl working with camera systems before moving to an engineering consultancy and then setting up his own company last year. A keen runner and father of two, a key aim in starting his business was a good work-life balance.

Consumers are Ready for a Robot-Assisted World

AMRs in Fleet Operation and Test Deployment

Resilient bug-sized robots keep flying even after wing damage

MIT researchers have developed resilient artificial muscles that can enable insect-scale aerial robots to effectively recover flight performance after suffering severe damage. Photo: Courtesy of the researchers

By Adam Zewe | MIT News Office

Bumblebees are clumsy fliers. It is estimated that a foraging bee bumps into a flower about once per second, which damages its wings over time. Yet despite having many tiny rips or holes in their wings, bumblebees can still fly.

Aerial robots, on the other hand, are not so resilient. Poke holes in the robot’s wing motors or chop off part of its propeller, and odds are pretty good it will be grounded.

Inspired by the hardiness of bumblebees, MIT researchers have developed repair techniques that enable a bug-sized aerial robot to sustain severe damage to the actuators, or artificial muscles, that power its wings — but to still fly effectively.

They optimized these artificial muscles so the robot can better isolate defects and overcome minor damage, like tiny holes in the actuator. In addition, they demonstrated a novel laser repair method that can help the robot recover from severe damage, such as a fire that scorches the device.

Using their techniques, a damaged robot could maintain flight-level performance after one of its artificial muscles was jabbed by 10 needles, and the actuator was still able to operate after a large hole was burnt into it. Their repair methods enabled a robot to keep flying even after the researchers cut off 20 percent of its wing tip.

This could make swarms of tiny robots better able to perform tasks in tough environments, like conducting a search mission through a collapsing building or dense forest.

“We spent a lot of time understanding the dynamics of soft, artificial muscles and, through both a new fabrication method and a new understanding, we can show a level of resilience to damage that is comparable to insects,” says Kevin Chen, the D. Reid Weedon, Jr. Assistant Professor in the Department of Electrical Engineering and Computer Science (EECS), the head of the Soft and Micro Robotics Laboratory in the Research Laboratory of Electronics (RLE), and the senior author of the paper on these latest advances. “We’re very excited about this. But the insects are still superior to us, in the sense that they can lose up to 40 percent of their wing and still fly. We still have some catch-up work to do.”

Chen wrote the paper with co-lead authors Suhan Kim and Yi-Hsuan Hsiao, who are EECS graduate students; Younghoon Lee, a postdoc; Weikun “Spencer” Zhu, a graduate student in the Department of Chemical Engineering; Zhijian Ren, an EECS graduate student; and Farnaz Niroui, the EE Landsman Career Development Assistant Professor of EECS at MIT and a member of the RLE. The article appeared in Science Robotics.

Robot repair techniques

Using the repair techniques developed by MIT researchers, this microrobot can still maintain flight-level performance even after the artificial muscles that power its wings were jabbed by 10 needles and 20 percent of one wing tip was cut off. Credit: Courtesy of the researchers.

The tiny, rectangular robots being developed in Chen’s lab are about the same size and shape as a microcassette tape, though one robot weighs barely more than a paper clip. Wings on each corner are powered by dielectric elastomer actuators (DEAs), which are soft artificial muscles that use mechanical forces to rapidly flap the wings. These artificial muscles are made from layers of elastomer that are sandwiched between two razor-thin electrodes and then rolled into a squishy tube. When voltage is applied to the DEA, the electrodes squeeze the elastomer, which flaps the wing.

But microscopic imperfections can cause sparks that burn the elastomer and cause the device to fail. About 15 years ago, researchers found they could prevent DEA failures from one tiny defect using a physical phenomenon known as self-clearing. In this process, applying high voltage to the DEA disconnects the local electrode around a small defect, isolating that failure from the rest of the electrode so the artificial muscle still works.

Chen and his collaborators employed this self-clearing process in their robot repair techniques.

First, they optimized the concentration of carbon nanotubes that comprise the electrodes in the DEA. Carbon nanotubes are super-strong but extremely tiny rolls of carbon. Having fewer carbon nanotubes in the electrode improves self-clearing, since the electrode then reaches higher temperatures and burns away more easily. But this also reduces the actuator’s power density.

“At a certain point, you will not be able to get enough energy out of the system, but we need a lot of energy and power to fly the robot. We had to find the optimal point between these two constraints — optimize the self-clearing property under the constraint that we still want the robot to fly,” Chen says.
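The trade-off Chen describes has the shape of a constrained optimisation: get the best self-clearing possible among designs that still deliver enough power to fly. The sketch below is purely illustrative. Both model functions, their linear forms, the 0 to 100 concentration scale, and the power threshold are invented placeholders, not the researchers' models or measurements. Only the structure of the search reflects the text.

```python
from typing import Iterable, Optional


def self_clearing_score(concentration: int) -> int:
    """Toy placeholder: fewer nanotubes means better self-clearing."""
    return 100 - concentration


def power_density(concentration: int) -> int:
    """Toy placeholder: more nanotubes means higher power density."""
    return concentration


def best_concentration(candidates: Iterable[int], min_power: int) -> Optional[int]:
    """Pick the candidate with the best self-clearing score among
    those that still meet the minimum power needed to fly."""
    feasible = [c for c in candidates if power_density(c) >= min_power]
    if not feasible:
        return None  # no design can fly under this toy model
    return max(feasible, key=self_clearing_score)


if __name__ == "__main__":
    # Hypothetical concentration levels on an arbitrary 0-100 scale.
    levels = range(0, 101, 10)
    # Hypothetical power requirement for flight, on the same arbitrary scale.
    print(best_concentration(levels, min_power=60))
```

Under these toy assumptions the search settles on the lowest concentration that still clears the power threshold, which mirrors the "optimal point between these two constraints" in the quote.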

However, even an optimized DEA will fail if it suffers from severe damage, like a large hole that lets too much air into the device.

Chen and his team used a laser to overcome major defects. They carefully cut along the outer contours of a large defect with a laser, which causes minor damage around the perimeter. Then, they can use self-clearing to burn off the slightly damaged electrode, isolating the larger defect.

“In a way, we are trying to do surgery on muscles. But if we don’t use enough power, then we can’t do enough damage to isolate the defect. On the other hand, if we use too much power, the laser will cause severe damage to the actuator that won’t be clearable,” Chen says.

The team soon realized that, when “operating” on such tiny devices, it is very difficult to observe the electrode to see if they had successfully isolated a defect. Drawing on previous work, they incorporated electroluminescent particles into the actuator. Now, if they see light shining, they know that part of the actuator is operational, but dark patches mean they successfully isolated those areas.

The new research could make swarms of tiny robots better able to perform tasks in tough environments, like conducting a search mission through a collapsing building or dense forest. Photo: Courtesy of the researchers

Flight test success

Once they had perfected their techniques, the researchers conducted tests with damaged actuators: some had been jabbed by many needles while others had holes burned into them. They measured how well the robot performed in flapping-wing, take-off, and hovering experiments.

Even with damaged DEAs, the repair techniques enabled the robot to maintain its flight performance, with altitude, position, and attitude errors that deviated only very slightly from those of an undamaged robot. With laser surgery, a DEA that would have been broken beyond repair was able to recover 87 percent of its performance.

“I have to hand it to my two students, who did a lot of hard work when they were flying the robot. Flying the robot by itself is very hard, not to mention now that we are intentionally damaging it,” Chen says.

These repair techniques make the tiny robots much more robust, so Chen and his team are now working on teaching them new functions, like landing on flowers or flying in a swarm. They are also developing new control algorithms so the robots can fly better, teaching the robots to control their yaw angle so they can keep a constant heading, and enabling the robots to carry a tiny circuit, with the longer-term goal of each robot carrying its own power source.

“This work is important because small flying robots — and flying insects! — are constantly colliding with their environment. Small gusts of wind can be huge problems for small insects and robots. Thus, we need methods to increase their resilience if we ever hope to be able to use robots like this in natural environments,” says Nick Gravish, an associate professor in the Department of Mechanical and Aerospace Engineering at the University of California at San Diego, who was not involved with this research. “This paper demonstrates how soft actuation and body mechanics can adapt to damage and I think is an impressive step forward.”

This work is funded, in part, by the National Science Foundation (NSF) and a MathWorks Fellowship.