6-axis robots for injection molding open possibilities for manufacturers

#IROS2022 best paper awards

Did you have the chance to attend the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022) in Kyoto? Here we bring you the papers that received an award this year in case you missed them. Congratulations to all the winners and finalists!

Best Paper Award on Cognitive Robotics

Best RoboCup Paper Award

Best Paper Award on Robot Mechanisms and Design

Best Entertainment and Amusement Paper Award

Robot Learning to Paint from Demonstrations. Younghyo Park, Seunghun Jeon, and Taeyoon Lee.

Best Paper Award on Safety, Security, and Rescue Robotics

Best Paper Award on Agri-Robotics

Best Paper Award on Mobile Manipulation

Best Application Paper Award

Best Paper Award for Industrial Robotics Research for Applications

ABB Best Student Paper Award

Best Paper Award

SpeedFolding: Learning Efficient Bimanual Folding of Garments. Yahav Avigal, Lars Berscheid, Tamim Asfour, Torsten Kroeger, and Ken Goldberg.

Robot Talk Podcast – October episodes

Episode 20 – Paul Dominick Baniqued

Claire talked to Dr Paul Dominick Baniqued from The University of Manchester all about brain-computer interface technology and rehabilitation robotics.

Paul Dominick Baniqued received his PhD in robotics and immersive technologies at the University of Leeds. His research tackled the integration of a brain-computer interface with virtual reality and hand exoskeletons for motor rehabilitation and skills learning. He is currently working as a postdoc researcher on cyber-physical systems and digital twins at the Robotics for Extreme Environments Group at the University of Manchester.

Episode 21 – Sean Katagiri

Claire chatted to Sean Katagiri from The National Robotarium all about underwater robots, offshore energy, and other industrial applications of robotics.

Sean Katagiri is a robotics engineer who has the pleasure of being surrounded by and working with robots for a living. His experience in robotics mainly comes from the subsea domain, but he has also worked with wheeled and legged ground robots. Sean is very excited to have recently started his role at The National Robotarium, whose goal is to take ideas from academia and turn them into real-world solutions.

Episode 22 – Iveta Eimontaite

Claire talked to Dr Iveta Eimontaite from Cranfield University about psychology, human-robot interaction, and industrial robots.

Iveta Eimontaite studied Cognitive Neuroscience at the University of York and completed her PhD in Cognitive Psychology at Hull University. Prior to joining Cranfield University, Iveta held research positions at Bristol Robotics Laboratory and Sheffield Robotics. Her work mainly focuses on behavioural and cognitive aspects of Human-Technology Interaction, with particular interest in user needs and requirements for the successful integration of technology within the workplace/social environments.

Mickey Li

Claire talked to Mickey Li from the University of Bristol about aerial robotics, building inspection and multi-robot teams.

Mickey Li is a Robotics and Autonomous Systems PhD researcher at the Bristol Robotics Laboratory and the University of Bristol. His research focuses on optimal multi-UAV path planning for building inspection, in particular how guarantees can be provided despite vehicle failures. Most recently he has been developing a portable development and deployment infrastructure for multi-UAV experimentation for the BRL Flight Arena, inspired by advances in cloud computing.

Researchers’ study of human-robot interactions is an early step in creating future robot ‘guides’

A soft robotic microfinger that enables interaction with insects through tactile sensing

Researchers help robots navigate crowded spaces with new visual perception method

How to Choose the Right Sensor in Ambient Conditions

Using vibrations to control a swarm of tiny robots

Robots are taking over jobs, but not at the rate you might think

BOWE GROUP leads an $8.2M investment round in robot software innovator MOV.AI

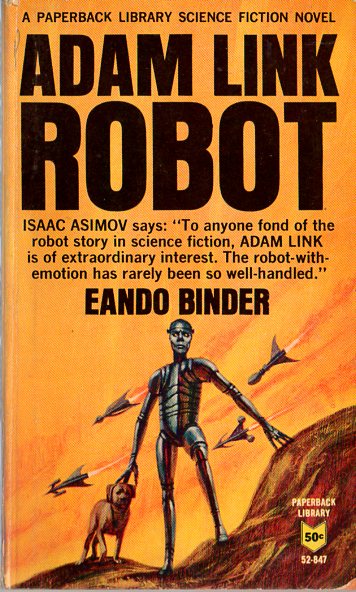

The original “I, Robot” had a Frankenstein complex

Eando Binder’s Adam Link scifi series predates Isaac Asimov’s more famous robots, posing issues in trust, control, and intellectual property.

Read more about these challenges in my Science Robotics article here.

And yes, there’s a John Wick they-killed-my-dog scene in there too.

Snippet for the article with some expansion:

In 1939, Eando Binder began a short story cycle about a robot named Adam Link. The first story in Binder’s series was titled “I, Robot.” That clever phrase would be recycled by Isaac Asimov’s publisher (against Asimov’s wishes) for his famous short story cycle that started in 1940 about the Three Laws of Robotics. But the Binder series had another influence on Asimov: the stories explicitly related Adam’s poor treatment to how humans reacted to the Creature in Frankenstein. (After the police killed his dog - did I mention John Wick? - and put him in jail, Adam conveniently finds a copy of Mary Shelley’s Frankenstein and the penny drops on why everyone is so mean to him…) In response, Asimov coined the term “the Frankenstein Complex” in his stories[1], with his characters stating that the Three Laws of Robotics gave humans the confidence in robots to overcome this neurosis.

Note that the Frankenstein Complex is different from the Uncanny Valley. In the Uncanny Valley, the robot is creepy because it almost, but not quite, looks and moves like a human or animal. In the Frankenstein Complex, people believe that intelligent robots, regardless of what they look like, will rise up against their creators.

Whether humans really have a Frankenstein Complex is a source of endless debate. In a seminal paper, Frederic Kaplan presented a baseline assessment of cultural differences and the role of popular media in trust of robots that is still widely used[2]. Humanoid robotics researchers have even developed a formal measure of a user’s perception of the Frankenstein Complex[3], so that group of HRI researchers believes the Frankenstein Complex is a real phenomenon. But Binder’s Adam Link story cycle is also worth reexamining because it foresaw two additional challenges for robots and society that Asimov, and other early writers, did not: what the appropriate form of control is, and whether a robot can own intellectual property.

You can get the Adam Link stories from the web as individual stories published in the online back issues of Amazing Stories, but it is probably easier to get the story collection here. Binder did a fix-up novel in which he organized the stories to form a chronology and added segues between stories.

If you’d like to learn more about

- robot control and what is appropriate for what types of tasks, see Introduction to AI Robotics, second edition

- Asimov’s Three Laws and whether they really would prevent the Frankenstein Complex, see Learn AI and Human-Robot Interaction from Asimov’s I, Robot Stories, and links about Asimov

References

[1] Frankenstein Monster, Encyclopedia of Science Fiction, https://sf-encyclopedia.com/entry/frankenstein_monster, accessed July 28, 2022

[2] F. Kaplan, “Who is afraid of the humanoid? Investigating cultural differences in the acceptance of robots,” International Journal of Humanoid Robotics, 1–16 (2004)

[3] Syrdal, D.S., Nomura, T., Dautenhahn, K. (2013). The Frankenstein Syndrome Questionnaire – Results from a Quantitative Cross-Cultural Survey. In: Herrmann, G., Pearson, M.J., Lenz, A., Bremner, P., Spiers, A., Leonards, U. (eds) Social Robotics. ICSR 2013. Lecture Notes in Computer Science, vol 8239. Springer, Cham. https://doi.org/10.1007/978-3-319-02675-6_27
