Can a robot’s ability to speak affect how much human users trust it?

Tiny, caterpillar-like soft robot folds, rolls, grabs and degrades

ABB survey reveals re-industrialization at risk from global “education gap” in automation

ep.360: Building Communities Around AI in Africa, with Benjamin Rosman

At ICRA 2022, Benjamin Rosman delivered a keynote presentation on an organization he co-founded called “Deep Learning Indaba”.

Deep Learning Indaba is based in South Africa, and its mission is to strengthen Artificial Intelligence and Machine Learning communities across Africa. It hosts yearly meetups in different countries on the continent and promotes grassroots communities in each country that run their own local events.

What is Indaba?

Indaba is a Zulu word for a gathering or meeting. Such meetings are held throughout southern Africa and serve several functions: to listen to and share news of members of the community, to discuss common interests and issues facing the community, and to give advice and coach others.

Benjamin Rosman

Benjamin Rosman is an Associate Professor in the School of Computer Science and Applied Mathematics at the University of the Witwatersrand, South Africa, where he runs the Robotics, Autonomous Intelligence, and Learning (RAIL) Laboratory and is the Director of the National E-Science Postgraduate Teaching and Training Platform (NEPTTP).

He is a founder and organizer of the Deep Learning Indaba machine learning summer school, with a focus on strengthening African machine learning. He was a 2017 recipient of a Google Faculty Research Award in machine learning, and a 2021 recipient of a Google Africa Research Award. In 2020, he was made a Senior Member of the IEEE.

Links

- Deep Learning Indaba

- Download mp3

- Subscribe to Robohub using iTunes, RSS, or Spotify

- Support us on Patreon

How our principles helped define AlphaFold’s release

Erotophilia and sexual sensation-seeking are good predictors of engagement with sex robots, according to new research

Robots for Inspection and Maintenance Tasks

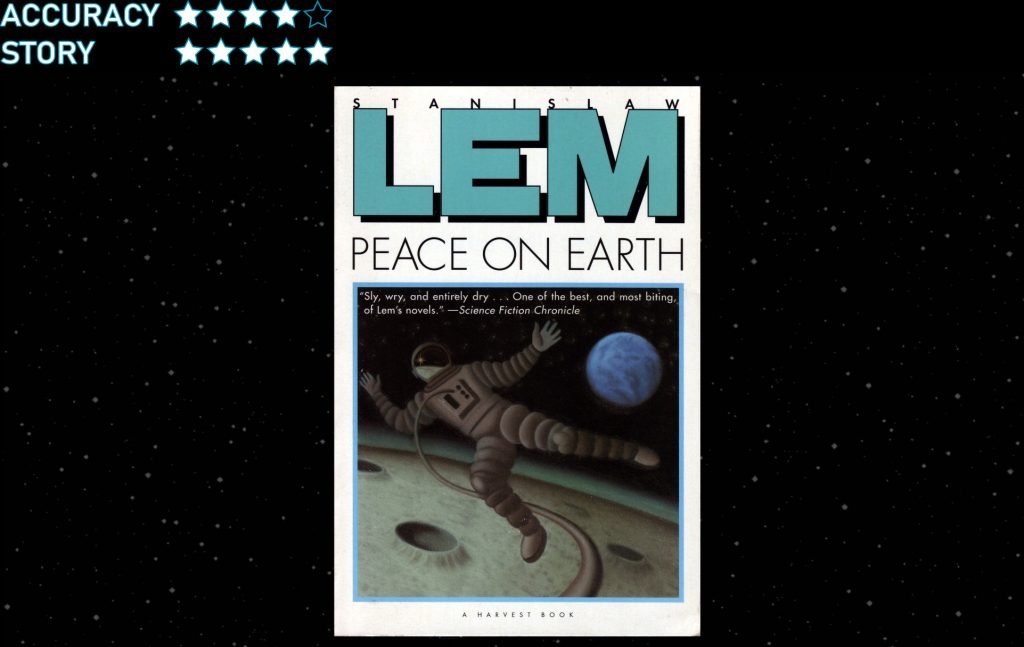

Peace on Earth (1987): Using telerobotics to check in on a swarm robot uprising on the Moon

Robots: humanoids, teleoperated reconfigurable robots, swarms.

Recommendation: Read this classic hard sci-fi novel and expand your horizons about robots, teleoperation, and swarms.

Stanislaw Lem was one of the most-read science fiction authors in the world in his day, especially in the 70s and 80s, though not in America, because his native Polish was rarely translated into English. Europeans could parse the French translations; we couldn’t even parlez-vous français. Lem famously did not like American science fiction, with very few exceptions. One was Philip K. Dick, and it is no wonder, since Lem’s 1987 novel Peace on Earth shares many of the themes that Dick covered: militarization of robots, people losing their memory or not being what they seem, and government conspiracies. In some ways Peace on Earth is like the longer, more detailed, and, actually, *better* version of Dick’s 1953 short story Second Variety (which was the basis for the Peter Weller movie Screamers).

Peace on Earth has a sort of Battlestar Galactica (reboot) backstory. Mankind has put all its military robots on the Moon to do whatever military robots do. The rapidly evolving, super-smart robots can continue to use simulations and machine learning to improve on, or work out alternatives to, the Clausewitz style of warfare, but out of the way, so that it can’t impact humans.

Or can it?

Except after a couple of decades no one has heard from the robots. This is not unexpected, but people, being people, are beginning to wonder if the robots are still up there. Or maybe the robots have evolved into something peaceful. Or into some supreme intelligence that might want to take over the Earth. Or maybe the robots have run out of things to shoot at up there and the winners are now thinking about shooting at Earth. Oooops. Maybe we should send someone to check in on them, just in case…

The story is told from the viewpoint of the astronaut, Ijon Tichy, sent to check in on the robots. The book starts with his return to Earth with brain damage that has severed his corpus callosum and left him with major memory loss about what happened and why he is on the run. We are in Christopher Nolan Memento territory (without the tattoos) or Jonathan Nolan’s/HBO’s Westworld out-of-sequence storytelling as Tichy tries to figure out what happened on the Moon and what it means.

Along the way we get some interesting descriptions of telerobotics and telepresence as well as swarm and distributed robotics. Lem was a hard science fiction writer, who had gone to medical school before switching to physics. He was very much into the science component of his books, and in this case more into the ideas of biological evolution. He posits that biological evolution has run from small to large, from viruses and bacteria to single cells to animals and people, but that robotic evolution will run from large to small. We started with big robots improving, then getting smaller with the miniaturization of sensors and actuators, then with smaller computation, as a single robot would not need to carry all its computation onboard but could rely on distributed computation. The trend will continue until finally a robot becomes a collection of tiny, simple robots that can cast itself into a larger shape with greater intelligence, which is the idea behind Michael Crichton’s novel Prey. These swarms of what we would now call nanorobots would provide the ultimate flexibility in reconfigurable robots. Of course, Lem hand-waves over limiting factors such as power and communication. But that aside, it’s a thought-provoking idea and a radically different take than Dick’s on how military robots would evolve.

One of the interesting scientific themes in Peace on Earth is Tichy’s use of teleoperated robots to land on the Moon and attempt to check out the robots in its different sectors. Eventually Tichy quits using humanoid robots and begins using a reconfigurable robot body that can transform into different animal shapes so as to move more effectively through the different structures built by the robots.

Teleoperated robots are sometimes called avatars, though the term avatar was originally restricted to software simulations; James Cameron changed that connotation with his movie. There is increasing interest in telecommuting (and telesex) through robots, so much so that there is now an XPrize competition on avatars.

My favorite shape that Tichy’s teleoperated robot took on was that of a dachshund. And here is where Lem underestimated the scientific challenges of teleoperation. Lem focused on the physical science: how the avatar might reconfigure into a new shape. He assumed that Tichy would have little difficulty adjusting to the new shape because Tichy would be wearing a suit that sensed his body movements. Except this ignores the human-robot interaction component: how does Tichy know to move like a dog and synthesize perception from angles much lower than a human’s? The degrees of freedom are different, the movement patterns are different, the locations of the sensors are different. Operators get rapidly fatigued even with humanoid robots, where there is a one-to-one correspondence between the human and robot and there is no change in size. The cognitive load of trying to control a four-legged animal would be huge. It is hard to imagine that Tichy would be successful without an intermediary intelligent assistance program that would translate his intent into the appropriate motions for the current shape.

And that type of assistive AI is a hard, open research question.

The XPrize ANA Avatar competition is making a similar assumption: that if you can build a humanoid avatar, it will be easy and natural for a human to control. That hasn’t been supported by decades of research in telerobotics, and the humanoid robots in the DARPA Robotics Challenge often required multiple operators.

But back to Peace on Earth. It’s a very readable book, jam-packed with scientific ideas that were ahead of their time, combined with a serious jab at the stupidity of the nuclear arms race that was in progress at the time.

More importantly, Lem foresaw a world in which robots could be a threat, though politicians were a bigger one, and in which robots were also a solution to that threat. A refreshing take on robotics and the New World Order. What a shame Lem has been relatively unknown in the US.

You really should read this one, especially if you like hard science fiction like Arthur C. Clarke or if you want to get beyond the US viewpoint of sci-fi.

Original article posted on the Robotics Through Science Fiction blog.

A robot that draws circuits with conductive ink to survive

IMTS 2022 Opens Sept. 12, Showcases Digital Tech That Address Manufacturing’s Biggest Challenges

Robotics in the home

The first robots went into space over 60 years ago and robots have become a familiar sight in many industrial settings, but it took a bit longer for them to make their way into our homes. Robotic vacuum cleaners first started to appear in homes about 20 years ago, and according to a recent survey by the UK-RAS Network, 28% of people say that robots are now an occasional part of their everyday lives while 13% say they are fully integrated into their day-to-day lives. But home robots can do a lot more than clean the floors. Social and assistive robots can offer company and help around the home, which can be game-changing for people with limited mobility or dementia.

In this special live recording for the UK Festival of Robotics, Claire chatted to Dr. Patrick Holthaus (University of Hertfordshire / Robot House), Prof. Praminda Caleb-Solly (University of Nottingham) and Dr. Mike Aldred (Dyson).

Patrick Holthaus researches how robots can socially engage with humans, letting them interact with each other in a special research facility, the Robot House at the University of Hertfordshire. He is particularly interested in non-verbal communication like body movements, gestures, and gaze, and how they can influence the social credibility and trust of assistive and companion robots. At the same time, as the Robot House manager, he maintains the robots and all the interactive technology in the house and coordinates and advises other users of the house.

Praminda Caleb-Solly is Professor of Embodied Intelligence at the University of Nottingham where she leads the Cyber-physical Health and Assistive Robotics Technologies research group. Prior to joining Nottingham, she led research in Assistive Robotics at the Bristol Robotics Laboratory for over ten years. In 2020 she co-founded Robotics for Good CIC, a ‘robots-as-a-service’ start-up which is currently supporting the deployment of telepresence robots.

Having gained a PhD in robotics from the University of Kent, Mike Aldred left in 1998 to be part of the original team of 5 roboticists at Dyson. He spent 15 years taking the company’s first robot vacuum cleaner from technology research right the way through to a manufactured product. He has worked on pretty much every area of domestic robotics, from developing vision-based navigation systems to helping define international robot standards and ensuring the production line runs smoothly. The common theme running across all his roles at Dyson has been turning academic theory into practical reality.

Keywords: assistive, home, domestic, social, vacuum

Why household robot servants are a lot harder to build than robotic vacuums and automated warehouse workers

Who wouldn’t want a robot to handle all the household drudgery? Skathi/iStock via Getty Images

By Ayonga Hereid (Assistant Professor of Mechanical and Aerospace Engineering, The Ohio State University)

With recent advances in artificial intelligence and robotics technology, there is growing interest in developing and marketing household robots capable of handling a variety of domestic chores.

Tesla is building a humanoid robot, which, according to CEO Elon Musk, could be used for cooking meals and helping elderly people. Amazon recently acquired iRobot, a prominent robotic vacuum manufacturer, and has been investing heavily in the technology through the Amazon Robotics program to expand robotics technology to the consumer market. In May 2022, Dyson, a company renowned for its power vacuum cleaners, announced that it plans to build the U.K.’s largest robotics center devoted to developing household robots that carry out daily domestic tasks in residential spaces.

Despite the growing interest, would-be customers may have to wait awhile for those robots to come on the market. While devices such as smart thermostats and security systems are widely used in homes today, the commercial use of household robots is still in its infancy.

As a robotics researcher, I know firsthand how household robots are considerably more difficult to build than smart digital devices or industrial robots.

Robots that can handle a variety of domestic chores are an age-old staple of science fiction.

Handling objects

One major difference between digital and robotic devices is that household robots need to manipulate objects through physical contact to carry out their tasks. They have to carry the plates, move the chairs and pick up dirty laundry and place it in the washer. These operations require the robot to be able to handle fragile, soft and sometimes heavy objects with irregular shapes.

The state-of-the-art AI and machine learning algorithms perform well in simulated environments. But contact with objects in the real world often trips them up. This happens because physical contact is often difficult to model and even harder to control. While a human can easily perform these tasks, there exist significant technical hurdles for household robots to reach human-level ability to handle objects.

Robots have difficulty in two aspects of manipulating objects: control and sensing. Many pick-and-place robot manipulators like those on assembly lines are equipped with a simple gripper or specialized tools dedicated only to certain tasks like grasping and carrying a particular part. They often struggle to manipulate objects with irregular shapes or elastic materials, especially because they lack the efficient force, or haptic, feedback humans are naturally endowed with. Building a general-purpose robot hand with flexible fingers is still technically challenging and expensive.

It is also worth mentioning that traditional robot manipulators require a stable platform to operate accurately, but the accuracy drops considerably when using them with platforms that move around, particularly on a variety of surfaces. Coordinating locomotion and manipulation in a mobile robot is an open problem in the robotics community that needs to be addressed before broadly capable household robots can make it onto the market.

A sophisticated robotic kitchen is already on the market, but it operates in a highly structured environment, meaning all of the objects it interacts with – cookware, food containers, appliances – are where it expects them to be, and there are no pesky humans to get in the way.

They like structure

In an assembly line or a warehouse, the environment and sequence of tasks are strictly organized. This allows engineers to preprogram the robot’s movements or use simple methods like QR codes to locate objects or target locations. However, household items are often disorganized and placed randomly.

Home robots must deal with many uncertainties in their workspaces. The robot must first locate and identify the target item among many others. Quite often it must also clear or avoid other obstacles in the workspace to be able to reach the item and perform the given tasks. This requires the robot to have an excellent perception system, efficient navigation skills, and powerful and accurate manipulation capability.

For example, users of robot vacuums know they must remove all small furniture and other obstacles such as cables from the floor, because even the best robot vacuum cannot clear them by itself. Even more challenging, the robot has to operate in the presence of moving obstacles when people and pets walk within close range.

Keeping it simple

While they appear straightforward for humans, many household tasks are too complex for robots. Industrial robots are excellent for repetitive operations in which the robot motion can be preprogrammed. But household tasks are often unique to the situation and could be full of surprises that require the robot to constantly make decisions and change its route in order to perform the tasks.

The vision for household humanoid robots like the proposed Tesla Bot is of an artificial servant capable of handling any mundane task. Courtesy Tesla

Think about cooking or cleaning dishes. In the course of a few minutes of cooking, you might grasp a sauté pan, a spatula, a stove knob, a refrigerator door handle, an egg and a bottle of cooking oil. To wash a pan, you typically hold and move it with one hand while scrubbing with the other, and ensure that all cooked-on food residue is removed and then all soap is rinsed off.

There has been significant development in recent years in using machine learning to train robots to make intelligent decisions when picking and placing different objects, meaning grasping and moving objects from one spot to another. However, training robots to master all the different types of kitchen tools and household appliances would be another level of difficulty even for the best learning algorithms.

Not to mention that people’s homes often have stairs, narrow passageways and high shelves. Those hard-to-reach spaces limit the use of today’s mobile robots, which tend to use wheels or four legs. Humanoid robots, which would more closely match the environments humans build and organize for themselves, have yet to be reliably used outside of lab settings.

A solution to task complexity is to build special-purpose robots, such as robot vacuum cleaners or kitchen robots. Many different types of such devices are likely to be developed in the near future. However, I believe that general-purpose home robots are still a long way off.

Ayonga Hereid does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

This article appeared in The Conversation.