Expectations and perceptions of healthcare professionals for robot deployment in hospital environments during the COVID-19 pandemic

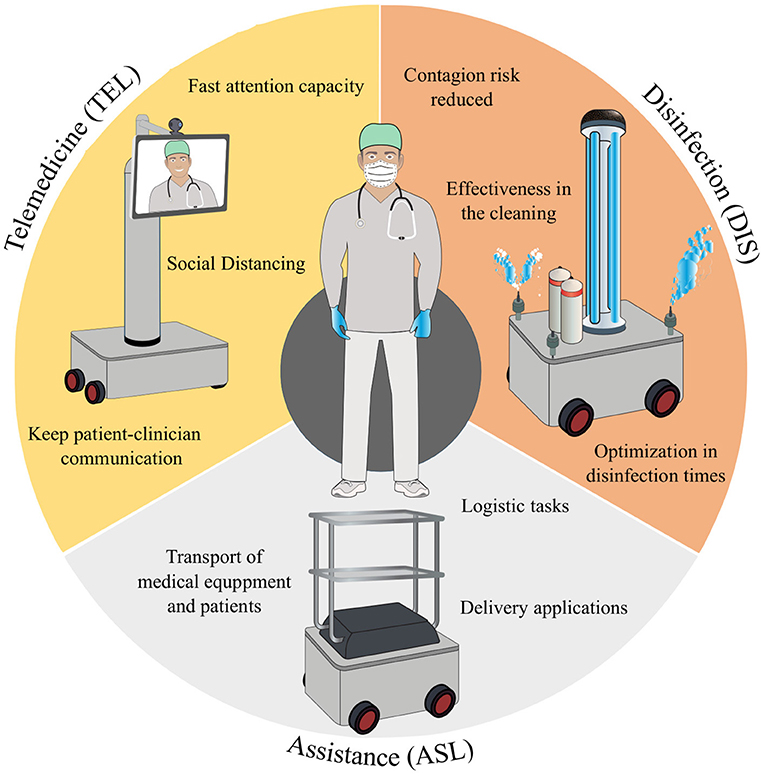

Image extracted from [1].

The recent outbreak of coronavirus disease 2019 (COVID-19), caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), has spread globally in an unprecedented way. In response, most countries have focused their efforts on containing and mitigating the effects of the pandemic. Given the virus's transmission rate, the World Health Organization (WHO) recommended several strategies, such as physical distancing, to limit its spread. Driven by other factors, including the pandemic's effects on the economy, some countries are now resuming economic activities, which makes it all the more necessary to ensure compliance with biosafety protocols to prevent further spread of the virus. The background to our project is therefore this diverse landscape of public health measures adopted around the world, measures that authorities often refine iteratively as their social, economic, and political impacts become clearer.

In the health sector, stakeholders at every level of the world's health systems have remained unwaveringly focused on providing medical care during this pandemic. Demands on health systems remain high despite successful vaccination rollouts in many parts of the world. Numerous challenges have arisen, such as (1) the vulnerability and overloading of healthcare professionals, (2) the need to decongest intra-hospital environments and reduce the risk of contagion within them, (3) the availability of biomedical technology, and (4) the sustainability of patient care. Multiple strategies have been proposed to address these challenges, and robotics is one promising part of the solution for controlling and mitigating the effects of COVID-19.

Although the literature shows that some robotics applications can overcome potential hazards and risks in hospital environments, actual deployments of these developments remain limited. Moreover, few studies measure clinicians' perception and acceptance of these robotic platforms. This work [1] presents the design and implementation of several perception questionnaires to assess healthcare providers' awareness and acceptance of robotics for COVID-19 control in clinical scenarios; the resulting data are available in a public repository. Specifically, 41 healthcare professionals (e.g., nurses, doctors, and biomedical engineers from two private healthcare institutions in Bogotá D.C., Colombia) completed the surveys, which covered three categories: (DIS) Disinfection and cleaning robots, (ASL) Assistance, Service, and Logistics robots, and (TEL) Telemedicine and Telepresence robots. The surveys revealed a generally low level of knowledge about robotics applications in the target population, and showed that some fear of being replaced by robots persists in the medical community. However, 82.9% of participants reported a positive perception of the development and implementation of robotics in clinical environments.
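
As a quick arithmetic check on the headline figure, the short sketch below converts the reported 82.9% back into a head count. It assumes the percentage is taken over all 41 respondents; under that assumption only 34 participants yields 82.9% (33 would give 80.5% and 35 would give 85.4%). The function name is illustrative only.

```python
# Back out the number of respondents behind a reported percentage.
# Assumption: the 82.9% figure is computed over all 41 participants.
TOTAL_RESPONDENTS = 41
REPORTED_SHARE = 82.9  # percent, as reported in [1]


def implied_count(total: int, share_percent: float) -> int:
    """Return the whole number of respondents closest to the reported share."""
    return round(total * share_percent / 100)


count = implied_count(TOTAL_RESPONDENTS, REPORTED_SHARE)
# Recompute the percentage from the integer count to confirm it rounds back to 82.9.
print(count, f"{100 * count / TOTAL_RESPONDENTS:.1f}%")  # 34 82.9%
```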

The outcomes showed that healthcare staff expect these robots to interact socially within hospital environments and to communicate with users, acting as a link between patients and doctors. Related work [2] on Socially Assistive Robotics presents such robots as a potential tool to support clinical care areas, promoting physical distancing and reducing the contagion rate. Specifically, [2] presents a long-term evaluation of a social robotic platform for gait neurorehabilitation.

The social robot is positioned in front of the patient during the exercise, guiding their performance through verbal and non-verbal gestures and monitoring their physiological progress. The platform thus enables physical distancing between the clinicians and the patient. A clinical validation with ten patients over 15 sessions was conducted at a rehabilitation center in Colombia. Results showed that the robot's support improved the patients' physiological progress and helped them maintain a healthy posture, as evidenced by their thoracic and cervical posture. The work also measured perception and acceptance, and clinicians highlighted that they trust the system as a complementary tool in rehabilitation.

Studies conducted before the pandemic showed that clinicians were worried about being replaced by the robot, even before any real interaction with it in their environments [3]. Given the robot's role during the COVID-19 pandemic, however, clinicians have a positive perception of it as a tool to manage rehabilitation procedures. Most healthcare personnel would consent to using the robot during the pandemic, as they consider that it promotes physical distancing and is a safe device for carrying out healthcare protocols. Another encouraging result is that clinicians would recommend the robot to colleagues and other institutions to support rehabilitation during the COVID-19 pandemic.

This work is funded by the Royal Academy of Engineering – Pandemic Preparedness (Grant EXPP20211\1\183). The project aims to develop robotic strategies in developing countries for monitoring unsafe conditions related to human behavior and for planning the disinfection of outdoor and indoor environments. It establishes an international cooperation network led by the Colombian School of Engineering Julio Garavito, with lead investigator Carlos A. Cifuentes, supported by Professor Subramanian Ramamoorthy of the University of Edinburgh, along with researchers and clinicians from Latin America (Colombia, Brazil, Argentina, and Chile).

References

- Sierra, S., Gomez-Vargas, D., Cespedes, N., Munera, M., Roberti, F., Barria, P., Ramamoorthy, S., Becker, M., Carelli, R., Cifuentes, C.A. (2021). Expectations and Perceptions of Healthcare Professionals for Robot Deployment in Hospital Environments during the COVID-19 Pandemic. Frontiers in Robotics and AI.

- Cespedes, N., Raigoso, D., Munera, M., Cifuentes, C.A. (2021). Long-Term Social Human-Robot Interaction for Neurorehabilitation: Robots as a Tool to Support Gait Therapy in the Pandemic. Frontiers in Neurorobotics.

- Casas, J., Cespedes, N., Cifuentes, C.A., Gutierrez, L., Rincon-Roncancio, M., Munera, M. (2019). Expectations vs Reality: Attitudes Towards a Socially Assistive Robot in Cardiac Rehabilitation. Applied Sciences, 9(21), 4651.

Engineers create a programmable fiber

By Becky Ham | MIT News correspondent

MIT researchers have created the first fiber with digital capabilities, able to sense, store, analyze, and infer activity after being sewn into a shirt.

Yoel Fink, a professor of materials science and electrical engineering, a Research Laboratory of Electronics principal investigator, and the senior author on the study, says digital fibers expand the possibilities for fabrics to uncover the context of hidden patterns in the human body that could be used for physical performance monitoring, medical inference, and early disease detection.

Or, you might someday store your wedding music in the gown you wore on the big day — more on that later.

Fink and his colleagues describe the features of the digital fiber in Nature Communications. Until now, electronic fibers have been analog — carrying a continuous electrical signal — rather than digital, where discrete bits of information can be encoded and processed in 0s and 1s.

“This work presents the first realization of a fabric with the ability to store and process data digitally, adding a new information content dimension to textiles and allowing fabrics to be programmed literally,” Fink says.

MIT PhD student Gabriel Loke and MIT postdoc Tural Khudiyev are the lead authors on the paper. Other co-authors are MIT postdoc Wei Yan; MIT undergraduates Brian Wang, Stephanie Fu, Ioannis Chatziveroglou, Syamantak Payra, Yorai Shaoul, Johnny Fung, and Itamar Chinn; John Joannopoulos, the Francis Wright Davis Chair Professor of Physics and director of the Institute for Soldier Nanotechnologies at MIT; Harrisburg University of Science and Technology master's student Pin-Wen Chou; and Rhode Island School of Design Associate Professor Anna Gitelson-Kahn. The fabric work was facilitated by Professor Anais Missakian, who holds the Pevaroff-Cohn Family Endowed Chair in Textiles at RISD.

Memory and more

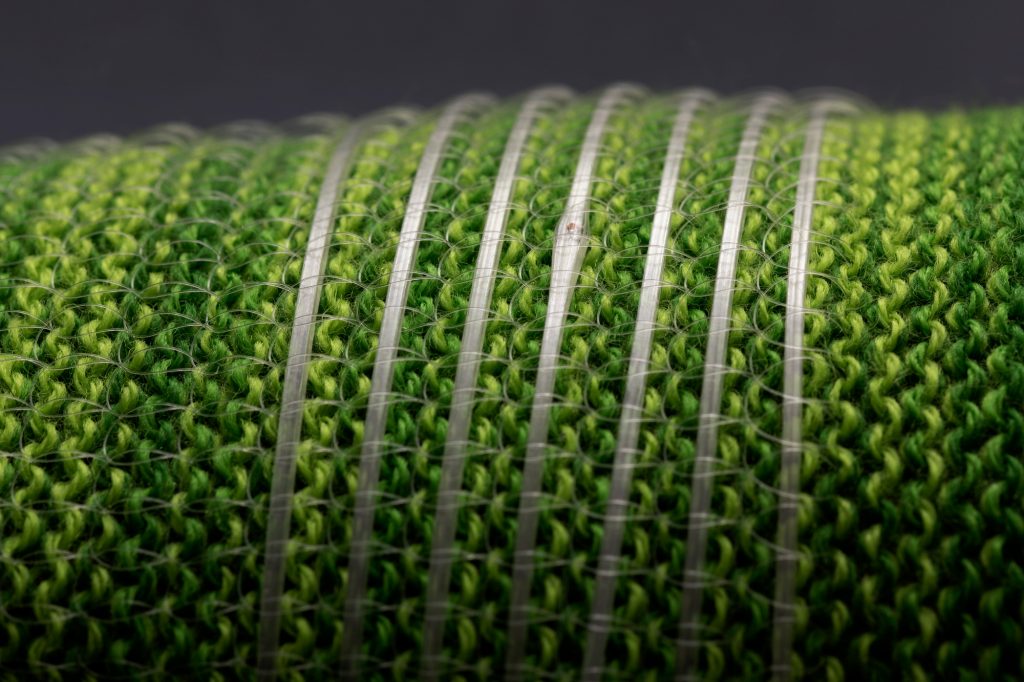

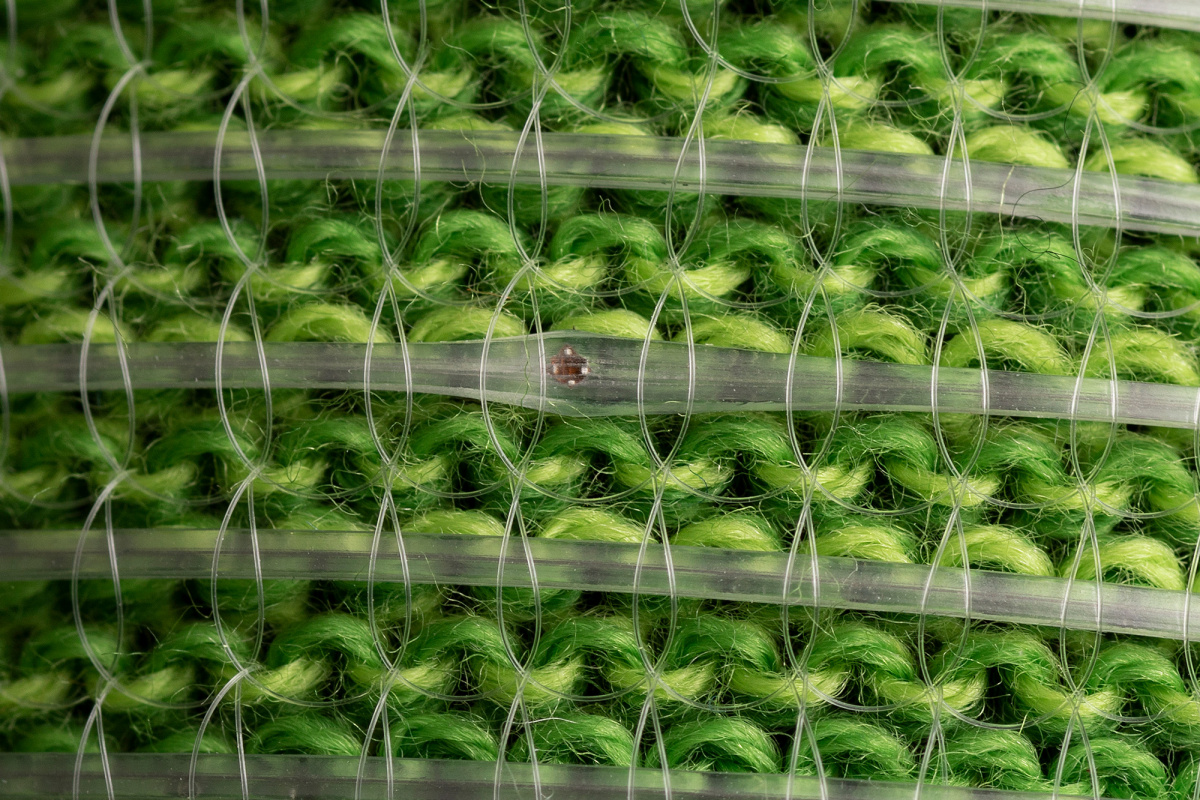

The new fiber was created by placing hundreds of square silicon microscale digital chips into a preform that was then used to create a polymer fiber. By precisely controlling the polymer flow, the researchers were able to create a fiber with continuous electrical connection between the chips over a length of tens of meters.

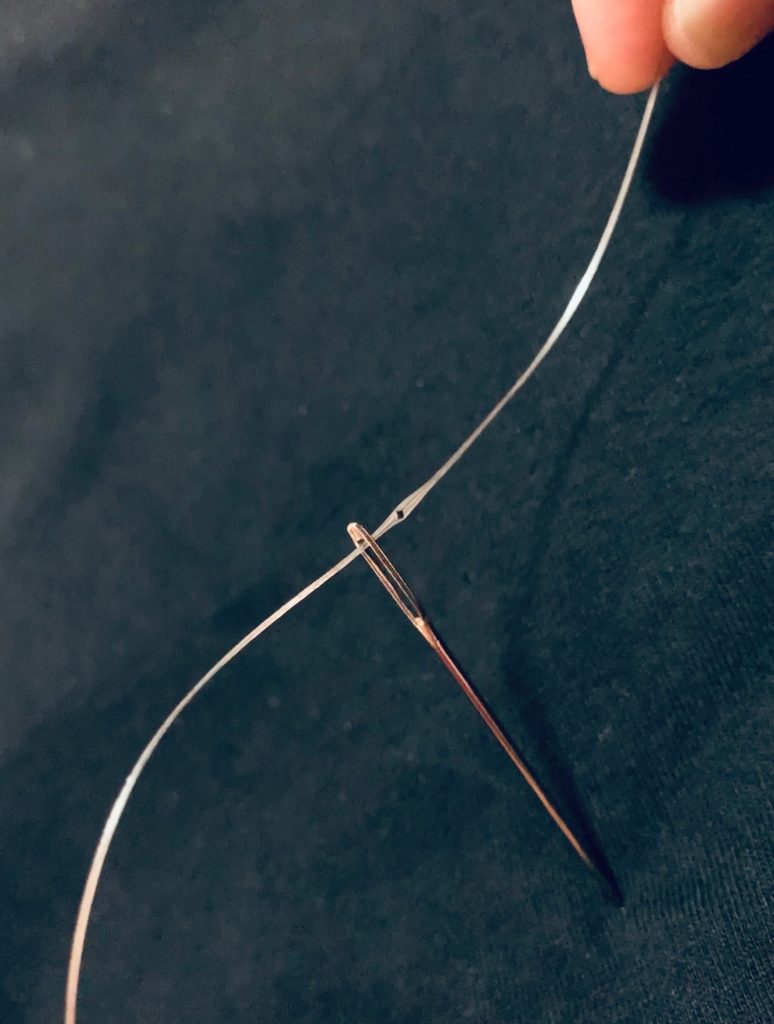

The fiber itself is thin and flexible and can be passed through a needle, sewn into fabrics, and washed at least 10 times without breaking down. According to Loke, “When you put it into a shirt, you can’t feel it at all. You wouldn’t know it was there.”

Making a digital fiber “opens up different areas of opportunities and actually solves some of the problems of functional fibers,” he says.

For instance, it offers a way to control individual elements within a fiber, from one point at the fiber’s end. “You can think of our fiber as a corridor, and the elements are like rooms, and they each have their own unique digital room numbers,” Loke explains. The research team devised a digital addressing method that allows them to “switch on” the functionality of one element without turning on all the elements.
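
Loke's corridor analogy can be illustrated in software as a shared line that carries every command to every element, with each element acting only on commands bearing its own address. This is a minimal sketch under stated assumptions: the article does not describe the actual addressing circuitry, and the class names, integer addresses, and broadcast-and-match scheme below are hypothetical illustrations of the general idea, not the researchers' method.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """One in-fiber element (hypothetical model) with its own 'room number'."""
    address: int
    active: bool = False


@dataclass
class DigitalFiber:
    """Hypothetical model: all elements share one line driven from the fiber's end."""
    elements: list[Element] = field(default_factory=list)

    def broadcast(self, target: int, on: bool) -> None:
        # Every element sees the same command on the shared line,
        # but only the element whose address matches acts on it.
        for element in self.elements:
            if element.address == target:
                element.active = on


fiber = DigitalFiber([Element(address=a) for a in range(8)])
fiber.broadcast(target=5, on=True)
print([e.address for e in fiber.elements if e.active])  # [5]
```

The point of the design is that a single connection at one end of the fiber suffices to switch on any one element, instead of needing a separate wire per element.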

A digital fiber can also store a lot of information in memory. The researchers were able to write, store, and read information on the fiber, including a 767-kilobit full-color short movie file and a 0.48-megabyte music file. The files can be stored for two months without power.
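
Because the two file sizes are quoted in different units (kilobits and megabytes), a small conversion helps compare them. This sketch assumes decimal (SI) prefixes, so 1 kilobit = 1,000 bits and 1 megabyte = 1,000,000 bytes; with binary prefixes the numbers would differ slightly.

```python
# Put both reported file sizes in bytes, assuming decimal (SI) prefixes.
BITS_PER_BYTE = 8

movie_bits = 767 * 1_000                    # 767-kilobit movie file
movie_bytes = movie_bits // BITS_PER_BYTE   # exact: 767,000 is divisible by 8
music_bytes = 480_000                       # 0.48 megabyte = 480,000 bytes

total_bytes = movie_bytes + music_bytes
print(f"movie: {movie_bytes} bytes, music: {music_bytes} bytes, "
      f"total: {total_bytes} bytes (~{total_bytes / 1_000_000:.2f} MB)")
# movie: 95875 bytes, music: 480000 bytes, total: 575875 bytes (~0.58 MB)
```

Under this assumption, the music file is roughly five times larger than the movie file.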

When they were dreaming up “crazy ideas” for the fiber, Loke says, they thought about applications like a wedding gown that would store digital wedding music within the weave of its fabric, or even writing the story of the fiber’s creation into its components.

Fink notes that the research at MIT was in close collaboration with the textile department at RISD led by Missakian. Gitelson-Kahn incorporated the digital fibers into a knitted garment sleeve, thus paving the way to creating the first digital garment.

On-body artificial intelligence

The fiber also takes a few steps forward into artificial intelligence by including, within the fiber memory, a neural network of 1,650 connections. After sewing it around the armpit of a shirt, the researchers used the fiber to collect 270 minutes of surface body temperature data from a person wearing the shirt, and analyze how these data corresponded to different physical activities. Trained on these data, the fiber was able to determine with 96 percent accuracy what activity the person wearing it was engaged in.
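
The article gives the network's size (1,650 connections) but not its architecture. To give a sense of scale, here is a minimal sketch of a fully connected network with exactly 1,650 weights, under assumed layer sizes of 30 inputs (for example, a window of 30 temperature readings), 50 hidden units, and 3 output classes: 30 × 50 + 50 × 3 = 1,650. The layer sizes, activity labels, and synthetic readings are hypothetical; biases are omitted so the count stays at 1,650, and the weights are random and untrained. It shows only the inference step, not the training on 270 minutes of data or the reported 96 percent accuracy.

```python
import math
import random

# Hypothetical layer sizes, chosen only so the weight count equals 1,650:
# 30 * 50 + 50 * 3 = 1,500 + 150 = 1,650 connections (biases omitted).
N_IN, N_HIDDEN, N_OUT = 30, 50, 3
ACTIVITIES = ["activity_a", "activity_b", "activity_c"]  # placeholder labels


def init_weights(rows: int, cols: int, rng: random.Random) -> list[list[float]]:
    # Small random weights; a real model would be trained on labeled data.
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def count_connections(layers: list[list[list[float]]]) -> int:
    # Each weight links one neuron to one neuron in the next layer.
    return sum(len(w) * len(w[0]) for w in layers)


def forward(x: list[float], w1: list[list[float]], w2: list[list[float]]) -> list[float]:
    # Hidden layer with ReLU activation.
    hidden = [max(0.0, sum(xi * w1[i][j] for i, xi in enumerate(x))) for j in range(N_HIDDEN)]
    # Output logits, then a numerically stable softmax over the activity classes.
    logits = [sum(h * w2[j][k] for j, h in enumerate(hidden)) for k in range(N_OUT)]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
w1 = init_weights(N_IN, N_HIDDEN, rng)   # 30 x 50
w2 = init_weights(N_HIDDEN, N_OUT, rng)  # 50 x 3
print(count_connections([w1, w2]))  # 1650

# One window of 30 synthetic skin-temperature readings (degrees C).
window = [33.0 + 0.05 * rng.random() for _ in range(N_IN)]
probs = forward(window, w1, w2)
print(ACTIVITIES[probs.index(max(probs))], [round(p, 3) for p in probs])
```

A network this small needs only a few kilobytes of weights, which is consistent with the idea of storing the model within the fiber's own memory.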

Adding an AI component to the fiber further increases its possibilities, the researchers say. Fabrics with digital components can collect a lot of information across the body over time, and these “lush data” are perfect for machine learning algorithms, Loke says.

“This type of fabric could give quantity and quality open-source data for extracting out new body patterns that we did not know about before,” he says.

With this analytic power, the fibers could someday sense health changes such as a respiratory decline or an irregular heartbeat and alert people in real time, or deliver muscle activation or heart rate data to athletes during training.

The fiber is controlled by a small external device, so the next step will be to design a new chip as a microcontroller that can be connected within the fiber itself.

“When we can do that, we can call it a fiber computer,” Loke says.

This research was supported by the U.S. Army Institute of Soldier Nanotechnology, National Science Foundation, the U.S. Army Research Office, the MIT Sea Grant, and the Defense Threat Reduction Agency.