Maximum Entropy RL (Provably) Solves Some Robust RL Problems

By Ben Eysenbach

Nearly all real-world applications of reinforcement learning involve some degree of shift between the training environment and the testing environment. However, prior work has observed that even small shifts in the environment cause most RL algorithms to perform markedly worse. As we aim to scale reinforcement learning algorithms and apply them in the real world, it is increasingly important to learn policies that are robust to changes in the environment.

Robust reinforcement learning maximizes reward on an adversarially-chosen environment.

Broadly, prior approaches to handling distribution shift in RL aim to maximize performance in either the average case or the worst case. The first set of approaches, such as domain randomization, trains a policy on a distribution of environments and optimizes the average performance of the policy on these environments. While these methods have been successfully applied to a number of areas (e.g., self-driving cars, robot locomotion and manipulation), their success rests critically on the design of the distribution of environments. Moreover, policies that do well on average are not guaranteed to get high reward on every environment: the policy that gets the highest reward on average might get very low reward on a small fraction of environments.

The second set of approaches, typically referred to as robust RL, focuses on the worst-case scenarios. The aim is to find a policy that gets high reward on every environment within some set. Robust RL can equivalently be viewed as a two-player game between the policy and an environment adversary. The policy tries to get high reward, while the environment adversary tries to tweak the dynamics and reward function of the environment so that the policy gets lower reward. One important property of the robust approach is that, unlike domain randomization, it is invariant to the ratio of easy and hard tasks. Whereas robust RL always evaluates a policy on the most challenging tasks, domain randomization will predict that the policy is better if it is evaluated on a distribution of environments with more easy tasks.
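The contrast between the two objectives, and the invariance of the worst case to the mix of easy and hard tasks, can be seen in a few lines of Python. This is a toy sketch with made-up per-environment returns for one fixed policy; the values and the helper names `make_env_returns`, `average_case` and `worst_case` are hypothetical and not from the paper.

```python
from statistics import mean

# Hypothetical per-environment returns for a single fixed policy.
# These numbers are illustrative only; they are not from the paper.
EASY_RETURN = 10.0
HARD_RETURN = 1.0


def average_case(returns):
    """Domain-randomization-style score: mean return over the environments."""
    return mean(returns)


def worst_case(returns):
    """Robust-RL-style score: return on the hardest environment in the set."""
    return min(returns)


def make_env_returns(num_easy, num_hard):
    """Returns the policy would get on a pool of easy and hard environments."""
    return [EASY_RETURN] * num_easy + [HARD_RETURN] * num_hard


balanced = make_env_returns(num_easy=5, num_hard=5)
mostly_easy = make_env_returns(num_easy=9, num_hard=1)

for name, pool in [("balanced", balanced), ("mostly easy", mostly_easy)]:
    print(f"{name:12s} average={average_case(pool):.1f} worst={worst_case(pool):.1f}")
```

Adding easy environments raises the average-case score from 5.5 to 9.1, while the worst-case score stays at 1.0: the same policy looks better under domain randomization simply because the task mix changed.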

Prior work has suggested a number of algorithms for solving robust RL problems. Generally, these algorithms all follow the same recipe: take an existing RL algorithm and add some additional machinery on top to make it robust. For example, robust value iteration uses Q-learning as the base RL algorithm, and modifies the Bellman update by solving a convex optimization problem in the inner loop of each Bellman backup. Similarly, Pinto ‘17 uses TRPO as the base RL algorithm and periodically updates the environment based on the behavior of the current policy. These prior approaches are often difficult to implement and, even once implemented correctly, they require tuning of many additional hyperparameters. Might there be a simpler approach, one that does not require additional hyperparameters and additional lines of code to debug?
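To make the "additional machinery" concrete, here is a minimal robust value iteration sketch in Python with NumPy. It assumes a small tabular MDP and, as a simplification, replaces the convex optimization in the inner loop with a minimum over a finite list of candidate transition models. The function name, array layout, and toy two-state MDP are our own illustrative assumptions, not the formulation of any specific paper.

```python
import numpy as np


def robust_value_iteration(rewards, candidate_dynamics, gamma=0.9, num_iters=500):
    """Tabular robust value iteration over a finite uncertainty set (sketch).

    rewards: array (S, A) with r(s, a).
    candidate_dynamics: array (K, S, A, S); entry [k, s, a] is model k's
        distribution over next states. The adversary may pick any model,
        separately for each (s, a) pair.
    """
    num_states, num_actions = rewards.shape
    values = np.zeros(num_states)
    q_values = np.zeros((num_states, num_actions))
    for _ in range(num_iters):
        # Expected next-state value under every candidate model: shape (K, S, A).
        next_values = candidate_dynamics @ values
        # Inner step: the adversary picks the worst model for each (s, a).
        worst_next = next_values.min(axis=0)
        # Bellman backup against the adversarial model.
        q_values = rewards + gamma * worst_next
        # Outer step: the policy picks the best action, as in Q-learning.
        values = q_values.max(axis=1)
    return values, q_values


# Toy MDP (hypothetical): state 0 is the start, state 1 is absorbing with zero reward.
# Action 0 is "safe" (reward 1), action 1 is "risky" (reward 2).
rewards = np.array([[1.0, 2.0], [0.0, 0.0]])
nominal = np.zeros((2, 2, 2))
nominal[0, :, 0] = 1.0  # under the nominal model, both actions stay in state 0
nominal[1, :, 1] = 1.0  # state 1 is absorbing
perturbed = nominal.copy()
perturbed[0, 1] = [0.0, 1.0]  # the adversary can send the risky action to state 1

v_nominal, q_nominal = robust_value_iteration(rewards, nominal[None])
v_robust, q_robust = robust_value_iteration(rewards, np.stack([nominal, perturbed]))
print("nominal:", v_nominal.round(2), "best action in state 0:", q_nominal[0].argmax())
print("robust: ", v_robust.round(2), "best action in state 0:", q_robust[0].argmax())
```

On this toy MDP the nominal planner prefers the risky action (value 20 in state 0), while the robust planner, which assumes the adversary may divert the risky action into the absorbing zero-reward state, prefers the safe action (value 10). Even in this stripped-down form, the robust variant needs an explicit uncertainty set and an extra inner minimization on top of the base algorithm.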

To answer this question, we are going to focus on a type of RL algorithm known as maximum entropy RL, or MaxEnt RL for short (Todorov ‘06, Rawlik ‘08, Ziebart ‘10). MaxEnt RL is a slight variant of standard RL that aims to learn a policy that gets high reward while acting as randomly as possible; formally, MaxEnt RL maximizes the expected reward plus the entropy of the policy. Some prior work has observed empirically that MaxEnt RL algorithms appear to be robust to some disturbances in the environment. However, to the best of our knowledge, no prior work has actually proven that MaxEnt RL is robust to environmental disturbances.
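For a single decision step, the entropy-regularized objective can be written out directly. The sketch below is a one-step (bandit) simplification assumed for illustration; full MaxEnt RL sums reward and entropy over every step of a trajectory. The temperature `alpha` weighting the entropy term and the reward values are hypothetical. A standard property of this one-step objective is that its maximizer is the softmax policy $\pi(a) \propto \exp(r(a)/\alpha)$, which keeps probability on every action rather than committing to the single best one.

```python
import numpy as np


def entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    probs = np.asarray(probs, dtype=float)
    nonzero = probs[probs > 0]  # 0 * log 0 is treated as 0
    return float(-(nonzero * np.log(nonzero)).sum())


def maxent_objective(probs, rewards, alpha=1.0):
    """One-step MaxEnt objective: expected reward plus alpha * policy entropy."""
    return float(np.dot(probs, rewards)) + alpha * entropy(probs)


def soft_optimal_policy(rewards, alpha=1.0):
    """Maximizer of the one-step objective: pi(a) proportional to exp(r(a) / alpha)."""
    logits = np.asarray(rewards, dtype=float) / alpha
    logits -= logits.max()  # subtract the max for numerical stability
    weights = np.exp(logits)
    return weights / weights.sum()


rewards = np.array([1.0, 0.9, 0.5])  # hypothetical rewards for three actions
greedy = np.array([1.0, 0.0, 0.0])   # deterministic policy, as in standard RL
soft = soft_optimal_policy(rewards, alpha=0.5)
print("greedy:", maxent_objective(greedy, rewards, alpha=0.5))
print("soft:  ", maxent_objective(soft, rewards, alpha=0.5))
```

The greedy policy scores exactly 1.0 (its entropy is zero), while the softmax policy scores about 1.39 even though its expected reward is lower: the objective explicitly pays the policy for acting randomly.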

In a recent paper, we prove that every MaxEnt RL problem corresponds to maximizing a lower bound on a robust RL problem. Thus, when you run MaxEnt RL, you are implicitly solving a robust RL problem. Our analysis provides a theoretically-justified explanation for the empirical robustness of MaxEnt RL, and proves that MaxEnt RL is itself a robust RL algorithm. In the rest of this post, we’ll provide some intuition into why MaxEnt RL should be robust and what sort of perturbations MaxEnt RL is robust to. We’ll also show some experiments demonstrating the robustness of MaxEnt RL.

Intuition

So, why would we expect MaxEnt RL to be robust to disturbances in the environment? Recall that MaxEnt RL trains policies to not only maximize reward, but to do so while acting as randomly as possible. In essence, the policy itself is injecting as much noise as possible into the environment, so it gets to “practice” recovering from disturbances. Thus, if the change in dynamics appears like just a disturbance in the original environment, our policy has already been trained on such data. Another way of viewing MaxEnt RL is as learning many different ways of solving the task (Kappen ‘05). For example, let’s look at the task shown in the videos below: we want the robot to push the white object to the green region. The top two videos show that standard RL always takes the shortest path to the goal, whereas MaxEnt RL takes many different paths to the goal. Now, let’s imagine that we add a new obstacle (red blocks) that wasn’t included during training. As shown in the videos in the bottom row, the policy learned by standard RL almost always collides with the obstacle, rarely reaching the goal. In contrast, the MaxEnt RL policy often chooses routes around the obstacle, continuing to reach the goal for a large fraction of trials.
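The "many ways of solving the task" view can be caricatured with three routes to the goal. In this sketch the route returns, the temperature `alpha`, and the assumption that a blocked route simply fails (no replanning) are all hypothetical; it only illustrates why spreading probability across routes helps when an unseen obstacle blocks the shortest one.

```python
import numpy as np

# Hypothetical returns for three routes that push the object to the goal:
# route 0 is the shortest path, routes 1 and 2 are slightly longer detours.
route_returns = np.array([1.0, 0.8, 0.7])


def greedy_policy(returns):
    """Standard RL limit: all probability on the single best route."""
    probs = np.zeros_like(returns)
    probs[np.argmax(returns)] = 1.0
    return probs


def maxent_policy(returns, alpha):
    """MaxEnt-style policy: pi(route) proportional to exp(return / alpha)."""
    logits = returns / alpha
    weights = np.exp(logits - logits.max())
    return weights / weights.sum()


def success_rate(probs, blocked_routes):
    """Probability of picking a route that an unseen obstacle does not block."""
    open_routes = np.ones(len(probs), dtype=bool)
    open_routes[list(blocked_routes)] = False
    return float(probs[open_routes].sum())


blocked = {0}  # a new obstacle blocks the shortest path at test time
for name, probs in [("standard", greedy_policy(route_returns)),
                    ("maxent", maxent_policy(route_returns, alpha=0.2))]:
    print(name, np.round(probs, 3), "success:", round(success_rate(probs, blocked), 3))
```

The greedy policy puts all of its mass on the blocked shortest path and never succeeds, while the MaxEnt-style policy still reaches the goal whenever it samples one of the detours (roughly 37% of the time with these made-up numbers).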

Videos: Standard RL (left) and MaxEnt RL (right). Top row: trained and evaluated without the obstacle. Bottom row: trained without the obstacle, but evaluated with the obstacle.

Theory

We now formally describe the technical results from the paper. The aim here is not to provide a full proof (see the paper Appendix for that), but instead to build some intuition for what the technical results say. Our main result is that, when you apply MaxEnt RL with some reward function and some dynamics, you are actually maximizing a lower bound on the robust RL objective. To explain this result, we must first define the MaxEnt RL objective: $J_{MaxEnt}(\pi; p, r)$ is the entropy-regularized cumulative return of policy $\pi$ when evaluated using dynamics $p(s' \mid s, a)$ and reward function $r(s, a)$. While we will train the policy using one dynamics $p$, we will evaluate the policy on a different dynamics, $\tilde{p}(s' \mid s, a)$, chosen by the adversary. We can now formally state our main result as follows:

The left-hand side is the robust RL objective. It says that the adversary gets to choose whichever dynamics function $\tilde{p}(s' \mid s, a)$ makes our policy perform as poorly as possible, subject to some constraints (as specified by the set $\tilde{\mathcal{P}}$). On the right-hand side we have the MaxEnt RL objective (note that $\log T$ is a constant, and the function $\exp(\cdots)$ is always increasing). Thus, this inequality says that a policy that has a high entropy-regularized reward (right-hand side) is guaranteed to also get high reward when evaluated on adversarially chosen dynamics.

The most important part of this equation is the set $\tilde{\mathcal{P}}$ of dynamics that the adversary can choose from. Our analysis describes precisely how this set is constructed and shows that, if we want a policy to be robust to a larger set of disturbances, all we have to do is increase the weight on the entropy term and decrease the weight on the reward term. Intuitively, the adversary must choose dynamics that are “close” to the dynamics on which the policy was trained. For example, in the special case where the dynamics are linear-Gaussian, this set corresponds to all perturbations where the original expected next state and the perturbed expected next state have a Euclidean distance less than $\epsilon$.
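In the linear-Gaussian case, the condition described above can be checked numerically. The sketch assumes nominal dynamics $s' \sim \mathcal{N}(As + Ba, \Sigma)$ and perturbed dynamics that change $A$ and $B$ but keep the covariance $\Sigma$ fixed. It tests the distance between expected next states on a finite batch of sampled states and actions, which is our approximation of checking every $(s, a)$. The matrices, the batch, and the value of `epsilon` are hypothetical; the text above ties the size of this set to the weight on the entropy term, but we do not reproduce that relationship and simply treat $\epsilon$ as a free parameter.

```python
import numpy as np


def mean_shift(A, B, A_tilde, B_tilde, states, actions):
    """Euclidean distance between nominal and perturbed expected next states.

    states: (N, ds) array, actions: (N, da) array; returns N distances.
    Rows are individual (s, a) pairs, so A s is computed as states @ A.T.
    """
    nominal_mean = states @ A.T + actions @ B.T
    perturbed_mean = states @ A_tilde.T + actions @ B_tilde.T
    return np.linalg.norm(nominal_mean - perturbed_mean, axis=1)


def in_perturbation_set(A, B, A_tilde, B_tilde, states, actions, epsilon):
    """Batch approximation: every sampled (s, a) must shift the mean by < epsilon."""
    return bool(np.all(mean_shift(A, B, A_tilde, B_tilde, states, actions) < epsilon))


rng = np.random.default_rng(0)
A = np.eye(2)
B = 0.1 * np.eye(2)
states = rng.uniform(-1.0, 1.0, size=(100, 2))
actions = rng.uniform(-1.0, 1.0, size=(100, 2))

small_change = in_perturbation_set(A, B, A, B + 0.01 * np.eye(2), states, actions, epsilon=0.05)
large_change = in_perturbation_set(A, B, A, B + 0.5 * np.eye(2), states, actions, epsilon=0.05)
print(small_change, large_change)
```

A small change to `B` keeps every sampled mean within $\epsilon$ (the shift is at most $0.01\sqrt{2}$), so the adversary may choose it; a large change moves some means farther than $\epsilon$ and falls outside the set.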

More Experiments

Our analysis predicts that MaxEnt RL should be robust to many types of disturbances. The first set of videos in this post showed that MaxEnt RL is robust to static obstacles. MaxEnt RL is also robust to dynamic perturbations introduced in the middle of an episode. To demonstrate this, we took the same robotic pushing task and knocked the puck out of place in the middle of the episode. The videos below show that the policy learned by MaxEnt RL is better at handling these perturbations, as predicted by our analysis.

Videos: Standard RL (left) and MaxEnt RL (right). The policy learned by MaxEnt RL is robust to dynamic perturbations of the puck (red frames).

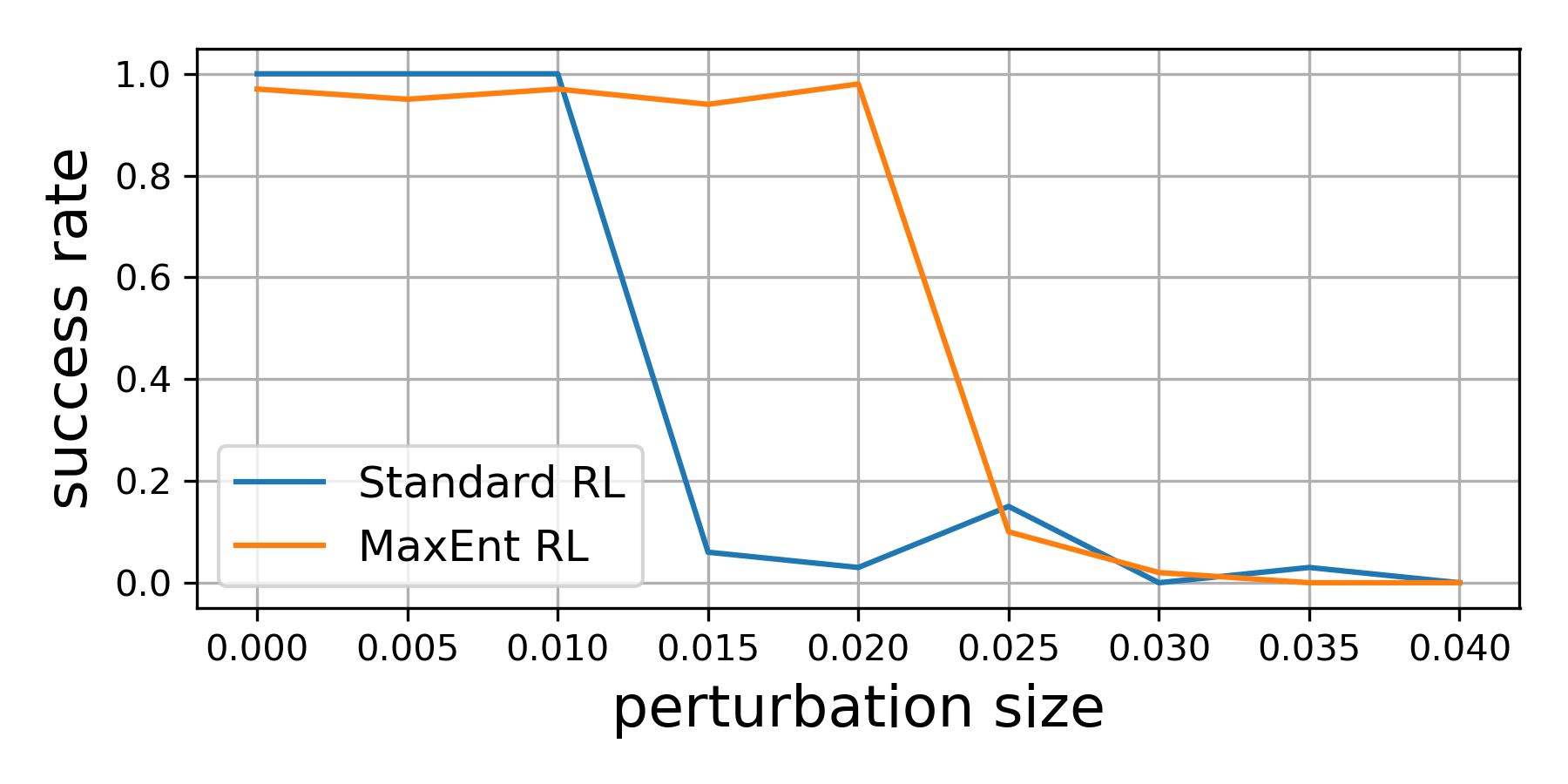

Our theoretical results suggest that, even if we optimize the environment perturbations so the agent does as poorly as possible, MaxEnt RL policies will still be robust. To demonstrate this capability, we trained both standard RL and MaxEnt RL on a peg insertion task shown below. During evaluation, we changed the position of the hole to try to make each policy fail. If we only moved the hole position a little bit ($\le$ 1 cm), both policies always solved the task. However, if we moved the hole position by up to 2 cm, the policy learned by standard RL almost never succeeded in inserting the peg, while the MaxEnt RL policy succeeded in 95% of trials. This experiment validates our theoretical finding that MaxEnt RL really is robust to (bounded) adversarial disturbances in the environment.

Videos: Standard RL (left) and MaxEnt RL (right), evaluated on adversarial perturbations. MaxEnt RL is robust to adversarial perturbations of the hole (where the robot inserts the peg).

Conclusion

In summary, our paper shows that a commonly-used type of RL algorithm, MaxEnt RL, is already solving a robust RL problem. We do not claim that MaxEnt RL will outperform purpose-designed robust RL algorithms. However, the striking simplicity of MaxEnt RL compared with other robust RL algorithms suggests that it may be an appealing alternative to practitioners hoping to equip their RL policies with an ounce of robustness.

Acknowledgements

Thanks to Gokul Swamy, Diba Ghosh, Colin Li, and Sergey Levine for feedback on drafts of this post, and to Chloe Hsu and Daniel Seita for help with the blog.

This post is based on the following paper:

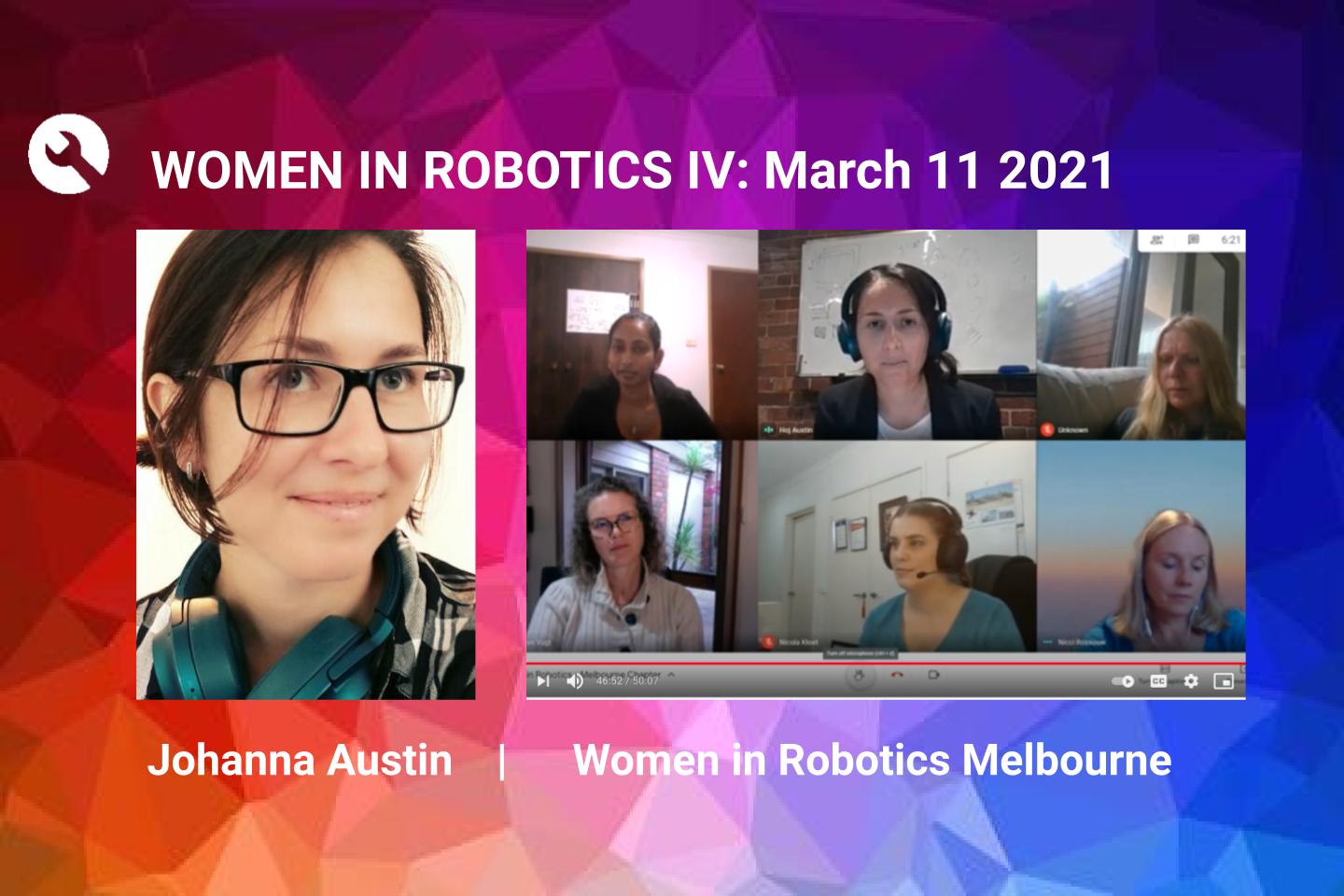

Eight lessons for robotics startups from NRI PI workshop

Research is all about being the first, but commercialization is all about repeatability, not just many times but every single time. This was one of the key takeaways from the Transitioning Research From Academia to Industry panel during the National Robotics Initiative Foundational Research in Robotics PI Meeting on March 10, 2021. I had the pleasure of moderating a discussion between Lael Odhner, Co-Founder of RightHand Robotics, Andrea Thomaz, Co-Founder/CEO of Diligent Robotics and Assoc Prof at UTexas Austin, and Kel Guerin, Co-Founder/CIO of READY Robotics.

RightHand Robotics, Diligent Robotics and READY Robotics are young robotics startups that have all transitioned from the ICorps program and SBIR grant funding to become venture-backed companies. RightHand Robotics was founded in 2014 and is a Boston-based company that specializes in robotic manipulation. It was spun out of work performed for the DARPA Autonomous Robotics Manipulation program and has since raised more than $34.3 million from investors that include Maniv Mobility, Playground and Menlo Ventures.

Diligent Robotics is based in Austin, where it designs and builds robots like Moxi that assist clinical staff with routine activities so they can focus on caring for patients. Diligent Robotics is the youngest of the three startups; it was founded in 2017 and has raised $15.8 million so far from investors that include True Ventures and Ubiquity Ventures. Andrea Thomaz maintains her position at UTexas Austin but has taken leave to focus on Diligent Robotics.

READY Robotics creates unique solutions that remove the barriers faced by small manufacturers when adopting robotic automation. Founded in 2016 and headquartered in Columbus, Ohio, the company has raised more than $41.8 million from investors that include Drive Capital and Canaan Capital. READY Robotics enables manufacturers to deploy robots to the factory floor more easily through a patented technology platform that combines a very easy-to-use programming interface and plug’n’play hardware. This enables small- to medium-sized manufacturers to be more competitive through the use of industrial robots.

The conversation can be summarized into eight key takeaways for startups:

- Research is primarily involved in developing a prototype (works once), whereas commercialization requires a product (works every time). Robustness and reliability are essential features of whatever you build.

- The customer development focus of the ICorps program speeds up the commercialization process, by forcing you into the field to talk face to face with potential customers and deeply explore their issues.

- Don’t lead with the robot! Get comfortable talking to people and learn to speak the language your customers use. Your job is to solve their problem, not persuade them to use your technology.

- The faster you can deeply embed yourself with your first customers, the faster you gain the critical knowledge that lets you distinguish your product’s essential features, the ones the majority of your customers will need, from the merely ‘nice to have’ features or ‘one off’ ideas that can be misdirection.

- Team building is your biggest challenge, as many roles you will need to hire for are outside of your own experience. Conduct preparatory interviews with experts in areas that you don’t know, so that you learn what real expertise looks like, what questions to ask and what skill sets to look for.

- There is a shortage of robotics skill sets in the marketplace, so learn to look for transferable skills from other disciplines.

- It is actually easy to get to ‘yes’; the real trick is knowing when to say ‘no’. In other words, don’t create or agree to bad contracts or term sheets just for the sake of getting an agreement, rationalizing them as a ‘loss leader’. Focus on the agreements that make repeatable business sense for your company.

- Utilize the resources of your university, the accelerators, alumni funds, tech transfer departments, laboratories, experts and testing facilities.

For robotics startups that don’t have immediate access to universities, robotics clusters can provide similar assistance. These range from large clusters like RoboValley in Odense, MassRobotics in Boston and Silicon Valley Robotics, which have startup programs, space and prototyping equipment, to smaller robotics clusters that can still provide a connection point to other resources.

#330: Construction Site Automation by Dusty Robotics, with Tessa Lau

Abate interviews Tessa Lau about her startup, Dusty Robotics, which is innovating in the field of construction.

At Dusty Robotics, the team developed a robot that automates laying out floor plans on the floors of construction sites. Typically, this is done manually using a tape measure and printed plans. This difficult task can often take a team of two a week to complete. Time-consuming tasks like this are incredibly expensive on a construction site, where multiple different teams are waiting for the task to be completed, and any errors in the process are even more time-consuming to fix. By using a robot to automatically convert 3D models of building plans into markings on the floor, both the time required and the number of errors are dramatically reduced.

Dr. Tessa Lau

Dr. Tessa Lau is an experienced entrepreneur with expertise in AI, machine learning, and robotics. She is currently Founder/CEO at Dusty Robotics, a construction robotics company building robot-powered tools for the modern construction workforce. Prior to Dusty, she was CTO/co-founder at Savioke, where she orchestrated the deployment of 75+ delivery robots into hotels and high-rises. Previously, Dr. Lau was a Research Scientist at Willow Garage, where she developed simple interfaces for personal robots. She also spent 11 years at IBM Research working in business process automation and knowledge capture. More generally, Dr. Lau is interested in technology that gives people super-powers, and building businesses that bring that technology into people’s lives. Dr. Lau was recognized as one of the Top 5 Innovative Women to Watch in Robotics by Inc. in 2018 and one of Fast Company’s Most Creative People in 2015. Dr. Lau holds a PhD in Computer Science from the University of Washington.

Ethics of connected and automated vehicles

The European Commission has published a report by an independent group of experts on Ethics of Connected and Automated Vehicles (CAVs). This report advises on specific ethical issues raised by driverless mobility for road transport. The report aims to promote a safe and responsible transition to connected and automated vehicles by supporting stakeholders in the systematic inclusion of ethical considerations in the development and regulation of CAVs.

The report presents 20 ethical recommendations concerning the future development and use of CAVs based on ethical and legal principles. The recommendations are discussed in the context of three topic areas:

Road Safety, Risk, Dilemmas

Improvements in safety achieved by CAVs should be publicly demonstrable and monitored through solid and shared scientific methods and data; these improvements should be achieved in compliance with basic ethical and legal principles, such as a fair distribution of risk and the protection of basic rights, including those of vulnerable users; these same considerations should apply to dilemma scenarios.

Data and Algorithm Ethics: Privacy, Fairness, Explainability

The acquisition and processing of static and dynamic data by CAVs should safeguard basic privacy rights, should not create discrimination between users, and should happen via processes that are accessible and understandable to the subjects involved.

Responsibility

Considering who should be liable for paying compensation following a collision is not sufficient; it is also important to make different stakeholders willing, able and motivated to take responsibility for preventing undesirable outcomes and promoting societally beneficial outcomes of CAVs, that is, creating a culture of responsibility for CAVs.

Read the full report.