Automated mining inspection against the odds

Image from Rajant.

This comprehensive IM report describes how Rajant Corporation, PBE Group, and Australian Droid + Robot, as part of a mine safety mission backed by the U.S. Mine Safety and Health Administration (MSHA, U.S. Department of Labor), achieved a historic unmanned underground mine inspection at one of the largest underground room-and-pillar limestone operations in the US.

Using ten ADR Explora XL unmanned robots, a Rajant Kinetic Mesh wireless communication network below ground, and PBE hardware and technology, the team achieved a horizontal mobile infrastructure distance of 1.7 km. This allowed the robots to record high-definition video and LiDAR data, which were used to build a virtual 3D model of the mine for assessing its condition, making this the deepest remote underground mine inspection in history.

The inspection allowed MSHA to conclude within a very short time that it was safe to re-enter the operation and begin remediation. This included letting mine personnel back in to re-establish power and communications, after which mining quickly resumed at the site.

The project, in many ways, is the ultimate example of necessity breeding innovation. It also showcased the capability of Rajant wireless mesh networks underground to facilitate autonomous mining operations where underground Wi-Fi would not be up to the task.

Autonomously Transporting Crops

Suma Reddy, CEO of Future Acres, talks about her company, which is focused on creating smart farming tools that reduce labor demand and increase efficiency. Suma describes her path into agricultural technology and the problems in current farming practices that robotic solutions can address. Future Acres’ robotic harvest companion, Carry, autonomously transports crops within farms and collects valuable data. Suma also discusses the impact of the company's technology and her vision for the future of agricultural technology.

Suma Reddy is Co-founder and CEO of Future Acres, an AgTech startup building advanced mobility and AI solutions that help farms increase production efficiency, improve farmworker safety, and obtain real-time data and analytics. She is a three-time AgTech + ClimateTech founder (vertical farming, organic waste-to-energy, renewable energy), serves on the advisory board of Scale for ClimateTech, is a Board Member of GrainPro, and teaches Entrepreneurship for Sustainability and Resilience at the NYC School of Visual Arts. Suma is passionate about sustainable solutions and the disruptive technologies that will help move us toward a better environment and a more resilient world.

Czech Digital Innovation Hubs: who are they and how can they help?

Photo by RICAIP.eu

By Tereza Šamanová

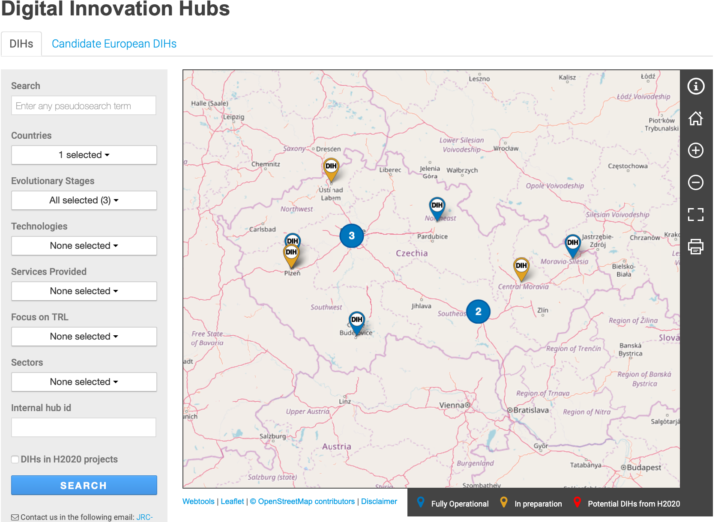

In the Czech Republic, the initial network of Digital Innovation Hubs (DIHs) in 2016 was very small. It included only two pioneer hubs that emerged from Horizon 2020 European projects: one supporting digital manufacturing (the first being DIGIMAT, located in Kuřim) and one focused on high-performance computing (the National Supercomputing Centre IT4Innovations in Ostrava, the first DIH registered in CZ). Since then, the Czech network has grown to twelve DIHs, with specialisations ranging from manufacturing, cybersecurity, artificial intelligence, robotics and high-performance computing to sectoral focuses such as agriculture and the food industry, health, and the development of smart cities and smart regions.

Today there is at least one DIH in every region of the Czech Republic:

Source: https://s3platform.jrc.ec.europa.eu

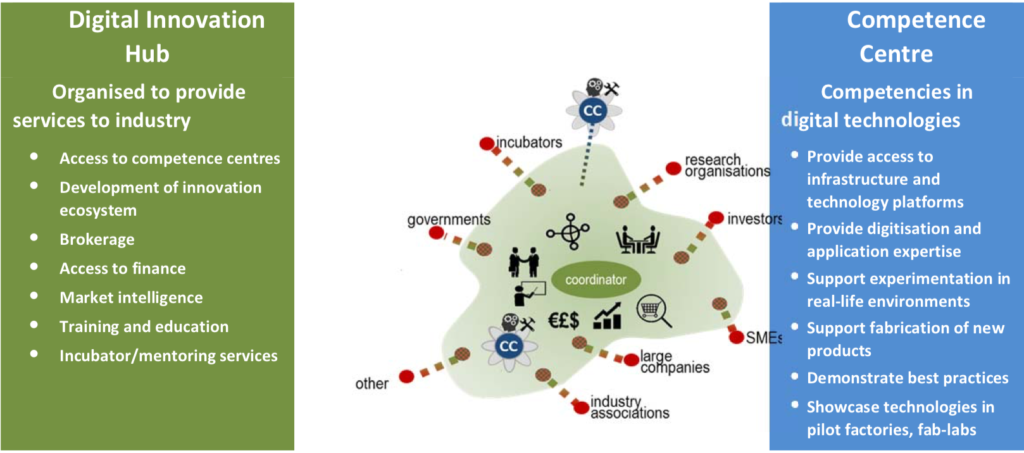

How are the Czech DIHs built and structured? They are often based on a leading competence centre, research organisation, technical university, science park, technology centre or one of the most active NGOs supporting innovation, which has assembled an efficient consortium of partners around itself, always in line with the chosen field of specialisation. The DIHs in Czechia, as elsewhere in Europe, were not built “on a green meadow”, as we say in Czech, but on a track record of projects, activities and shared references that together created the right mix of each DIH’s expertise and capacities. The scheme developed by TNO in 2015 illustrates the typical position of a DIH as an “orchestrator” and meeting point of regional activities focused on developing and implementing digital innovation:

Photo by DIH-HERO.EU

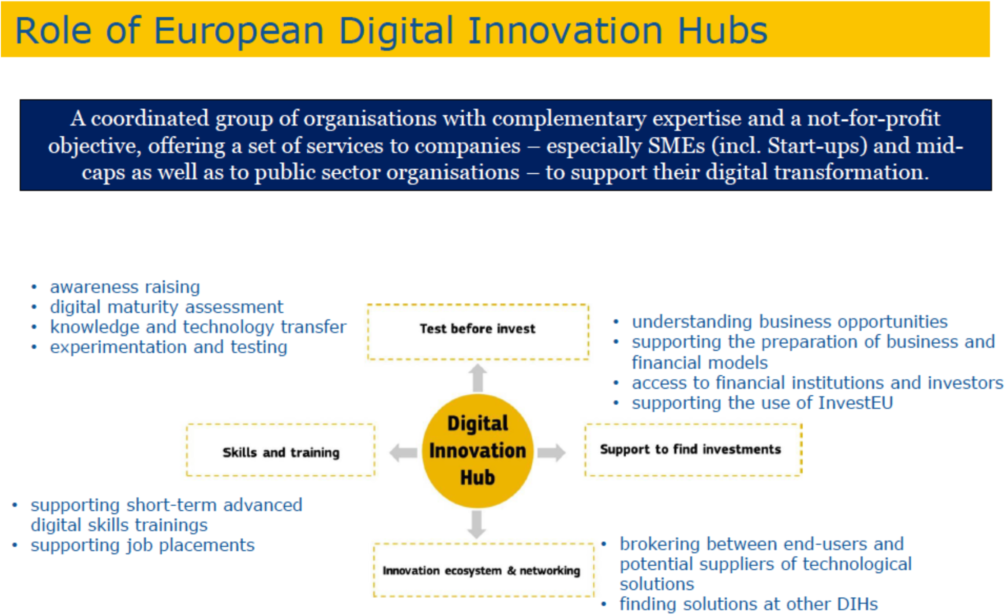

In 2021, under the Digital Europe Programme, an initial network of European Digital Innovation Hubs was selected from among the existing DIHs and other players. These are DIHs with European relevance: they serve not only clients from their own region or country, but also provide cross-border services in their field of specialisation.

The basic services of DIHs and European DIHs do not differ much; the principal difference lies in the European, cross-border nature of the latter's services. The mix of ingredients that together make up the services of a standard Digital Innovation Hub can be pictured as the “satellite” diagram published by the European Commission in 2019:

Source: https://digital-strategy.ec.europa.eu/en/activities/edihs

How do DIHs in the Czech Republic approach their mission of helping SMEs and public organisations with digitalisation?

- Explaining that advanced digitalisation means working with data and sophisticated interconnection of people, devices, and technologies, not simply converting paper-based or analogue data into digital form.

- Organising joint events (regional roadshows, awareness-raising activities and brainstorming sessions among Czech DIHs) to keep national-level information on advanced digitalisation up to date, and sharing best-practice examples.

- Sharing information about projects and recommending them to the appropriate beneficiaries with the objective of creating the most added value for the national innovative ecosystem.

- Encouraging traditional non-tech SMEs and smaller public organisations and communities (e.g., farmers and/or service providers) to communicate with DIHs, so that the DIHs can offer them a suitable starting plan for digitalisation.

- Bringing all new players (those interested in creating a DIH or cooperating with an existing one) into the Czech DIH community and the Central European Platform for Digital Innovations (CEEInno), and organising an annual overview of Czech Digital Innovation Hubs and regular meetings for Czech DIHs, always with the support of our Ministry of Industry and Trade as the governmental manager of DIHs.

Photo by CEEInno

How can Czech DIHs be useful to foreign partners, especially those working in robotics or interested in specific sectors such as agriculture?

- Identifying relevant partners for your project in the Czech Republic (CZ) and neighbouring countries. Foreign partners can cooperate not only with the Czech Digital Innovation Hubs, but also with DIHs and other players in the surrounding countries through our Central European Platform for Digital Innovations, CEEInno.

- Providing the full range of DIH services to clients from outside CZ as well; we will strengthen our capacity in the near future under the Digital Europe Programme and within the initial network of European Digital Innovation Hubs.

- Helping you contact Czech SMEs and public organisations through members and partner organisations of the CEEInno Platform.

- Organising, co-organising or hosting your project if you want to attract Czech participants.

Photo by RICAIP.EU

Relevant projects of the Czech DIHs focused on robotics and agro-robotics include:

- RICAIP (Research and Innovation Centre on Advanced Industrial Production), an international distributed research centre of excellence focused on research in robotics and artificial intelligence. The project brings together Czech and German partners and is hosted at CIIRC CTU and the National Centre for Industry 4.0, one of the Czech DIHs. Its aim is to strengthen research in industrial production and to connect robotic and AI testbeds in Prague, Brno and Saarbrücken.

- Smart Agri Hub CZ-SK (AgriHub made in CzechoSlovakia), which brings together eight Czech and Slovak partners in a joint project that aims to promote digital innovation in the agri-food domain and to facilitate the set-up and execution of Innovation Experiments (IEs) in the region. Activities such as innovation hackathons, cross-sector partner matchmaking and awareness-raising events will take place over the coming year, and the international community (not only from CZ and SK) is invited to participate as well.

If you would like to know more about the Czech digital innovation ecosystem, you can contact me, Tereza Šamanová (LinkedIn, email), DIHNET Ambassador and informal coordinator of the “Czech DIHs Community” on the DIHNET community platform.