Transducer powered by electrochemical reactions for operating fluid pumps in soft robots

A locally reactive controller to enhance visual teach and repeat systems

Frontmatec Food Handling with Stainless Steel ATI Tool Changers

Autonomous Vehicles for Operational Logistics with Evocargo

Oleg Shipitko, Chief Technical Director of Evocargo, an integrated logistics service company using autonomous vehicles, speaks with Kate. Oleg talks about the need to automate operational logistics inside enclosed facilities and how Evocargo's autonomous vehicles and other operational services can greatly improve the way goods are transported within facilities such as ports, warehouses and factories. Oleg also discusses the sensing choices for the specific use cases of these autonomous vehicles.

Oleg Shipitko

Oleg Shipitko is the Chief Technical Director of Evocargo. He has bachelor's and master's degrees in autonomous information and control systems (bachelor's: 4.96/5.0, master's: 5.0/5.0), and a PhD in Space Science and Technology with a focus on mathematical modeling, numerical methods and program complexes.

Oleg has received numerous awards, including Best Paper at the 32nd European Conference on Modeling and Simulation (ECMS-2018) for ‘Ground Vehicle Localization With Particle Filter Based On Simulated Road Marking Image’ and Best Paper at the IV International Conference on Information Technology and Nanotechnology (ITNT-2018) for ‘Gaussian filtering for FPGA based image processing with High-Level Synthesis tools’.

Links

- Evocargo

- Evocargo Youtube


Using gelatin and sugar as ink to print 3D soft robots

Novanta Partners with MassRobotics to Advance Next Generation of Robotics

Happy Birthday Nao!

NAO robot. Image credits: Stephen Chin on Flickr [CC BY 2.0]

Created by the French company ‘Aldebaran Robotics’ in 2008, and acquired by ‘Softbank Robotics Japan’ in 2015, NAO is an autonomous and programmable humanoid robot that has been successfully applied to research and development applications for children and adults. More than 13,000 NAO robots are used in more than 70 countries around the world. Pretty much every lab working in human-robot interaction research owns a NAO, making it the most widely used social robot in the history of the field.

In our paper ‘10 Years of Human-NAO Interaction Research: A Scoping Review’ published in Frontiers in Robotics and AI, we present an overview of the evolution of NAO’s technical capabilities. We also present the main results from a scoping review of the human-robot interaction research literature in which NAO was used.

Technical overview of Nao over the years

Appearance-wise, NAO hasn’t aged a bit. The childlike anthropomorphic appearance of the robot has stayed pretty much the same over the last 10 years. However, many improvements have been made across the successive versions of the robot.

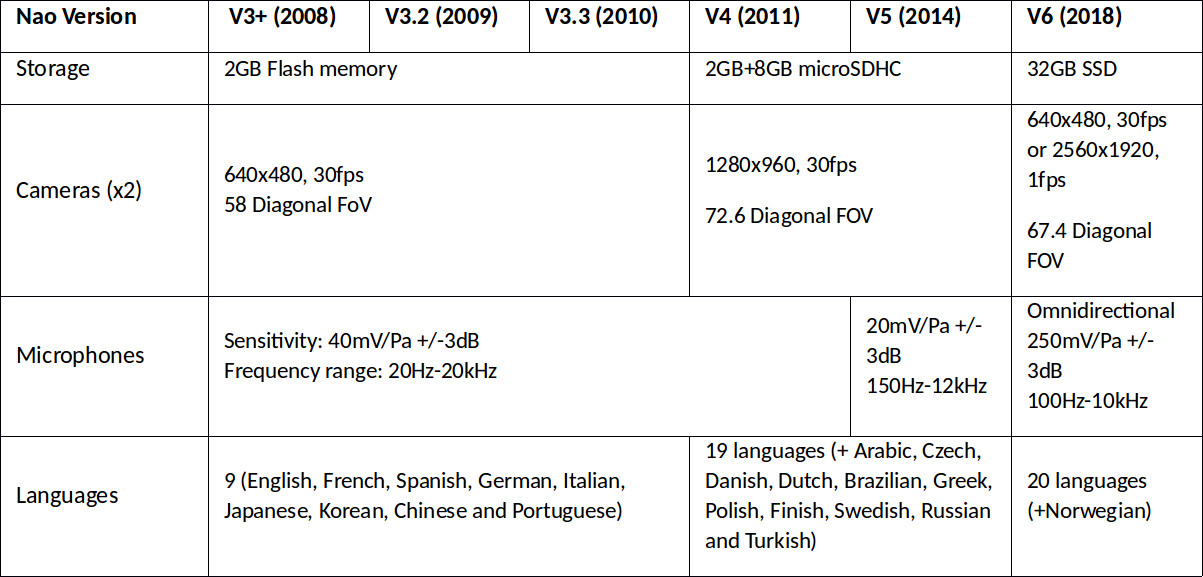

Evolution of the NAO robot’s technical characteristics since 2008

We also note that the robot’s software environment has improved over the years, integrating new technologies and features such as face recognition and tracking and a semantic engine. The NAOqi API also supports various programming languages. In 2013, Aldebaran created a developer community. This community platform allowed users to share resources and behaviours, and it featured a forum. It has probably contributed a lot to the expansion and adoption of the robot, with developers and researchers sharing behaviour designs and games they developed with the robot.

Quantitative analysis of the scoping review

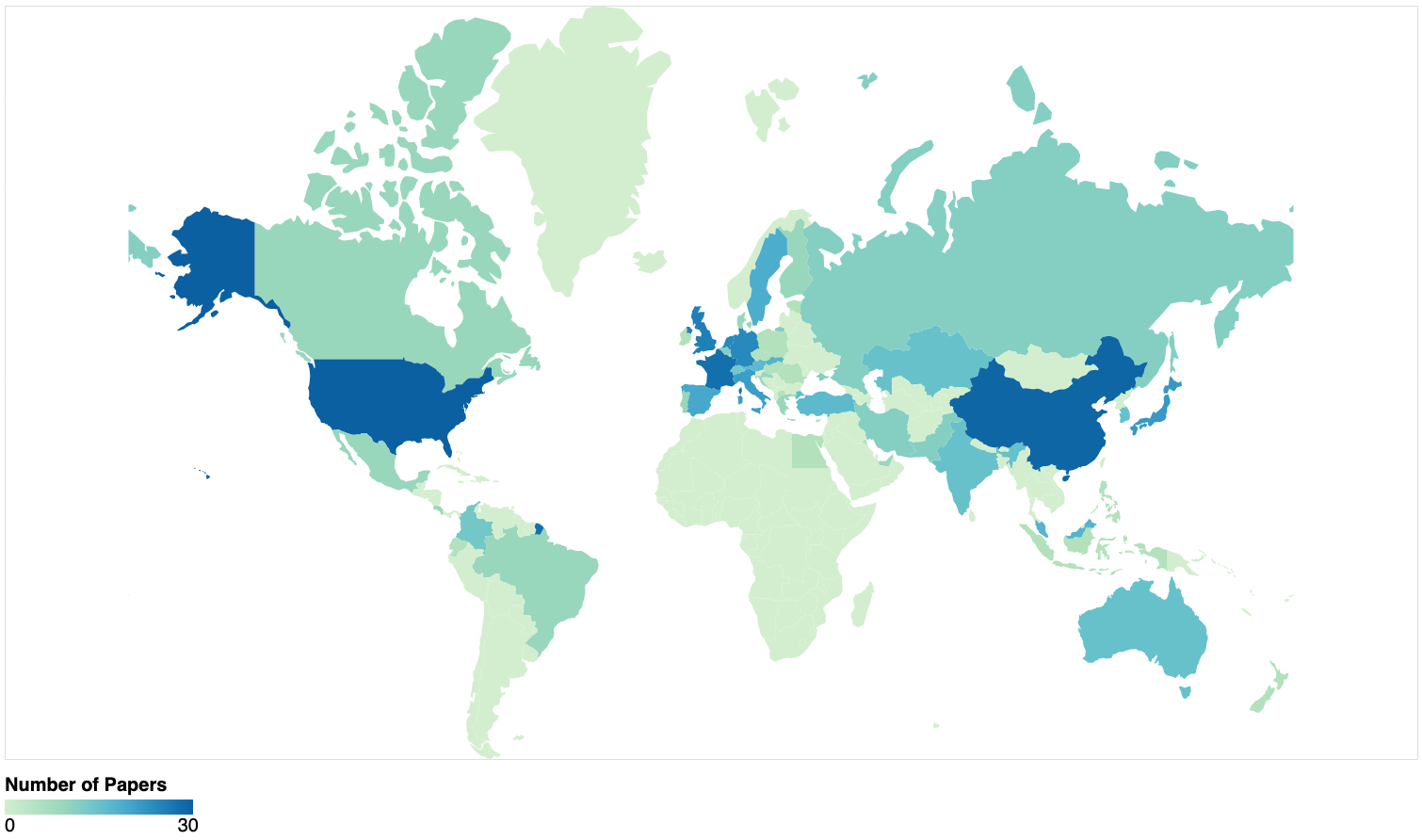

Geographical distribution of the papers included in our scoping review

The geographical distribution of authors using NAO for their research in HRI is unprecedented for a social robot. NAO has been used in more than 50 countries all around the world, and the map above shows that the distribution is well spread across North America, Europe and Asia. Still, as in many research fields, the global north tends to publish more than southern countries.

In terms of the robot’s role, researchers have investigated different application domains. The dominant ones are the robot as a peer or a demonstrator in public spaces, as an educational tool, and as an assistant for therapists (for children with ASD, learning disabilities or hearing disabilities).

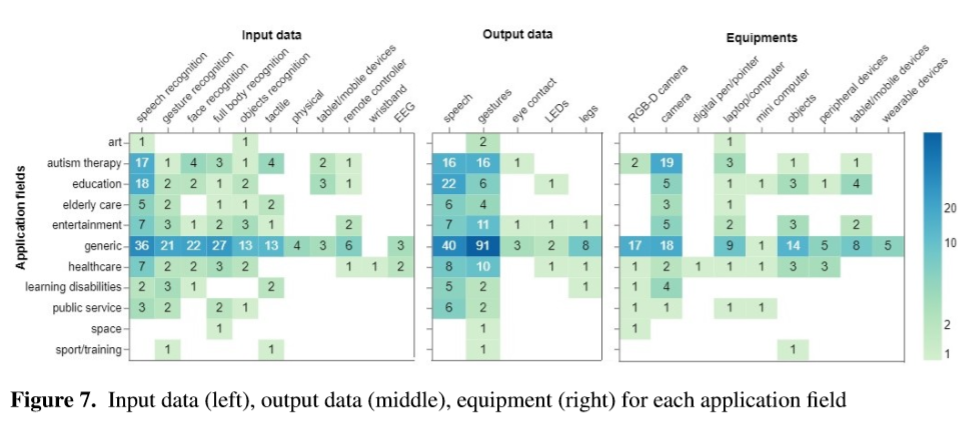

Input data (left), output data (middle), equipment (right) for each application field

As mentioned above, NAO is equipped with various sensors and actuators allowing for multi-modal interaction. Researchers have often used the robot to speak and/or make gestures. On the perception side, the robot’s limited speech recognition and poor microphone quality discouraged researchers from using NLP and instead led them to rely on facial and gesture recognition. We also noted in our analysis that researchers often used external devices such as RGBD cameras or tablets to capture other signals for assessing the situation.

Qualitative results

Multiple studies reported how fun and enjoyable the robot’s appearance was. NAO is certainly not just an eye-catching robot, as its portability is highly appreciated by researchers. NAO can be regarded as a plug-and-play robot thanks to its robust and easy setup. It has also been found affordable compared to other humanoid robots.

On the other hand, several technical issues were reported, such as low battery life and overheating. The speech recognition limits the interaction distance and context (for example, in noisy environments). Finally, not much embedded autonomous functionality could be used in the initial versions.

Nao’s communities

Over the years, several types of communities have formed around the NAO robotic platform.

1) Educational communities

In 2010, the University of Tokyo piloted a new educational program developed by Aldebaran Robotics that aimed to train students to use NAO. Along with discounts on NAO for educational purposes, the company released some ebooks. The books and educational program cover both the technical aspects of using the robot and more creative projects that can be given to students as exercises. The educational market certainly helped make NAO widely used in the 2010s.

2) Engineering communities

In 2007, RoboCup decided to use the NAO robot as the official platform for its soccer league. As detailed in the review, this led to fruitful advances in the robot’s technical capabilities, such as its locomotion and vision system.

More recently, the introduction of the Pepper robot as a new platform for the RoboCup@Home challenge suggests that it will extend the robot’s commercial life and produce more advances in social and at-home tasks with the robot.

3) Research communities

Aldebaran had a strong Research & Development strategy in its early days and naturally became a partner in several large European projects: ALIZ-E, DREAM, SQUIRREL, L2Tor. This strategy had the advantage of training future researchers in the use of the platform and made NAO a dominant robot in human-robot interaction research between 2015 and 2019.

4) Industrial translation

Image from RobotLab website visited on December 20th 2021

Recently, a network of start-ups has formed around the NAO robot. These start-ups provide various services such as educational workshops, therapeutic sessions, or business and marketing services, and their number has risen over the past few years (e.g. Interactive Robotics, RobotLAB and Avatarion).

Conclusion

In our review, we present a comprehensive overview of the use of NAO, which is a remarkable social robot in many respects. So far, NAO has had a challenging yet rewarding journey. Its social roles have expanded thanks to its likeable appearance and multi-modal capabilities, and to its fitness for delivering socially important tasks. Still, there are gaps to be filled to achieve sustainable and user-focused human-NAO interaction. We hope that our review can contribute to the field of HRI, which needs more reflection and general evidence on the use of social robots such as NAO in a wide variety of contexts. The findings point to a greater need to increase the value and practical application of NAO in user-centered studies. Future studies should consider the importance of real-world and unrestricted experiments with NAO and involve other humans who might facilitate human-robot interaction.

STIHL Acquires 23 Percent of Successful Danish Robot Company TinyMobileRobots

Bristol scientists develop insect-sized flying robots with flapping wings

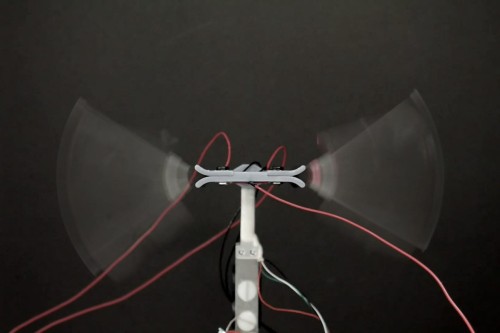

Front view of the flying robot. Image credit: Dr Tim Helps

This new advance, published in the journal Science Robotics, could pave the way for smaller, lighter and more effective micro flying robots for environmental monitoring, search and rescue, and deployment in hazardous environments.

Until now, typical micro flying robots have used motors, gears and other complex transmission systems to achieve the up-and-down motion of the wings. This has added complexity, weight and undesired dynamic effects.

Taking inspiration from bees and other flying insects, researchers from Bristol’s Faculty of Engineering, led by Professor of Robotics Jonathan Rossiter, have successfully demonstrated a direct-drive artificial muscle system, called the Liquid-amplified Zipping Actuator (LAZA), that achieves wing motion using no rotating parts or gears.

The LAZA system greatly simplifies the flapping mechanism, enabling future miniaturization of flapping robots down to the size of insects.

In the paper, the team show how a pair of LAZA-powered flapping wings can provide more power than insect muscle of the same weight, enough to fly a robot across a room at 18 body lengths per second.

They also demonstrated how the LAZA can deliver consistent flapping over more than one million cycles, important for making flapping robots that can undertake long-haul flights.

The team expect the LAZA to be adopted as a fundamental building block for a range of autonomous insect-like flying robots.

Dr Tim Helps, lead author and developer of the LAZA system, said: “With the LAZA, we apply electrostatic forces directly on the wing, rather than through a complex, inefficient transmission system. This leads to better performance, simpler design, and will unlock a new class of low-cost, lightweight flapping micro-air vehicles for future applications, like autonomous inspection of off-shore wind turbines.”

Professor Rossiter added: “Making smaller and better performing flapping wing micro robots is a huge challenge. LAZA is an important step toward autonomous flying robots that could be as small as insects and perform environmentally critical tasks such as plant pollination and exciting emerging roles such as finding people in collapsed buildings.”