A raptor-inspired drone with morphing wing and tail

By Nicola Nosengo

NCCR Robotics researchers at EPFL have developed a drone with a feathered wing and tail that give it unprecedented flight agility.

The northern goshawk is a fast, powerful raptor that flies effortlessly through forests. This bird was the design inspiration for the next-generation drone developed by scientists at EPFL’s Laboratory of Intelligent Systems, led by Dario Floreano. They carefully studied the shape of the bird’s wings and tail and its flight behavior, and used that information to develop a drone with similar characteristics.

“Goshawks move their wings and tails in tandem to carry out the desired motion, whether it is rapid changes of direction when hunting in forests, fast flight when chasing prey in the open terrain, or when efficiently gliding to save energy,” says Enrico Ajanic, the study’s first author and a PhD student in Floreano’s lab. Floreano adds: “Our design extracts principles of avian agile flight to create a drone that can approximate the flight performance of raptors, but also tests the biological hypothesis that a morphing tail plays an important role in achieving faster turns, decelerations, and even slow flight.”

A drone that moves its wings and tail

The engineers had already designed a bird-inspired drone with a morphing wing back in 2016. In a step forward, their new model can adjust the shape of both its wing and its tail thanks to its artificial feathers. “It was fairly complicated to design and build these mechanisms, but we were able to improve the wing so that it behaves more like that of a goshawk,” says Ajanic. “Now that the drone includes a feathered tail that morphs in synergy with the wing, it delivers unparalleled agility.” The drone changes the shape of its wing and tail to change direction faster, fly slower without falling to the ground, and reduce air resistance when flying fast. It uses a propeller for forward thrust instead of flapping wings, because a propeller is more efficient and makes the new wing and tail system applicable to other winged drones and airplanes.

The advantage of winged drones over quadrotor designs is that they have a longer flight time for the same weight. However, quadrotors tend to have greater dexterity, as they can hover in place and make sharp turns. “The drone we just developed is somewhere in the middle. It can fly for a long time yet is almost as agile as quadrotors,” says Floreano. This combination of features is especially useful for flying through forests or between buildings in cities, as may be necessary during rescue operations. The project is part of the Rescue Robotics Grand Challenge of NCCR Robotics.

Opportunities for artificial intelligence

Flying this new type of drone isn’t easy, because of the large number of possible wing and tail configurations. To take full advantage of the drone’s flight capabilities, Floreano’s team plans to incorporate artificial intelligence into the drone’s flight system so that it can fly semi-automatically. The team’s research has been published in Science Robotics.

RoboTED: a case study in Ethical Risk Assessment

A few weeks ago I presented a short paper at the excellent International Conference on Robot Ethics and Standards (ICRES 2020), outlining a case study in Ethical Risk Assessment – see our paper here. Our chosen case study is a robot teddy bear, inspired by one of my favourite movie robots: Teddy, in A.I. Artificial Intelligence.

Although Ethical Risk Assessment (ERA) is not new – it is, after all, what research ethics committees do – the idea of extending traditional risk assessment, as practised by safety engineers, to cover ethical risks is new. ERA is, I believe, one of the most powerful tools available to the responsible roboticist, and happily we already have a published standard that sets out guidelines on ERA for robotics: BS 8611, published in 2016.

Before looking at the ERA, we need to summarise the specification of our fictional robot teddy bear: RoboTed. First, RoboTed is based on the following technology:

- RoboTed is an Internet (WiFi) connected device,

- RoboTed has cloud-based speech recognition and conversational AI (chatbot) and local speech synthesis,

- RoboTed’s eyes are functional cameras allowing RoboTed to recognise faces,

- RoboTed has motorised arms and legs to provide it with limited baby-like movement and locomotion.

Second, RoboTed is designed to:

- Recognise its owner, learning their face and name and turning its face toward the child.

- Respond to physical play such as hugs and tickles.

- Tell stories, while allowing a child to interrupt the story to ask questions or ask for sections to be repeated.

- Sing songs, while encouraging the child to sing along and learn the song.

- Act as a child minder, allowing parents to remotely listen to, watch and speak to their child via RoboTed.

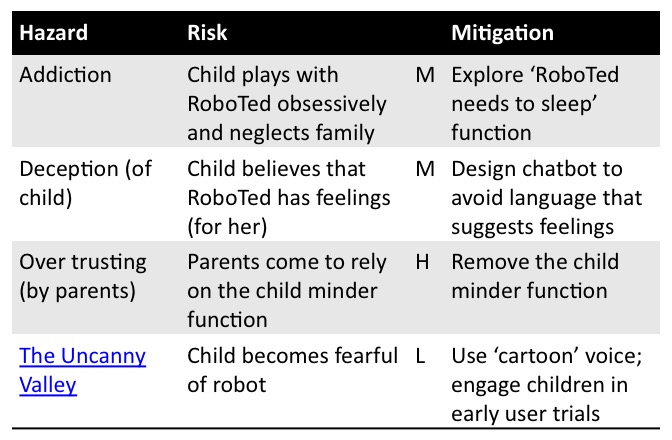

The tables below summarise the ERA of RoboTed for (1) psychological, (2) privacy & transparency and (3) environmental risks. Each table has four columns: the hazard, the risk, the level of risk (high, medium or low) and actions to mitigate the risk. BS 8611 defines an ethical risk as the “probability of ethical harm occurring from the frequency and severity of exposure to a hazard”; an ethical hazard as “a potential source of ethical harm”; and an ethical harm as “anything likely to compromise psychological and/or societal and environmental well-being”.
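To make the four-column structure concrete, here is a minimal Python sketch of one ERA table row. It assumes a hypothetical 3-by-3 matrix that combines likelihood and severity scores into a high, medium or low level. BS 8611 relates ethical risk to the frequency and severity of exposure to a hazard, but the numeric scores and thresholds below are an illustrative assumption, not taken from the standard or from the paper. The names `EraRow`, `RiskLevel` and `classify`, and the example row itself, are likewise invented for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass
class EraRow:
    """One row of an ERA table: hazard, risk, level of risk, mitigating actions."""
    hazard: str
    risk: str
    level: RiskLevel
    mitigation: str


def classify(likelihood: int, severity: int) -> RiskLevel:
    """Map likelihood and severity scores (each 1 = low .. 3 = high) to a risk level.

    ASSUMPTION: this 3-by-3 matrix and its thresholds are illustrative only;
    they are not defined by BS 8611 or taken from the RoboTed paper.
    """
    if not (1 <= likelihood <= 3 and 1 <= severity <= 3):
        raise ValueError("likelihood and severity must each be 1, 2 or 3")
    score = likelihood * severity  # possible products: 1, 2, 3, 4, 6, 9
    if score >= 6:
        return RiskLevel.HIGH
    if score >= 3:
        return RiskLevel.MEDIUM
    return RiskLevel.LOW


# Hypothetical example row, invented for illustration (not from the paper's tables).
example = EraRow(
    hazard="Child's speech is sent to a cloud-based chatbot",
    risk="Private conversations could be stored or misused",
    level=classify(likelihood=2, severity=3),
    mitigation="Process speech locally where possible and tell parents what is stored",
)

if __name__ == "__main__":
    print(example.level.value)  # prints "high" (score 2 * 3 = 6)
```

An explicit matrix makes the assignment of levels repeatable, although the likelihood and severity scores themselves remain judgements made by the assessment team.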

(1) Psychological Risks

(2) Security and Transparency Risks

(3) Environmental Risks

For a more detailed commentary on each of these tables see our full paper – which also, for completeness, covers physical (safety) risks.

Through this fictional case study, we argue that we have demonstrated the value of ethical risk assessment. Our RoboTed ERA has shown that attention to ethical risks can

- suggest new functions, such as “RoboTed needs to sleep now”,

- draw attention to how designs can be modified to mitigate some risks,

- highlight the need for user engagement, and

- reject some product functionality as too risky.

But ERA is not guaranteed to expose all ethical risks. It is a subjective process, which will only be successful if the risk assessment team are prepared to think both critically and creatively about the question: what could go wrong? As Shannon Vallor and her colleagues write in their excellent Ethics in Tech Practice toolkit, design teams must develop the “habit of exercising the skill of moral imagination to see how an ethical failure of the project might easily happen, and to understand the preventable causes so that they can be mitigated or avoided”.