The traditional Japanese art of origami transforms a simple sheet of paper into complex, three-dimensional shapes through a very specific pattern of folds, creases, and crimps. Folding robots based on that principle have emerged as an exciting new frontier of robotic design, but generally require onboard batteries or a wired connection to a power source, making them bulkier and clunkier than their paper inspiration and limiting their functionality.

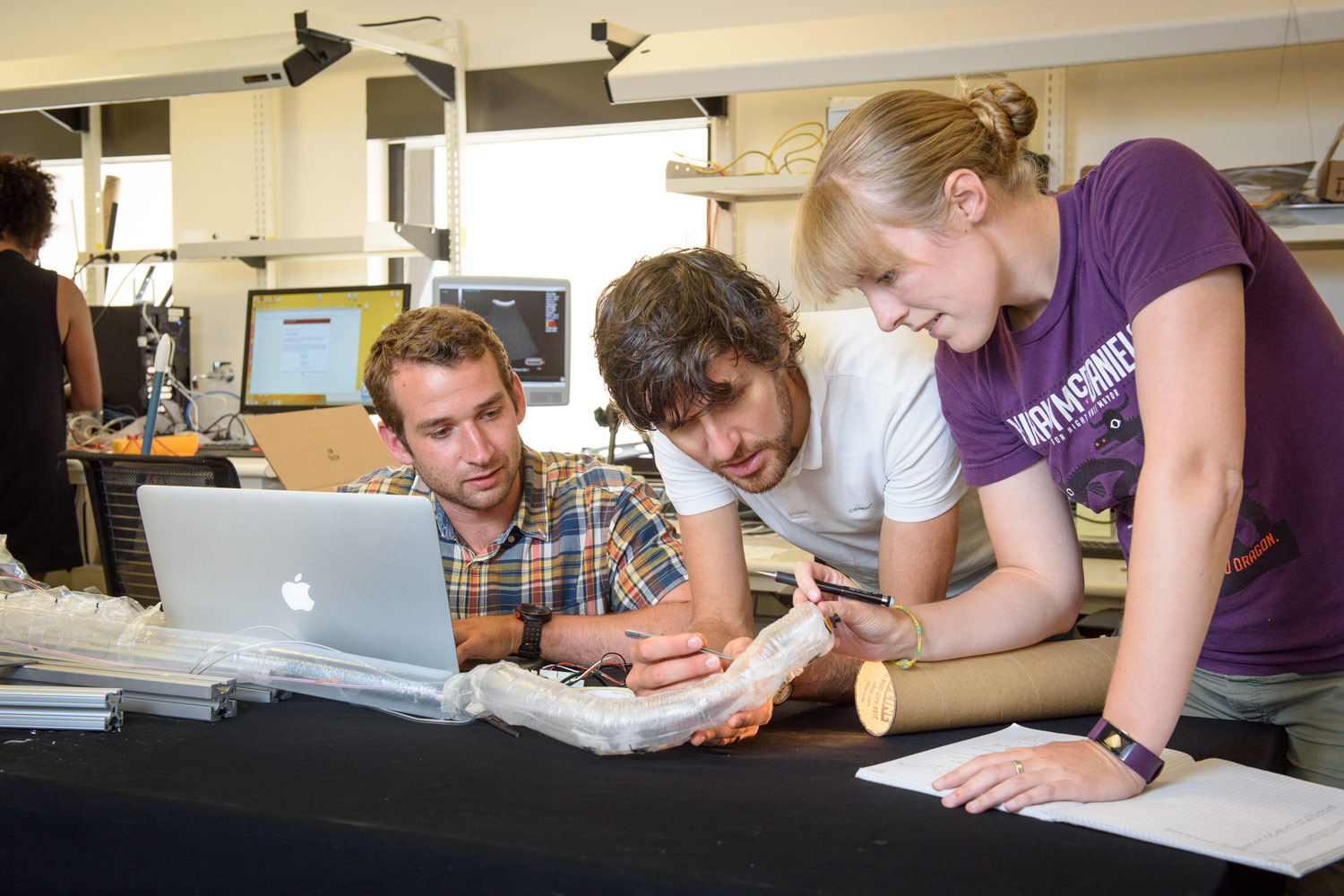

A team of researchers at the Wyss Institute for Biologically Inspired Engineering and the John A. Paulson School of Engineering and Applied Sciences (SEAS) at Harvard University has created battery-free folding robots that are capable of complex, repeatable movements powered and controlled through a wireless magnetic field.

“Like origami, one of the main points of our design is simplicity,” says co-author Je-sung Koh, Ph.D., who conducted the research as a Postdoctoral Fellow at the Wyss Institute and SEAS and is now an Assistant Professor at Ajou University in South Korea. “This system requires only basic, passive electronic components on the robot to deliver an electric current—the structure of the robot itself takes care of the rest.”

The research team’s robots are flat, thin plastic tetrahedrons that resemble the paper on which they are based. Three outer triangles are connected to a central triangle by hinges, and a small circuit sits on the central triangle. Attached to the hinges are coils made of shape-memory alloy (SMA), a type of metal that recovers its original shape after deformation when heated to a certain temperature. When the robot’s hinges lie flat, the SMA coils are stretched out in their “deformed” state; when an electric current passes through the circuit and the coils heat up, they spring back to their original, relaxed state, contracting like tiny muscles and folding the robot’s outer triangles in toward the center. When the current stops, the stiffness of the flexure hinges stretches the SMA coils back out, lowering the outer triangles back down.

The power that drives the robots’ movement is delivered wirelessly using electromagnetic power transmission, the same technology used in the wireless charging pads that recharge the batteries of cell phones and other small electronics. An external coil with its own power source generates a magnetic field, which induces a current in the robot’s circuits, heating the SMA coils and causing them to fold the hinges. To control which coils contract, the team built a resonator into each coil unit and tuned it to respond only to a very specific electromagnetic frequency. By changing the frequency of the external magnetic field, they were able to make each SMA coil contract independently of the others.
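The idea of addressing each coil by frequency can be illustrated with a textbook series RLC model. The following is only a minimal sketch under stated assumptions, not the team's circuit: the names `hinge_a` and `hinge_b`, all component values, the fixed induced voltage, and the treatment of each SMA coil as a plain resistive load are hypothetical choices made for illustration.

```python
import math


def resonant_frequency(inductance_h, capacitance_f):
    """Resonant frequency of an ideal LC pair: f0 = 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


def dissipated_power(drive_hz, emf_v, resistance_ohm, inductance_h, capacitance_f):
    """Average power dissipated in the resistance of a series RLC circuit.

    emf_v is the amplitude of the sinusoidal voltage induced by the
    external field (assumed equal for every resonator in this sketch).
    """
    omega = 2.0 * math.pi * drive_hz
    reactance = omega * inductance_h - 1.0 / (omega * capacitance_f)
    current_amp = emf_v / math.hypot(resistance_ohm, reactance)
    return 0.5 * current_amp ** 2 * resistance_ohm


# Hypothetical component values, chosen only so the two resonators
# have clearly separated resonant frequencies.
RESONATORS = {
    "hinge_a": {"inductance_h": 10e-6, "capacitance_f": 100e-9},
    "hinge_b": {"inductance_h": 10e-6, "capacitance_f": 47e-9},
}
RESISTANCE_OHM = 1.0  # assumed resistance of each SMA coil
EMF_V = 1.0           # assumed induced voltage amplitude


def heating_by_hinge(drive_hz):
    """Return the power delivered to each hinge at a given drive frequency."""
    return {
        name: dissipated_power(drive_hz, EMF_V, RESISTANCE_OHM, **params)
        for name, params in RESONATORS.items()
    }


if __name__ == "__main__":
    for target, params in RESONATORS.items():
        drive_hz = resonant_frequency(**params)
        powers = heating_by_hinge(drive_hz)
        print(f"drive at {drive_hz / 1e3:.1f} kHz (tuned for {target}):")
        for name, watts in powers.items():
            print(f"  {name}: {watts * 1e3:.1f} mW")
```

In this model, driving the field at one resonator's frequency delivers far more heating power to that coil than to the detuned one, which is the selectivity the paragraph above describes. A real design would also have to account for coil coupling, the quality factor of each resonator, and the thermal behavior of the SMA.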

“Not only are our robots’ folding motions repeatable, we can control when and where they happen, which enables more complex movements,” explains lead author Mustafa Boyvat, Ph.D., also a Postdoctoral Fellow at the Wyss Institute and SEAS.

Just like the muscles in the human body, the SMA coils can only contract and relax: it’s the structure of the body of the robot — the origami “joints” — that translates those contractions into specific movements. To demonstrate this capability, the team built a small robotic arm capable of bending to the left and right, as well as opening and closing a gripper around an object. The arm is constructed with a special origami-like pattern to permit it to bend when force is applied, and two SMA coils deliver that force when activated while a third coil pulls the gripper open. By changing the frequency of the magnetic field generated by the external coil, the team was able to control the robot’s bending and gripping motions independently.

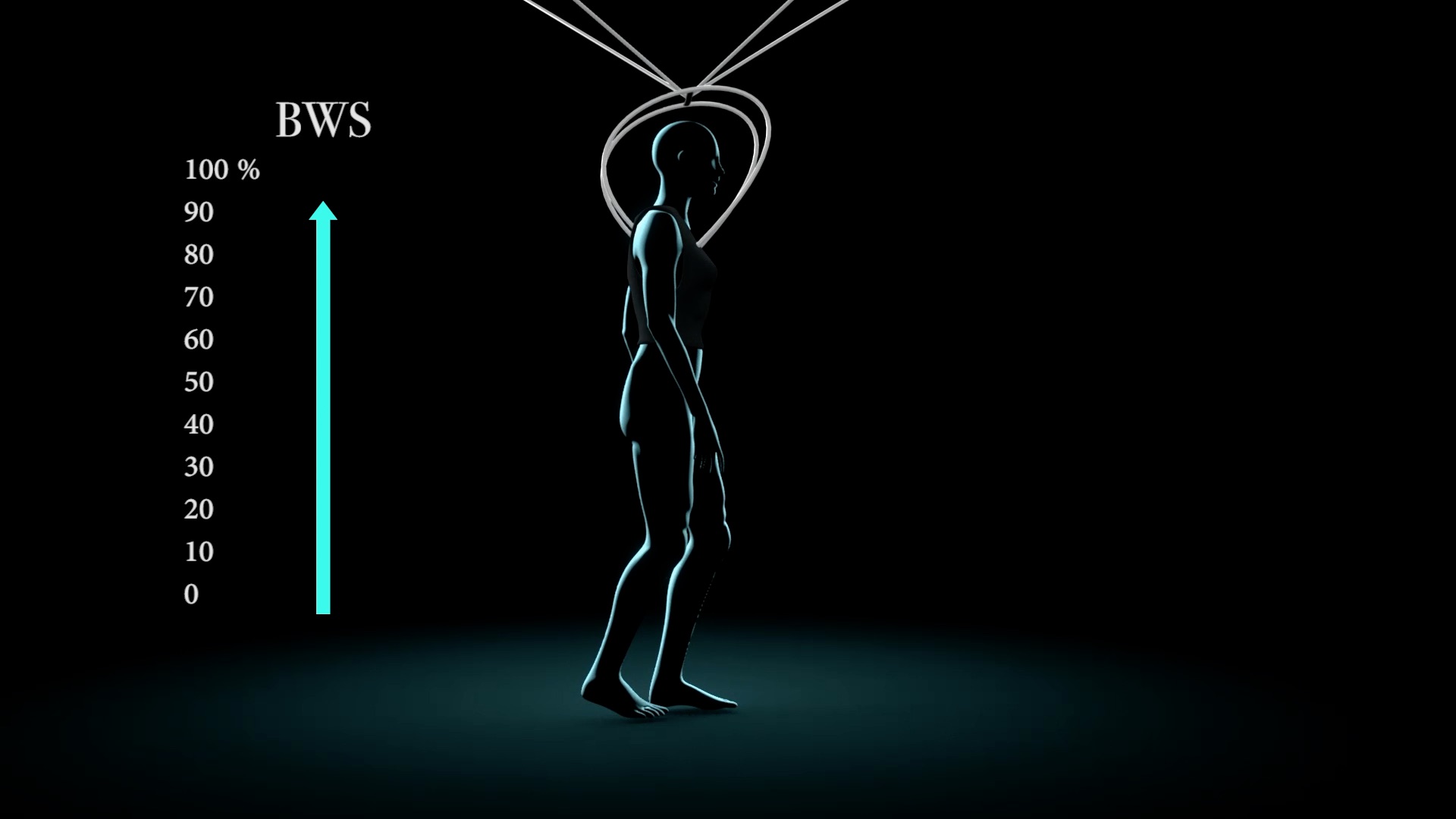

This kind of minimalist robotic technology has many potential applications. For example, rather than having an uncomfortable endoscope put down their throat to assist a doctor with surgery, a patient could simply swallow a micro-robot that moves around and performs simple tasks, like holding tissue or filming, powered by a coil outside the body. Using a much larger source coil, on the order of yards in diameter, could enable wireless, battery-free communication between multiple “smart” objects throughout a home. The team built a variety of robots, from a quarter-sized flat tetrahedral robot to a hand-sized ship robot made of folded paper, to show that their technology can accommodate a variety of circuit designs and scale successfully for devices large and small. “There is still room for miniaturization. We don’t think we went to the limit of how small these can be, and we’re excited to further develop our designs for biomedical applications,” Boyvat says.

“When people make micro-robots, the question is always asked, ‘How can you put a battery on a robot that small?’ This technology gives a great answer to that question by turning it on its head: you don’t need to put a battery on it, you can power it in a different way,” says corresponding author Rob Wood, Ph.D., a Core Faculty member at the Wyss Institute who co-leads its Bioinspired Robotics Platform and is the Charles River Professor of Engineering and Applied Sciences at SEAS.

“Medical devices today are commonly limited by the size of the batteries that power them, whereas these remotely powered origami robots can break through that size barrier and potentially offer entirely new, minimally invasive approaches for medicine and surgery in the future,” says Wyss Founding Director Donald Ingber, who is also the Judah Folkman Professor of Vascular Biology at Harvard Medical School and the Vascular Biology Program at Boston Children’s Hospital, as well as a Professor of Bioengineering at Harvard’s School of Engineering and Applied Sciences.

The research paper was published in Science Robotics.

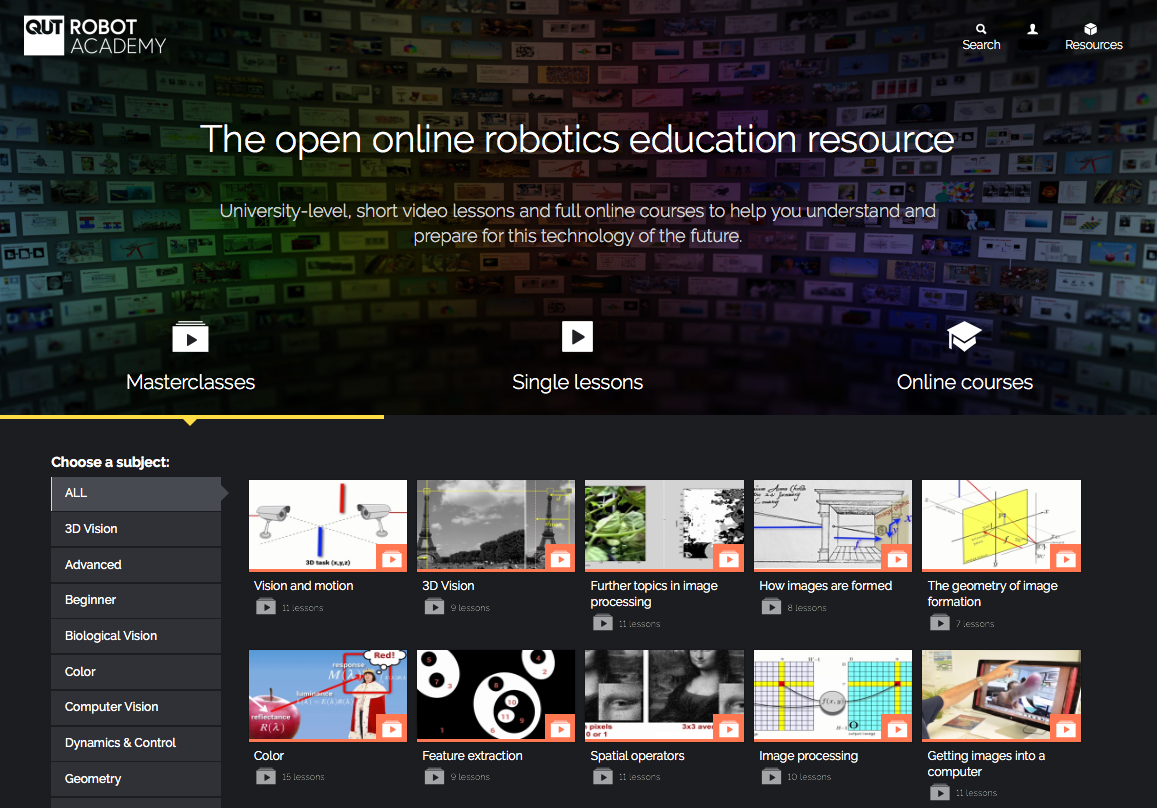

Peter Corke is Professor of Robotics and Control at the Queensland University of Technology leading the ARC Centre of Excellence for Robotic Vision in Australia. Previously he was a Senior Principal Research Scientist at the CSIRO ICT Centre where he founded and led the Autonomous Systems laboratory, the Sensors and Sensor Networks research theme and the Sensors and Sensor Networks Transformational Capability Platform. He is a Fellow of the IEEE. He was the Editor-in-Chief of the IEEE Robotics and Automation magazine; founding editor of the Journal of Field Robotics; member of the editorial board of the International Journal of Robotics Research, and the Springer STAR series. He has over 300 publications in the field and has held visiting positions at the University of Pennsylvania, University of Illinois at Urbana-Champaign, Carnegie-Mellon University Robotics Institute, and Oxford University.
