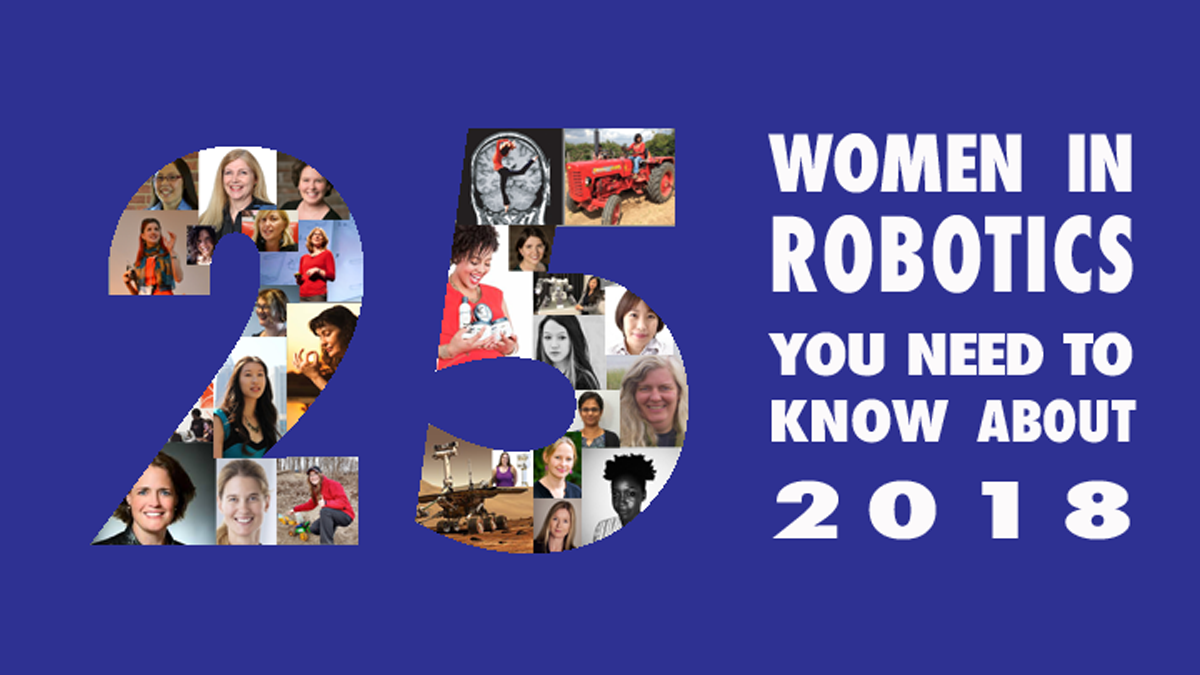

From driving rovers on Mars to improving farm automation for Indian women, once again we’re bringing you a list of 25 amazing women in robotics! These women cover all aspects of the robotics industry: research, product and policy. They are founders and leaders, they are investigators and activists. They are early career stage and emeritus. There is a role model here for everyone! And there is no excuse – ever – not to have a woman speaking on a panel on robotics and AI.

But to start, here’s some news about previous nominees (and this is just a sample because we’ve showcased over 125 women so far and this is our 6th year).

In 2013, Melonee Wise was just launching her first startup! Since then she’s raised $48 million USD for Fetch Robotics and Fetch and Freight robots are rolling out in warehouses all over the world! Maja Mataric’s startup Embodied Inc has raised $34.4 million for home companion robots. Amy Villeneuve has moved from President and COO of Kiva Systems, VP at Amazon Robotics to the Board of Directors of 4 new robotics startups. And Manuela Veloso joined the Corporate & Investment Bank J.P. Morgan as head of Artificial Intelligence (AI) Research.

In 2014, Sampriti Bhattacharya was a PhD student at MIT. Since then she has turned her research into a startup, Hydroswarm, and been named one of Forbes 30 most powerful young change agents. Noriko Kageki moved from Kawada Robotics Corp in Japan to join the very female friendly Omron Adept Technologies in Silicon Valley.

2015’s Cecilia Laschi and Barbara Mazzolai are driving the Preparatory action for a European Robotics Flagship, which has the potential to become a 1B EUR project. The goal is to make new robots and AIs that are ethically, socially, economically, energetically, and environmentally responsible and sustainable. And PhD candidate Kavita Krishnaswamy, who depends on robots to travel, has received Microsoft, Google, Ford and NSF fellowships to help her design robots for people with disabilities.

2015’s Hanna Kurniawati was a Keynote Speaker at IROS 2018 (Oct 1-5) in Madrid, Spain, as were nominees Raquel Urtasun, Jamie Paik, Barbara Mazzolai, Anca Dragan, Amy Loutfi (and many more women!). 2017’s Raia Hadsell was the Plenary Speaker at ICRA 2018 (May 21-25) in Brisbane, Australia. And while it’s great to see so many women showcased this year at robotics conferences – don’t forget 2015, when the entire ICRA organizing committee was made up of women.

2016’s Vivian Chu finished her Social Robotics PhD and founded the robotics startup Diligent Robotics with her supervisor Dr Andrea Thomaz (featured in 2013). Their hospital robot Moxi was just featured on the BBC. And 2016’s Gudrun Litzenberger was just awarded the Engelberger Award by the RIA, joining 2013’s Daniela Rus. (The RIA is finally recognizing the role of women after we/Andra pointed out that they’d given out 120 awards and only 1 was to a woman – Bala Krishnamurthy in 2007 – and are now also offering grants for eldercare robots and women in robotics.)

We try to cover the whole globe, not just the whole career journey for women in robotics – so we welcome a nominee from Ashesi University in Ghana to this year’s list! So, without further ado, here are the 25 Women In Robotics you need to know about – 2018 edition!

|

Crystal Chao

Chief Scientist of AI/Robotics – Huawei

Crystal Chao is Chief Scientist at Huawei and the Global Lead of Robotics Projects, overseeing a team that operates in Silicon Valley, Boston, Shenzhen, Beijing, and Tokyo. She has worked with every part of the robotics software stack in her previous experience, including a stint at X, Google’s moonshot factory. In 2012, Chao won the Outstanding Doctoral Consortium Paper Award at ICMI for her PhD work at Georgia Tech, where she developed an architecture for social human-robot interaction (HRI) called CADENCE: Control Architecture for the Dynamics of Natural Embodied Coordination and Engagement, enabling a robot to collaborate fluently with humans using dialogue and manipulation. |

|

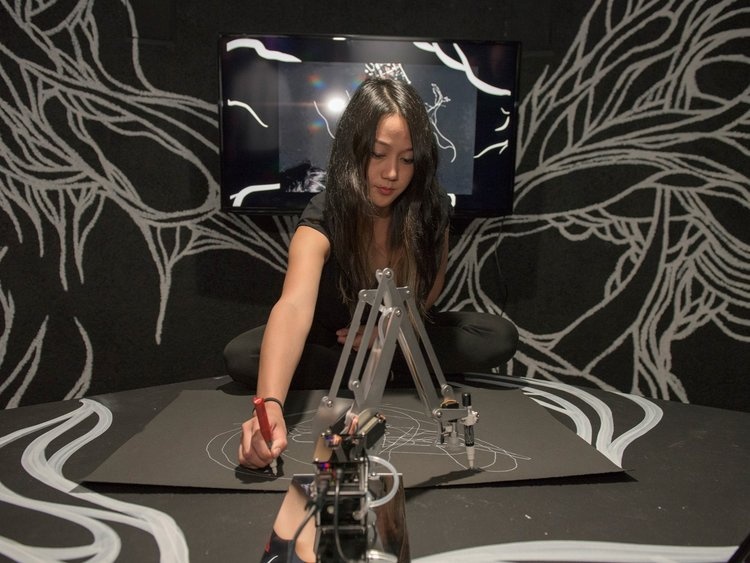

Sougwen Chung

Interdisciplinary Artist

Sougwen Chung is a Chinese-born, Canadian-raised artist based in New York. Her work explores the mark-made-by-hand and the mark-made-by-machine as an approach to understanding the interaction between humans and computers. Her speculative critical practice spans installation, sculpture, still image, drawing, and performance. She is a former research fellow at MIT’s Media Lab and an inaugural member of NEW INC, the first museum-led art and technology incubator, in collaboration with The New Museum. She received a BFA from Indiana University and a master’s diploma in interactive art from Hyper Island in Sweden. |

|

Emily Cross

Professor of Social Robotics / Director of SoBA Lab

Emily Cross is a cognitive neuroscientist and dancer. As the Director of the Social Brain in Action Laboratory (www.soba-lab.com), she explores how our brains and behaviors are shaped by different kinds of experience throughout our lifespans and across cultures. She is currently the Principal Investigator on the European Research Council Starting Grant entitled ‘Social Robots’, which runs from 2016-2021. |

|

Rita Cucchiara

Full Professor / Head of AImage Lab

Rita Cucchiara is Full Professor of Computer Vision at the Department of Engineering “Enzo Ferrari” of the University of Modena and Reggio Emilia, where since 1998 she has led the AImageLab, a lab devoted to computer vision and pattern recognition, AI and multimedia. She coordinates the RedVision Lab UNIMORE-Ferrari for human-vehicle interaction. She was President of the Italian Association in Computer Vision, Pattern Recognition and Machine Learning (CVPL) from 2016 to 2018, and is currently Director of the Italian CINI Lab in Artificial Intelligence and Intelligent Systems. In 2018 she was the recipient of the Maria Petrou Prize of the IAPR. |

|

Sanja Fidler

Assistant Professor / Director of AI at NVIDIA

Sanja Fidler is Director of AI at NVIDIA’s new Toronto Lab, conducting cutting-edge research projects in machine learning, computer vision, graphics, and the intersection of language and vision. She remains Assistant Professor at the Department of Computer Science, University of Toronto. She is recipient of the Amazon Academic Research Award (2017) and the NVIDIA Pioneer of AI Award (2016). She completed her PhD in computer science at University of Ljubljana in 2010, and has served as a Program Chair of the 3DV conference, and as an Area Chair of CVPR, EMNLP, ICCV, ICLR, and NIPS. |

|

Kanako Harada

ImPACT Program Manager

Kanako Harada is Program Manager of the ImPACT program “Bionic Humanoids Propelling New Industrial Revolution” of the Cabinet Office, Japan. She is also Associate Professor in the departments of Bioengineering and Mechanical Engineering, School of Engineering, the University of Tokyo, Japan. She obtained her M.Sc. in Engineering from the University of Tokyo in 2001, and her Ph.D. in Engineering from Waseda University in 2007. She worked for Hitachi Ltd., Japan Association for the Advancement of Medical Equipment, and Scuola Superiore Sant’Anna, Italy, before joining the University of Tokyo. Her research interests include surgical robots and surgical skill assessment. |

|

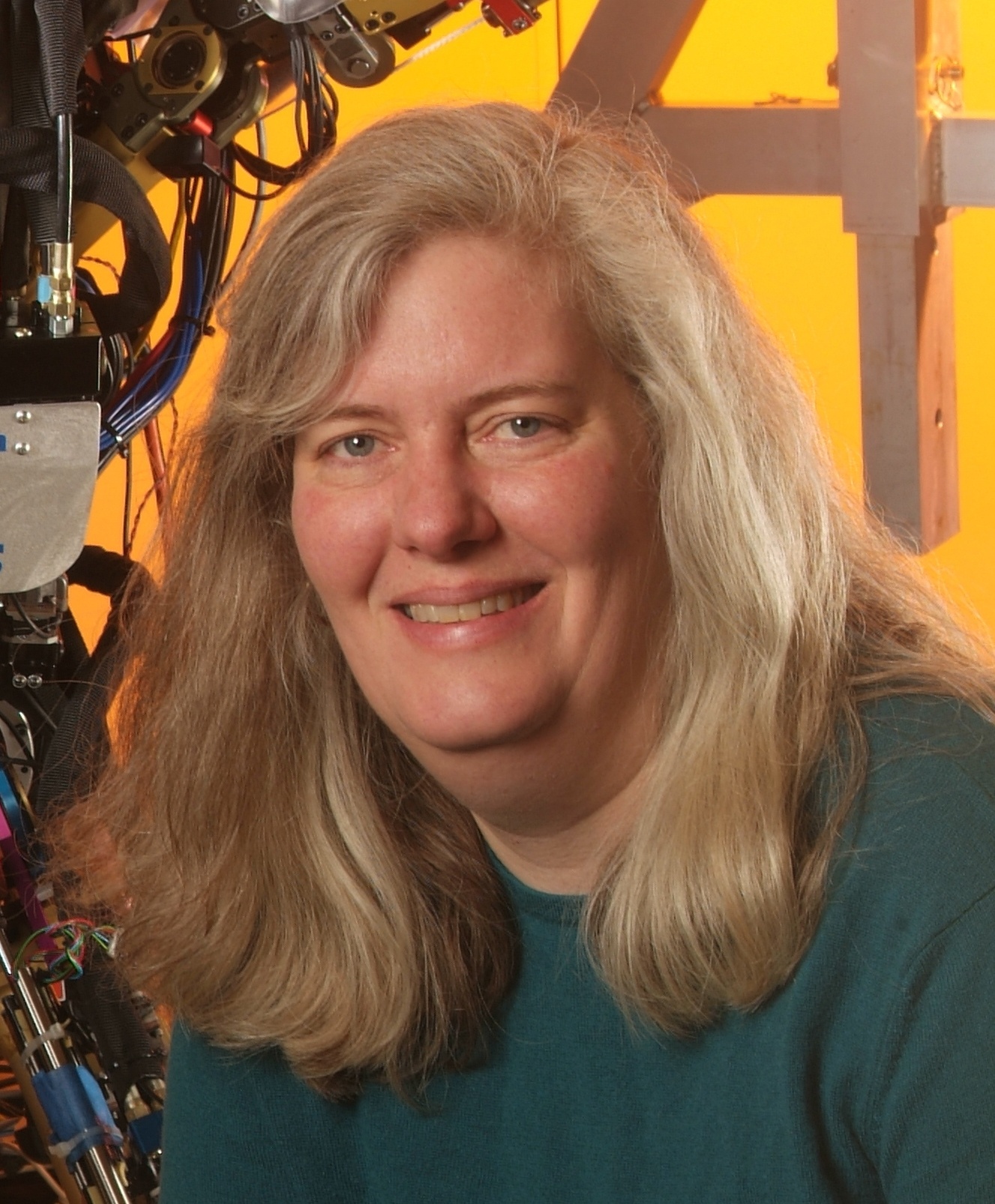

Jessica Hodgins

Professor / FAIR Research Mgr and Operations Lead

Jessica Hodgins is a Professor in the Robotics Institute and Computer Science Department at Carnegie Mellon University, and the new lead of Facebook’s AI Research Lab in Pittsburgh. The FAIR lab will focus on robotics, lifelong-learning systems that learn continuously, teaching machines to reason and AI in support of creativity. From 2008-2016, Hodgins founded and ran research labs for Disney, rising to VP of Research and leading the labs in Pittsburgh and Los Angeles. She received her Ph.D. in Computer Science from Carnegie Mellon University in 1989. She has received an NSF Young Investigator Award, a Packard Fellowship, a Sloan Fellowship, the ACM SIGGRAPH Computer Graphics Achievement Award, and in 2017 she was awarded the Steven Anson Coons Award for Outstanding Creative Contributions to Computer Graphics. Her groundbreaking research focuses on computer graphics, animation, and robotics, with an emphasis on generating and analyzing human motion. |

|

Heather Justice

Mars Exploration Rover Driver

Heather Justice has the dream job title of Mars Exploration Rover Driver, and is a Software Engineer at NASA JPL. As a 16-year-old watching the first Rover landing on Mars, she said: “I saw just how far robotics could take us and I was inspired to pursue my interests in computer science and engineering.” Justice graduated from Harvey Mudd College with a B.S. in computer science in 2009 and an M.S. from the Robotics Institute at Carnegie Mellon University in 2011. She also interned at three different NASA centers and worked in a variety of research areas including computer vision, mobile robot path planning, and spacecraft flight rule validation. |

|

Sue Keay

COO

Sue Keay is the Chief Operating Officer of the ACRV and in 2018 launched Australia’s first National Robotics Roadmap at Parliament House. A university medallist and Jaeger scholar, Sue has more than 20 years experience in the research sector, managing and ensuring impact from multidisciplinary R&D programs and teams. She has a PhD in Earth Sciences from the Australian National University and was an ARC post-doctoral fellow at the University of Queensland, before turning to science communication, research management, research commercialisation, and IP management. Keay is a graduate of the Australian Institute of Company Directors, and Chairs the IP and Commercialisation Committee for the Board of the CRC for Optimising Resource Extraction. In 2017, Keay was also named one of the first Superstars of STEM by Science & Technology Australia. |

|

Erin Kennedy

Founder

Erin Kennedy is a robot maker and the founder of Robot Missions, an organization that empowers communities to embark on missions aimed at helping our planet using robots. She designed and developed a robot to collect shoreline debris, replicable anywhere with a 3D printer. Kennedy studied digital fabrication at the Fab Academy, and worked with a global team at MIT on a forty-eight-hour challenge during Fab11 to build a fully functional submarine. A former fellow in social innovation and systems thinking at the MaRS Discovery District’s Studio Y, Kennedy has been recognized as a finalist in the Lieutenant Governor’s Visionaries Prize (Ontario), and her previous robotic work has been featured in Forbes, Wired, and IEEE Spectrum, and on the Discovery Channel. |

|

Katherine Kuchenbecker

Director at Max Planck Institute for Intelligent Systems / Associate Professor

Katherine J. Kuchenbecker is Director and Scientific Member at the Max Planck Institute for Intelligent Systems in Stuttgart, on leave from the Department of Computer and Information Science at UPenn. Kuchenbecker received her PhD degree in Mechanical Engineering from Stanford University in 2006. She received the IEEE Robotics and Automation Society Academic Early Career Award, NSF CAREER Award, and Best Haptic Technology Paper at the IEEE World Haptics Conference. Her keynote at RSS 2018 is online. Kuchenbecker’s research expertise is in the design and control of robotic systems that enable a user to touch virtual objects and distant environments as though they were real and within reach, uncovering new opportunities for the use of haptics in interactions between humans, computers, and machines. |

|

Jasmine Lawrence

Technical Program Manager – Facebook

Jasmine Lawrence currently serves as a Technical Program Manager on the Building 8 team at Facebook, a research lab to develop hardware projects in the style of DARPA. Previously, she served as a Technical Program Manager at SoftBank Robotics where she led a multidisciplinary team to create software for social, humanoid robots. Before that she was a Program Manager at Microsoft on the HoloLens Experience team and the Xbox Engineering team. Lawrence earned her B.S. in Computer Science from the Georgia Institute of Technology, and her M.S. in Human Centered Design & Engineering from the University of Washington. At the age of 13, after attending a NFTE BizCamp, Jasmine founded EDEN BodyWorks to meet her own need for affordable natural hair and body care products. After almost 14 years in business her products are available at Target, Wal-Mart, CVS, Walgreens, Amazon.com, Kroger, HEB, and Sally Beauty Supply stores, just to name a few. |

|

Jade Le Maître

CTO & CoFounder – Hease Robotics

Jade Le Maître spearheads the technical side of Hease Robotics, maker of a robot for the retail industry and customer service. With a background in engineering and a research project on human-robot interaction behind her, Le Maître found her passion working in science communication. Since then she has cofounded Hease Robotics to bring the robotics experience to the consumer. |

|

Laura Margheri

Programme Manager and Knowledge Transfer Fellow – Imperial College London

Laura Margheri develops the scientific program and manages the research projects at the Aerial Robotics Laboratory at Imperial College London, managing international and multidisciplinary partnerships. Before joining Imperial College, she was project manager and postdoctoral fellow at the BioRobotics Institute of the Scuola Superiore Sant’Anna. Margheri has an M.S. in Biomedical Engineering (with Honours) and a PhD in BioRobotics (with Honours). She is also a member of the IEEE RAS Technical Committee on Soft Robotics and of the euRobotics Topic Group on Aerial Robotics, with interdisciplinary expertise in bio-inspired robotics, soft robotics, and aerial robotics. Since the beginning of 2014 she has been the Chair of the Women In Engineering (WIE) Committee of the Robotics & Automation Society. |

|

Brenda Mboya

Undergraduate Student – Ashesi University Ghana

Brenda Mboya is just finishing a B.S. in Computer Science at Ashesi University in Ghana. A technology enthusiast who enjoys working with young people, she also volunteers in VR at Ashesi University, with Future of Africa, Tech Era, and as a coach with the Ashesi Innovation Experience (AIX). Mboya was a Norman Foster Fellow in 2017, one of 10 scholars chosen from around the world to attend a one-week robotics atelier in Madrid. “Through this conference, the great potential robotics has, especially in Africa, been reaffirmed in my mind,” said Mboya. |

|

Katja Mombaur

Professor at the Institute of Computer Engineering (ZITI) – Heidelberg University

Katja Mombaur is coordinator of the newly founded Heidelberg Center for Motion Research and full professor at the Institute of Computer Engineering (ZITI), where she is head of the Optimization in Robotics & Biomechanics (ORB) group and the Robotics Lab. She holds a diploma degree in Aerospace Engineering from the University of Stuttgart and a Ph.D. degree in Mathematics from Heidelberg University. Mombaur is PI in the European H2020 project SPEXOR. She coordinated the EU project KoroiBot and was PI in MOBOT and ECHORD–GOP, and founding chair of the IEEE RAS technical committee on Model-based optimization for robotics. Her research focuses on the interactions of humans with exoskeletons, prostheses, and external physical devices. |

|

Devi Murthy

CEO – Kamal Kisan

Devi Murthy has a Bachelor’s degree in Engineering from Drexel University, USA and a Master’s in Entrepreneurship from IIM, Bangalore. She has over 6 years of experience in Product Development & Business Development at Kamal Bells, a sheet metal fabrications and components manufacturing company. In 2013 she founded Kamal Kisan, a for-profit Social Enterprise that works on improving farmer livelihoods through smart mechanization interventions that help farmers adopt modern agricultural practices and cultivate high value crops while reducing input costs, making them more profitable and sustainable. |

|

Sarah Osentoski

COO – Mayfield Robotics

Sarah Osentoski is COO at Mayfield Robotics, which produced Kuri, ‘the adorable home robot’. Previously she was the manager of the Personal Robotics Group at the Bosch Research and Technology Center in Palo Alto, CA. Osentoski is one of the authors of Robot Web Tools. She was also a postdoctoral research associate at Brown University working with Chad Jenkins in the Brown Robotics Laboratory. She received her Ph.D. from the University of Massachusetts Amherst, under Sridhar Mahadevan. Her research interests include robotics, shared autonomy, web interfaces for robots, reinforcement learning, and machine learning. Osentoski was featured as a 2017 Silicon Valley Biz Journal “Women of Influence” honoree. |

|

Kirstin H. Petersen

Assistant Professor – Cornell University

Kirstin H. Petersen is Assistant Professor of Electrical and Computer Engineering. She is interested in design and coordination of bio-inspired robot collectives and studies of their natural counterparts, especially in relation to construction. Her thesis work on a termite-inspired robot construction team made the cover of Science, and was ranked among the journal’s top ten scientific breakthroughs of 2014. Petersen continued on to a postdoc with Director Metin Sitti at the Max Planck Institute for Intelligent Systems 2014-2016, and became a fellow with the Max Planck ETH Center for Learning Systems in 2015. Petersen started the Collective Embodied Intelligence Lab in 2016 as part of the Electrical and Computer Engineering department at Cornell University, and has field memberships in Computer Science and Mechanical Engineering. |

|

Kristin Y. Pettersen

Professor Department of Engineering Cybernetics – NTNU

Kristin Ytterstad Pettersen (1969) is a Professor at the Department of Engineering Cybernetics, and holds a PhD and an MSc in Engineering Cybernetics from NTNU. She is also a Key Scientist at the Center of Excellence: Autonomous marine operations and systems (NTNU AMOS) and an Adjunct Professor at the Norwegian Defence Research Establishment (FFI). Her research interests include nonlinear control theory and motion control, in particular for marine vessels, AUVs, robot manipulators, and snake robots. She is also Co-Founder and Board Member of Eelume AS, a company that develops technology for subsea inspection, maintenance, and repair. In 2017 she received the Outstanding Paper Award from IEEE Transactions on Control Systems Technology, and in 2018 she was appointed Member of the Academy of the Royal Norwegian Society of Sciences and Letters. |

|

Veronica Santos

Assoc. Prof. of UCLA Mechanical & Aerospace Engineering / Principal Investigator – Director of the UCLA Biomechatronics Laboratory

Veronica J. Santos is an Associate Professor in the Mechanical and Aerospace Engineering Department at UCLA, and Director of the UCLA Biomechatronics Lab. She is one of 16 individuals selected for the Defense Science Study Group (DSSG), a two-year opportunity for emerging scientific leaders to participate in dialogues related to US security challenges. She received her B.S. from UC Berkeley in 1999 and her M.S. and Ph.D. degrees in Mechanical Engineering with a biometry minor from Cornell University in 2007. Santos was a postdoctoral research associate at the Alfred E. Mann Institute for Biomedical Engineering at USC, where she worked on a team to develop a novel biomimetic tactile sensor for prosthetic hands. She then directed the ASU Mechanical and Aerospace Engineering Program and ASU Biomechatronics Lab. Santos has received many honors and awards for both research and teaching. |

|

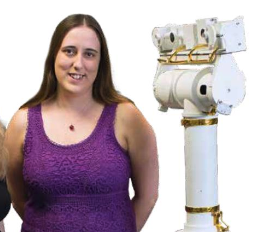

Casey Schulz

Systems Engineer – Omron Adept

Casey Schulz is a Systems Engineer at Omron Adept Technologies (OAT). She currently leads the engineering and design verification testing for a new mobile robot. Prior to OAT, Schulz worked at several Silicon Valley startups, a biotech consulting firm, and the National Ignition Facility at Lawrence Livermore National Labs. Casey received her M.S. in Mechanical Engineering from Carnegie Mellon University in 2009 for NSF-funded research in biologically inspired mobile robotics. She received her B.S. from Santa Clara University in 2008, building a proof-of-concept urban search and rescue mobile robot. Her focus is the development of new robotics technologies to better society. |

|

Kavitha Velusamy

Senior Director Computer Vision – BossaNova Robotics

Kavitha Velusamy is the Senior Director of Computer Vision at BossaNova Robotics, where she builds robot vision applications. Previously, she was a Senior Manager at NVIDIA, where she managed a global team responsible for delivering computer vision and deep learning software for self-driving vehicles. Prior to this, she was Senior Manager at Amazon, where she wrote the “far field” white paper that defined the device side of Amazon Echo, its vision, its architecture and its price points, and got approval from Jeff Bezos to build a team and lead Amazon Echo’s technology from concept to product. She holds a PhD in Signal Processing/Electrical Communication Engineering from the Indian Institute of Science. |

|

Martha Wells

Author

Martha Wells is a New York Times bestselling author of sci-fi and speculative fiction. Her Hugo award-winning series, The Murderbot Diaries, is about a self-aware security robot that hacks its “governor module”. Known for her world-building narratives, and detailed descriptions of fictional societies, Wells brings an academic grounding in anthropology to her fantasy writing. She holds a B.A. in Anthropology from Texas A&M University, and is the winner of over a dozen awards and nominations for fiction, including a Hugo Award, Nebula Award, and Locus Award. |

|

Andie Zhang

Global Product Manager – ABB Collaborative Robotics

Andie Zhang is Global Product Manager of Robotics at ABB, where she has full global ownership of a portfolio of industrial robot products, develops strategy for the company’s product portfolio, and drives product branding. Zhang’s previous experience includes 10+ years working for world leading companies in Supply Chain, Quality, Marketing and Sales Management. She holds a Masters in Engineering from KTH in Stockholm. Her focus is on collaborative applications for robots and user centered interface design. |

Join more than 700 women in our global online community https://womeninrobotics.org and find or host your own Women in Robotics event locally! Women In Robotics is a grassroots not-for-profit organization supported by Robohub and Silicon Valley Robotics.

And don’t forget to browse previous years’ lists, add all these women to Wikipedia (let’s have a Wikipedia Hackathon!), or nominate someone for inclusion next year!

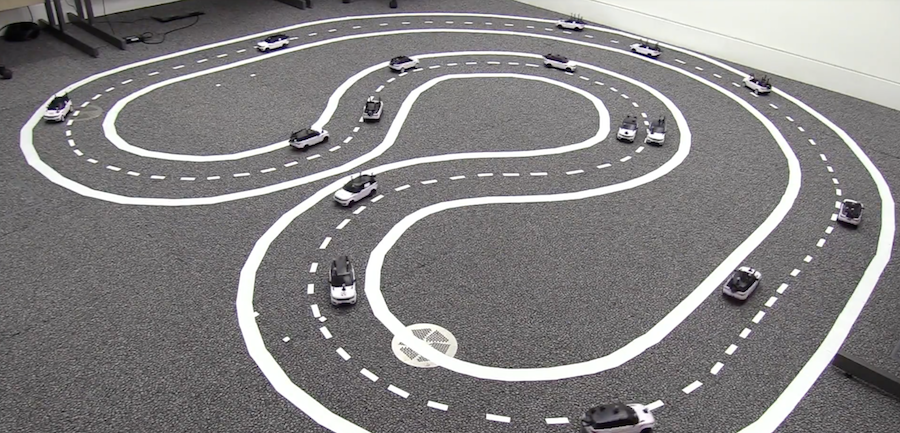

The deployment of connected, automated, and autonomous vehicles presents us with transformational opportunities for road transport. These opportunities reach beyond single-vehicle automation: by enabling groups of vehicles to jointly agree on maneuvers and navigation strategies, real-time coordination promises to improve overall traffic throughput, road capacity, and passenger safety. However, coordinated driving for intelligent vehicles still remains a challenging research problem, and testing new approaches is cumbersome. Developing true-scale facilities for safe, controlled vehicle testbeds is massively expensive and requires a vast amount of space. One approach to facilitating experimental research and education is to build low-cost testbeds that incorporate fleets of down-sized, car-like mobile platforms.
