Robots are being programmed to adapt in real time

It Takes a Swarm: These Robots Talk to Each Other, Make Decisions as a Group

MiR200 Improves Safety, Quality, and Competitiveness at Metro Plastics

Autonomous Mobility Grows Its Sea Legs — Toyota AI Investment in Sea Machines

Call for robot holiday videos 2018

That’s right! You better not run, you better not hide, you better watch out for brand new robot holiday videos on Robohub! Drop your submissions down our chimney at sabine.hauert@robohub.org and share the spirit of the season.

For inspiration, here are our first submissions.

Robot makes world-first baby coral delivery to Great Barrier Reef

In a world first, an undersea robot has dispersed microscopic baby corals (coral larvae) to help scientists working to repopulate parts of the Great Barrier Reef during this year’s mass coral spawning event.

Six weeks after winning the Great Barrier Reef Foundation’s $300,000 Out of the Blue Box Reef Innovation Challenge, Southern Cross University’s Professor Peter Harrison and QUT’s Professor Matthew Dunbabin trialled the ground-breaking initiative on Vlasoff Reef, near Cairns in north Queensland.

Professor Dunbabin engineered QUT’s reef protector RangerBot into LarvalBot specifically for the coral restoration project led by Professor Harrison.

The project builds on Professor Harrison’s successful larval reseeding technique piloted on the southern Great Barrier Reef in 2016 and 2017 in collaboration with the Great Barrier Reef Foundation, the Great Barrier Reef Marine Park Authority (GBRMPA) and Queensland Parks & Wildlife Service (QPWS), following successful small scale trials in the Philippines funded by the Australian Centre for International Agricultural Research.


“This year represents a big step up for our larval restoration research and the first time we’ve been able to capture coral spawn on a bigger scale using large floating spawn catchers then rearing them into tiny coral larvae in our specially constructed larval pools and settling them on damaged reef areas,” Professor Harrison said.

“Winning the GBRF’s Reef Innovation Challenge meant that we could increase the scale of the work planned for this year using mega-sized spawn catchers and fast track an initial trial of LarvalBot as a novel method of dispersing the coral larvae out on to the Reef.

“With further research and refinement, this technique has enormous potential to operate across large areas of reef and multiple sites in a way that hasn’t previously been possible.

“We’ll be closely monitoring the progress of settled baby corals over coming months and working to refine both the technology and the technique to scale up further in 2019.”

This research and the larval production process were also directly supported by the recent successful SBIR 2018 Coral larval restoration research project on Vlasoff Reef led by Professor Harrison with Katie Chartrand (James Cook University) and Associate Professor David Suggett (University of Technology Sydney), in collaboration with Aroona Boat Charters, the GBRMPA and QPWS.

With a current capacity to carry around 100,000 coral larvae per mission and plans to scale up to millions of larvae, the robot gently releases the larvae onto damaged reef areas, allowing them to settle and over time develop into coral polyps or baby corals.

Professor Dunbabin said LarvalBot could be compared to ‘an underwater crop duster’ operating very safely to ensure existing coral wasn’t disturbed.

“During this year’s trial, the robot was tethered so it could be monitored precisely but future missions will see it operate alone and on a much larger scale,” Professor Dunbabin said.

“Using an iPad to program the mission, a signal is sent to deliver the larvae and it is gently pushed out by LarvalBot. It’s like spreading fertiliser on your lawn.

“The robot is very smart, and as it glides along we target where the larvae need to be distributed so new colonies can form and new coral communities can develop.

“We have plans to do this again in Australia and elsewhere and I’m looking forward to working with Professor Harrison and Southern Cross University, the Great Barrier Reef Foundation and other collaborators to help tackle an important problem.”

This project builds on the work by Professor Dunbabin who developed RangerBot to help control the coral-killing crown-of-thorns starfish which is responsible for 40 per cent of the reef’s decline in coral cover.

Great Barrier Reef Foundation Managing Director Anna Marsden said, “It’s exciting to see this project progress from concept to implementation in a matter of weeks, not years. The recent IPCC report highlights that we have a very short window in which to act for the long term future of the Reef, underscoring the importance of seeking every opportunity to give our reefs a fighting chance.

“This project is testament to the power of collaboration between science, business and philanthropy. With the support of the Tiffany & Co. Foundation, whose longstanding support for coral reef conservation globally spans almost two decades, our international call for innovations to help the Reef has uncovered a solution that holds enormous promise for restoring coral reefs at scales never before possible.”

Following the success of this initial trial in 2018, the researchers plan to fully implement their challenge-winning proposal in 2019, building even larger mega spawn-catchers and solar powered floating larval incubation pools designed to rear hundreds of millions of genetically diverse, heat-tolerant coral larvae to be settled on damaged reefs through a combination of larval clouds and LarvalBots.

A new drone can change its shape to fly through a narrow gap

A research team from the University of Zurich and EPFL has developed a new drone that can retract its propeller arms in flight and make itself small to fit through narrow gaps and holes. This is particularly useful when searching for victims of natural disasters.


Inspecting a damaged building after an earthquake or during a fire is exactly the kind of job that human rescuers would like drones to do for them. A flying robot could look for people trapped inside and guide the rescue team towards them. But the drone would often have to enter the building through a crack in a wall, a partially open window, or through bars – something the typical size of a drone does not allow.

To solve this problem, researchers from Scaramuzza lab at the University of Zurich and Floreano lab at EPFL created a new kind of drone. Both groups are part of the National Centre of Competence in Research (NCCR) Robotics funded by the Swiss National Science Foundation. Inspired by birds that fold their wings in mid-air to cross narrow passages, the new drone can squeeze itself to pass through gaps and then go back to its previous shape, all the while continuing to fly. And it can even hold and transport objects along the way.

Mobile arms can fold around the main frame

“Our solution is quite simple from a mechanical point of view, but it is very versatile and very autonomous, with onboard perception and control systems,” explains Davide Falanga, researcher at the University of Zurich and the paper’s first author. In comparison to other drones, this morphing drone can maneuver in tight spaces and guarantee a stable flight at all times.

The Zurich and Lausanne teams worked in collaboration and designed a quadrotor with four propellers that rotate independently, mounted on mobile arms that can fold around the main frame thanks to servo-motors. The ace in the hole is a control system that adapts in real time to any new position of the arms, adjusting the thrust of the propellers as the center of gravity shifts.

“The morphing drone can adopt different configurations according to what is needed in the field,” adds Stefano Mintchev, co-author and researcher at EPFL. The standard configuration is X-shaped, with the four arms stretched out and the propellers at the widest possible distance from each other. When faced with a narrow passage, the drone can switch to an “H” shape, with all arms lined up along one axis, or to an “O” shape, with all arms folded as close as possible to the body. A “T” shape can be used to bring the onboard camera mounted on the central frame as close as possible to objects that the drone needs to inspect.
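To see why folding the arms forces the controller to adapt, it helps to compute where the center of gravity ends up for a given arm configuration. The sketch below is only an illustration under stated assumptions: the hinge positions, arm length, masses and the arm angles of the asymmetric pose are all hypothetical, and the planar point-mass model is a simplification rather than the researchers' actual controller.

```python
import math

# Hypothetical geometry and masses, chosen only for illustration.
HINGES = [(0.05, 0.05), (-0.05, 0.05), (-0.05, -0.05), (0.05, -0.05)]  # arm pivots (m)
ARM_LENGTH = 0.10   # pivot-to-propeller distance (m)
BODY_MASS = 0.40    # frame, battery and electronics (kg), centred at the origin
ROTOR_MASS = 0.05   # one motor plus propeller (kg)


def rotor_positions(arm_angles_deg):
    """Return the (x, y) position of each propeller for the given arm angles."""
    positions = []
    for (hx, hy), angle in zip(HINGES, arm_angles_deg):
        theta = math.radians(angle)
        positions.append((hx + ARM_LENGTH * math.cos(theta),
                          hy + ARM_LENGTH * math.sin(theta)))
    return positions


def center_of_gravity(arm_angles_deg):
    """Planar center of gravity of body plus rotors for one arm configuration."""
    total_mass = BODY_MASS + ROTOR_MASS * len(HINGES)
    positions = rotor_positions(arm_angles_deg)
    cx = sum(ROTOR_MASS * x for x, _ in positions) / total_mass
    cy = sum(ROTOR_MASS * y for _, y in positions) / total_mass
    return cx, cy


# Symmetric X shape versus a hypothetical asymmetric pose
# (front arms pointing sideways, rear arms pointing straight back).
X_SHAPE = [45, 135, 225, 315]
ASYMMETRIC = [90, 180, 180, 270]

if __name__ == "__main__":
    print("X shape:", center_of_gravity(X_SHAPE))
    print("asymmetric:", center_of_gravity(ASYMMETRIC))
```

In the symmetric X shape the center of gravity stays at the middle of the frame, while the asymmetric pose moves it backwards. Each propeller's lever arm about the center of gravity changes with it, which is why the thrust of each propeller has to be re-balanced after every reconfiguration.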

First step to fully autonomous rescue searches

In the future, the researchers hope to further improve the drone structure so that it can fold in all three dimensions. Most importantly, they want to develop algorithms that will make the drone truly autonomous, allowing it to look for passages in a real disaster scenario and automatically choose the best way to pass through them. “The final goal is to give the drone a high-level instruction such as ‘enter that building, inspect every room and come back’ and let it figure out by itself how to do it,” says Falanga.

Literature

Davide Falanga, Kevin Kleber, Stefano Mintchev, Dario Floreano, Davide Scaramuzza. The Foldable Drone: A Morphing Quadrotor that can Squeeze and Fly. IEEE Robotics and Automation Letters, 10 December 2018. DOI:10.1109/LRA.2018.2885575

Drones and satellite imaging to make forest protection pay

by Steve Gillman

Every year 7 million hectares of forest are cut down, chipping away at the 485 gigatonnes of carbon dioxide (CO2) stored in trees around the world, but low-cost drones and new satellite imaging could soon protect these carbon stocks and help developing countries get paid for protecting their trees.

‘If you can measure the biomass you can measure the carbon and get a number which has value for a country,’ said Pedro Freire da Silva, a satellite and flight system expert at Deimos Engenharia, a Portuguese technology company.

International financial institutions, such as the World Bank and the European Investment Bank, provide developing countries with economic support to keep forests’ carbon stocks intact through the UN REDD+ programme.

The more carbon a developing country can show it keeps in its forests, the more money the government could get, which would give them a greater incentive to protect these lands. But, according to Silva, these countries often lack the tools to determine the exact amount of carbon stored in their forests and that means they could be missing out on funding.

‘If you have a 10% error in your carbon stock (estimation), that can have a financial value,’ he said, adding that it also takes governments a lot of time and energy to collect the relevant data about their forests.

To address these challenges, a project called COREGAL developed automated low-cost drones that map biomass. They put a special sensor on drones that fly over forests and analyse Global Positioning System (GPS) and Galileo satellite signals as they bounce back through a tree canopy, which then reveals the biomass density of an area and, in turn, the carbon stocks.

‘The more leaves you have, the more power (from GPS and Galileo) is lost,’ said Silva who coordinated the project. This means when the drone picks up weaker satellite navigation readings there is more biomass below.

‘If you combine this data with satellite data we get a more accurate map of biomass than either would (alone),’ he added.
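The relationship Silva describes, weaker signal meaning more biomass, can be expressed as an attenuation in decibels. The following is a minimal sketch, not COREGAL's processing chain: the function names, the cell labels and the power values are hypothetical, and turning attenuation into an actual biomass figure would need a calibration that the article does not describe.

```python
import math


def attenuation_db(direct_power, canopy_power):
    """Power lost through the canopy, in decibels (both inputs in the same unit)."""
    return 10.0 * math.log10(direct_power / canopy_power)


def rank_cells_by_attenuation(readings):
    """Sort map cells from most to least attenuated signal.

    `readings` maps a cell name to (direct_power, canopy_power).
    A higher loss is read here as a sign of denser biomass.
    """
    losses = {cell: attenuation_db(d, c) for cell, (d, c) in readings.items()}
    return sorted(losses, key=losses.get, reverse=True)


if __name__ == "__main__":
    # Hypothetical measurements for three map cells.
    survey = {"clearing": (1.0, 0.9), "dense": (1.0, 0.05), "sparse": (1.0, 0.5)}
    print(rank_cells_by_attenuation(survey))
```

Ranking cells this way only orders them by relative canopy density; combining the ranking with satellite data, as Silva suggests, is what would anchor it to absolute biomass values.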

Sentinels

The project trialled its drone prototype in Portugal, with Brazil in mind as the target end user as it is on the frontline of global deforestation. According to Brazilian government data, an area about five times the size of London was destroyed between August 2017 and July this year.

COREGAL’s drones could end up enabling countries such as Brazil to access more from climate funds, in turn creating a stronger incentive for governments to protect their forests. Silva also believes the drones could act as a deterrent against illegal logging.

‘If people causing deforestation know that there are (drone) flight campaigns or people going to the field to monitor forests it can demotivate them,’ he said. ‘It is like a sentinel system.’

In the meantime, governments in other developing countries still need the tools to help them fight deforestation. According to Dr Thomas Häusler, a forest and climate expert at GAF, a German earth observation company, the many drivers of deforestation make it very difficult to sustainably manage forests.

‘(Deforestation) differs between regions and even in regions you have different drivers,’ said Dr Häusler. ‘In some countries they (governments) give concessions for timber logging and companies are (then) going to huge (untouched forest) areas to selectively log the highest value trees.’

Logging like this is occurring in Brazil, central Africa and Southeast Asia. When it happens, Dr Häusler says this can cause huge collateral damage because loggers leave behind roads that local populations use to access previously untouched forests which they further convert for agriculture or harvest wood for energy.

Demand for timber and agricultural produce from developed countries can also drive deforestation in developing countries because their governments see the forest as a source of economic development and then allow expansion.

With such social, political and economic dependency, it can be difficult, and expensive, for governments to implement preventative measures. According to Dr Häusler, to protect untouched forests these governments should be compensated for fighting deforestation.

‘To be compensated you need strong (forest) management and observation (tools),’ said Dr Häusler, who is also the coordinator of EOMonDis, a project developing an Earth-observation-based forest monitoring system that aims to support governments.

Domino effect

The EOMonDis team combines high-resolution data from the European Sentinel satellites, available every five days through Copernicus, the EU’s Earth observation system, with data from the North American Landsat-8 satellite.

Automated processing using special algorithms generates detailed maps on the current and past land use and forest situation to identify the carbon-rich forest areas. The project also has access to satellite data going as far back as the 1970s which can be used to determine how much area has been affected by deforestation.

As with COREGAL, these maps and the information they contain are used to put a value on the most carbon-rich forest areas, meaning countries can access more money from international financial institutions. The project is almost finished and the team hopes to have a commercially viable system soon.

‘The main focus is the climate change reporting process for countries who want compensation in fighting climate change,’ said Dr Häusler. ‘We can support this process by showing the current land-use situation and show the low and high carbon stocks.’

Another potential user of this system is the international food industry that sells products containing commodities linked to deforestation such as palm oil, cocoa, meat and dairy. In response to their contribution, and social pressure, some of these big companies have committed to zero-deforestation in their supply chain.

‘When someone (a company) is declaring land as zero deforestation, or that palm plantations fit into zero deforestation, they have to prove it,’ said Dr Häusler. ‘And a significant result (from the project) is we can now prove that.’

Dr Häusler says the system will help civil society and NGOs who want to make sure industry or governments are behaving themselves as well as allow the different groups to make environmentally sound decisions when choosing land for different purposes.

‘We can show everybody – the government, NGO stakeholders, but also the industry – how to better select the areas they want to use.’

The research in this article was funded by the EU.

3Q: Aleksander Madry on building trustworthy artificial intelligence

Photo courtesy of CSAIL

By Kim Martineau

Machine learning algorithms now underlie much of the software we use, helping to personalize our news feeds and finish our thoughts before we’re done typing. But as artificial intelligence becomes further embedded in daily life, expectations have risen. Before autonomous systems fully gain our confidence, we need to know they are reliable in most situations and can withstand outside interference; in engineering terms, that they are robust. We also need to understand the reasoning behind their decisions; that they are interpretable.

Aleksander Madry, an associate professor of computer science at MIT and a lead faculty member of the Computer Science and Artificial Intelligence Lab (CSAIL)’s Trustworthy AI initiative, compares AI to a sharp knife, a useful but potentially hazardous tool that society must learn to wield properly. Madry recently spoke at MIT’s Symposium on Robust, Interpretable AI, an event co-sponsored by the MIT Quest for Intelligence and CSAIL, and held Nov. 20 in Singleton Auditorium. The symposium was designed to showcase new MIT work in the area of building guarantees into AI, which has almost become a branch of machine learning in its own right. Six faculty members spoke about their research, 40 students presented posters, and Madry opened the symposium with a talk aptly titled “Robustness and Interpretability.” We spoke with Madry, a leader in this emerging field, about some of the key ideas raised during the event.

Q: AI owes much of its recent progress to deep learning, a branch of machine learning that has significantly improved the ability of algorithms to pick out patterns in text, images and sounds, giving us automated assistants like Siri and Alexa, among other things. But deep learning systems remain vulnerable in surprising ways: stumbling when they encounter slightly unfamiliar examples in the real world or when a malicious attacker feeds them subtly altered images. How are you and others trying to make AI more robust?

A: Until recently, AI researchers focused simply on getting machine-learning algorithms to accomplish basic tasks. Achieving even average-case performance was a major challenge. Now that performance has improved, attention has shifted to the next hurdle: improving the worst-case performance. Most of my research is focused on meeting this challenge. Specifically, I work on developing next-generation machine-learning systems that will be reliable and secure enough for mission-critical applications like self-driving cars and software that filters malicious content. We’re currently building tools to train object-recognition systems to identify what’s happening in a scene or picture, even if the images fed to the model have been manipulated. We are also studying the limits of systems that offer security and reliability guarantees. How much reliability and security can we build into machine-learning models, and what other features might we need to sacrifice to get there?

My colleague Luca Daniel, who also spoke, is working on an important aspect of this problem: developing a way to measure the resilience of a deep learning system in key situations. Decisions made by deep learning systems have major consequences, and thus it’s essential that end-users be able to measure the reliability of each of the model’s outputs. Another way to make a system more robust is during the training process. In her talk, “Robustness in GANs and in Black-box Optimization,” Stefanie Jegelka showed how the learner in a generative adversarial network, or GAN, can be made to withstand manipulations to its input, leading to much better performance.
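The gap between average-case and worst-case performance can be made concrete with a toy linear classifier. For such a model, an attacker who may change every input coordinate by at most epsilon can lower the classification margin by exactly epsilon times the sum of the absolute weights. The sketch below illustrates that textbook fact only; the function names and numbers are hypothetical, and it is not a description of the tools Madry's or Daniel's groups are building.

```python
def margin(weights, bias, x, label):
    """Signed margin of a linear classifier; positive means correctly classified.

    `label` is +1 or -1.
    """
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return label * score


def worst_case_margin(weights, bias, x, label, epsilon):
    """Smallest margin over all perturbations with |delta_i| <= epsilon."""
    return margin(weights, bias, x, label) - epsilon * sum(abs(w) for w in weights)


def worst_case_input(weights, x, label, epsilon):
    """The perturbed input that attains the worst-case margin."""
    def sign(v):
        return (v > 0) - (v < 0)
    return [xi - label * epsilon * sign(w) for w, xi in zip(weights, x)]


if __name__ == "__main__":
    w, b, x, y = [2.0, -1.0], 0.5, [1.0, 1.0], 1
    print("clean margin:", margin(w, b, x, y))
    print("worst case at eps=0.25:", worst_case_margin(w, b, x, y, 0.25))
    print("worst case at eps=0.60:", worst_case_margin(w, b, x, y, 0.60))
```

An input that is classified correctly with a comfortable margin can still be pushed across the decision boundary once epsilon is large enough, which is the kind of per-output reliability measure the passage above refers to. Deep networks need far more elaborate analysis, since their worst case has no such closed form.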

Q: The neural networks that power deep learning seem to learn almost effortlessly: Feed them enough data and they can outperform humans at many tasks. And yet, we’ve also seen how easily they can fail, with at least three widely publicized cases of self-driving cars crashing and killing someone. AI applications in health care are not yet under the same level of scrutiny but the stakes are just as high. David Sontag focused his talk on the often life-or-death consequences when an AI system lacks robustness. What are some of the red flags when training an AI on patient medical records and other observational data?

A: This goes back to the nature of guarantees and the underlying assumptions that we build into our models. We often assume that our training datasets are representative of the real-world data we test our models on — an assumption that tends to be too optimistic. Sontag gave two examples of flawed assumptions baked into the training process that could lead an AI to give the wrong diagnosis or recommend a harmful treatment. The first focused on a massive database of patient X-rays released last year by the National Institutes of Health. The dataset was expected to bring big improvements to the automated diagnosis of lung disease until a skeptical radiologist took a closer look and found widespread errors in the scans’ diagnostic labels. An AI trained on chest scans with a lot of incorrect labels is going to have a hard time generating accurate diagnoses.

A second problem Sontag cited is the failure to correct for gaps and irregularities in the data due to system glitches or changes in how hospitals and health care providers report patient data. For example, a major disaster could limit the amount of data available for emergency room patients. If a machine-learning model failed to take that shift into account its predictions would not be very reliable.

Q: You’ve covered some of the techniques for making AI more reliable and secure. What about interpretability? What makes neural networks so hard to interpret, and how are engineers developing ways to peer beneath the hood?

A: Understanding neural-network predictions is notoriously difficult. Each prediction arises from a web of decisions made by hundreds to thousands of individual nodes. We are trying to develop new methods to make this process more transparent. In the field of computer vision one of the pioneers is Antonio Torralba, director of The Quest. In his talk, he demonstrated a new tool developed in his lab that highlights the features that a neural network is focusing on as it interprets a scene. The tool lets you identify the nodes in the network responsible for recognizing, say, a door, from a set of windows or a stand of trees. Visualizing the object-recognition process allows software developers to get a more fine-grained understanding of how the network learns.

Another way to achieve interpretability is to precisely define the properties that make the model understandable, and then train the model to find that type of solution. Tommi Jaakkola showed in his talk, “Interpretability and Functional Transparency,” that models can be trained to be linear or have other desired qualities locally while maintaining the network’s overall flexibility. Explanations are needed at different levels of resolution much as they are in interpreting physical phenomena. Of course, there’s a cost to building guarantees into machine-learning systems — this is a theme that carried through all the talks. But those guarantees are necessary and not insurmountable. The beauty of human intelligence is that while we can’t perform most tasks perfectly, as a machine might, we have the ability and flexibility to learn in a remarkable range of environments.

Soft actor critic – Deep reinforcement learning with real-world robots

By Tuomas Haarnoja, Vitchyr Pong, Kristian Hartikainen, Aurick Zhou, Murtaza Dalal, and Sergey Levine

We are announcing the release of our state-of-the-art off-policy model-free reinforcement learning algorithm, soft actor-critic (SAC). This algorithm has been developed jointly at UC Berkeley and Google Brain, and we have been using it internally for our robotics experiments. Soft actor-critic is, to our knowledge, one of the most efficient model-free algorithms available today, making it especially well-suited for real-world robotic learning. In this post, we will benchmark SAC against state-of-the-art model-free RL algorithms and showcase a spectrum of real-world robot examples, ranging from manipulation to locomotion. We also release our implementation of SAC, which is particularly designed for real-world robotic systems.

Desired Features for Deep RL for Real Robots

What makes an ideal deep RL algorithm for real-world systems? Real-world experimentation brings additional challenges, such as constant interruptions in the data stream, the need for low-latency inference, and the need for smooth exploration to avoid mechanical wear and tear on the robot. These challenges set additional requirements for both the algorithm and its implementation.

Regarding the algorithm, several properties are desirable:

- Sample Efficiency. Learning skills in the real world can take a substantial amount of time. Prototyping a new task takes several trials, and the total time required to learn a new skill quickly adds up. Thus good sample complexity is the first prerequisite for successful skill acquisition.

- No Sensitive Hyperparameters. In the real world, we want to avoid parameter tuning for obvious reasons. Maximum entropy RL provides a robust framework that minimizes the need for hyperparameter tuning.

- Off-Policy Learning. An algorithm is off-policy if we can reuse data collected for another task. In a typical scenario, we need to adjust parameters and shape the reward function when prototyping a new task, and use of an off-policy algorithm allows reusing the already collected data.

Soft actor-critic (SAC), described below, is an off-policy model-free deep RL algorithm that is well aligned with these requirements. In particular, we show that it is sample efficient enough to solve real-world robot tasks in only a handful of hours, robust to hyperparameters and works on a variety of simulated environments with a single set of hyperparameters.
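The benefit of off-policy learning when prototyping can be sketched with a small replay buffer that stores transitions without rewards and labels them at sampling time, so data collected under an earlier reward definition remains usable after the reward is reshaped. This is a minimal illustration under our own assumptions; the class and the reward functions are hypothetical and are not the data structures used in the released implementation.

```python
import random
from collections import deque


class ReplayBuffer:
    """Stores (state, action, next_state) and computes rewards when sampling."""

    def __init__(self, capacity):
        # Oldest transitions are dropped automatically once capacity is reached.
        self.storage = deque(maxlen=capacity)

    def add(self, state, action, next_state):
        self.storage.append((state, action, next_state))

    def sample(self, batch_size, reward_fn, rng=random):
        """Draw a random batch and label it with the current reward function."""
        batch = rng.sample(list(self.storage), batch_size)
        return [(s, a, reward_fn(s, a, s2), s2) for s, a, s2 in batch]


if __name__ == "__main__":
    buffer = ReplayBuffer(capacity=1000)
    for step in range(10):
        buffer.add(state=float(step), action=1.0, next_state=float(step + 1))

    # First reward attempt, then a reshaped one; the same data serves both.
    first_try = buffer.sample(4, reward_fn=lambda s, a, s2: s2 - s)
    reshaped = buffer.sample(4, reward_fn=lambda s, a, s2: (s2 - s) - 0.1 * a * a)
    print(first_try)
    print(reshaped)
```

An on-policy method would have to discard the stored transitions whenever the policy changes, which is costly when every transition comes from a physical robot.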

In addition to the desired algorithmic properties, experimentation in the real world sets additional requirements for the implementation. Our release supports many of the features that we have found crucial when learning with real robots, perhaps most importantly:

- Asynchronous Sampling. Inference needs to be fast to minimize delay in the control loop, and we typically want to keep training during the environment resets too. Therefore, data sampling and training should run in independent threads or processes.

- Stop / Resume Training. When working with real hardware, whatever can go wrong, will go wrong. We should expect constant interruptions in the data stream.

- Action smoothing. Typical Gaussian exploration makes the actuators jitter at high frequency, potentially damaging the hardware. Thus temporally correlating the exploration is important.
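One common way to temporally correlate exploration noise is a first-order autoregressive filter, in which each noise sample is a blend of the previous sample and fresh Gaussian noise. The sketch below is an assumption on our part about how smoothing could be done, not a description of what the release implements; the function names and the values of `sigma` and `beta` are hypothetical.

```python
import random


def correlated_noise(steps, sigma=0.2, beta=0.9, seed=0):
    """Generate AR(1) exploration noise.

    beta = 0 gives independent Gaussian noise; beta close to 1 gives smooth noise.
    The fresh-noise scale keeps the long-run standard deviation at sigma.
    """
    rng = random.Random(seed)
    scale = sigma * (1.0 - beta ** 2) ** 0.5
    noise = rng.gauss(0.0, sigma)
    sequence = []
    for _ in range(steps):
        sequence.append(noise)
        noise = beta * noise + rng.gauss(0.0, scale)
    return sequence


def mean_abs_step(sequence):
    """Average change between consecutive samples, a rough measure of jitter."""
    diffs = [abs(b - a) for a, b in zip(sequence, sequence[1:])]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    jittery = correlated_noise(2000, beta=0.0)
    smooth = correlated_noise(2000, beta=0.9)
    print("independent noise jitter:", mean_abs_step(jittery))
    print("correlated noise jitter:", mean_abs_step(smooth))
```

Both sequences explore with the same overall spread, but the correlated one changes far less from one control step to the next, which is gentler on the actuators.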

Soft Actor-Critic

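Soft actor-critic is based on the maximum entropy reinforcement learning framework, which considers the entropy-augmented objective

$$J(\pi) = \mathbb{E}_{\pi}\left[\sum_{t} r(\mathbf{s}_t, \mathbf{a}_t) - \alpha \log \pi(\mathbf{a}_t \mid \mathbf{s}_t)\right],$$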

where $\mathbf{s}_t$ and $\mathbf{a}_t$ are the state and the action, and the expectation is taken over the policy and the true dynamics of the system. In other words, the optimal policy not only maximizes the expected return (first summand) but also the expected entropy of itself (second summand). The trade-off between the two is controlled by the non-negative temperature parameter $\alpha$, and we can always recover the conventional, maximum expected return objective by setting $\alpha=0$. In a technical report, we show that we can view this objective as an entropy constrained maximization of the expected return, and learn the temperature parameter automatically instead of treating it as a hyperparameter.

This objective can be interpreted in several ways. We can view the entropy term as an uninformative (uniform) prior over the policy, but we can also view it as a regularizer or as an attempt to trade off between exploration (maximize entropy) and exploitation (maximize return). In our previous post, we gave a broader overview and proposed applications that are unique to maximum entropy RL, and a probabilistic view of the objective is discussed in a recent tutorial. Soft actor-critic maximizes this objective by parameterizing a Gaussian policy and a Q-function with a neural network, and optimizing them using approximate dynamic programming. We defer further details of soft actor-critic to the technical report. In this post, we will view the objective as a grounded way to derive better reinforcement learning algorithms that perform consistently and are sample efficient enough to be applicable to real-world robotic applications, and—perhaps surprisingly—can yield state-of-the-art performance under the conventional, maximum expected return objective (without entropy regularization) in simulated benchmarks.
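The role of the temperature $\alpha$ can be illustrated with a toy calculation. The sketch below evaluates expected reward plus $\alpha$ times policy entropy for discrete action distributions at a fixed sequence of states. It is a simplification for intuition only: it ignores the system dynamics, uses hypothetical rewards and policies, and is unrelated to how soft actor-critic actually optimizes its Gaussian policy.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def soft_objective(rewards, policies, alpha):
    """Sum over time steps of expected reward plus alpha times policy entropy.

    rewards[t][a] is the reward of action a at step t,
    policies[t][a] is the probability of choosing it.
    """
    total = 0.0
    for step_rewards, step_policy in zip(rewards, policies):
        expected_reward = sum(p * r for p, r in zip(step_policy, step_rewards))
        total += expected_reward + alpha * entropy(step_policy)
    return total


if __name__ == "__main__":
    rewards = [[1.0, 0.0]]    # hypothetical one-step task with two actions
    greedy = [[1.0, 0.0]]     # always picks the better action
    uniform = [[0.5, 0.5]]    # explores both actions equally
    for alpha in (0.0, 1.0):
        print(alpha, soft_objective(rewards, greedy, alpha),
              soft_objective(rewards, uniform, alpha))
```

With $\alpha=0$ the greedy policy scores higher, which matches the conventional expected-return objective. With a large enough temperature the entropy bonus outweighs the lost reward and the more random policy is preferred.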

Simulated Benchmarks

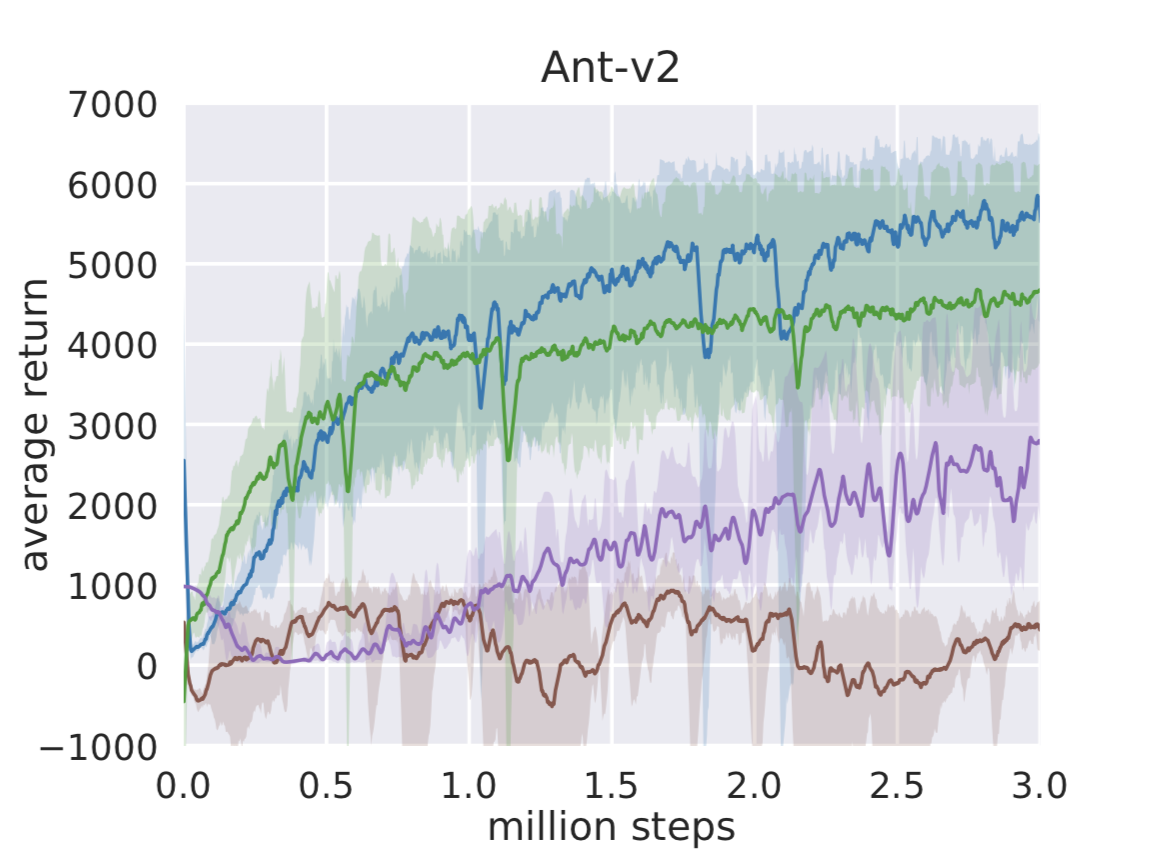

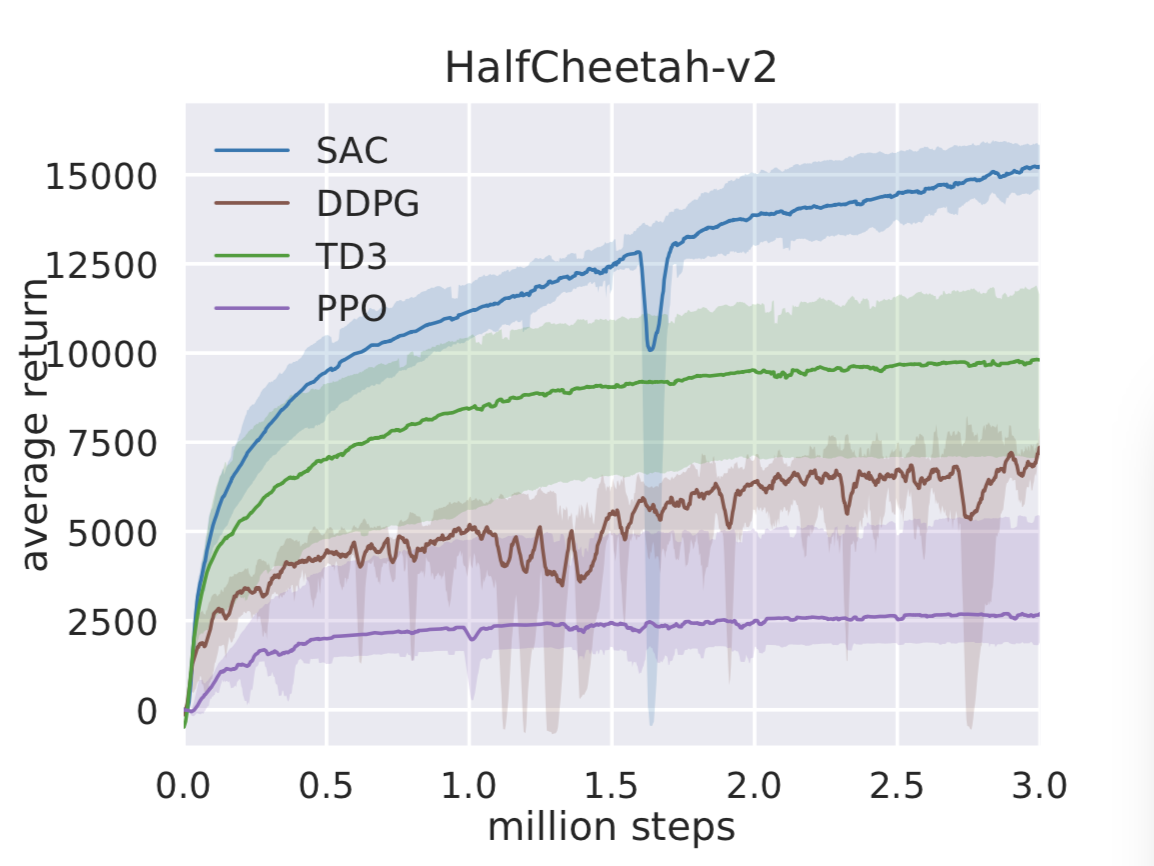

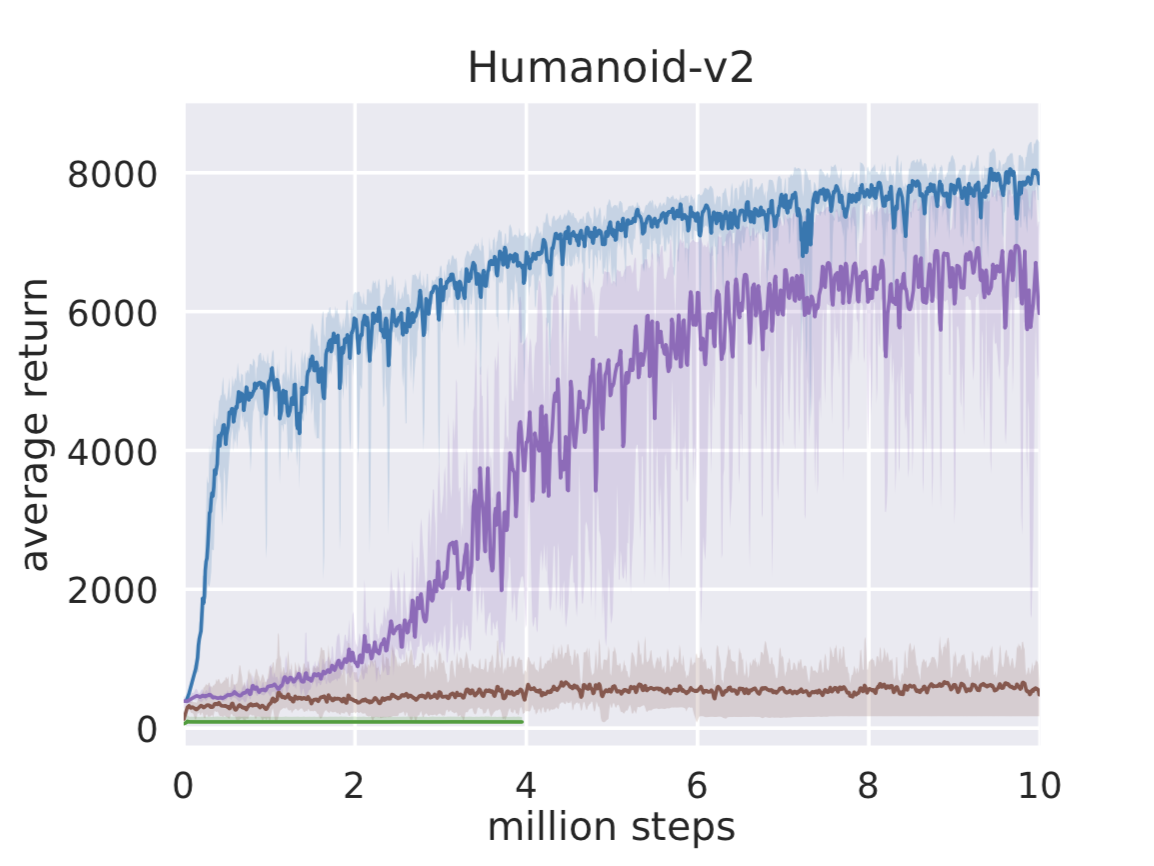

Before we jump into real-world experiments, we compare SAC on standard benchmark tasks to other popular deep RL algorithms: deep deterministic policy gradient (DDPG), twin delayed deep deterministic policy gradient (TD3), and proximal policy optimization (PPO). The figures above compare the algorithms on three challenging locomotion tasks from OpenAI Gym: HalfCheetah, Ant, and Humanoid. The solid lines depict the total average return and the shadings correspond to the best and the worst trial over five random seeds. Soft actor-critic, shown in blue, achieves the best performance and, even more important for real-world applications, it also performs well in the worst case. We have included more benchmark results in the technical report.
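For readers who want to produce the same kind of summary from their own runs, the mean curve and the best and worst envelopes over seeds can be computed as follows. This is a small sketch with a hypothetical function name and made-up numbers; it does not reproduce the benchmark data.

```python
def summarize_seeds(returns_per_seed):
    """Collapse per-seed learning curves into mean, worst and best curves.

    `returns_per_seed` is a list of equally long lists, one per random seed,
    holding the return measured at each evaluation point.
    """
    columns = list(zip(*returns_per_seed))
    mean = [sum(col) / len(col) for col in columns]
    worst = [min(col) for col in columns]
    best = [max(col) for col in columns]
    return mean, worst, best


if __name__ == "__main__":
    # Made-up returns for three seeds at three evaluation points.
    curves = [[10.0, 50.0, 90.0],
              [20.0, 40.0, 80.0],
              [30.0, 60.0, 100.0]]
    mean, worst, best = summarize_seeds(curves)
    print(mean, worst, best)
```

Reporting the worst trial alongside the mean matters on real hardware, where a single bad seed can mean a wasted day of robot time.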

Deep RL in the Real World

We tested soft actor-critic in the real world by solving three tasks from scratch without relying on simulation or demonstrations. Our first real-world task involves the Minitaur robot, a small-scale quadruped with eight direct-drive actuators. The action space consists of the swing angle and the extension of each leg, which are then mapped to desired motor positions and tracked with a PD controller. The observations include the motor angles as well as roll and pitch angles and angular velocities of the base. This learning task presents substantial challenges for real-world reinforcement learning. The robot is underactuated, and must therefore delicately balance contact forces on the legs to make forward progress. An untrained policy can lose balance and fall, and too many falls will eventually damage the robot, making sample-efficient learning essential. The video below illustrates the learned skill. Although we trained our policy only on flat terrain, we then tested it on varied terrains and obstacles. Because soft actor-critic learns robust policies, due to entropy maximization at training time, the policy can readily generalize to these perturbations without any additional learning.

The Minitaur robot (Google Brain, Tuomas Haarnoja, Sehoon Ha, Jie Tan, and Sergey Levine).
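The low-level control path described for the Minitaur, from leg-space actions to motor targets tracked by a PD controller, can be sketched in a few lines. Everything specific here is an assumption made for illustration: the swing-and-extension mapping, the gains, the unit-inertia joint model and the function names are hypothetical and are not taken from the robot's actual software.

```python
def leg_to_motor_targets(swing, extension):
    """Hypothetical mapping from one leg's action to its two motor angles.

    Swing moves the two motors in opposite directions around the extension value.
    """
    return extension + swing, extension - swing


def pd_torque(target, angle, velocity, kp=20.0, kd=4.0):
    """PD control law: pull towards the target angle, damp the velocity."""
    return kp * (target - angle) - kd * velocity


def simulate_joint(target, steps=1000, dt=0.01, kp=20.0, kd=4.0):
    """Track a fixed target with a unit-inertia joint (semi-implicit Euler)."""
    angle, velocity = 0.0, 0.0
    for _ in range(steps):
        torque = pd_torque(target, angle, velocity, kp, kd)
        velocity += torque * dt   # unit inertia: acceleration equals torque
        angle += velocity * dt
    return angle


if __name__ == "__main__":
    front, back = leg_to_motor_targets(swing=0.1, extension=1.0)
    print("motor targets:", front, back)
    print("settled angles:", simulate_joint(front), simulate_joint(back))
```

Because the policy only outputs targets and the PD loop handles tracking, the learned controller operates in a smoother, lower-dimensional space than raw torques would offer.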

Our second real-world robotic task involves training a 3-finger dexterous robotic hand to manipulate an object. The hand is based on the Dynamixel Claw hand, discussed in another post. This hand has 9 DoFs, each controlled by a Dynamixel servo-motor. The policy controls the hand by sending target joint angle positions for the on-board PID controller. The manipulation task requires the hand to rotate a “valve”-like object as shown in the animation below. In order to perceive the valve, the robot must use raw RGB images shown in the inset at the bottom right. The robot must rotate the valve so that the colored peg faces the right (see video below). The initial position of the valve is reset uniformly at random for each episode, forcing the policy to learn to use the raw RGB images to perceive the current valve orientation. A small motor is attached to the valve to automate resets and to provide the ground truth position for the determination of the reward function. The position of this motor is not provided to the policy. This task is exceptionally challenging due to both the perception challenges and the need to control a hand with 9 degrees of freedom.

Rotating a valve with a dexterous hand, learned directly from raw pixels (UC Berkeley, Kristian Hartikainen, Vikash Kumar, Henry Zhu, Abhishek Gupta, Tuomas Haarnoja, and Sergey Levine).
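The post does not give the exact reward, so the following is only a sketch of how a reward could be computed from the ground-truth valve angle, together with a uniform random reset. The reward shape (negative angular distance to the target), the choice of 0 radians as "peg facing right", and the function names are assumptions made for illustration.

```python
import math
import random


def wrap_angle(theta: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (theta + math.pi) % (2.0 * math.pi) - math.pi


def valve_reward(valve_angle: float, target_angle: float = 0.0) -> float:
    """Hypothetical reward: negative absolute angular distance to the target.

    The angle would come from the motor attached to the valve; it is used
    only for the reward and is never shown to the policy.
    """
    return -abs(wrap_angle(valve_angle - target_angle))


def reset_valve(rng: random.Random) -> float:
    """Sample the initial valve angle uniformly at random for a new episode."""
    return rng.uniform(-math.pi, math.pi)


rng = random.Random(0)
start = reset_valve(rng)
print(f"start angle: {start:.3f} rad, reward at start: {valve_reward(start):.3f}")
```

Wrapping the angle difference matters here: without it, a valve at just under a full turn from the target would be scored as far away even though the peg is almost aligned.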

In the final task, we trained a 7-DoF Sawyer robot to stack Lego blocks. The policy receives the joint positions and velocities, as well as the end-effector force, as input and outputs torque commands to each of the seven joints. The biggest challenge is to accurately align the studs before exerting a downward force to overcome the friction between them.

Stacking Legos with Sawyer (UC Berkeley, Aurick Zhou, Tuomas Haarnoja, and Sergey Levine).

Soft actor-critic solves all of these tasks quickly: the Minitaur locomotion and the block-stacking tasks both take 2 hours, and the valve-turning task from image observations takes 20 hours. We also learned a policy for the valve-turning task without images by providing the actual valve position as an observation to the policy. Soft actor-critic can learn this easier version of the valve task in 3 hours. For comparison, prior work has used PPO to learn the same task without images in 7.4 hours.
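As a quick back-of-the-envelope check on these figures, the snippet below computes the ratios implied by the reported training times. It uses only the numbers quoted above; the variable names are ours, and the comparison is rough, since the PPO result comes from separate prior work.

```python
# Training times in hours, as reported in the text above.
sac_hours = {
    "minitaur": 2.0,
    "lego_stacking": 2.0,
    "valve_images": 20.0,
    "valve_state": 3.0,
}
ppo_valve_state_hours = 7.4

# How much faster SAC learned the state-based valve task than the PPO result.
speedup_vs_ppo = ppo_valve_state_hours / sac_hours["valve_state"]

# How much longer learning from raw images took than learning from the valve position.
image_overhead = sac_hours["valve_images"] / sac_hours["valve_state"]

print(f"SAC vs. PPO on the state-based valve task: {speedup_vs_ppo:.1f}x faster")
print(f"Images vs. ground-truth valve position: {image_overhead:.1f}x longer")
```

On these numbers, SAC is roughly 2.5 times faster than the PPO baseline on the state-based task, and learning from pixels costs roughly 6.7 times more wall-clock time than learning from the valve position.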

Conclusion

Soft actor-critic is a step towards feasible deep RL with real-world robots. Work still needs to be done to scale up these methods to more challenging tasks, but we believe we are getting closer to the critical point where deep RL can become a practical solution for robotic tasks. Meanwhile, you can connect your robot to our toolbox and get learning started!

Acknowledgements

We would like to thank the amazing teams at Google Brain and UC Berkeley—specifically Pieter Abbeel, Abhishek Gupta, Sehoon Ha, Vikash Kumar, Sergey Levine, Jie Tan, George Tucker, Vincent Vanhoucke, Henry Zhu—who contributed to the development of the algorithm, spent long days running experiments, and provided the support and resources that made the project possible.

This article was initially published on the BAIR blog, and appears here with the authors’ permission.

Links:

- Project website

- Technical description of SAC

- softlearning (our robot learning toolbox, including a SAC implementation in Tensorflow)

- rlkit (another SAC implementation from UC Berkeley in PyTorch)

The metaphysical impact of automation

Earlier this month, I crawled into Dr. Wendy Ju’s autonomous car simulator to explore the future of human-machine interfaces at Cornell Tech’s Tata Innovation Center. Dr. Ju recently moved to the Roosevelt Island campus from Stanford University. While in California, the roboticist was famous for making videos capturing people’s reactions to self-driving cars using students disguised as “ghost-drivers” in seat costumes. Professor Ju’s work raises serious questions about the metaphysical impact of docility.

Last January, Toyota Research published a report on the neurological effects of speeding. The team displayed images and videos of sports cars racing down highways that produced spikes in brain activity. The study states, “we hypothesized that sensory inputs during high-speed driving would activate the brain reward system. Humans commonly crave sensory inputs that give rise to pleasant sensations, and abundant evidence indicates that the craving for pleasant sensations is associated with activation within the brain reward system.” The brain reward system is directly correlated to the body’s release of dopamine via the Ventral Tegmental Area (VTA). The findings confirmed that levels of brain activity in the VTA “were stronger in the fast condition than in the slow condition.” Essentially, speeding (which most drivers engage in regardless of laws) is addictive, as the brain rewards such aggressive behaviors with increased levels of dopamine.

As we relegate more driving to machines, the roads are in danger of becoming highways of strung-out dopamine junkies craving new ways to get their fix. Self-driving systems could lead to a marketing battle for in-cabin services pushed by manufacturers, software providers, and media/Internet companies. As an example, Apple filed a patent in August for “an augmented-reality powered windshield system.” This comes two years after Ford filed a similar patent for a display or “system for projecting visual content onto a vehicle’s windscreen.” Both of these filings, along with a handful of others, indicate that the race for capturing rider mindshare will be critical to driving the adoption of robocars. Strategy Analytics estimates this “passenger economy” could generate $7 trillion by 2050. Commuters who spend 250 million hours a year in the car are seen by these marketers as a captive audience for new ways to fill dopamine-deprived experiences.

I predict that in-cabin services will be the lead story coming out of Las Vegas at next month’s Consumer Electronics Show (CES). For example, last week Audi announced a new partnership with Disney to develop innovative ways to entertain passengers. Audi calls the in-cabin experience “The 25th Hour,” which will be further unveiled at CES. Providing a sneak peek into its meaning, CNET interviewed Nils Wollny, head of Audi’s digital business strategy. According to Wollny, the German automobile manufacturer approached Disney 18 months ago to forge a relationship. Wollny explains, “You might be familiar with their Imagineering division [Walt Disney Imagineering], they’re very heavy into building experiences for customers. And they were highly interested in what happens in cars in the future.” He continues, “There will be a commercialization or business approach behind it [for Audi] I’d call it a new media type that isn’t existing yet that takes full advantage of being in a vehicle. We created something completely new together, and it’s very technologically driven.” When illustrating this vision to CNET’s Roadshow, Wollny directed the magazine to Audi’s fully autonomous concept car design that “blurs the lines between the outside world and the vehicle’s cabin.” This is accomplished by turning windows into screens with digital overlays that show media while the outside world rushes by at 60 miles per hour.

Self-driving cars will be judged not by the speed of their engines, but by the comfort of their cabins. Wollny’s description is reminiscent of the marketing efforts of social media companies that were successful in turning an entire generation into screen addicts. Facebook’s founding president, Sean Parker, admitted recently that the social network was founded with the strategy of consuming “as much of your time and conscious attention as possible.” To accomplish this devious objective, Parker confesses that the company exploited the “vulnerability in human psychology.” When you like something or comment on a friend’s photo, Parker boasted, “we… give you a little dopamine hit.” The mobile economy has birthed dopamine experts such as Ramsay Brown, cofounder of Dopamine Labs, which promises app designers increased levels of “stickiness” by aligning game play to the player’s cerebral reward system. Using machine learning, Brown’s technology monitors each player’s activity in order to deliver the optimal spike of dopamine. New York Times columnist David Brooks said it best: “Tech companies understand what causes dopamine surges in the brain and they lace their products with ‘hijacking techniques’ that lure us in and create ‘compulsion loops’.”

The promise of automation is to free humans from dull, dirty, and dangerous chores. The flip side many espouse is that artificial intelligence could make us too reliant on technology, idling society. Already, semi-autonomous systems are being cited as a cause of workplace accidents. Andrew Moll of the United Kingdom’s Chamber of Shipping warned that greater levels of automation, by outsourcing decision making to computers, have led to higher levels of maritime collisions. Moll pointed to a recent spate of seafaring incidents: “We have seen increasing integration of ship systems and increasing reliance on computers.” He elaborated that “Humans do not make good monitors. We need to set alarms and alerts, otherwise mariners will not do checks.” Moll argued that technology is increasingly making workers lazy, as many feel a “lack of meaning and purpose” and are suffering from mental fatigue, which is leading to a rise in workplace injuries. “Seafarers would be tired and demotivated when they get to port,” cautioned Moll. These observations are not isolated to shipping: safety driver fatigue was faulted as one of the main causes of the recent fatality in Uber’s autonomous taxi program in Arizona. In the Pixar movie WALL-E, the future is so automated that humans have lost all motivation to leave their mobile lounge chairs. To avoid this dystopian vision, successful robotic deployments will have to strike the right balance between augmenting the physical and providing cerebral stimulation.

To better understand the automation landscape, join us at the next RobotLab event on “Cybersecurity & Machines” with John Frankel of ffVC and Guy Franklin of SOSA on February 12th in New York City. RSVP today!