Four robots that aim to teach your kids to code

Few jobs can be completely replaced by new technologies

The Tale of the Painting Robot that didn’t Steal Anyone’s Job

Robots in Depth with Dave Rollinson

In this episode of Robots in Depth, Per Sjöborg speaks with Dave Rollinson from Hebi Robotics about their modular robotics systems.


Dave shares how he had the opportunity to work with sewer inspection robots early on. He learned a lot from constantly bringing the robots out in the field. Seeing how the robots were used, as regular tools, and how they succeeded and failed helped him iterate the design and take the steps needed to create a well-functioning product.

Dave also talks about how he wanted to continue to build robots as he continued his education. He chose to go to Howie Choset’s laboratory at CMU because they build their own hardware.

We get the opportunity to see one of the modular robotics systems Hebi is working on, live, along with a few examples of how it is being used.

At the end of the interview, Dave and the host Per, who is a major modular robotics fan, discuss the significance of modularity in robotics and how it can change how we develop and use robots and machines.

Uber and Waymo settle lawsuit in a giant victory for Uber

In a shocker, it was announced that Uber and Waymo (Google/Alphabet) have settled their famous lawsuit for around $245 million in Uber stock. There is no cash, and Uber agrees it won’t use any Google hardware or software trade secrets, which, of course, it had always denied ever using.

I think this is a tremendous victory for Uber. Google had proposed a $1B settlement early on that was rejected. Waymo had not yet provided all the evidence necessary to show damages, but one has to presume they had more to come that made Uber feel it should settle. Of course, the cloud of a lawsuit and years of appeals over their programs and eventual IPO also were worth closing out.

What’s great for Uber is that it’s a stock deal. While the number is not certain, some estimates suggest that this amount of stock might not be much more than the shares of Uber lost by Anthony Levandowski when he was fired for not helping with the lawsuit. In other words, Uber fixes the problems triggered by Anthony’s actions by paying off Waymo with the stock they used to buy Otto from Anthony. They keep the team (which is really what they bought, since at 7 months of age, Otto had done some impressive work but nothing worth $700M) and they get clear of the lawsuit.

The truth is, Uber can’t be in a fight with Google. All Uber rides are booked through the platforms of Google and Apple. Without those platforms there is no Uber. I am not suggesting that Apple or Google would do illegal-monopoly tricks to fight Uber. They don’t have to, though there are some close-to-the-line tricks they could use that don’t violate anti-trust but make Uber’s life miserable. You simply don’t want to be in a war for your existence with the platform you depend on for that existence.

Instead, Alphabet now increases its stake in Uber, which gives it more reason to be positively inclined toward the company. There will still be heavy competition between Waymo and Uber, but Waymo now has an incentive not to hurt Uber too much.

For a long time, it had seemed like there would be a fantastic synergy between the companies. Google had been an early investor in Uber. Waymo has the world’s #1 robocar technology. Uber has the world’s #1 brand in selling rides — the most important use of that technology. Together they would have ruled the world. That never happened, and is unlikely to happen now (though no longer impossible). Alphabet has instead invested in Lyft.

Absent working with Lyft or Uber, Waymo needs to create its own ride service on the scale of Uber’s. Few companies could enter that market convincingly today, but Alphabet is one of those few. Yet it has never done so. You need to do more than a robot ride service. Robocars won’t take you from anywhere to anywhere for decades, so you need a service that combines robocar rides on the popular routes with human drivers for the long-tail rides. Uber and Lyft are very well poised to deliver that; Waymo is not.

Uber settles this dangerous lawsuit for “free” and turns Alphabet back from an enemy to a frenemy. They get to go ahead full steam, and if they botch their own self-drive efforts they still have the option of buying somebody else’s technology, even Waymo’s. With new management they are hoping to convince the public they aren’t chaotic neutral any more. I think they have come out of this pretty well.

For Waymo, what have they won? Well, they got some Uber stock, which is nice, but it’s just money, and Alphabet has immense piles of money that this barely dents. They stuck it to Anthony and slowed Uber down for a while. The hard reality is that many companies are now developing long-range LIDAR like the kind they alleged Anthony stole for Uber. When Waymo built it, and Otto tried to build it, nobody had it for sale. Time has passed, and that’s just not as much of an advantage as it used to be. In addition, Waymo has (correctly) put its focus on urban driving, not the highway driving where long-range LIDAR is so essential. While Anthony won’t use the knowledge he gained on the Waymo team to help Uber, several other former team members are there, and while they can’t use any trade secrets (and couldn’t before, really), their experience is not so restricted.

For the rest of the field, they can no longer chuckle at their rivals fighting. Not so great news for Lyft and other players.

Israel, a land flowing with AI and autonomous cars

I recently led a group of 20 American tech investors to Israel in conjunction with the UJA and Israel’s Ministry of Economy and Industry. We witnessed firsthand the innovation that has produced more than $22 billion of investments and acquisitions within the past year. We met with the university that produced Mobileye, with the investor who believed in its founder, and with the network of multinational companies supporting the startup ecosystem. Mechatronics is blooming in the desert, from the CyberTech Convention in Tel Aviv to the robotics labs at Capsula to the latest autonomous driving inventions in the hills of Jerusalem.

Sitting in a suspended conference room that floats three stories above the ground inside the “greenest building in the Middle East,” I had the good fortune to meet Torr Polakow of Curiosity Lab. Torr is a PhD student of famed roboticist Dr. Goren Gordon, whose research tests the boundaries of human-robot social interaction. At the 2014 World Science Fair in New York City, Gordon partnered with MIT professor and Jibo founder Dr. Cynthia Breazeal to show that it is possible to teach machines to be curious. The experiment embedded a reinforcement learning algorithm into Breazeal’s DragonBot that enabled the robot to acquire its own successful behaviors for engaging humans. The scientists programmed the robot with nine non-verbal expressions and a reward system that was activated based on its ability to engage crowds: the greater the number of faces staring back at the robot, the bigger the reward. After two hours, it had learned that deploying its “sad face” with big eyes, reminiscent of Puss in Boots from Shrek, captured the most sustained attention from the audience. The work of Gordon and Breazeal opened up the field of social robots and the ability to teach computers human empathy, which will eventually be deployed in fleets of mechanical caregivers for our aging society. Gordon is now working tirelessly to create a mathematical model of “Hierarchical Curiosity Loops (HCL)” for “curiosity-driven behavior” to “increase our understanding of the brain mechanisms behind it.”
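The reward setup described above can be read as a multi-armed bandit: each of the nine expressions is an “arm,” and the reward is the number of faces looking at the robot. The sketch below uses a simple epsilon-greedy learner as a stand-in; the algorithm actually used on DragonBot may well differ, and the `count_faces` function and its face counts are hypothetical simulations, not data from the experiment.

```python
import random

NUM_EXPRESSIONS = 9  # the article mentions nine non-verbal expressions


def count_faces(expression, rng):
    """Hypothetical stand-in for a face detector: how many faces look at the
    robot after it shows `expression`. Expression 3 is (arbitrarily) made the
    most engaging one, playing the role of the "sad face"."""
    base = 5 if expression == 3 else 2
    return max(0, base + rng.randint(-1, 1))


def learn_expression(steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: mostly show the best-scoring expression so far,
    occasionally try a random one, and track the mean reward of each."""
    rng = random.Random(seed)
    counts = [0] * NUM_EXPRESSIONS
    values = [0.0] * NUM_EXPRESSIONS  # running mean reward per expression
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.randrange(NUM_EXPRESSIONS)  # explore
        else:
            a = max(range(NUM_EXPRESSIONS), key=lambda i: values[i])  # exploit
        reward = count_faces(a, rng)  # more faces -> bigger reward
        counts[a] += 1
        values[a] += (reward - values[a]) / counts[a]  # incremental mean
    best = max(range(NUM_EXPRESSIONS), key=lambda i: values[i])
    return best, values


best, values = learn_expression()
print("most engaging expression:", best)
```

Exploration matters here: a purely greedy learner could lock onto the first expression that earns any reward and never discover the more engaging one.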

Traveling east, we met with Yissum, the technology transfer arm of Hebrew University, the birthplace of the cherry tomato and Mobileye. The history of the automotive startup that became the largest Israeli initial public offering, and later a $15.3 billion acquisition by Intel, is a great example of how today’s Israeli innovations are evolving into larger enterprises more quickly than at any time in history. Mobileye was founded in 1999 by Professor Amnon Shashua, a leader in computer vision and machine learning software. Dr. Shashua realized early on that his technology had wider applications for industries outside of Israel, particularly automotive. With the support of his university, Mobileye saw its first product, the EyeQ chip, piloted by Tier 1 powerhouse Denso within a few years of its founding. While it took Mobileye 18 years to achieve full liquidity, Israeli cybersecurity startup Argus was sold last month to Elektrobit for a rumored $400 million just four years after its founding. Shashua’s latest computer vision startup, OrCam, focuses on giving sight to people with impaired vision.

Alongside Shashua’s successful portfolio of startups, computer vision innovator Professor Shmuel Peleg is probably best known for catching the 2013 Boston Marathon bombers using his image-tracking software. Dr. Peleg’s company, BriefCam, tracks anomalies across multiple video feeds at once, layering simultaneous events on top of one another. The system was programmed with known aspects of the bombers’ appearance (e.g., men with backpacks) and then isolated the suspects by their behavior. For example, as everyone was watching the marathon minutes before the explosion, the video captured the Tsarnaev brothers leaving the scene after dropping their backpacks on the street. BriefCam’s platform made it possible for the FBI to capture the perpetrators within 101 hours of the event, rather than manually sifting through the more than 13,000 videos and 120,000 photographs taken by stationary and cell phone cameras.

This past New Year’s Eve, BriefCam was deployed by New York City to secure the area around Times Square. In a joint statement, the FBI, NYPD, Department of Homeland Security and the New York Port Authority said they plan to deploy BriefCam’s Video Synopsis across all their video cameras as they “remain concerned about international terrorists and domestic extremists potentially targeting” the celebration. According to Peleg, “You can take the entire night and instead of watching 10 hours you can watch ten minutes. You can detect these people and check what they are planning.”

BriefCam is just one of thousands of new innovations entering the smart city landscape from Israel. In the past three years, 400 new smart mobility startups have opened shop in Israel, accumulating over $4 billion in investment. During that time, General Motors’ Israeli research and development office grew from a few engineers to more than 200 leading computer scientists and roboticists. In fact, Tel Aviv is now a global innovation hub for every major car manufacturer and automotive supplier, surrounded by new accelerators and international consortiums, from the Drive Accelerator to Ecomotion, which seem to be opening faster than Starbucks in the United States.

As the auto industry goes through its biggest revolution in 100 years, the entire world’s attention is now centered on this small country known for ground-breaking innovation. In the words of Israel’s Innovation Authority, “The acquisition of Mobileye by Intel this past March for $15.3 billion, one of the largest transactions in the field of auto-tech in 2017, has focused the attention of global corporations and investors on the tremendous potential of combining Israeli technological excellence with the autonomous vehicle revolution.”

Japan lays groundwork for boom in robot carers

Learning robot objectives from physical human interaction

Humans physically interact with each other every day – from grabbing someone’s hand when they are about to spill their drink, to giving your friend a nudge to steer them in the right direction, physical interaction is an intuitive way to convey information about personal preferences and how to perform a task correctly.

So why aren’t we physically interacting with current robots the way we do with each other? Seamless physical interaction between a human and a robot requires a lot: lightweight robot designs, reliable torque or force sensors, safe and reactive control schemes, the ability to predict the intentions of human collaborators, and more! Luckily, robotics has made many advances in the design of personal robots specifically developed with humans in mind.

However, consider the example from the beginning where you grab your friend’s hand as they are about to spill their drink. Now imagine that instead of your friend, it is a robot that is spilling. Because state-of-the-art robot planning and control algorithms typically assume human physical interventions are disturbances, once you let go of the robot, it will resume its erroneous trajectory and continue spilling the drink. This gap stems from how robots reason about physical interaction: instead of thinking about why the human physically intervened and replanning in accordance with what the human wants, most robots simply resume their original behavior after the interaction ends.

We argue that robots should treat physical human interaction as useful information about how they should be doing the task. We formalize reacting to physical interaction as an objective (or reward) learning problem and propose a solution that enables robots to change their behaviors while they are performing a task according to the information gained during these interactions.

Reasoning About Physical Interaction: Unknown Disturbance versus Intentional Information

The field of physical human-robot interaction (pHRI) studies the design, control, and planning problems that arise from close physical interaction between a human and a robot in a shared workspace. Prior research in pHRI has developed safe and responsive control methods to react to a physical interaction that happens while the robot is performing a task. Proposed by Hogan et al., impedance control is one of the most commonly used methods to move a robot along a desired trajectory when there are people in the workspace. With this control method, the robot acts like a spring: it allows the person to push it, but moves back to its original desired position after the human stops applying forces. While this strategy is very fast and enables the robot to safely adapt to the human’s forces, the robot does not leverage these interventions to update its understanding of the task. Left alone, the robot would continue to perform the task in the same way as it had planned before any human interactions.
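To make the spring analogy concrete, here is a minimal one-dimensional sketch of an impedance-controlled point mass: a virtual spring and damper pull it toward a desired position, a human push displaces it, and it drifts back once the push ends. The mass, stiffness, damping, and force values are arbitrary assumptions, not parameters of any real robot.

```python
def simulate_impedance(x_desired=0.0, steps=3000, dt=0.001,
                       mass=1.0, stiffness=100.0, damping=20.0):
    """Simulate a 1-D point mass under impedance control.

    The controller force is u_R = -K (x - x_d) - B v, i.e. a virtual
    spring-damper anchored at the desired position x_d. A human applies an
    external force u_H during the first second only."""
    x, v = x_desired, 0.0
    trace = []
    for i in range(steps):
        t = i * dt
        u_H = 5.0 if t < 1.0 else 0.0  # human pushes, then lets go
        u_R = -stiffness * (x - x_desired) - damping * v
        a = (u_R + u_H) / mass
        v += a * dt  # semi-implicit Euler integration
        x += v * dt
        trace.append(x)
    return trace


trace = simulate_impedance()
print(f"displacement while pushed: {trace[999]:.3f}")  # roughly u_H / K
print(f"displacement at the end:   {trace[-1]:.4f}")   # close to zero again
```

Nothing in this loop ever changes `x_desired`: the push is treated purely as a disturbance, which is exactly the behavior the authors argue against.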

Why is this the case? It boils down to what assumptions the robot makes about its knowledge of the task and the meaning of the forces it senses. Typically, a robot is given a notion of its task in the form of an objective function. This objective function encodes rewards for different aspects of the task like “reach a goal at location X” or “move close to the table while staying far away from people”. The robot uses its objective function to produce a motion that best satisfies all the aspects of the task: for example, the robot would move toward goal X while choosing a path that is far from a human and close to the table. If the robot’s original objective function was correct, then any physical interaction is simply a disturbance from its correct path. Thus, the robot should allow the physical interaction to perturb it for safety purposes, but it will return to the original path it planned since it stubbornly believes it is correct.

In contrast, we argue that human interventions are often intentional and occur because the robot is doing something wrong. While the robot’s original behavior may have been optimal with respect to its pre-defined objective function, the fact that a human intervention was necessary implies that the original objective function was not quite right. Thus, physical human interactions are no longer disturbances but rather informative observations about what the robot’s true objective should be. With this in mind, we take inspiration from inverse reinforcement learning (IRL), where the robot observes some behavior (e.g., being pushed away from the table) and tries to infer an unknown objective function (e.g., “stay farther away from the table”). Note that while many IRL methods focus on the robot doing better the next time it performs the task, we focus on the robot completing its current task correctly.

Formalizing Reacting to pHRI

With our insight on physical human-robot interactions, we can formalize pHRI as a dynamical system, where the robot is unsure about the correct objective function and the human’s interactions provide it with information. This formalism defines a broad class of pHRI algorithms, which includes existing methods such as impedance control, and enables us to derive a novel online learning method.

We will focus on two parts of the formalism: (1) the structure of the objective function and (2) the observation model that lets the robot reason about the objective given a human physical interaction. Let $x^t$ be the robot’s state (e.g., position and velocity) and $u_R^t$ be the robot’s action (e.g., the torque it applies to its joints). The human can physically interact with the robot by applying an external torque, called $u_H^t$, and the robot moves to the next state via its dynamics, $x^{t+1} = f(x^t, u_R^t + u_H^t)$.

The Robot Objective: Doing the Task Right with Minimal Human Interaction

In pHRI, we want the robot to learn from the human, but at the same time we do not want to overburden the human with constant physical intervention. Hence, we can write down an objective for the robot that optimizes both completing the task and minimizing the amount of interaction required, with a weight $\lambda$ trading off between the two:

$$r(x^t, u_R^t, u_H^t; \theta) = \theta^\top \phi(x^t, u_R^t, u_H^t) - \lambda \lVert u_H^t \rVert^2$$

Here, $\phi(x^t, u_R^t, u_H^t)$ encodes the task-related features (e.g., “distance to table”, “distance to human”, “distance to goal”) and $\theta$ determines the relative weight of each of these features. The parameter $\theta$ encapsulates the true objective: if the robot knew exactly how to weight all the aspects of its task, then it could compute how to perform the task optimally. However, the robot does not know this parameter! Robots will not always know the right way to perform a task, and certainly not the human-preferred way.
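As a minimal sketch of this kind of objective, assuming NumPy, hand-picked feature values, and a hypothetical penalty weight `lam`: the per-timestep reward is a weighted sum of task features minus a penalty on the squared magnitude of the human’s torque, so the same robot behavior scores lower when the human has to push.

```python
import numpy as np


def reward(features, theta, u_H, lam=0.5):
    """Per-timestep reward: weighted task features minus an interaction penalty.

    features: task features, e.g. [-dist_to_goal, -dist_to_table]
              (negated so that larger is better; an assumption of this sketch)
    theta:    feature weights, which the robot does not know in pHRI
    u_H:      human torque vector; lam trades task reward against human effort
    """
    features = np.asarray(features, dtype=float)
    theta = np.asarray(theta, dtype=float)
    u_H = np.asarray(u_H, dtype=float)
    return float(theta @ features - lam * (u_H @ u_H))


phi = [-0.2, -0.4]   # hypothetical feature values at one timestep
theta = [1.0, 0.5]   # hypothetical weights
print(reward(phi, theta, u_H=[0.0, 0.0]))  # no interaction
print(reward(phi, theta, u_H=[1.0, 0.0]))  # same state, human pushing
```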

The Observation Model: Inferring the Right Objective from Human Interaction

As we have argued, the robot should observe the human’s actions to infer the unknown task objective. To link the direct human forces that the robot measures with the objective function, the robot uses an observation model. Building on prior work in maximum entropy IRL as well as the Boltzmann distributions used in cognitive science models of human behavior, we model the human’s interventions as corrections that approximately maximize the robot’s expected reward at state $x^t$ while taking action $u_R^t + u_H^t$. This expected reward encompasses the immediate and future rewards and is captured by the $Q$-value:

$$P(u_H^t \mid x^t, u_R^t; \theta) \propto e^{Q(x^t, u_R^t + u_H^t; \theta)}$$

Intuitively, this model says that a human is more likely to choose a physical correction that, when combined with the robot’s action, leads to a desirable (i.e., high-reward) behavior.
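A small numerical sketch of this Boltzmann-style observation model, assuming a handful of candidate corrections whose $Q$-values are simply given (computing real $Q$-values would require solving the planning problem). The probability of each correction is proportional to the exponential of its $Q$-value; the `beta` sharpness parameter is our own addition, and `beta = 1` recovers the plain exponential.

```python
import numpy as np


def correction_probabilities(q_values, beta=1.0):
    """P(u_H | x, u_R; theta) proportional to exp(beta * Q(x, u_R + u_H; theta)).

    q_values: Q-value of the robot's action combined with each candidate
              human correction.
    beta:     assumed rationality coefficient; larger values make the model
              favour high-Q corrections more sharply."""
    z = beta * np.asarray(q_values, dtype=float)
    z -= z.max()  # subtract the max for numerical stability
    p = np.exp(z)
    return p / p.sum()


# Hypothetical Q-values for three candidate corrections:
# no push, push toward the table, push away from the table.
probs = correction_probabilities([1.0, 3.0, 0.5])
print(probs.round(3))  # the highest-Q correction is the most likely
```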

Learning from Physical Human-Robot Interactions in Real-Time

Much like teaching another human, we expect that the robot will continuously learn while we interact with it. However, the learning framework that we have introduced requires that the robot solve a Partially Observable Markov Decision Process (POMDP); unfortunately, it is well known that solving POMDPs exactly is at best computationally expensive, and at worst intractable. Nonetheless, we can derive approximations from this formalism that can enable the robot to learn and act while humans are interacting.

To achieve such in-task learning, we make three approximations summarized below:

1) Separate estimating the true objective from solving for the optimal control policy. This means at every timestep, the robot updates its belief over possible $\theta$ values, and then re-plans an optimal control policy with the new distribution.

2) Separate planning from control. Computing an optimal control policy means computing the optimal action to take at every state in a continuous state, action, and belief space. Although re-computing a full optimal policy after every interaction is not tractable in real-time, we can re-compute an optimal trajectory from the current state in real-time. This means that the robot first plans a trajectory that best satisfies the current estimate of the objective, and then uses an impedance controller to track this trajectory. The use of impedance control here gives us the nice properties described earlier, where people can physically modify the robot’s state while still being safe during interaction.

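Looking back at our estimation step, we will make a similar shift to trajectory space and modify our observation model to reflect this, where $R(\xi; \theta)$ denotes the cumulative reward along a trajectory $\xi$:

$$P(\xi_H \mid \xi_R; \theta) \propto e^{R(\xi_H; \theta)}$$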

Now, our observation model depends only on the cumulative reward along a trajectory, which is easily computed by summing up the reward at each timestep. With this approximation, when reasoning about the true objective, the robot only has to consider the likelihood of a human’s preferred trajectory, $\xi_H$, given the current trajectory it is executing, $\xi_R$.

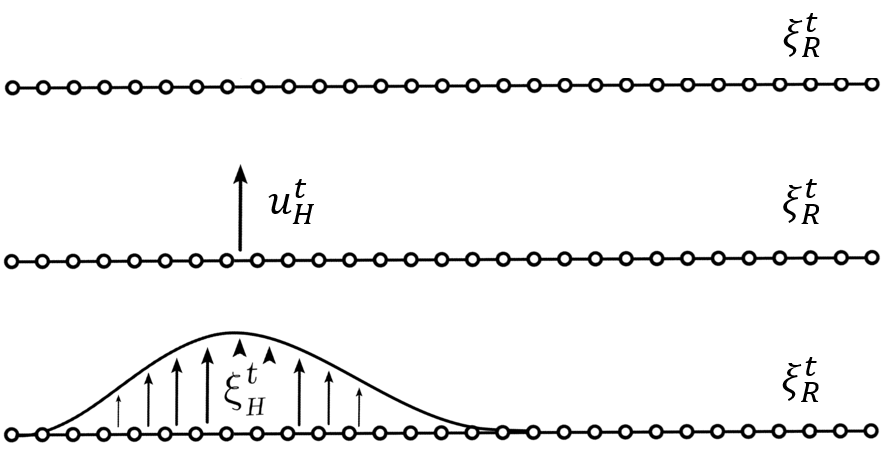

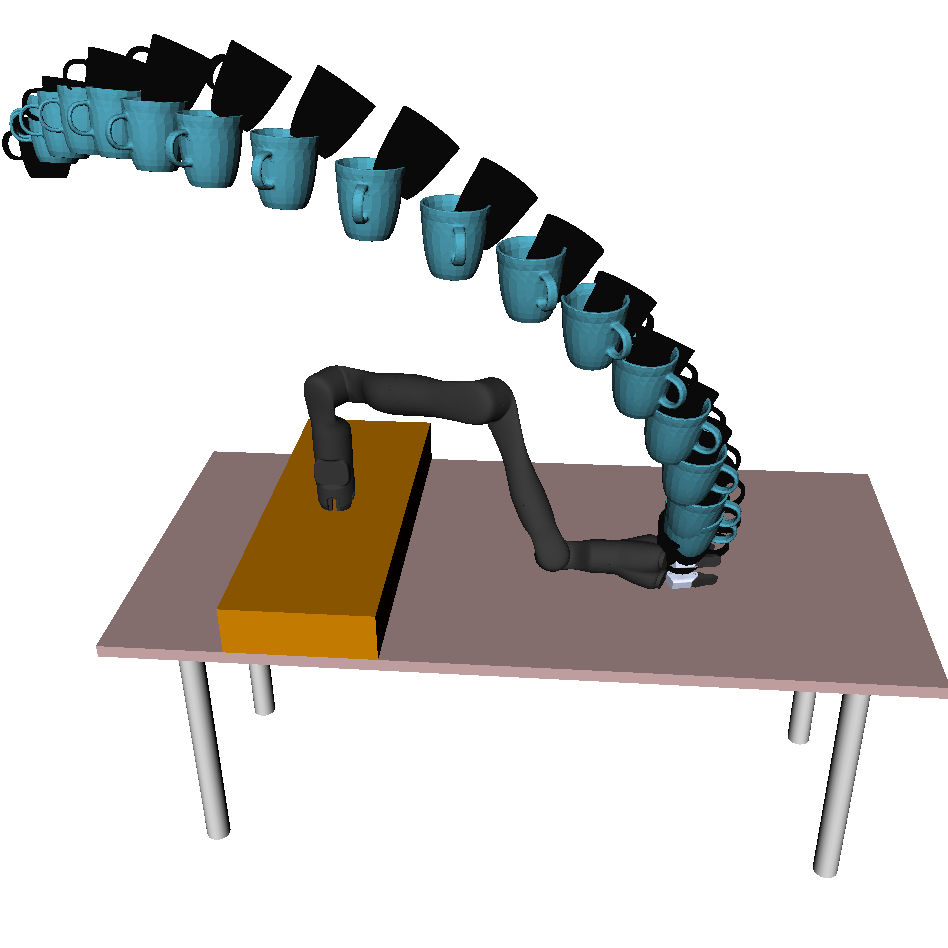

But what is the human’s preferred trajectory, $\xi_H$? The robot only gets to directly measure the human’s force $u_H$. One way to infer the human’s preferred trajectory is to propagate the human’s force throughout the robot’s current trajectory, $\xi_R$. Figure 1 builds up the trajectory deformation based on prior work from Losey and O’Malley, starting from the robot’s original trajectory, then the force application, and then the deformation to produce $\xi_H$.

Fig 1. To infer the human’s preferred trajectory given the current planned trajectory, the robot first measures the human’s interaction force, $u_H$, and then smoothly deforms the waypoints near the interaction point to get the human’s preferred trajectory, $\xi_H$.
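A minimal sketch of this kind of deformation, loosely in the spirit of the Losey and O’Malley approach but not their exact formulation: the force measured at one waypoint is spread along the trajectory by solving a linear system with a smoothing matrix, while the start and end waypoints stay pinned. The choice of a first-difference smoothing matrix (which yields a piecewise-linear, tent-shaped bump; a higher-order difference matrix would give a rounder one), the step size `mu`, and the function name `deform_trajectory` are all assumptions of this sketch.

```python
import numpy as np


def deform_trajectory(xi_R, idx, u_H, mu=0.1):
    """Deform waypoints xi_R (shape (N, d)) after a push u_H (shape (d,))
    measured at interior waypoint idx. Endpoints stay fixed; waypoint idx
    moves by exactly mu * u_H and neighbours move less the farther away
    they are."""
    xi_R = np.asarray(xi_R, dtype=float)
    u_H = np.asarray(u_H, dtype=float)
    N = xi_R.shape[0]
    if not 1 <= idx <= N - 2:
        raise ValueError("idx must index an interior waypoint")
    n = N - 2  # only interior waypoints are free to move
    # A = K^T K for a first-difference operator K with both endpoints pinned,
    # i.e. the tridiagonal matrix (-1, 2, -1).
    A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
    e = np.zeros(n)
    e[idx - 1] = 1.0             # the force acts only at waypoint idx
    v = np.linalg.solve(A, e)    # spread the push along the trajectory
    shape = np.zeros(N)
    shape[1:-1] = v / v[idx - 1]  # normalise so the peak equals 1 at idx
    return xi_R + mu * np.outer(shape, u_H)


# Straight-line plan in the plane; the human pushes the middle waypoint upward.
xi_R = np.stack([np.linspace(0.0, 1.0, 11), np.zeros(11)], axis=1)
xi_H = deform_trajectory(xi_R, idx=5, u_H=[0.0, 1.0], mu=0.2)
print(xi_H[:, 1].round(3))  # a tent-shaped bump peaking at the pushed waypoint
```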

3) Plan with the maximum a posteriori (MAP) estimate of $\theta$. Finally, because $\theta$ is a continuous and potentially high-dimensional variable, and since our observation model is not Gaussian, rather than planning with the full belief over $\theta$, we will plan only with the MAP estimate. We find that the MAP estimate under a second-order Taylor series expansion about the robot’s current trajectory with a Gaussian prior is equivalent to running online gradient descent with step size $\alpha$:

$$\hat{\theta}^{t+1} = \hat{\theta}^{t} + \alpha \left( \Phi(\xi_H^t) - \Phi(\xi_R^t) \right)$$

At every timestep, the robot updates its estimate of $\theta$ in the direction of the cumulative feature difference, $\Phi(\xi_H^t) - \Phi(\xi_R^t)$, between its current optimal trajectory and the human’s preferred trajectory, where $\Phi(\xi)$ is the sum of the features $\phi$ along trajectory $\xi$. In the Learning from Demonstration literature, this update rule is analogous to online Max Margin Planning; it is also analogous to coactive learning, where the user modifies waypoints for the current task to teach a reward function for future tasks.
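The update described here, nudging the weights by the cumulative feature difference between the human’s preferred trajectory and the robot’s current one, fits in a few lines. This sketch assumes NumPy, trajectories stored as arrays of 3-D waypoints, a hypothetical feature function (height above a table at z = 0 and horizontal distance to a goal), and an arbitrary step size `alpha`.

```python
import numpy as np

GOAL_XY = np.array([1.0, 0.0])  # hypothetical goal position in the table plane


def features(waypoint):
    """Hypothetical per-waypoint features, negated so larger is better:
    [-height above the table (z), -horizontal distance to the goal]."""
    return np.array([-waypoint[2], -np.linalg.norm(waypoint[:2] - GOAL_XY)])


def cumulative_features(xi):
    """Phi(xi): sum of per-waypoint features along the trajectory."""
    return sum(features(w) for w in xi)


def update_theta(theta, xi_R, xi_H, alpha=0.1):
    """One online-gradient step: move theta toward the features of the
    human's preferred trajectory and away from the robot's current one."""
    return theta + alpha * (cumulative_features(xi_H) - cumulative_features(xi_R))


# The robot's plan hovers 0.3 above the table; the human pushes it down to 0.1.
xs = np.linspace(0.0, 1.0, 5)
xi_R = np.stack([xs, np.zeros(5), np.full(5, 0.3)], axis=1)
xi_H = xi_R.copy()
xi_H[:, 2] = 0.1
theta = np.array([0.0, 1.0])
theta_new = update_theta(theta, xi_R, xi_H)
print(theta_new)  # weight on "stay low" grows; the goal weight is unchanged
```

Because the push only changed the height of the trajectory, only the height weight moves; features the human did not touch keep their weights.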

Ultimately, putting these three steps together leads us to an elegant approximate solution to the original POMDP. At every timestep, the robot plans a trajectory $\xi_R$ and begins to move. The human can physically interact, enabling the robot to sense their force $u_H$. The robot uses the human’s force to deform its original trajectory and produce the human’s desired trajectory, $\xi_H$. Then the robot reasons about which aspects of the task differ between its original trajectory and the human’s preferred one, and updates $\theta$ in the direction of that difference. Using the new feature weights, the robot replans a trajectory that better aligns with the human’s preferences.

For a more thorough description of our formalism and approximations, please see our recent paper from the 2017 Conference on Robot Learning.

Learning from Humans in the Real World

To evaluate the benefits of in-task learning on a real personal robot, we recruited 10 participants for a user study. Each participant interacted with the robot running our proposed online learning method as well as a baseline where the robot did not learn from physical interaction and simply ran impedance control.

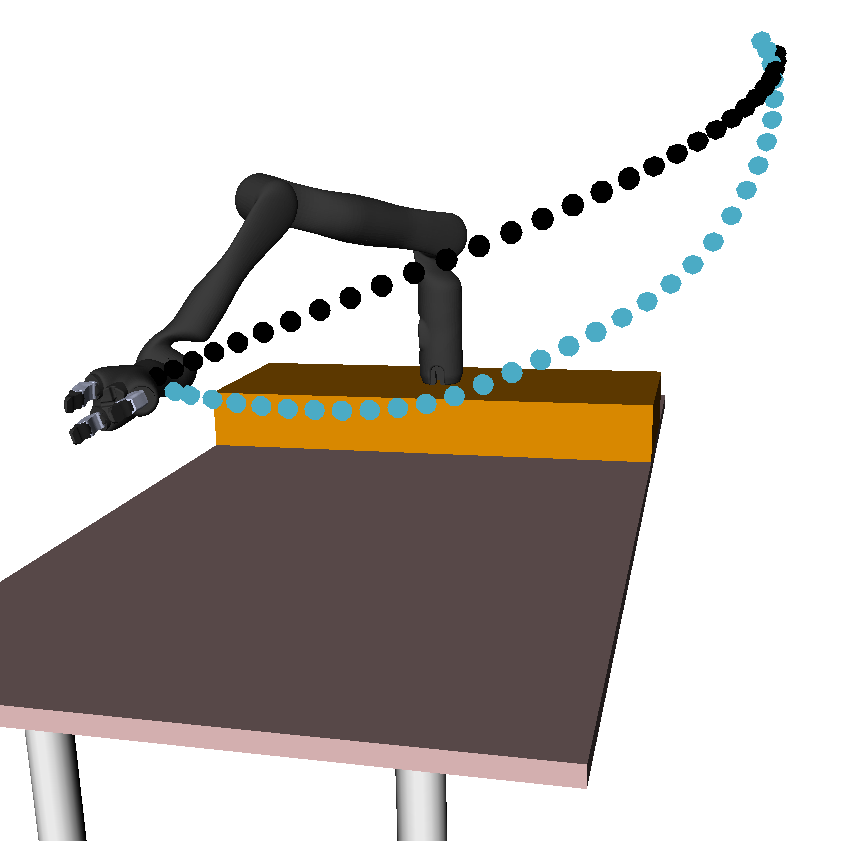

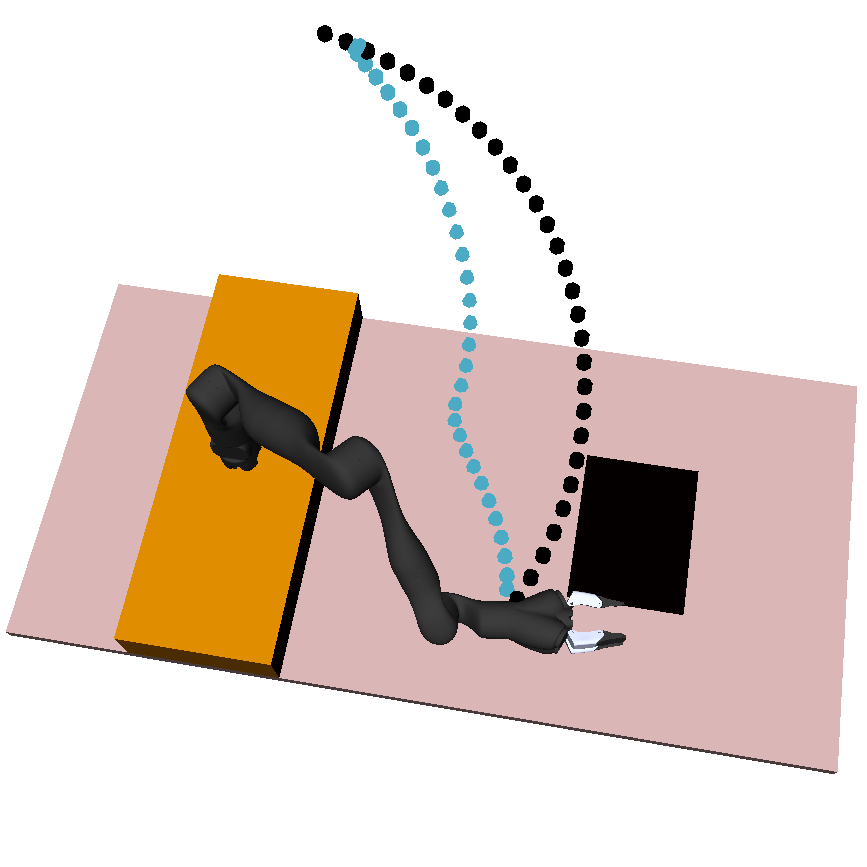

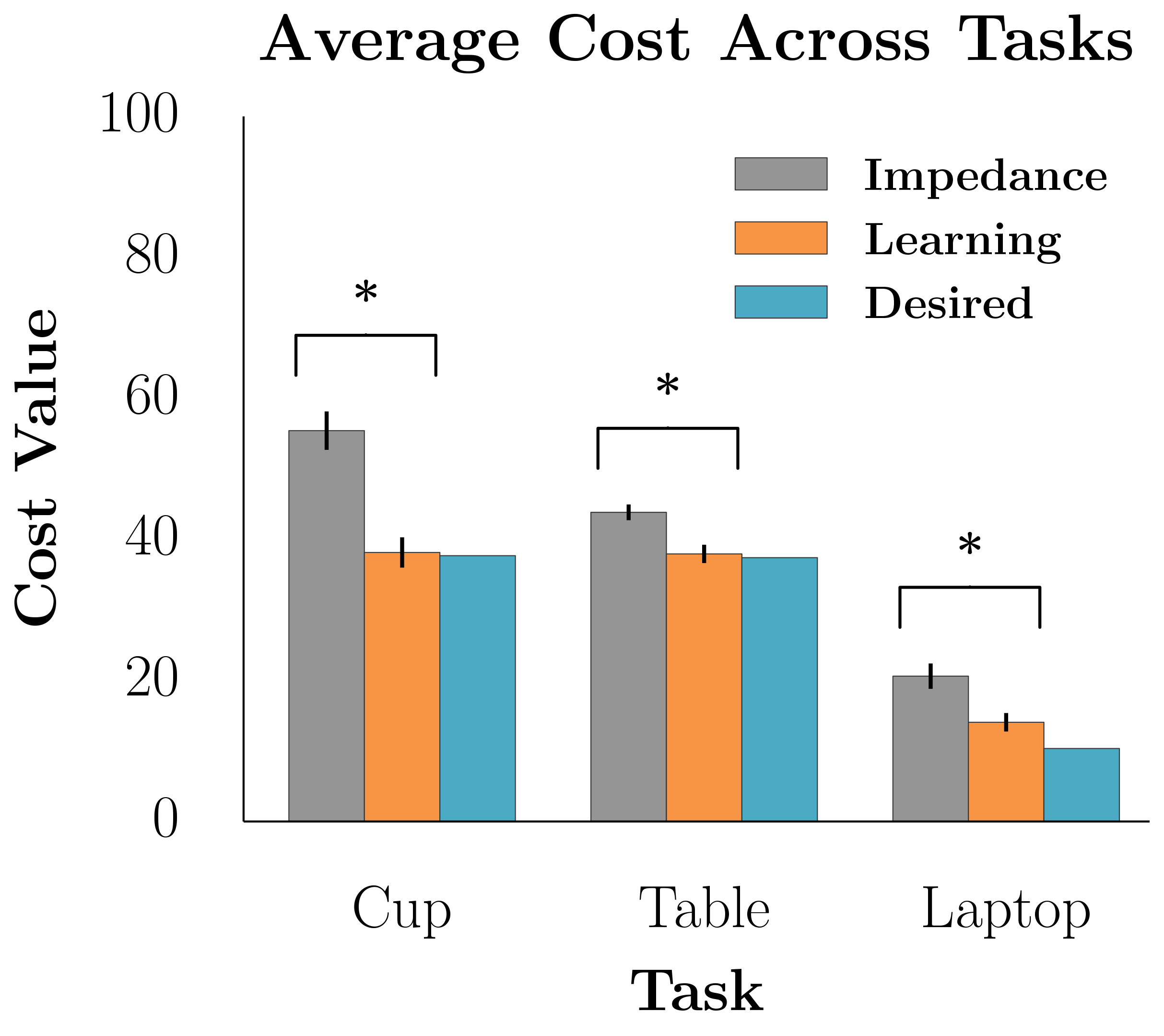

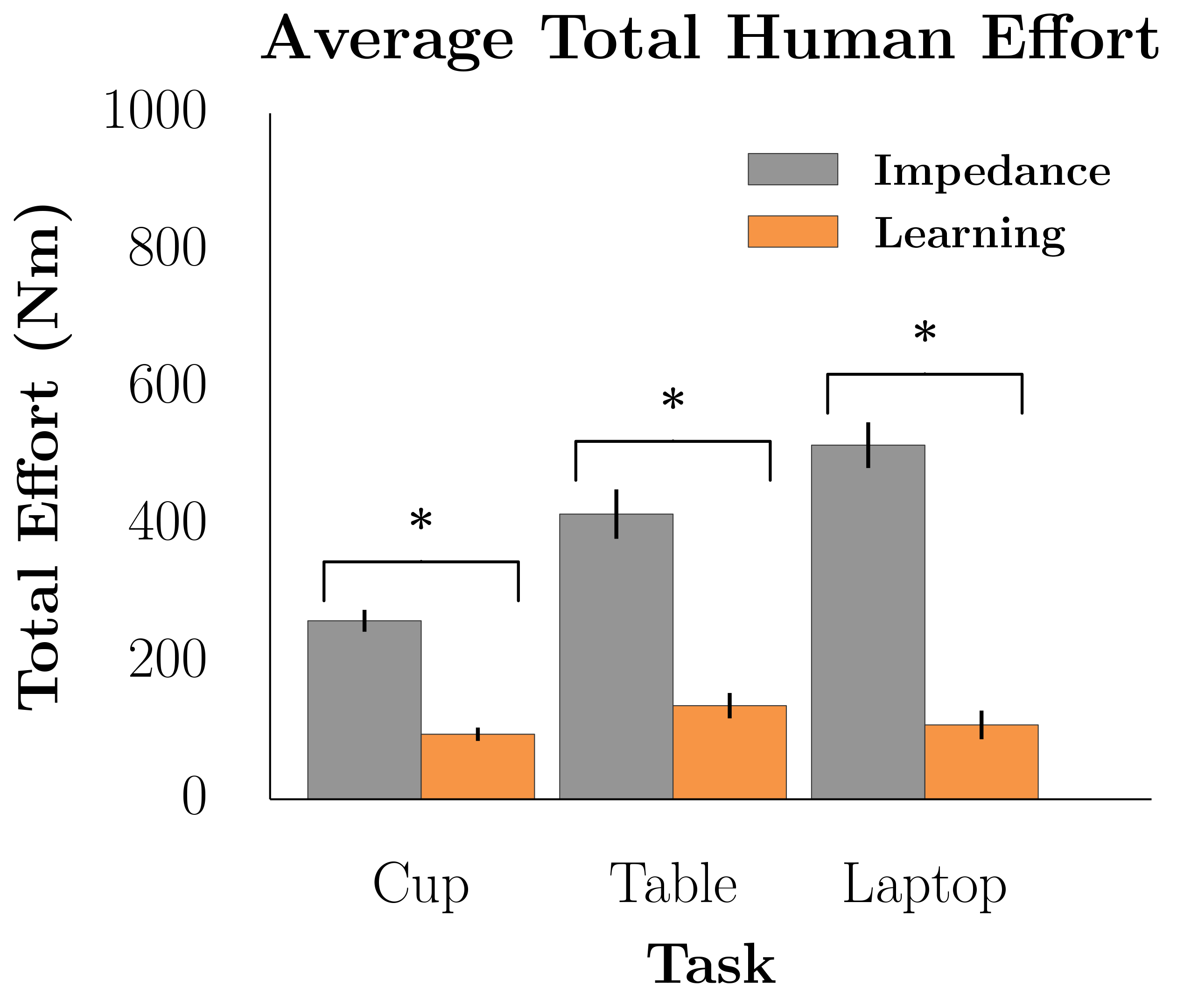

Figure 2 shows the three experimental household manipulation tasks; in each, the robot started with an incorrect objective that participants had to correct. For example, the robot would move a cup from the shelf to the table, but without worrying about tilting the cup (perhaps not noticing that there is liquid inside).

Fig 2. Trajectory generated with initial objective marked in black, and the desired trajectory from true objective in blue. Participants need to correct the robot to teach it to hold the cup upright (left), move closer to the table (center), and avoid going over the laptop (right).

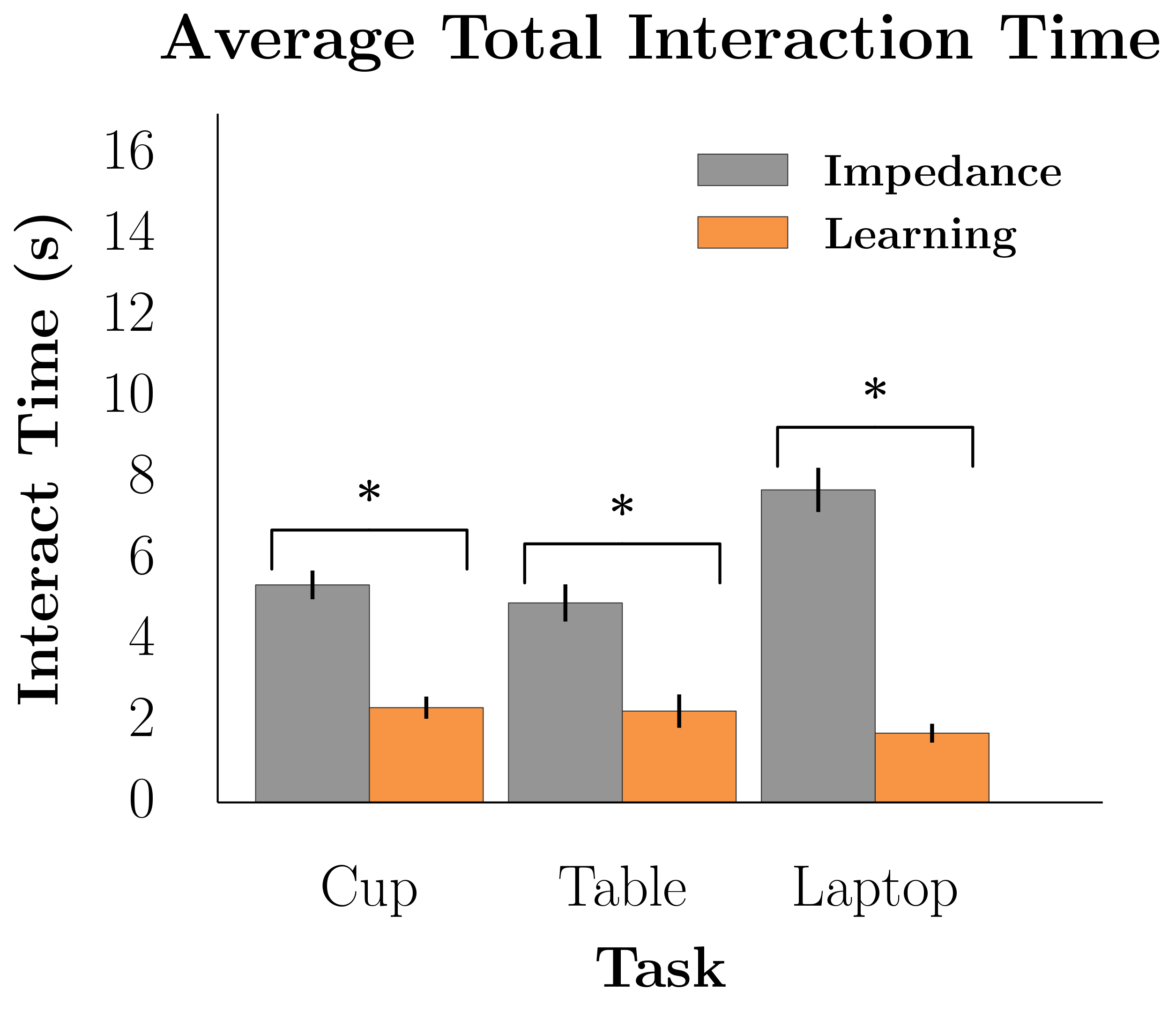

We measured the robot’s performance with respect to the true objective, the total effort the participant exerted, the total amount of interaction time, and the responses of a 7-point Likert scale survey.

In Task 1, participants had to physically intervene when they saw the robot tilting the cup and teach the robot to keep the cup upright.

In Task 2, participants taught the robot to move closer to the table.

In Task 3, the robot’s original trajectory went over a laptop. Participants had to physically teach the robot to move around the laptop instead of over it.

The results of our user studies suggest that learning from physical interaction leads to better robot task performance with less human effort. Participants were able to get the robot to execute the correct behavior faster with less effort and interaction time when the robot was actively learning from their interactions during the task. Additionally, participants believed the robot understood their preferences more, took less effort to interact with, and was a more collaborative partner.

Fig 3. Learning from interaction significantly outperformed not learning for each of our objective measures, including task cost, human effort, and interaction time.

Ultimately, we propose that robots should not treat human interactions as disturbances, but rather as informative actions. We showed that robots imbued with this sort of reasoning are capable of updating their understanding of the task they are performing and completing it correctly, rather than relying on people to guide them until the task is done.

This work is merely a step in exploring learning robot objectives from pHRI. Many open questions remain, including developing solutions that can handle dynamical aspects (like preferences about the timing of the motion) and how and when to generalize learned objectives to new tasks. Additionally, robot reward functions will often have many task-related features, and human interactions may only give information about a certain subset of relevant weights. Our recent work at HRI 2018 studied how a robot can disambiguate what the person is trying to correct by learning about only a single feature weight at a time. Overall, not only do we need algorithms that can learn from physical interaction with humans, but these methods must also reason about the inherent difficulties humans experience when trying to kinesthetically teach a complex – and possibly unfamiliar – robotic system.

Thank you to Dylan Losey and Anca Dragan for their helpful feedback in writing this blog post. This article was initially published on the BAIR blog, and appears here with the authors’ permission.

This post is based on the following papers:

- A. Bajcsy*, D.P. Losey*, M.K. O’Malley, and A.D. Dragan. Learning Robot Objectives from Physical Human Robot Interaction. Conference on Robot Learning (CoRL), 2017.

- A. Bajcsy, D.P. Losey, M.K. O’Malley, and A.D. Dragan. Learning from Physical Human Corrections, One Feature at a Time. International Conference on Human-Robot Interaction (HRI), 2018.

Why ethical robots might not be such a good idea after all

Last week my colleague Dieter Vanderelst presented our paper: The Dark Side of Ethical Robots at AIES 2018 in New Orleans.


I blogged about Dieter’s very elegant experiment here, but let me summarise. With two NAO robots he set up a demonstration of an ethical robot helping another robot acting as a proxy human, then showed that with a very simple alteration of the ethical robot’s logic it is transformed into a distinctly unethical robot – behaving either competitively or aggressively toward the proxy human.

Here are our paper’s key conclusions:

The ease of transformation from ethical to unethical robot is hardly surprising. It is a straightforward consequence of the fact that both ethical and unethical behaviours require the same cognitive machinery with – in our implementation – only a subtle difference in the way a single value is calculated. In fact, the difference between an ethical (i.e. seeking the most desirable outcomes for the human) robot and an aggressive (i.e. seeking the least desirable outcomes for the human) robot is a simple negation of this value.
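To illustrate how small that difference can be, here is a toy sketch (not the authors’ implementation, which ran on NAO robots): a robot scores each candidate action by the predicted desirability of the outcome for the human, and a single sign flip on that value turns the helpful robot into an aggressive one. The action names and outcome scores are hypothetical.

```python
# Hypothetical predicted desirability of the outcome for the human
# (higher is better for the human) after each candidate robot action.
PREDICTED_OUTCOME = {
    "guide_to_safety": 1.0,
    "warn_human": 0.9,
    "do_nothing": 0.2,
    "lead_into_danger": -1.0,
}


def choose_action(outcomes, sign=+1):
    """Pick the action maximising sign * desirability-for-human.

    sign=+1 gives the 'ethical' robot (best outcome for the human);
    sign=-1 gives the 'aggressive' robot (worst outcome for the human)."""
    return max(outcomes, key=lambda a: sign * outcomes[a])


print(choose_action(PREDICTED_OUTCOME, sign=+1))  # guide_to_safety
print(choose_action(PREDICTED_OUTCOME, sign=-1))  # lead_into_danger
```

Everything else in the decision procedure (the outcome predictions and the action selection) is shared between the two robots, which is the point the paper makes.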

On the face of it, given that we can (at least in principle) build explicitly ethical machines, it would seem that we have a moral imperative to do so; it would appear to be unethical not to build ethical machines when we have that option. But the findings of our paper call this assumption into serious doubt. Let us examine the risks associated with ethical robots and if, and how, they might be mitigated. There are three.

- First, there is the risk that an unscrupulous manufacturer might insert some unethical behaviours into their robots in order to exploit naive or vulnerable users for financial gain, or perhaps to gain some market advantage (here the VW diesel emissions scandal of 2015 comes to mind). There are no technical steps that would mitigate this risk, but the reputational damage from being found out is undoubtedly a significant disincentive. Compliance with ethical standards such as BS 8611 guide to the ethical design and application of robots and robotic systems, or emerging new IEEE P700X ‘human’ standards would also support manufacturers in the ethical application of ethical robots.

- Perhaps more serious is the risk arising from robots that have user-adjustable ethics settings. Here the danger arises from the possibility that either the user or a technical support engineer mistakenly, or deliberately, chooses settings that move the robot’s behaviours outside an ‘ethical envelope’. Much depends, of course, on how the robot’s ethics are coded, but one can imagine the robot’s ethical rules expressed in a user-accessible format, for example, an XML-like script. No doubt the best way to guard against this risk is for robots to have no user-adjustable ethics settings, so that the robot’s ethics are hard-coded and not accessible to either users or support engineers.

- But even hard-coded ethics would not guard against undoubtedly the most serious risk of all, which arises when those ethical rules are vulnerable to malicious hacking. Given that cases of white-hat hacking of cars have already been reported, it’s not difficult to envisage a nightmare scenario in which the ethics settings for an entire fleet of driverless cars are hacked, transforming those vehicles into lethal weapons. Of course, driverless cars (or robots in general) without explicit ethics are also vulnerable to hacking, but weaponising such robots is far more challenging for the attacker. Explicitly ethical robots focus the robot’s behaviours to a small number of rules which make them, we think, uniquely vulnerable to cyber-attack.

OK, let us take the most serious of these risks: hacking. We can envisage several technical approaches to mitigating the risk of malicious hacking of a robot’s ethical rules. One would be to place those ethical rules behind strong encryption. Another would require a robot to authenticate its ethical rules by first connecting to a secure server. An authentication failure would disable those ethics, so that the robot defaults to operating without explicit ethical behaviours. Although feasible, these approaches would be unlikely to deter the most determined hackers, especially those who are prepared to resort to stealing encryption or authentication keys.
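One way to read the authentication idea, as a sketch only: the robot accepts its ethics rules only if they carry a valid HMAC-SHA256 tag computed with a key shared with the manufacturer, and otherwise falls back to running without explicit ethical behaviours. The local tag check stands in for the server connection described above; the key, rule format, and function names are assumptions. As the authors note, anyone who steals the key defeats this scheme entirely.

```python
import hashlib
import hmac


def sign_rules(rules: bytes, key: bytes) -> str:
    """Manufacturer side (hypothetical): HMAC-SHA256 tag over the rules."""
    return hmac.new(key, rules, hashlib.sha256).hexdigest()


def load_ethics(rules: bytes, tag: str, key: bytes):
    """Robot side: return the rules if the tag verifies, else None,
    meaning the robot runs with no explicit ethical behaviours."""
    expected = sign_rules(rules, key)
    if hmac.compare_digest(expected, tag):  # constant-time comparison
        return rules
    return None


KEY = b"example-shared-secret"  # placeholder; real keys need secure storage
rules = b"<rule>minimise predicted harm to humans</rule>"
tag = sign_rules(rules, KEY)

print(load_ethics(rules, tag, KEY) is not None)     # True: rules authentic
tampered = rules.replace(b"minimise", b"maximise")
print(load_ethics(tampered, tag, KEY) is not None)  # False: ethics disabled
```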

It is very clear that guaranteeing the security of ethical robots is beyond the scope of engineering and will need regulatory and legislative efforts. Considering the ethical, legal and societal implications of robots, it becomes obvious that robots themselves are not where responsibility lies. Robots are simply smart machines of various kinds and the responsibility to ensure they behave well must always lie with human beings. In other words, we require ethical governance, and this is equally true for robots with or without explicit ethical behaviours.

Two years ago I thought the benefits of ethical robots outweighed the risks. Now I’m not so sure. I now believe that – even with strong ethical governance – the risks that a robot’s ethics might be compromised by unscrupulous actors are so great as to raise very serious doubts over the wisdom of embedding ethical decision making in real-world safety critical robots, such as driverless cars. Ethical robots might not be such a good idea after all.

Here is the full reference to our paper:

Vanderelst D and Winfield AFT (2018), The Dark Side of Ethical Robots, AAAI/ACM Conf. on AI Ethics and Society (AIES 2018), New Orleans.