ANYmal robot tested on offshore platform

A crucial task for energy providers is the reliable and safe operation of their plants, especially when producing energy offshore. Autonomous mobile robots can offer comprehensive support through regular, automated inspection of machinery and infrastructure. In a world-first pilot installation, transmission system operator TenneT tested the autonomous legged robot ANYmal on one of the world’s largest offshore converter platforms in the North Sea.

In September 2018, the ANYbotics field team boarded a helicopter to fly out to one of the world’s largest offshore converter platforms in the North Sea. Equipped with a customized sensor head, our four-legged robotic platform ANYmal autonomously performed various inspection tasks on the platform in a one-week pilot installation, making it the world’s first autonomous offshore robot.

Offshore Wind Farms

Offshore energy production is a key component of global energy supply. Apart from oil and natural gas extraction, wind energy is increasingly being produced offshore. One of the key innovators in this field is the Dutch-German transmission system operator TenneT, which connects large-scale offshore wind farms to the onshore grid over a high-voltage DC connection. Aiming to provide reliable and low-cost energy transmission and distribution, the company adopts the most recent technologies.

Robotic Inspection with ANYmal

ANYbotics is partnering with TenneT to evaluate robotic inspection and maintenance on their offshore converter platforms. In periods of unmanned platform operation, a mobile robot helps to reduce the risk of disruptions and ensures the security of the electricity supply. Based on its autonomous navigation capabilities, ANYmal performs routine inspection tasks to monitor machine operations, read out sensory equipment and detect thermal hotspots and oil or water leakages. Whenever required, ANYmal can be remotely operated from an onshore control center in order for TenneT to receive real-time information through the robot’s onboard visual and thermal cameras, microphones and gas detection sensors.

Successful Pilot Installation

Before being deployed on the offshore mission, the ANYbotics field team underwent rigorous safety training, including helicopter-escape and survival-at-sea scenarios. After being taken on a guided tour of the platform to 3D-map the environment and learn the position and characteristics of all inspection points, ANYmal autonomously navigated the platform and carried out its inspection protocols. The video documents a fully autonomous mission, covering a total of 16 inspection points such as gauges, levers, oil and water levels, and various other visual and thermal measurements.

Fleets of drones could aid searches for lost hikers

MIT researchers describe an autonomous system for a fleet of drones to collaboratively search under dense forest canopies using only onboard computation and wireless communication — no GPS required.

Images: Melanie Gonick

By Rob Matheson

Finding lost hikers in forests can be a difficult and lengthy process, as helicopters and drones can’t get a glimpse through the thick tree canopy. Recently, it’s been proposed that autonomous drones, which can bob and weave through trees, could aid these searches. But the GPS signals used to guide the aircraft can be unreliable or nonexistent in forest environments.

In a paper being presented at the International Symposium on Experimental Robotics conference next week, MIT researchers describe an autonomous system for a fleet of drones to collaboratively search under dense forest canopies. The drones use only onboard computation and wireless communication — no GPS required.

Each autonomous quadrotor drone is equipped with laser-range finders for position estimation, localization, and path planning. As the drone flies around, it creates an individual 3-D map of the terrain. Algorithms help it recognize unexplored and already-searched spots, so it knows when it’s fully mapped an area. An off-board ground station fuses individual maps from multiple drones into a global 3-D map that can be monitored by human rescuers.

In a real-world implementation, though not in the current system, the drones would come equipped with object detection to identify a missing hiker. When located, the drone would tag the hiker’s location on the global map. Humans could then use this information to plan a rescue mission.

“Essentially, we’re replacing humans with a fleet of drones to make the search part of the search-and-rescue process more efficient,” says first author Yulun Tian, a graduate student in the Department of Aeronautics and Astronautics (AeroAstro).

The researchers tested multiple drones in simulations of randomly generated forests, and tested two drones in a forested area within NASA’s Langley Research Center. In both experiments, each drone mapped a roughly 20-square-meter area in about two to five minutes and collaboratively fused their maps together in real-time. The drones also performed well across several metrics, including overall speed and time to complete the mission, detection of forest features, and accurate merging of maps.

Co-authors on the paper are: Katherine Liu, a PhD student in MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and AeroAstro; Kyel Ok, a PhD student in CSAIL and the Department of Electrical Engineering and Computer Science; Loc Tran and Danette Allen of the NASA Langley Research Center; Nicholas Roy, an AeroAstro professor and CSAIL researcher; and Jonathan P. How, the Richard Cockburn Maclaurin Professor of Aeronautics and Astronautics.

Exploring and mapping

On each drone, the researchers mounted a LIDAR system, which creates a 2-D scan of the surrounding obstacles by shooting laser beams and measuring the reflected pulses. This can be used to detect trees; however, to drones, individual trees appear remarkably similar. If a drone can’t recognize a given tree, it can’t determine if it’s already explored an area.

The researchers programmed their drones to instead identify multiple trees’ orientations, which is far more distinctive. With this method, when the LIDAR signal returns a cluster of trees, an algorithm calculates the angles and distances between trees to identify that cluster. “Drones can use that as a unique signature to tell if they’ve visited this area before or if it’s a new area,” Tian says.
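The idea of a cluster signature can be illustrated with a minimal sketch. It is not the researchers’ algorithm: the function name `cluster_signature`, the rounding tolerance, and the sample coordinates are assumptions made for illustration, and only pairwise distances are used (the article mentions angles as well). The point it shows is that distances between trees do not change when the same cluster is observed from a different position or heading.

```python
import math
from itertools import combinations


def cluster_signature(trees, precision=1):
    """Return the sorted pairwise distances between tree positions.

    Pairwise distances are unchanged by rotating or translating the whole
    cluster, so the same cluster seen from another pose gives the same
    signature. Rounding is a crude stand-in for tolerating sensor noise.
    """
    dists = [math.dist(a, b) for a, b in combinations(trees, 2)]
    return tuple(sorted(round(d, precision) for d in dists))


def rotate_translate(points, angle, dx, dy):
    """Apply a 2-D rigid motion (rotation by `angle`, then a shift)."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y + dx, s * x + c * y + dy) for x, y in points]


# Hypothetical tree positions (meters) in one drone's local frame.
cluster = [(0.0, 0.0), (3.0, 0.0), (0.0, 4.0), (5.0, 5.0)]
# The same cluster as seen by a drone with a different pose.
seen_again = rotate_translate(cluster, math.radians(40), 12.0, -7.0)
# A different cluster elsewhere in the forest.
other = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (6.0, 1.0)]

same_place = cluster_signature(cluster) == cluster_signature(seen_again)
different_place = cluster_signature(cluster) != cluster_signature(other)
```

A real system would need a noise-tolerant comparison rather than exact equality on rounded values; the sketch only demonstrates why relative geometry is more distinctive than any single tree.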

This feature-detection technique helps the ground station accurately merge maps. The drones generally explore an area in loops, producing scans as they go. The ground station continuously monitors the scans. When two drones loop around to the same cluster of trees, the ground station merges the maps by calculating the relative transformation between the drones, and then fusing the individual maps to maintain consistent orientations.

“Calculating that relative transformation tells you how you should align the two maps so it corresponds to exactly how the forest looks,” Tian says.
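As a rough illustration of what computing a relative transformation involves, the sketch below estimates the 2-D rotation and translation that align matched tree positions from two maps, using a standard closed-form least-squares fit. The article does not say which method the researchers use; the function name `estimate_rigid_transform` and the sample coordinates are assumptions, and the tree-to-tree matches are taken as already known.

```python
import math


def estimate_rigid_transform(src, dst):
    """Least-squares 2-D rotation and translation mapping src onto dst.

    src and dst are equal-length lists of matched (x, y) points.
    Returns (theta, tx, ty) such that dst ≈ R(theta) * src + (tx, ty).
    """
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n

    cos_sum = 0.0
    sin_sum = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy  # source point relative to its centroid
        bx, by = qx - dx, qy - dy  # target point relative to its centroid
        cos_sum += ax * bx + ay * by
        sin_sum += ax * by - ay * bx

    theta = math.atan2(sin_sum, cos_sum)
    c, s = math.cos(theta), math.sin(theta)
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty


# Hypothetical matched trees: the same three trees in drone A's map and,
# rotated by 30 degrees and shifted by (10, -3), in drone B's map.
trees_in_map_a = [(1.0, 2.0), (4.0, 0.0), (3.0, 5.0)]
c30, s30 = math.cos(math.radians(30)), math.sin(math.radians(30))
trees_in_map_b = [
    (c30 * x - s30 * y + 10.0, s30 * x + c30 * y - 3.0)
    for x, y in trees_in_map_a
]

theta, tx, ty = estimate_rigid_transform(trees_in_map_a, trees_in_map_b)
```

Applying the recovered rotation and shift to every point of map A expresses it in map B’s frame, which is the alignment step that map merging depends on.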

In the ground station, robotic navigation software called “simultaneous localization and mapping” (SLAM) — which both maps an unknown area and keeps track of an agent inside the area — uses the LIDAR input to localize and capture the position of the drones. This helps it fuse the maps accurately.

The end result is a map with 3-D terrain features. Trees appear as blocks of colored shades of blue to green, depending on height. Unexplored areas are dark but turn gray as they’re mapped by a drone. On-board path-planning software tells a drone to always explore these dark unexplored areas as it flies around. Producing a 3-D map is more reliable than simply attaching a camera to a drone and monitoring the video feed, Tian says. Transmitting video to a central station, for instance, requires a lot of bandwidth that may not be available in forested areas.

More efficient searching

A key innovation is a novel search strategy that lets the drones explore an area more efficiently. In a more traditional approach, a drone would always search the closest possible unknown area. However, that area could lie in any direction from the drone’s current position. The drone usually flies a short distance, and then stops to select a new direction.

“That doesn’t respect dynamics of drone [movement],” Tian says. “It has to stop and turn, so that means it’s very inefficient in terms of time and energy, and you can’t really pick up speed.”

Instead, the researchers’ drones explore the closest possible area while considering their speed and direction and maintaining a consistent velocity. This strategy — where the drone tends to travel in a spiral pattern — covers a search area much faster. “In search and rescue missions, time is very important,” Tian says.
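The difference between the two strategies can be sketched with a toy cost function. This is an illustrative assumption, not the cost used in the paper: `heading_aware_frontier`, the `turn_weight` parameter, and the sample coordinates are all hypothetical. The baseline picks the nearest unexplored spot, while the alternative also penalizes how far the drone would have to turn away from its current heading.

```python
import math


def nearest_frontier(position, frontiers):
    """Baseline: pick the closest unexplored spot, ignoring heading."""
    return min(frontiers, key=lambda f: math.dist(position, f))


def heading_aware_frontier(position, heading, frontiers, turn_weight=2.0):
    """Pick the spot with the lowest distance-plus-turning cost.

    `heading` is the drone's current direction of travel in radians.
    `turn_weight` (an assumed tuning value) converts radians of turning
    into an equivalent distance penalty.
    """
    def cost(f):
        bearing = math.atan2(f[1] - position[1], f[0] - position[0])
        diff = bearing - heading
        # Wrap the angle difference into [-pi, pi] before taking its size.
        turn = abs(math.atan2(math.sin(diff), math.cos(diff)))
        return math.dist(position, f) + turn_weight * turn

    return min(frontiers, key=cost)


position = (0.0, 0.0)
heading = 0.0  # flying along the +x axis
# One unexplored spot directly behind the drone, one slightly ahead.
frontiers = [(-1.0, 0.0), (1.5, 0.5)]

baseline_choice = nearest_frontier(position, frontiers)
aware_choice = heading_aware_frontier(position, heading, frontiers)
```

Here the baseline selects the spot behind the drone, forcing a stop and a half turn, while the heading-aware choice keeps the drone moving forward toward a slightly more distant spot.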

In the paper, the researchers compared their new search strategy with a traditional method. Compared to that baseline, the researchers’ strategy helped the drones cover significantly more area, several minutes faster and with higher average speeds.

One limitation for practical use is that the drones still must communicate with an off-board ground station for map merging. In their outdoor experiment, the researchers had to set up a wireless router that connected each drone and the ground station. In the future, they hope to design the drones to communicate wirelessly when approaching one another, fuse their maps, and then cut communication when they separate. The ground station, in that case, would only be used to monitor the updated global map.

Machines that learn language more like kids do

Photo: MIT News

By Rob Matheson

Children learn language by observing their environment, listening to the people around them, and connecting the dots between what they see and hear. Among other things, this helps children establish their language’s word order, such as where subjects and verbs fall in a sentence.

In computing, learning language is the task of syntactic and semantic parsers. These systems are trained on sentences annotated by humans that describe the structure and meaning behind words. Parsers are becoming increasingly important for web searches, natural-language database querying, and voice-recognition systems such as Alexa and Siri. Soon, they may also be used for home robotics.

But gathering the annotation data can be time-consuming and difficult for less common languages. Additionally, humans don’t always agree on the annotations, and the annotations themselves may not accurately reflect how people naturally speak.

In a paper being presented at this week’s Empirical Methods in Natural Language Processing conference, MIT researchers describe a parser that learns through observation to more closely mimic a child’s language-acquisition process, which could greatly extend the parser’s capabilities. To learn the structure of language, the parser observes captioned videos, with no other information, and associates the words with recorded objects and actions. Given a new sentence, the parser can then use what it’s learned about the structure of the language to accurately predict a sentence’s meaning, without the video.

This “weakly supervised” approach — meaning it requires limited training data — mimics how children can observe the world around them and learn language, without anyone providing direct context. The approach could expand the types of data and reduce the effort needed for training parsers, according to the researchers. A few directly annotated sentences, for instance, could be combined with many captioned videos, which are easier to come by, to improve performance.

In the future, the parser could be used to improve natural interaction between humans and personal robots. A robot equipped with the parser, for instance, could constantly observe its environment to reinforce its understanding of spoken commands, including when the spoken sentences aren’t fully grammatical or clear. “People talk to each other in partial sentences, run-on thoughts, and jumbled language. You want a robot in your home that will adapt to their particular way of speaking … and still figure out what they mean,” says co-author Andrei Barbu, a researcher in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Center for Brains, Minds, and Machines (CBMM) within MIT’s McGovern Institute.

The parser could also help researchers better understand how young children learn language. “A child has access to redundant, complementary information from different modalities, including hearing parents and siblings talk about the world, as well as tactile information and visual information, [which help him or her] to understand the world,” says co-author Boris Katz, a principal research scientist and head of the InfoLab Group at CSAIL. “It’s an amazing puzzle, to process all this simultaneous sensory input. This work is part of bigger piece to understand how this kind of learning happens in the world.”

Co-authors on the paper are: first author Candace Ross, a graduate student in the Department of Electrical Engineering and Computer Science and CSAIL, and a researcher in CBMM; Yevgeni Berzak PhD ’17, a postdoc in the Computational Psycholinguistics Group in the Department of Brain and Cognitive Sciences; and CSAIL graduate student Battushig Myanganbayar.

Visual learner

For their work, the researchers combined a semantic parser with a computer-vision component trained in object, human, and activity recognition in video. Semantic parsers are generally trained on sentences annotated with code that ascribes meaning to each word and the relationships between the words. Some have been trained on still images or computer simulations.

The new parser is the first to be trained using video, Ross says. In part, videos are more useful in reducing ambiguity. If the parser is unsure about, say, an action or object in a sentence, it can reference the video to clear things up. “There are temporal components — objects interacting with each other and with people — and high-level properties you wouldn’t see in a still image or just in language,” Ross says.

The researchers compiled a dataset of about 400 videos depicting people carrying out a number of actions, including picking up an object or putting it down, and walking toward an object. Participants on the crowdsourcing platform Mechanical Turk then provided 1,200 captions for those videos. They set aside 840 video-caption examples for training and tuning, and used 360 for testing. One advantage of using vision-based parsing is “you don’t need nearly as much data — although if you had [the data], you could scale up to huge datasets,” Barbu says.

In training, the researchers gave the parser the objective of determining whether a sentence accurately describes a given video. They fed the parser a video and matching caption. The parser extracts possible meanings of the caption as logical mathematical expressions. The sentence, “The woman is picking up an apple,” for instance, may be expressed as: λxy. woman x, pick_up x y, apple y.

Those expressions and the video are fed into a computer-vision algorithm called “Sentence Tracker,” developed by Barbu and other researchers. The algorithm looks at each video frame to track how objects and people transform over time, to determine if actions are playing out as described. In this way, it determines if the meaning is possibly true of the video.
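To make the idea of checking a logical form against a video concrete, here is a toy sketch. It is not the Sentence Tracker: the hand-written `video_facts` set stands in for what a vision system might detect, and the names `LOGICAL_FORM`, `satisfying_bindings`, and the `track1`-style labels are assumptions made for illustration. The sketch asks whether the variables in λxy. woman x, pick_up x y, apple y can be bound to detected tracks so that every predicate holds.

```python
from itertools import permutations

# Logical form for "The woman is picking up an apple":
#   λxy. woman x, pick_up x y, apple y
LOGICAL_FORM = [
    ("woman", ("x",)),
    ("pick_up", ("x", "y")),
    ("apple", ("y",)),
]


def satisfying_bindings(logical_form, facts, entities):
    """Return every variable-to-entity assignment that makes all predicates true.

    Distinct variables are assumed to refer to distinct entities.
    """
    variables = sorted({v for _, args in logical_form for v in args})
    results = []
    for combo in permutations(entities, len(variables)):
        binding = dict(zip(variables, combo))
        if all(
            (pred, tuple(binding[v] for v in args)) in facts
            for pred, args in logical_form
        ):
            results.append(binding)
    return results


# Hypothetical detections for one video, written as (predicate, arguments).
video_facts = {
    ("woman", ("track1",)),
    ("apple", ("track2",)),
    ("table", ("track3",)),
    ("pick_up", ("track1", "track2")),
}
entities = ["track1", "track2", "track3"]

bindings = satisfying_bindings(LOGICAL_FORM, video_facts, entities)
caption_is_true = len(bindings) > 0

# A caption whose action does not occur in the video has no valid binding.
wrong_form = [("woman", ("x",)), ("put_down", ("x", "y")), ("apple", ("y",))]
wrong_caption_is_true = len(satisfying_bindings(wrong_form, video_facts, entities)) > 0
```

In the system described here the evidence comes from tracking objects across video frames rather than from exact symbolic facts; the sketch only shows the shape of the question being asked.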

Connecting the dots

The expression with the most closely matching representations for objects, humans, and actions becomes the most likely meaning of the caption. The expression, initially, may refer to many different objects and actions in the video, but the set of possible meanings serves as a training signal that helps the parser continuously winnow down possibilities. “By assuming that all of the sentences must follow the same rules, that they all come from the same language, and seeing many captioned videos, you can narrow down the meanings further,” Barbu says.

In short, the parser learns through passive observation: To determine if a caption is true of a video, the parser by necessity must identify the highest probability meaning of the caption. “The only way to figure out if the sentence is true of a video [is] to go through this intermediate step of, ‘What does the sentence mean?’ Otherwise, you have no idea how to connect the two,” Barbu explains. “We don’t give the system the meaning for the sentence. We say, ‘There’s a sentence and a video. The sentence has to be true of the video. Figure out some intermediate representation that makes it true of the video.’”

The training produces a syntactic and semantic grammar for the words the parser has learned. Given a new sentence, the parser no longer requires videos, but leverages its grammar and lexicon to determine sentence structure and meaning.

Ultimately, this process is learning “as if you’re a kid,” Barbu says. “You see world around you and hear people speaking to learn meaning. One day, I can give you a sentence and ask what it means and, even without a visual, you know the meaning.”

“This research is exactly the right direction for natural language processing,” says Stefanie Tellex, a professor of computer science at Brown University who focuses on helping robots use natural language to communicate with humans. “To interpret grounded language, we need semantic representations, but it is not practicable to make it available at training time. Instead, this work captures representations of compositional structure using context from captioned videos. This is the paper I have been waiting for!”

In future work, the researchers are interested in modeling interactions, not just passive observations. “Children interact with the environment as they’re learning. Our idea is to have a model that would also use perception to learn,” Ross says.

This work was supported, in part, by the CBMM, the National Science Foundation, a Ford Foundation Graduate Research Fellowship, the Toyota Research Institute, and the MIT-IBM Brain-Inspired Multimedia Comprehension project.

Robots in Depth with Stefano Stramigioli

In this episode of Robots in Depth, Per Sjöborg speaks with Stefano Stramigioli about the Robotics and Mechatronics lab he leads at the University of Twente. The lab focuses on inspection and maintenance robotics, as well as medical applications.

Stefano got into robotics when he saw the robots in Star Wars, and started out building a robotic arm from scratch, including making his own PCBs.

He also tells us about the robotic peregrine falcon that has been spun out and is now a successful company.

This interview was recorded in 2016.

Small flying robots able to pull objects up to 40 times their weight

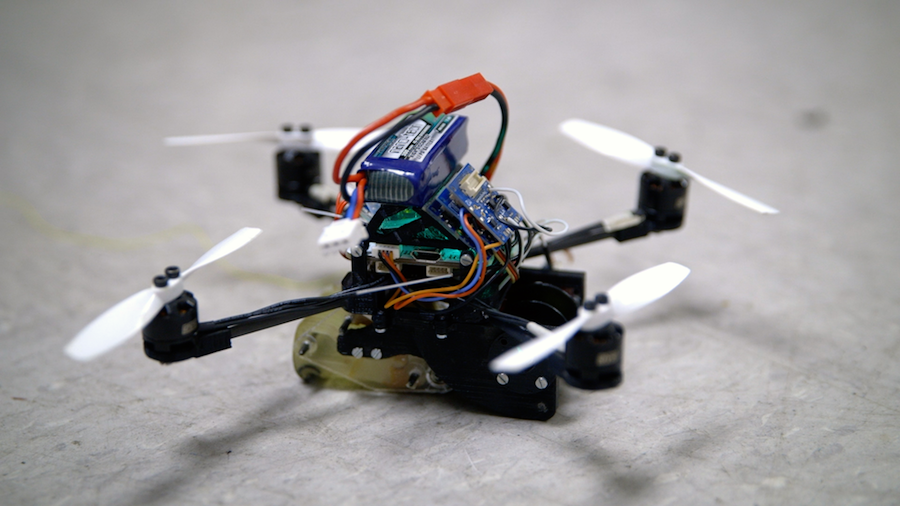

Researchers from EPFL and Stanford have developed small drones that can land and then move objects that are 40 times their weight, with the help of powerful winches, gecko adhesives and microspines.

A closed door is just one of many obstacles that no longer pose a barrier to the small flying robots developed jointly by Ecole Polytechnique Fédérale de Lausanne (EPFL) and Stanford University. Equipped with advanced gripping technology – inspired by gecko and insect feet – and able to interact with the world around them, these robots can work together to lasso a door handle and tug the door open.

Waymo is first, but is Cruise second, and how can you tell?

A recent Reuters story suggests Cruise is well behind schedule, with one insider saying “nothing is on schedule” and various reports of problems not yet handled. This casts doubt on GM’s announced plan to have a commercial pilot without safety drivers in operation in San Francisco in 2019.

The problem for me, and everybody else, is that it’s very hard to judge the progress of a project from outside. This is because it’s “easy” to get a basic car together and do demo runs on various streets. Teams usually have something like that up and running within a year. Just 2 years in, Google had logged 100,000 miles on 1,000 different miles of road. Today, it’s even easier.