The Benefits of Radio Frequency Identification (RFID)

5 Reasons To Consider Robots for Industrial Inspections

Two new robots for the nursing sector

As part of the “SeRoDi” project (“Service Robotics for Personal Services”), Fraunhofer IPA collaborated with other research and application partners to develop new service robotics solutions for the nursing sector. The resulting robots, the “intelligent care cart” and the “robotic service assistant”, were used in extensive real-world trials in a hospital and at two care homes.

Not enough nurses for too many patients or residents: this is a familiar problem in the nursing sector. Service robots have the potential to help maintain an adequate quality of care even under these challenging conditions.

Intelligent care cart

To cut down the legwork of the nursing staff and reduce the time spent keeping manual records of the consumption of medical supplies, Fraunhofer IPA in collaboration with the MLR Company developed the “intelligent care cart”. Using a smartphone, the care staff is able to summon the care cart to the desired room, whereupon it makes its own way there. A 3D sensor along with object recognition software enables the care cart to automatically register the consumption of medical supplies. Being of modular design, the care cart can be adapted to different application scenarios and practical requirements.

The care carts developed as part of the project were used in a hospital (stocked with wound treatment materials) and two nursing homes (stocked with laundry items). As the intelligent care cart is based on the navigation processes of a driverless transport vehicle, it travels primarily along fixed predefined paths. For use in public spaces, it is possible to make minor deviations from these paths in order, for example, to dynamically negotiate obstacles in the way. The real-world trials revealed that efficient navigation requires extensive knowledge of the internal processes in order, among other things, to guarantee that the desired destination is actually accessible.

The initial trials also showed that it makes a big difference whether the corridors have a single lane for both directions or separate lanes, i.e. one for each direction. For the residents and staff, using one lane made it clearer where the robot was going. In addition, restricting the care carts to a single lane ensured that they did not have to make major detours. Evaluating the real-world trials, the participating nursing staff confirmed that, by reducing legwork and saving the associated time, the intelligent care cart represents a potential benefit in their day-to-day work. Also, the faster provision of care, with no interruptions for restocking the care cart, results in an improvement in quality for patients and residents.

Robotic service assistant serves drinks to residents

Alongside the intelligent care cart, the robotic service assistant is another result of the SeRoDi project. Stocked with up to 28 drinks or snacks, the mobile robot is capable of serving them to patients or residents. Once again, the goal is to reduce the workload of the staff, in addition to improving the hydration of the residents by means of regular reminders. Using the robot also has the potential to promote the independence of those in need of care.

At a nursing home, where the robotic service assistant was trialed for one week in a common room, it made for a welcome change, with many residents being both curious and interested. Using the robot’s touch screen, they were able to select from a choice of drinks, which were then served to them by the robot. Once all the supplies had been used up, the service assistant returned to the kitchen, where it was restocked by the staff before being sent back to the common room via a smartphone. This robot, too, attracted great interest from the participating nursing staff. The synthesized voice of the robot was especially popular and even motivated the residents to converse with the robot.

Have a look at the YouTube video showing the project results.

The project received funding from the German Federal Ministry for Education and Research.

The smallest steerable catheter

ABB to Build the World’s Most Advanced Robotics Factory in Shanghai

#272: Putting Robots in the Home, with Caitlyn Clabaugh

In this episode, Audrow Nash interviews Caitlyn Clabaugh, PhD Candidate at the University of Southern California, about lessons learned from putting robots in people’s homes for human-robot interaction research. Clabaugh speaks about her work to date and the expectations in human-subjects research, and gives general advice for PhD students.

Caitlyn Clabaugh

Caitlyn Clabaugh is a PhD student in Computer Science at the University of Southern California, co-advised by Prof. Maja J Matarić and Prof. Fei Sha, and supported by a graduate research assistantship in the Interaction Lab. She received her B.A. in Computer Science from Bryn Mawr College in May 2013. Her primary research interest is the application of machine learning and statistical methods to support long-term adaptation and personalization in socially assistive robot tutors, specifically for young children and early childhood STEM.

Links

- Interaction Lab

Autonomous Cars – Safety and Traffic Regulations

RoboticsTomorrow – Special Tradeshow Coverage: FABTECH 2018

Talking FABTECH with Octopuz

Good news for immersive journalism: Look at your audience

Drilling down on depth sensing and deep learning

By Daniel Seita, Jeff Mahler, Mike Danielczuk, Matthew Matl, and Ken Goldberg

This post explores two independent innovations and the potential for combining them in robotics. Two years before the AlexNet results on ImageNet were released in 2012, Microsoft rolled out the Kinect for the Xbox. This class of low-cost depth sensors emerged just as Deep Learning boosted Artificial Intelligence by accelerating the performance of hyper-parametric function approximators, leading to surprising advances in image classification, speech recognition, and language translation.

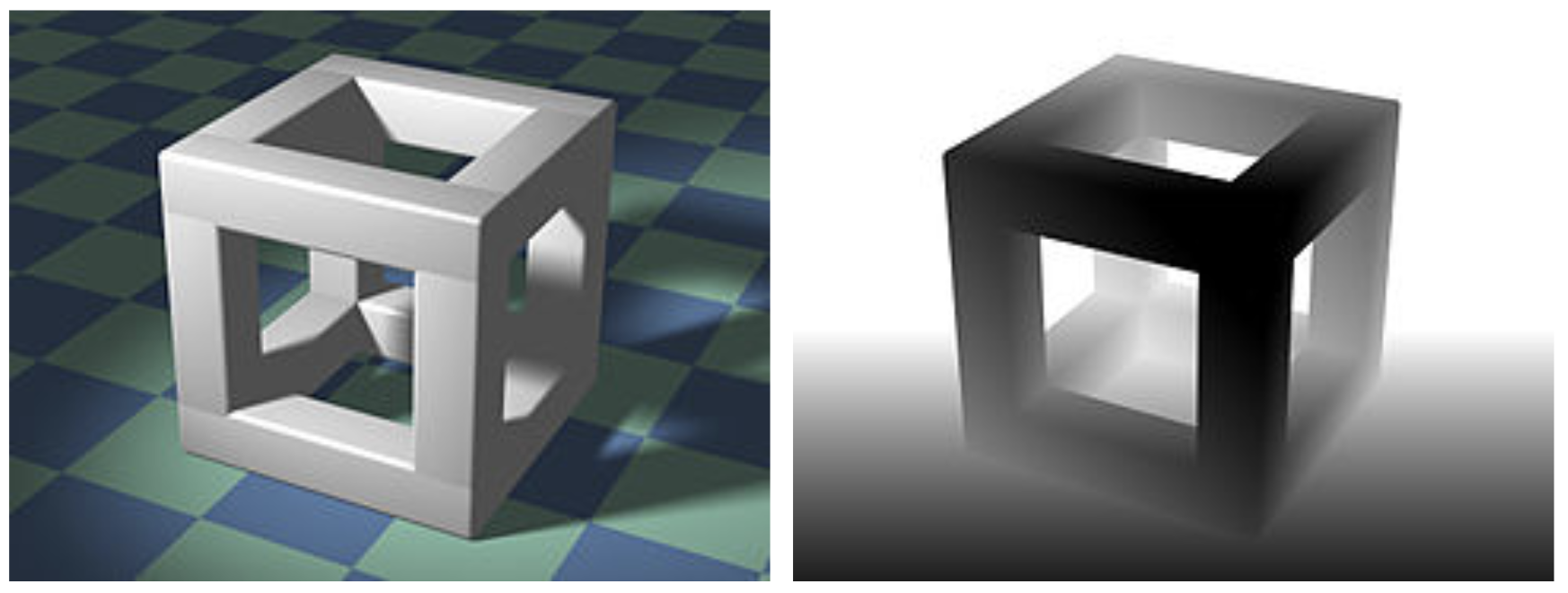

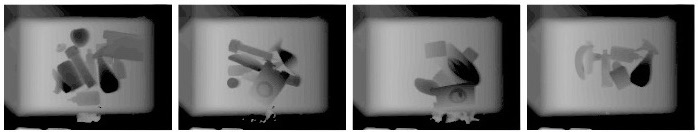

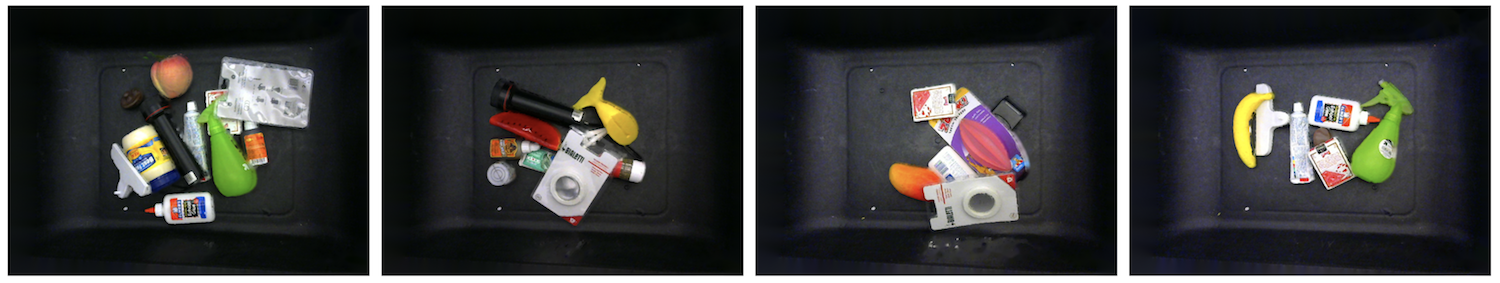

Top left: image of a 3D cube. Top right: example depth image, with darker points representing areas closer to the camera (source: Wikipedia). Next two rows: examples of depth and RGB image pairs for grasping objects in a bin. Last two rows: similar examples for bed-making.

Today, Deep Learning is also showing promise for end-to-end learning of playing video games and performing robotic manipulation tasks.

For robot perception, convolutional neural networks (CNNs), such as VGG or ResNet, with three RGB color channels have become standard. For robotics and computer vision tasks, it is common to borrow one of these architectures (along with pre-trained weights) and then to perform transfer learning or fine-tuning on task-specific data. But in some tasks, knowing the colors in an image may provide only limited benefits. Consider training a robot to grasp novel, previously unseen objects. It may be more important to understand the geometry of the environment rather than colors and textures. The physical process of manipulation (controlling one or more objects by applying forces through contact) depends on object geometry, pose, and other factors which are largely color-invariant. When you manipulate a pen with your hand, for instance, you can often move it seamlessly without looking at the actual pen, so long as you have a good understanding of the location and orientation of contact points. Thus, before proceeding, one might ask: does it make sense to use color images?

There is an alternative: depth images. These are single-channel grayscale images that measure depth values from the camera, and give us invariance to the colors of objects within an image. We can also use depth to “filter” points beyond a certain distance which can remove background noise, as we demonstrate later with robot bed-making. Examples of paired depth and real images are shown above.
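The depth "filter" mentioned above can be illustrated with a minimal sketch. Everything here is an assumption for illustration: the function name `filter_depth`, the 1.0 m cutoff, the fill value of 0, and the toy depth values are all made up, and a real pipeline would more likely operate on arrays from an array library than on nested lists.

```python
def filter_depth(depth, max_depth, fill=0.0):
    """Return a copy of `depth` with readings beyond `max_depth` replaced by `fill`.

    `depth` is a single-channel image given as a list of rows, where each
    value is the distance from the camera (here assumed to be in meters).
    """
    return [[d if d <= max_depth else fill for d in row] for row in depth]


# Toy 3x4 depth image (hypothetical values): a nearby object on the left,
# a distant background wall on the right.
depth_image = [
    [0.62, 0.60, 1.95, 2.00],
    [0.61, 0.59, 1.98, 2.02],
    [0.63, 0.64, 2.01, 1.97],
]

# Keep only points within 1.0 m of the camera; the background is zeroed out.
filtered = filter_depth(depth_image, max_depth=1.0)
for row in filtered:
    print(row)
```

Because the threshold acts only on distance, the result is the same regardless of the colors of the object or the background, which is the invariance the paragraph above describes.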

In this post, we consider the potential for combining depth images and deep learning in the context of three ongoing projects in the UC Berkeley AUTOLab: Dex-Net for robot grasping, segmenting objects in heaps, and robot bed-making.

Robots in Depth with Sebastian Weisenburger

In this episode of Robots in Depth, Per Sjöborg speaks with Sebastian Weisenburger about how ECHORD++ works, with application-oriented research bridging academia, industry and end users to bring robots to market, under the banner “From lab to market”.

We also hear about Public end-user Driven Technological Innovation (PDTI) projects in healthcare and urban robotics.

This interview was recorded in 2016.

How to mass produce cell-sized robots

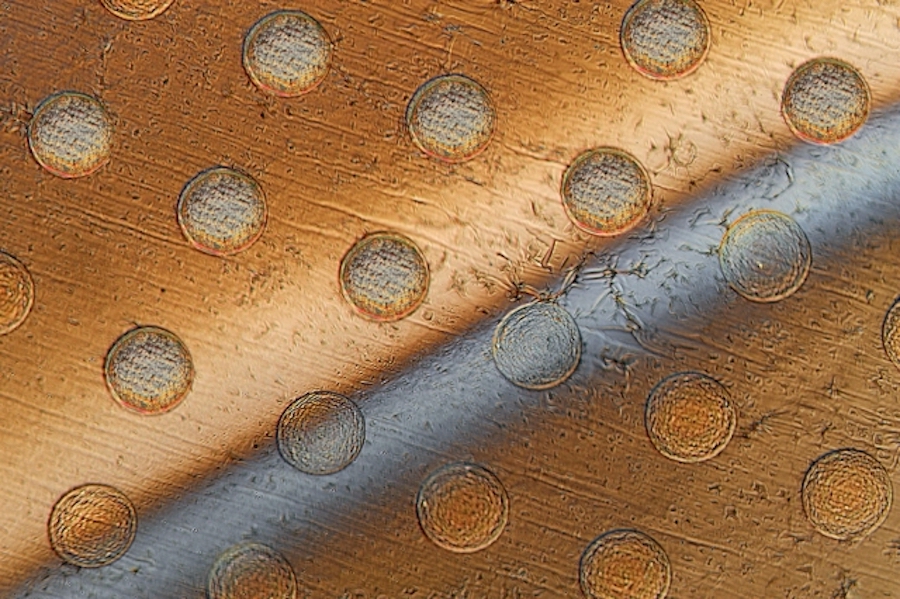

Image: Felice Frankel

By David L. Chandler

Tiny robots no bigger than a cell could be mass-produced using a new method developed by researchers at MIT. The microscopic devices, which the team calls “syncells” (short for synthetic cells), might eventually be used to monitor conditions inside an oil or gas pipeline, or to search out disease while floating through the bloodstream.

The key to making such tiny devices in large quantities lies in a method the team developed for controlling the natural fracturing process of atomically thin, brittle materials, directing the fracture lines so that they produce minuscule pockets of a predictable size and shape. Embedded inside these pockets are electronic circuits and materials that can collect, record, and output data.

The novel process, called “autoperforation,” is described in a paper published today in the journal Nature Materials, by MIT Professor Michael Strano, postdoc Pingwei Liu, graduate student Albert Liu, and eight others at MIT.

The system uses a two-dimensional form of carbon called graphene, which forms the outer structure of the tiny syncells. One layer of the material is laid down on a surface, then tiny dots of a polymer material, containing the electronics for the devices, are deposited by a sophisticated laboratory version of an inkjet printer. Then, a second layer of graphene is laid on top.

Controlled fracturing

People think of graphene, an ultrathin but extremely strong material, as being “floppy,” but it is actually brittle, Strano explains. But rather than considering that brittleness a problem, the team figured out that it could be used to their advantage.

“We discovered that you can use the brittleness,” says Strano, who is the Carbon P. Dubbs Professor of Chemical Engineering at MIT. “It’s counterintuitive. Before this work, if you told me you could fracture a material to control its shape at the nanoscale, I would have been incredulous.”

But the new system does just that. It controls the fracturing process so that rather than generating random shards of material, like the remains of a broken window, it produces pieces of uniform shape and size. “What we discovered is that you can impose a strain field to cause the fracture to be guided, and you can use that for controlled fabrication,” Strano says.

When the top layer of graphene is placed over the array of polymer dots, which form round pillar shapes, the places where the graphene drapes over the round edges of the pillars form lines of high strain in the material. As Albert Liu describes it, “imagine a tablecloth falling slowly down onto the surface of a circular table. One can very easily visualize the developing circular strain toward the table edges, and that’s very much analogous to what happens when a flat sheet of graphene folds around these printed polymer pillars.”

As a result, the fractures are concentrated right along those boundaries, Strano says. “And then something pretty amazing happens: The graphene will completely fracture, but the fracture will be guided around the periphery of the pillar.” The result is a neat, round piece of graphene that looks as if it had been cleanly cut out by a microscopic hole punch.

Because there are two layers of graphene, above and below the polymer pillars, the two resulting disks adhere at their edges to form something like a tiny pita bread pocket, with the polymer sealed inside. “And the advantage here is that this is essentially a single step,” in contrast to many complex clean-room steps needed by other processes to try to make microscopic robotic devices, Strano says.

The researchers have also shown that other two-dimensional materials in addition to graphene, such as molybdenum disulfide and hexagonal boron nitride, work just as well.

Cell-like robots

Ranging in size from that of a human red blood cell, about 10 micrometers across, up to about 10 times that size, these tiny objects “start to look and behave like a living biological cell. In fact, under a microscope, you could probably convince most people that it is a cell,” Strano says.

This work follows up on earlier research by Strano and his students on developing syncells that could gather information about the chemistry or other properties of their surroundings using sensors on their surface, and store the information for later retrieval. For example, a swarm of such particles could be injected at one end of a pipeline and retrieved at the other to gain data about conditions inside it. While the new syncells do not yet have as many capabilities as the earlier ones, those were assembled individually, whereas this work demonstrates a way of easily mass-producing such devices.

Apart from the syncells’ potential uses for industrial or biomedical monitoring, the way the tiny devices are made is itself an innovation with great potential, according to Albert Liu. “This general procedure of using controlled fracture as a production method can be extended across many length scales,” he says. “[It could potentially be used with] essentially any 2-D materials of choice, in principle allowing future researchers to tailor these atomically thin surfaces into any desired shape or form for applications in other disciplines.”

This is, Albert Liu says, “one of the only ways available right now to produce stand-alone integrated microelectronics on a large scale” that can function as independent, free-floating devices. Depending on the nature of the electronics inside, the devices could be provided with capabilities for movement, detection of various chemicals or other parameters, and memory storage.

There is a wide range of potential new applications for such cell-sized robotic devices, says Strano, who details many such possible uses in a book on the subject that he co-authored with Shawn Walsh, an expert at Army Research Laboratories. The book, “Robotic Systems and Autonomous Platforms,” is being published this month by Elsevier Press.

As a demonstration, the team “wrote” the letters M, I, and T into a memory array within a syncell, which stores the information as varying levels of electrical conductivity. This information can then be “read” using an electrical probe, showing that the material can function as a form of electronic memory into which data can be written, read, and erased at will. It can also retain the data without the need for power, allowing information to be collected at a later time. The researchers have demonstrated that the particles are stable over a period of months even when floating around in water, which is a harsh solvent for electronics, according to Strano.

“I think it opens up a whole new toolkit for micro- and nanofabrication,” he says.

Daniel Goldman, a professor of physics at Georgia Tech, who was not involved with this work, says, “The techniques developed by Professor Strano’s group have the potential to create microscale intelligent devices that can accomplish tasks together that no single particle can accomplish alone.”

In addition to Strano, Pingwei Liu, who is now at Zhejiang University in China, and Albert Liu, a graduate student in the Strano lab, the team included MIT graduate student Jing Fan Yang, postdocs Daichi Kozawa, Juyao Dong, and Volodomyr Koman, Youngwoo Son PhD ’16, research affiliate Min Hao Wong, and Dartmouth College student Max Saccone and visiting scholar Song Wang. The work was supported by the Air Force Office of Scientific Research, and the Army Research Office through MIT’s Institute for Soldier Nanotechnologies.

How should autonomous vehicles be programmed?

By Peter Dizikes

A massive new survey developed by MIT researchers reveals some distinct global preferences concerning the ethics of autonomous vehicles, as well as some regional variations in those preferences.

The survey has global reach and a unique scale, with over 2 million online participants from over 200 countries weighing in on versions of a classic ethical conundrum, the “Trolley Problem.” The problem involves scenarios in which an accident involving a vehicle is imminent, and the vehicle must opt for one of two potentially fatal options. In the case of driverless cars, that might mean swerving toward a couple of people, rather than a large group of bystanders.

“The study is basically trying to understand the kinds of moral decisions that driverless cars might have to resort to,” says Edmond Awad, a postdoc at the MIT Media Lab and lead author of a new paper outlining the results of the project. “We don’t know yet how they should do that.”

Still, Awad adds, “We found that there are three elements that people seem to approve of the most.”

Indeed, the most emphatic global preferences in the survey are for sparing the lives of humans over the lives of other animals; sparing the lives of many people rather than a few; and preserving the lives of the young, rather than older people.

“The main preferences were to some degree universally agreed upon,” Awad notes. “But the degree to which they agree with this or not varies among different groups or countries.” For instance, the researchers found a less pronounced tendency to favor younger people, rather than the elderly, in what they defined as an “eastern” cluster of countries, including many in Asia.

The paper, “The Moral Machine Experiment,” is being published today in Nature.

The authors are Awad; Sohan Dsouza, a doctoral student in the Media Lab; Richard Kim, a research assistant in the Media Lab; Jonathan Schulz, a postdoc at Harvard University; Joseph Henrich, a professor at Harvard; Azim Shariff, an associate professor at the University of British Columbia; Jean-François Bonnefon, a professor at the Toulouse School of Economics; and Iyad Rahwan, an associate professor of media arts and sciences at the Media Lab, and a faculty affiliate in the MIT Institute for Data, Systems, and Society.

Awad is a postdoc in the MIT Media Lab’s Scalable Cooperation group, which is led by Rahwan.

To conduct the survey, the researchers designed what they call “Moral Machine,” a multilingual online game in which participants could state their preferences concerning a series of dilemmas that autonomous vehicles might face. For instance: If it comes right down to it, should autonomous vehicles spare the lives of law-abiding bystanders, or, alternately, law-breaking pedestrians who might be jaywalking? (Most people in the survey opted for the former.)

All told, “Moral Machine” compiled nearly 40 million individual decisions from respondents in 233 countries; the survey collected 100 or more responses from 130 countries. The researchers analyzed the data as a whole, while also breaking participants into subgroups defined by age, education, gender, income, and political and religious views. There were 491,921 respondents who offered demographic data.

The scholars did not find marked differences in moral preferences based on these demographic characteristics, but they did find larger “clusters” of moral preferences based on cultural and geographic affiliations. They defined “western,” “eastern,” and “southern” clusters of countries, and found some more pronounced variations along these lines. For instance: Respondents in southern countries had a relatively stronger tendency to favor sparing young people rather than the elderly, especially compared to the eastern cluster.

Awad suggests that acknowledgement of these types of preferences should be a basic part of informing public-sphere discussion of these issues. In all regions, since there is a moderate preference for sparing law-abiding bystanders rather than jaywalkers, knowing these preferences could, in theory, inform the way software is written to control autonomous vehicles.

“The question is whether these differences in preferences will matter in terms of people’s adoption of the new technology when [vehicles] employ a specific rule,” he says.

Rahwan, for his part, notes that “public interest in the platform surpassed our wildest expectations,” allowing the researchers to conduct a survey that raised awareness about automation and ethics while also yielding specific public-opinion information.

“On the one hand, we wanted to provide a simple way for the public to engage in an important societal discussion,” Rahwan says. “On the other hand, we wanted to collect data to identify which factors people think are important for autonomous cars to use in resolving ethical tradeoffs.”

Beyond the results of the survey, Awad suggests, seeking public input about an issue of innovation and public safety should continue to become a larger part of the dialogue surrounding autonomous vehicles.

“What we have tried to do in this project, and what I would hope becomes more common, is to create public engagement in these sorts of decisions,” Awad says.