700,000 submunitions demilitarized by Sandia-designed robotics system

New Video from Mouser’s Generation Robot Series Showcases Exclusive Grant Imahara Panel Discussion from ECIA Executive Conference

Controlling false discoveries in large-scale experimentation: Challenges and solutions

By Tijana Zrnic

“Scientific research has changed the world. Now it needs to change itself.” – The Economist, 2013

There has been a growing concern about the validity of scientific findings. A multitude of journals, papers and reports have recognized the ever smaller number of replicable scientific studies. In 2016, one of the giants of scientific publishing, Nature, surveyed about 1,500 researchers across many different disciplines, asking for their stance on the status of reproducibility in their area of research. One of the many takeaways from the worrisome results of this survey is the following: 90% of the respondents agreed that there is a reproducibility crisis, and the overall top answer to boosting reproducibility was “better understanding of statistics”. Indeed, many factors contributing to the explosion of irreproducible research stem from neglecting the fact that statistics is no longer as static as it was in the first half of the 20th century, when statistical hypothesis testing came into prominence as a theoretically rigorous proposal for making valid discoveries with high confidence.

When science first saw the rise of statistical testing, the basic idea was the following: you put forward competing hypotheses about the world, then you collect some data, and finally you use these data to validate your hypotheses. Typically, one was in a situation where they could iterate this three-step process only a few times; data was scarce, and the necessary computations were lengthy. Remember, this is early to mid-20th century we are talking about.

This forerunner of today’s scientific investigations would hardly recognize its own field in 2019. Nowadays, testing is much more dynamic and is performed at a scale larger than ever before. Even within a single institution, thousands of hypotheses are tested in a short time interval, older test results inspire future potential analyses, and scientific exploration oftentimes becomes a never-ending stream of individual hypothesis tests. What enabled this explosion of exploratory research are the high-throughput technologies and large amounts of data that we started seeing only recently, at least relative to the era of statistical thinking.

That said, as in any discipline with well-established and successful foundations, it is difficult to move away from classical paradigms in testing. Many of today’s large-scale investigations still use tools and techniques which, although powerful and supported by beautiful theory, do not take into account that each test might be just a little piece of a much bigger puzzle of exploratory research. Many disciplines have yet to acquire novel methodology for testing, one that promotes valid inferences at scale and thus limits grandiose publications composed of irreplicable mirages.

Let us analyze why classical hypothesis testing might lead to many spurious conclusions when the number of tests is large. We do so by elaborating the three main steps of a test: “hypothesize”, “collect data” and “validate”.

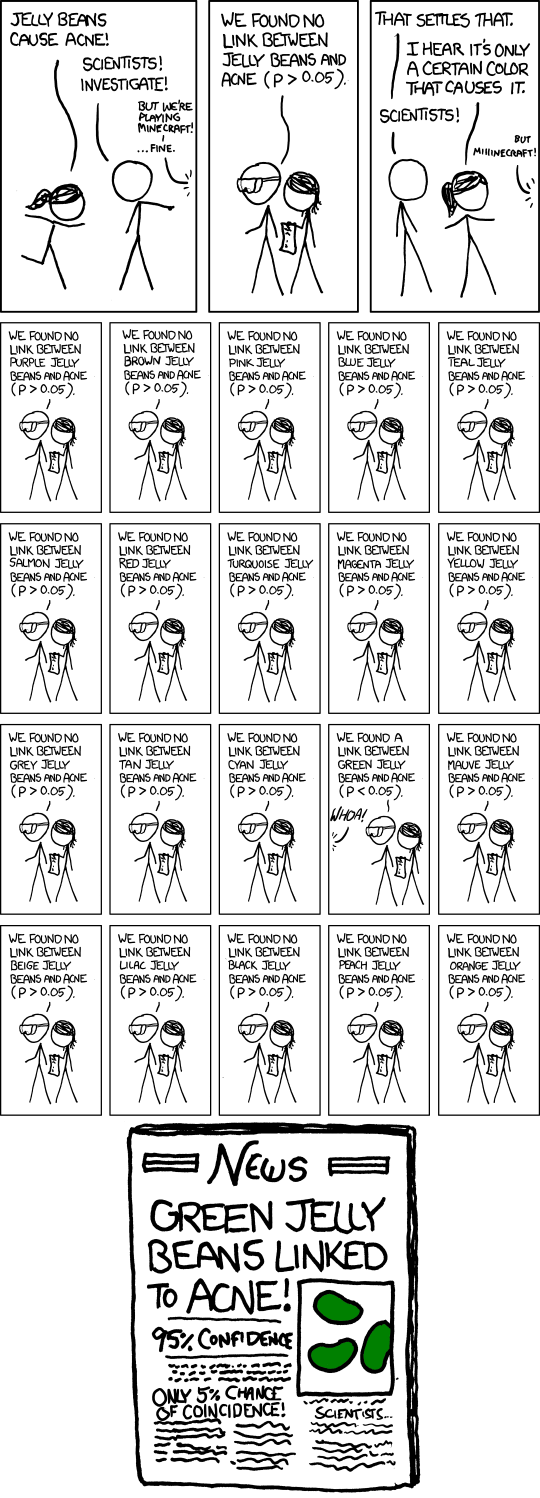

In the “hypothesize” step, a well-defined null hypothesis is formulated. For example, this could be “jelly beans do not cause acne”; we will use this as our running example. Notice that the null hypothesis, or simply null, is the opposite of what would be considered a discovery. In short, the null is the status quo. A false positive rate (FPR) is also chosen at the beginning of a test. This is the maximal allowed probability of making a false discovery, typically chosen around 0.05. In the context of our running example, this means the following: if the null is true, i.e. if jelly beans do not cause acne, we will only have a 5% chance of proclaiming causation between jelly beans and acne.

In a frequentist manner, we assume that there is deterministic ground truth about the null hypothesis. That is, it is either true or not. We will refer to the null hypotheses that are true as true nulls, and to those that are false as non-nulls. In our example, if jelly beans do not cause acne, the null hypothesis is a true null. If it is a non-null, however, we would ideally like to proclaim a discovery.

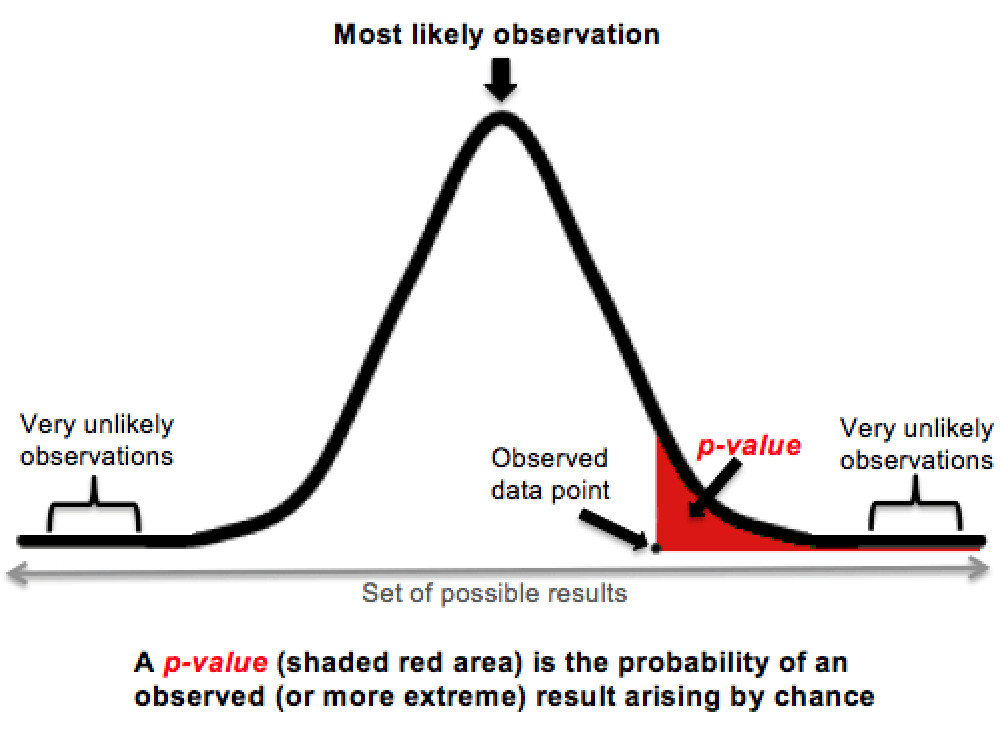

The second step is calculating a p-value based on collected data. This protagonist of many controversies around statistical testing is the probability of seeing the collected data, or something even more extreme, if the null is true. In our example, this is the probability of having some observed parameter of skin condition, or something “even more unusual”, if jelly beans indeed do not cause acne. To illustrate this point, consider the plot below. Let the bell curve be the distribution of the skin parameter if jelly beans do not cause acne. Then, the p-value is the red shaded area under this curve, which is everything “right of” the observed data point. The smaller the p-value, the more unlikely it is that the observation can be explained purely by chance.

The last step is validation. If the calculated p-value is smaller than the FPR, the null hypothesis is rejected, and a discovery is proclaimed. In our running example, if the red shaded area is less than 0.05, we say that jelly beans cause acne.
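As a rough illustration of the last two steps, the sketch below assumes that the bell curve is a standard normal distribution and that the observed skin parameter has already been standardized into a z-score. Both are assumptions made for this example, and the function names and the observed value are hypothetical.

```python
import math

def upper_tail_p_value(z):
    """One-sided p-value: area under the standard normal curve to the right of z."""
    return 0.5 * math.erfc(z / math.sqrt(2))

def is_discovery(p_value, fpr=0.05):
    """Reject the null when the p-value is smaller than the chosen FPR."""
    return p_value < fpr

observed_z = 1.96  # hypothetical standardized observation
p = upper_tail_p_value(observed_z)
print(f"p-value = {p:.4f}, discovery = {is_discovery(p)}")
```

The further the observation lies to the right, the smaller the shaded area, and the more likely the test ends in a proclaimed discovery.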

Finally, let us lift the lid on why there are so many false discoveries in large-scale testing. By construction, valid p-values are uniformly distributed on $[0,1]$ if the null is true.¹ This means that, even if jelly beans do not really cause acne, there is still 0.05 probability that a discovery is falsely proclaimed. Therefore, if testing $N$ hypotheses that are truly null and hence should not be discovered, one is almost certain to proclaim some of them as discoveries if $N$ is large. For example, if all tests are independent, around 5% of $N$ will be discovered. Already after 20 tests of true nulls, even if they are completely arbitrary, one is expected to make a false discovery!
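The arithmetic behind this claim is short enough to check directly. The sketch below assumes independent tests of true nulls, each run at FPR 0.05; the function names are made up for this example.

```python
def expected_false_discoveries(n_tests, fpr=0.05):
    """Expected number of true nulls that get falsely rejected."""
    return n_tests * fpr

def prob_at_least_one_false_discovery(n_tests, fpr=0.05):
    """Chance of one or more false rejections among independent true nulls."""
    return 1.0 - (1.0 - fpr) ** n_tests

for n in (1, 20, 100):
    expected = expected_false_discoveries(n)
    at_least_one = prob_at_least_one_false_discovery(n)
    print(f"N={n}: expected={expected:.2f}, P(at least one)={at_least_one:.3f}")
```

With 20 tests the expected number of false discoveries is exactly one, and the chance of at least one is already about 64%.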

And this is how science goes wrong.

To recap, around 5% of the tested true null hypotheses unfortunately have to be discovered either way, simply by laws of probability. This wouldn’t really be an issue if most of the tested hypotheses were legitimate potential discoveries, i.e. non-nulls. Then, 5% of a small-ish number of true nulls would be negligible. Typically, however, this is not the case. We test loads of crazy, out-there hypotheses, which would attract a lot of attention if confirmed, and we do so simply because we can. In many areas, both observations and computational resources are abundant, so there is little incentive to stay on the “safe side”.

So, how can one make scientific discoveries without the fear of reporting too many false ones?

Controlling the False Discovery Rate

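The recognition that a large number of tests leads to almost sure false discoveries has led to various formalisms for controlling their rate of appearance. One powerful proposal, which has become a de facto standard for false discovery control in multiple testing, is the false discovery rate (FDR), defined as the expected proportion of false discoveries among all discoveries:

$$\mathrm{FDR} = \mathbb{E}\left[\frac{\#\,\text{false discoveries}}{\max(\#\,\text{discoveries},\ 1)}\right],$$

where the maximum in the denominator makes the ratio 0 when no discoveries are made.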

Controlling FDR with no additional goal is an easy task; namely, making no discoveries trivially gives FDR = 0. The implicit goal behind the vast literature on FDR is discovering as many non-nulls as possible, while keeping FDR controlled under a pre-specified level $\alpha$. We collectively refer to all methods with this goal as FDR methods.

Initially, FDR methods were offline procedures. This means that they required collecting a whole batch of p-values before deciding which tests to proclaim as discoveries. The most notable example of this class is the successful Benjamini-Hochberg procedure, which has for a long time been the default of FDR methods.
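For readers who have not seen it, here is a minimal sketch of the Benjamini-Hochberg step-up rule as it is usually stated: sort the $m$ p-values, find the largest rank $k$ whose p-value is at most $k\alpha/m$, and reject the $k$ smallest. The function name and the example p-values are made up for this illustration.

```python
def benjamini_hochberg(p_values, alpha=0.05):
    """Return a list of booleans, True where the hypothesis is rejected."""
    m = len(p_values)
    # Indices of the p-values, from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k (1-based) with p_(k) <= k * alpha / m; 0 means no rejections.
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * alpha / m:
            cutoff_rank = rank
    rejected = [False] * m
    for idx in order[:cutoff_rank]:
        rejected[idx] = True
    return rejected

batch = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205]
print(benjamini_hochberg(batch))
```

Note that the function needs the whole batch before it can return anything, which is exactly the offline property described above.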

However, the scale and scope of modern testing have begun to outstrip this well-recognized methodology. It is far from convenient to wait for all the p-values one wants to test, especially at institutions where testing is a never-ending process. To be more precise, we typically want to make decisions during and between our tests, in particular because this allows us to shape future analyses based on outcomes of past tests. This inspired a new line of work on FDR control, in which decisions are made online.

In online FDR control, p-values arrive one at a time, and the decision of whether or not to make a discovery is made as soon as a p-value is observed. Importantly, online FDR algorithms have enabled controlling FDR over a lifetime; even if the number of sequential tests tends to infinity, one would still have a guarantee that most of the proclaimed discoveries are indeed non-nulls.

The basic principle of online FDR control is to track and control a dynamic quantity called wealth. The wealth represents the current error budget, and is a result of all previously performed tests. In particular, if a test results in a discovery, the wealth increases, while if a discovery is not made, the wealth decreases; note that this update is completely independent of whether the test is truly null or not. When a new test starts, its FPR is chosen based on the available wealth; the bigger the wealth, the bigger the FPR, and consequently the better the chance for a discovery. In fact, this idea has a perfect analogy with testing in a broader social context. To make scientific discoveries, you are awarded an initial grant (corresponding to the target FDR level $\alpha$). This initial funding decreases with every new experiment, and, if you happen to make a scientific discovery, you are again awarded some “wealth”, which you can use toward the budget for subsequent tests. This is essentially the real-world translation of the mathematical expressions guiding online FDR algorithms.
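To make the bookkeeping concrete, here is a toy wealth-tracking loop. It is only an illustration of the mechanics described above, not one of the published online FDR algorithms, and it carries no FDR guarantee. The spending rule (a fixed fraction of current wealth) and the payout (equal to $\alpha$) are assumptions chosen for simplicity.

```python
def toy_online_testing(p_values, alpha=0.05, spend_fraction=0.1):
    """Process p-values one at a time while tracking a toy error budget."""
    wealth = alpha  # the initial "grant"
    decisions, wealth_history = [], []
    for p in p_values:
        level = spend_fraction * wealth  # FPR of this test grows with wealth
        discovery = p <= level
        wealth -= level                  # every test costs its FPR
        if discovery:
            wealth += alpha              # a discovery earns a payout
        decisions.append(discovery)
        wealth_history.append(wealth)
    return decisions, wealth_history

decisions, history = toy_online_testing([0.001, 0.5, 0.004, 0.9])
print(decisions)
print([round(w, 6) for w in history])
```

The update uses only the decisions, never the ground truth, and each decision is made as soon as its p-value arrives. In this toy version a discovery raises the wealth only while the spent level is below the payout; real algorithms choose the spending and payout rules so that FDR provably stays below $\alpha$.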

Asynchronous Control of False Discoveries

Although online FDR control has broadened the domain of applications where false discoveries can be controlled, it has failed to account for several important aspects of modern testing.

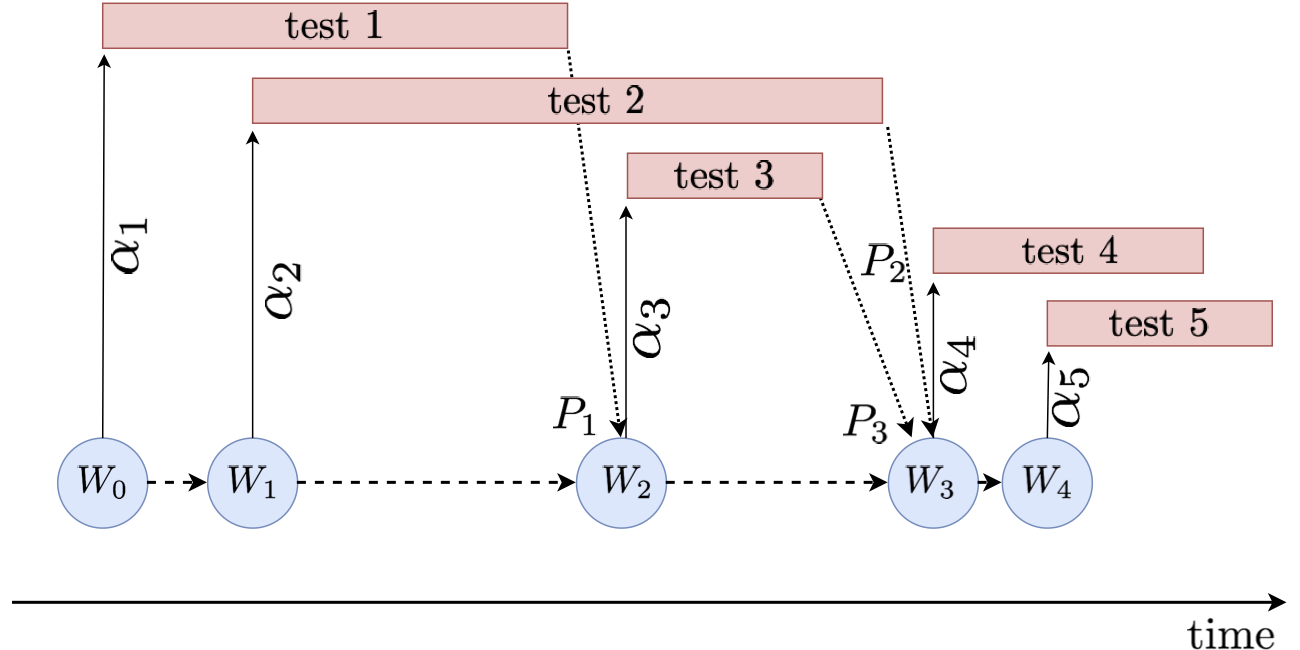

The main observation is that large-scale testing is not only sequential, but “doubly sequential”. Tests are run in a sequential fashion, but each test is itself composed of a sequence of atomic executions, which typically finish at unpredictable times. This leads practitioners to run multiple tests that overlap in time in order to gain time efficiency, allowing tests to start and finish at random times.

For example, in clinical trials, it is common to test several different treatment variants against a common control. These trials are often called “perpetual”, as multiple treatments are tested in parallel, and new treatments enter the testing platform at random times in an online manner. Similarly, A/B testing in industry is typically distributed across many individuals and research teams, and across time, with companies running hundreds of tests per day. This large volume of tests, as well as their complex distribution across many analysts, inevitably causes asynchrony in testing.

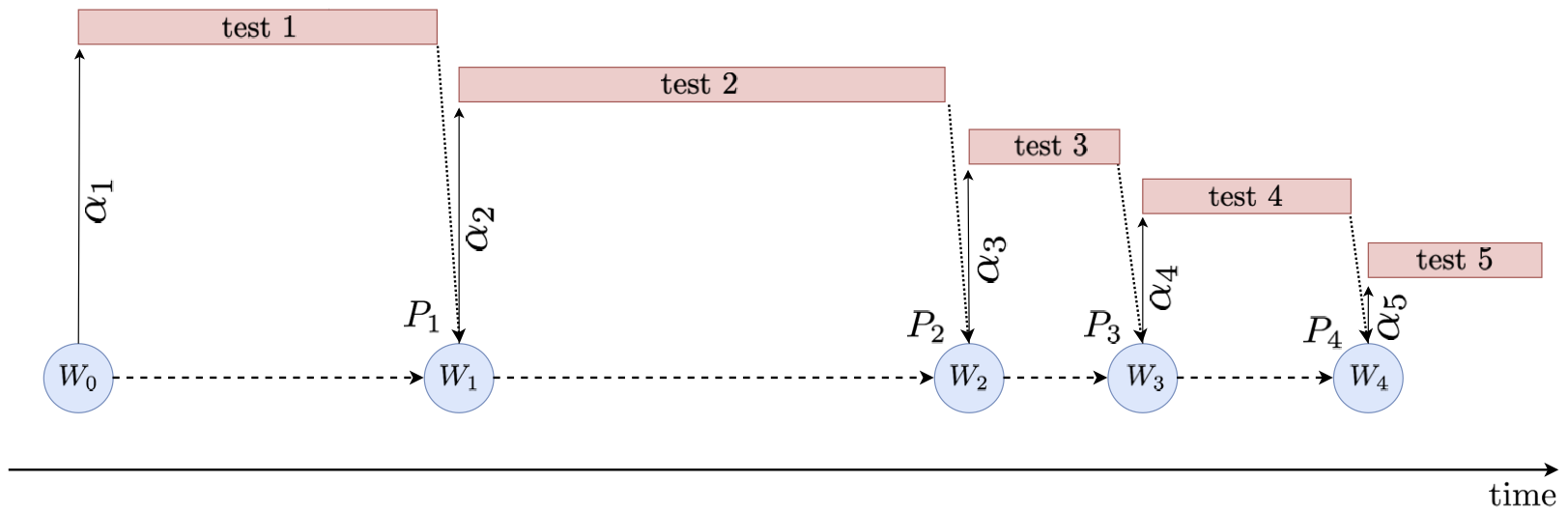

This circumstance is a problem for standard online FDR methodology. Namely, all existing online FDR algorithms assume tests are run synchronously, with no overlap in time; in other words, in order to determine a false positive rate for an upcoming test, online FDR methods need to know the outcomes of all previously started tests. The figure below depicts the difference between synchronous and asynchronous online testing.

For each time step $t$, $W_t$, $P_t$ and $\alpha_t$ are respectively the available wealth at the beginning of the $(t+1)$-th test, the p-value resulting from the $t$-th test, and the FPR of the $t$-th test.

Furthermore, the asynchronous nature of modern testing introduces patterns of dependence between p-values that do not conform to common assumptions. Prior work on online FDR either assumes perfect independence between p-values (overly optimistic), or arbitrary dependence between all tested p-values in the sequence (overly pessimistic). As data are commonly shared across different tests, the first assumption is clearly difficult to satisfy. In clinical trials, having a common control arm induces dependence; in A/B testing, many tests reuse data from the same shared pool, again causing dependence. On the other hand, it is not natural to assume that dependence spills over the entire p-value sequence; with time, older data and test outcomes become “stale” and no longer have direct influence on newly created tests. Modern testing calls for an intermediate notion of dependence, called local dependence, which assumes that p-values far enough apart in the sequence are independent, while any two that are close enough are likely to depend on each other.

In a recent manuscript [1], we developed FDR methods that confront both of these difficulties of large-scale testing. Our methods control FDR in sequential settings that are arbitrarily asynchronous, and/or yield p-values that are locally dependent. Interestingly, from the point of view of our analysis, both local dependence and asynchrony are solved via the same technical instrument, which we call conflict sets. More formally, each new test has a conflict set, which consists of all previously started tests whose outcome is not known (e.g. if there is asynchrony so they are still running), or is known but might have some leverage on the new test (e.g. if there is dependence). We show that computing the FPR of a new test while assuming “unfavorable” outcomes of the conflicting tests is the right approach to guaranteeing FDR control (we call this the principle of pessimism).
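One way to picture a conflict set is the sketch below. It is a reading of the verbal description above rather than the notation of [1]: tests are assumed to be indexed in order of their start times, and local dependence is modeled by a hypothetical `dependence_lag` parameter that marks the most recent tests as possibly dependent.

```python
def conflict_set(t, start_times, finish_times, dependence_lag=0):
    """Indices of earlier tests that conflict with test t.

    Test i < t conflicts if it is still running when test t starts, or if it
    is among the `dependence_lag` most recently started tests.
    """
    conflicts = set()
    for i in range(t):
        still_running = finish_times[i] > start_times[t]
        possibly_dependent = (t - i) <= dependence_lag
        if still_running or possibly_dependent:
            conflicts.add(i)
    return conflicts

# Hypothetical schedule: four tests with overlapping run times.
starts = [0.0, 1.0, 2.0, 5.0]
finishes = [4.0, 1.5, 6.0, 7.0]
print(conflict_set(3, starts, finishes))                    # asynchrony only
print(conflict_set(3, starts, finishes, dependence_lag=2))  # plus local dependence
```

With no overlap and no dependence lag, every conflict set is empty, which corresponds to the standard synchronous setting.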

It is worth pointing out that FDR control under conflict sets has to be more conservative by construction; to account for dependence between tests, as well as the uncertainty about the tests in progress, the FPRs have to be chosen appropriately smaller. That said, our methods are a strict generalization of prior work on online FDR; they interpolate between standard online FDR algorithms, when the conflict sets are empty, and the Bonferroni correction (also known as alpha-spending), when the conflict sets are arbitrarily large. The latter controls the familywise error rate, which is a more stringent error metric than FDR, under any assumption on how tests relate. This interpolation exposes a tradeoff between the overall rate of discovery per unit of real time and the effort of coordinating tests carefully enough to minimize dependence and asynchrony.

Summary

The replicability of hypothesis tests is largely in crisis, as the scale of modern applications has long outstripped classical testing methodology which is still in use. Moreover, prior efforts toward remedying this problem have neglected the fact that testing is massively asynchronous, and hence the existing solutions for boosting reproducibility have not been suitable for many common large-scale testing schemes. Motivated by this observation, we developed methods that control the false discovery rate in complex asynchronous scenarios, allowing statisticians to perform hypothesis tests with a small fraction of false discoveries, and with minimal explicit coordination between tests.

This article was initially published on the BAIR blog, and appears here with the authors’ permission.

References

[1] Zrnic, T., Ramdas, A., & Jordan, M. I. (2018). Asynchronous Online Testing of Multiple Hypotheses. arXiv preprint arXiv:1812.05068.

¹ Valid p-values can also be stochastically larger than uniform, which is a more general condition. For simplicity, we take them to be uniform in this text; the “punchline” remains the same either way.

Robots track moving objects with unprecedented precision

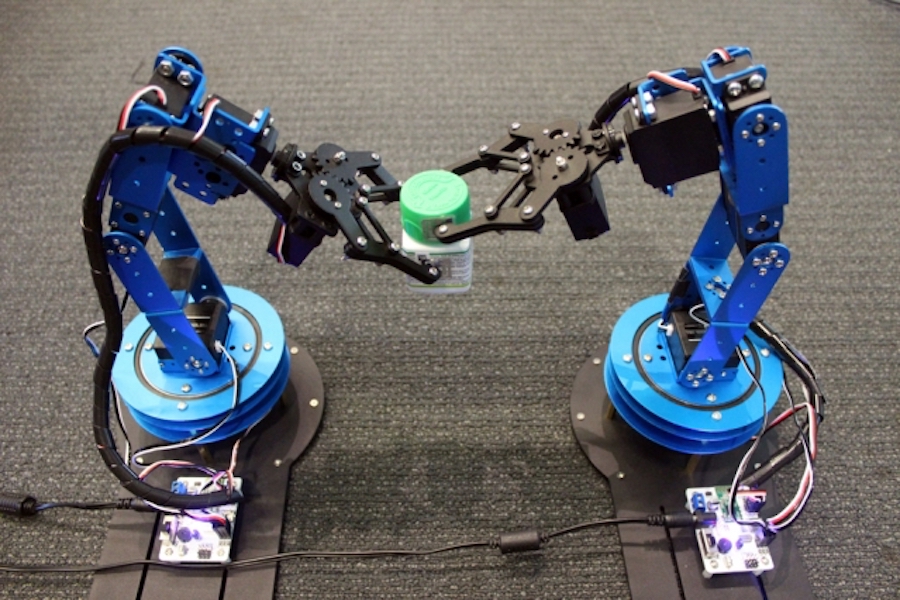

Photo courtesy of the researchers

A novel system developed at MIT uses RFID tags to help robots home in on moving objects with unprecedented speed and accuracy. The system could enable greater collaboration and precision by robots working on packaging and assembly, and by swarms of drones carrying out search-and-rescue missions.

In a paper being presented next week at the USENIX Symposium on Networked Systems Design and Implementation, the researchers show that robots using the system can locate tagged objects within 7.5 milliseconds, on average, and with an error of less than a centimeter.

In the system, called TurboTrack, an RFID (radio-frequency identification) tag can be applied to any object. A reader sends a wireless signal that reflects off the RFID tag and other nearby objects, and rebounds to the reader. An algorithm sifts through all the reflected signals to find the RFID tag’s response. Final computations then leverage the RFID tag’s movement — even though this usually decreases precision — to improve its localization accuracy.

The researchers say the system could replace computer vision for some robotic tasks. As with its human counterpart, computer vision is limited by what it can see, and it can fail to notice objects in cluttered environments. Radio frequency signals have no such restrictions: They can identify targets without visualization, within clutter and through walls.

To validate the system, the researchers attached one RFID tag to a cap and another to a bottle. A robotic arm located the cap and placed it onto the bottle, held by another robotic arm. In another demonstration, the researchers tracked RFID-equipped nanodrones during docking, maneuvering, and flying. In both tasks, the system was as accurate and fast as traditional computer-vision systems, while working in scenarios where computer vision fails, the researchers report.

“If you use RF signals for tasks typically done using computer vision, not only do you enable robots to do human things, but you can also enable them to do superhuman things,” says Fadel Adib, an assistant professor and principal investigator in the MIT Media Lab, and founding director of the Signal Kinetics Research Group. “And you can do it in a scalable way, because these RFID tags are only 3 cents each.”

In manufacturing, the system could enable robot arms to be more precise and versatile in, say, picking up, assembling, and packaging items along an assembly line. Another promising application is using handheld “nanodrones” for search and rescue missions. Nanodrones currently use computer vision and methods to stitch together captured images for localization purposes. These drones often get confused in chaotic areas, lose each other behind walls, and can’t uniquely identify each other. This all limits their ability to, say, spread out over an area and collaborate to search for a missing person. Using the researchers’ system, nanodrones in swarms could better locate each other, for greater control and collaboration.

“You could enable a swarm of nanodrones to form in certain ways, fly into cluttered environments, and even environments hidden from sight, with great precision,” says first author Zhihong Luo, a graduate student in the Signal Kinetics Research Group.

The other Media Lab co-authors on the paper are visiting student Qiping Zhang, postdoc Yunfei Ma, and Research Assistant Manish Singh.

Super resolution

Adib’s group has been working for years on using radio signals for tracking and identification purposes, such as detecting contamination in bottled foods, communicating with devices inside the body, and managing warehouse inventory.

Similar systems have attempted to use RFID tags for localization tasks. But these come with trade-offs in either accuracy or speed. To be accurate, it may take them several seconds to find a moving object; to increase speed, they lose accuracy.

The challenge was achieving both speed and accuracy simultaneously. To do so, the researchers drew inspiration from an imaging technique called “super-resolution imaging.” These systems stitch together images from multiple angles to achieve a finer-resolution image.

“The idea was to apply these super-resolution systems to radio signals,” Adib says. “As something moves, you get more perspectives in tracking it, so you can exploit the movement for accuracy.”

The system combines a standard RFID reader with a “helper” component that’s used to localize radio frequency signals. The helper shoots out a wideband signal comprising multiple frequencies, building on a modulation scheme used in wireless communication, called orthogonal frequency-division multiplexing.

The system captures all the signals rebounding off objects in the environment, including the RFID tag. One of those signals carries a signature specific to that particular RFID tag, because RFID tags reflect and absorb an incoming signal in a certain pattern, corresponding to bits of 0s and 1s, that the system can recognize.

Because these signals travel at the speed of light, the system can compute a “time of flight” (measuring distance by calculating the time it takes a signal to travel between a transmitter and receiver) to gauge the location of the tag, as well as the other objects in the environment. But this provides only a ballpark localization figure, not subcentimeter precision.
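The basic time-of-flight arithmetic can be sketched as follows. This is a generic illustration, not TurboTrack's actual computation. It assumes the measured time covers the round trip from the reader to the reflector and back, and the function name and example timings are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def round_trip_distance_m(time_of_flight_s):
    """Distance to a reflector, given the round-trip travel time of the signal."""
    return SPEED_OF_LIGHT_M_PER_S * time_of_flight_s / 2.0

print(round_trip_distance_m(20e-9))  # a 20 ns round trip: reflector about 3 m away
print(round_trip_distance_m(1e-9))   # distance shift caused by 1 ns of timing error
```

A single nanosecond of timing error shifts the estimate by roughly 15 centimeters, which gives a sense of why timing alone yields only a coarse estimate.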

Leveraging movement

To zoom in on the tag’s location, the researchers developed what they call a “space-time super-resolution” algorithm.

The algorithm combines the location estimations for all rebounding signals, including the RFID signal, which it determined using time of flight. Using some probability calculations, it narrows down that group to a handful of potential locations for the RFID tag.

As the tag moves, its signal angle slightly alters — a change that also corresponds to a certain location. The algorithm then can use that angle change to track the tag’s distance as it moves. By constantly comparing that changing distance measurement to all other distance measurements from other signals, it can find the tag in a three-dimensional space. This all happens in a fraction of a second.

“The high-level idea is that, by combining these measurements over time and over space, you get a better reconstruction of the tag’s position,” Adib says.

The work was sponsored, in part, by the National Science Foundation.

What do California disengagement reports tell us?

California has released the disengagement reports the law requires companies to file, and it’s a lot of data. Also worth noting is Waymo’s own blog post about its report, which says its miles per disengagement improved from 5,600 to 11,000.

Fortunately some hard-working redditors and others have done some summation of the data, including this one from Last Driver’s Licence Holder. Most notable are an absolutely ridiculous number from Apple, and that only Waymo and Cruise have numbers suggesting real capability, with Zoox coming in from behind.

The problem, of course, is that “disengagements” is a messy statistic. Different teams report different things. Different disengagements have different importance. And it matters how complex the road you are driving is. (Cruise likes to make a big point of that.)

Safety drivers are trained to disengage if they feel at all uncomfortable. This means that they will often disengage when it is not actually needed. So it’s important to do what Waymo does, namely to play back the situation in a simulator to see what would have happened if the driver had not taken over. That playback can reveal if it was:

- Paranoia (as expected) from the safety driver, but no actual issue.

- A tricky situation that is the fault of another driver.

- A situation where the vehicle would have done something undesired, but not dangerous.

- A situation like the above, but dangerous, though nothing would have actually happened. Example — temporarily weaving out of a lane when nobody else is there.

- A situation which would have resulted in a “contact” — factored with the severity of the contact, from nothing, to ding, to crash, to injury, to fatality.

A real measurement involves a complex mix of all these, and I’ll be writing up more about how we could possibly score these.

We know the numbers for these events for humans thanks to “naturalistic” driving studies and other factors. Turns out that humans are making mistakes all the time. We’re constantly not paying attention to something on the road we should be looking at, but we get away with it. We constantly find ourselves drifting out of a lane, or find we must brake harder than we would want to. But mostly, nothing happens. Robots aren’t handled that way — any mistake is a serious issue. Robocars will have fewer crashes because “somebody else was in the wrong place when I wasn’t looking.” Their crashes will often have causes that are foreign to humans.

In Waymo’s report you can actually see a few disengagements because the perception system didn’t see something. That’s definitely something to investigate and fix, but humans don’t see something very frequently, and we still do tolerably well.

A summary of the numbers for humans on US roads:

- Some sort of “ding” accident every 100,000 miles of driving (roughly).

- An accident reported to insurance every 250,000 miles.

- An accident reported to police every 500,000 miles.

- An injury accident every 1.5M miles.

- A fatality every 80M miles of all driving.

- A highway fatality every 180M miles of highway driving.

- A pedestrian killed every 600M miles of total driving.
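To put these figures on a common scale, the small sketch below converts some of the “one event every X miles” numbers from the list above into events per 100 million miles. The labels are shorthand invented for this example, and the inputs are the rough figures quoted here, not precise statistics.

```python
# Rough miles between events for human drivers, taken from the list above.
MILES_PER_EVENT = {
    "ding": 100_000,
    "insurance claim": 250_000,
    "police report": 500_000,
    "injury": 1_500_000,
    "fatality": 80_000_000,
}

def events_per_100m_miles(miles_per_event):
    """Convert 'one event every X miles' into events per 100 million miles."""
    return 100_000_000 / miles_per_event

for name, miles in MILES_PER_EVENT.items():
    print(f"{name}: {events_per_100m_miles(miles):,.2f} per 100M miles")
```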

Software disengagements

The other very common type of disengagement is a software disengagement. Here, the software decides to disengage because it detects something is going wrong. These are quite often not safety incidents. Modern software is loaded with diagnostic tests, always checking if things are going as expected. When one fails, most software just logs a warning, or “throws an exception” to code that handles the problem. Most of the time, that code does indeed handle the problem, and there is no safety incident. But during testing, you want to disengage to be on the safe side. Once again, the team examines the warning/exception to find out the cause and tries to fix it and figure out how serious it would have been.
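The pattern looks roughly like the sketch below. Everything in it is hypothetical (the diagnostic, the threshold, the names); it only illustrates the idea of a failed self-check that is logged and handled in normal operation but triggers a disengagement during testing.

```python
import logging

logger = logging.getLogger("toy_vehicle")

class DiagnosticError(Exception):
    """Raised when a self-check finds an unexpected condition."""

def check_sensor_rate(frames_per_second, minimum=10.0):
    # Hypothetical diagnostic: the sensor should deliver frames fast enough.
    if frames_per_second < minimum:
        raise DiagnosticError(f"sensor rate {frames_per_second} below {minimum}")

def control_step(frames_per_second, testing=True):
    """Return 'engaged' or 'disengaged' after running the diagnostic."""
    try:
        check_sensor_rate(frames_per_second)
    except DiagnosticError as err:
        logger.warning("diagnostic failed: %s", err)
        if testing:
            # During testing, hand over to the safety driver and investigate later.
            return "disengaged"
        # Outside testing, the handler would attempt to recover and carry on.
    return "engaged"

print(control_step(30.0))
print(control_step(5.0))
print(control_step(5.0, testing=False))
```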

That’s why Waymo’s 11,000 miles is a pretty good number. They have not published it in a long time, but their number of miles per “necessary intervention” is much higher than that. In fact, we can bet that in the Phoenix area, where they have authorized limited operations with no safety driver, it’s better than the numbers above.

DC Motors as Generators

How Robotics & IoT Help Sustain Triple Bottom Line

#280: Semantics in Robotics, with Amy Loutfi

In this episode, Audrow Nash interviews Amy Loutfi, a professor at Örebro University, about how semantic representations can be used to help robots reason about the world. Loutfi discusses semantics in general, as well as how semantics have been used for a simulated quad rotor to do path planning within constraints.

Amy Loutfi

Amy Loutfi is head of the Center for Applied Autonomous Sensor Systems (www.aass.oru.se) at Örebro University. She is also a professor in Information Technology at Örebro University. She received her Ph.D. in Computer Science with a focus on the integration of artificial olfaction on robotic and intelligent systems. She currently leads one of the labs at the Center, the machine perception and interaction lab (www.mpi.aass.oru.se). Her general interests are in the area of integration of artificial intelligence with autonomous systems, and over the years she has looked into applications where robots closely interact with humans in both industry and domestic environments.
