
Robot takes contact-free measurements of patients’ vital signs

By Anne Trafton

During the current coronavirus pandemic, one of the riskiest parts of a health care worker’s job is assessing people who have symptoms of Covid-19. Researchers from MIT, Boston Dynamics, and Brigham and Women’s Hospital hope to reduce that risk by using robots to remotely measure patients’ vital signs.

The robots, which are controlled by a handheld device, can also carry a tablet that allows doctors to ask patients about their symptoms without being in the same room.

“In robotics, one of our goals is to use automation and robotic technology to remove people from dangerous jobs,” says Henwei Huang, an MIT postdoc. “We thought it should be possible for us to use a robot to remove the health care worker from the risk of directly exposing themselves to the patient.”

Using four cameras mounted on a dog-like robot developed by Boston Dynamics, the researchers have shown that they can measure skin temperature, breathing rate, pulse rate, and blood oxygen saturation in healthy patients, from a distance of 2 meters. They are now making plans to test it in patients with Covid-19 symptoms.

“We are thrilled to have forged this industry-academia partnership in which scientists with engineering and robotics expertise worked with clinical teams at the hospital to bring sophisticated technologies to the bedside,” says Giovanni Traverso, an MIT assistant professor of mechanical engineering, a gastroenterologist at Brigham and Women’s Hospital, and the senior author of the study.

The researchers have posted a paper on their system on the preprint server techRxiv, and have submitted it to a peer-reviewed journal. Huang is one of the lead authors of the study, along with Peter Chai, an assistant professor of emergency medicine at Brigham and Women’s Hospital, and Claas Ehmke, a visiting scholar from ETH Zurich.

Measuring vital signs

When Covid-19 cases began surging in Boston in March, many hospitals, including Brigham and Women’s, set up triage tents outside their emergency departments to evaluate people with Covid-19 symptoms. One major component of this initial evaluation is measuring vital signs, including body temperature.

The MIT and BWH researchers came up with the idea to use robotics to enable contactless monitoring of vital signs, to allow health care workers to minimize their exposure to potentially infectious patients. They decided to use existing computer vision technologies that can measure temperature, breathing rate, pulse, and blood oxygen saturation, and worked to make them mobile.

To achieve that, they used a robot known as Spot, which can walk on four legs, similarly to a dog. Health care workers can maneuver the robot to wherever patients are sitting, using a handheld controller. The researchers mounted four different cameras onto the robot — an infrared camera plus three monochrome cameras that filter different wavelengths of light.

The researchers developed algorithms that allow them to use the infrared camera to measure both elevated skin temperature and breathing rate. For body temperature, the camera measures skin temperature on the face, and the algorithm correlates that temperature with core body temperature. The algorithm also takes into account the ambient temperature and the distance between the camera and the patient, so that measurements can be taken from different distances, under different weather conditions, and still be accurate.

Measurements from the infrared camera can also be used to calculate the patient’s breathing rate. As the patient breathes in and out, wearing a mask, their breath changes the temperature of the mask. Measuring this temperature change allows the researchers to calculate how rapidly the patient is breathing.
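Turning a periodic temperature change into a rate is essentially a frequency-estimation problem. The sketch below is not the researchers' algorithm, because the article does not describe it in detail. It assumes the mask temperature has already been extracted from the infrared frames as a uniformly sampled series. The function name, the 0.1 to 0.7 Hz search band and the step size are illustrative choices. The function finds the dominant frequency with a direct DFT and converts it to breaths per minute:

```python
import math

def breathing_rate_bpm(signal, fs, f_min=0.1, f_max=0.7, step=0.005):
    """Estimate breaths per minute from a uniformly sampled temperature trace.

    signal: mask-region temperatures (any unit), sampled at fs Hz.
    f_min/f_max: assumed plausible breathing band in Hz (6 to 42 breaths/min).
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # drop the constant temperature offset
    best_f, best_power = f_min, -1.0
    n_steps = int(round((f_max - f_min) / step))
    for k in range(n_steps + 1):
        f = f_min + k * step
        # Direct DFT at frequency f: project the signal onto cos and sin.
        re = sum(x * math.cos(2 * math.pi * f * i / fs) for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * f * i / fs) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_f, best_power = f, power
    return best_f * 60.0  # cycles per second -> breaths per minute
```

A real pipeline would also need to locate the mask in each frame and reject motion artifacts. Those steps are outside this sketch.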

The three monochrome cameras each filter a different wavelength of light — 670, 810, and 880 nanometers. These wavelengths allow the researchers to measure the slight color changes that result when hemoglobin in blood cells binds to oxygen and flows through blood vessels. The researchers’ algorithm uses these measurements to calculate both pulse rate and blood oxygen saturation.
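A common way to estimate oxygen saturation from two wavelengths is the pulse-oximetry "ratio of ratios". This method compares the pulsatile (AC) part of the signal with its steady (DC) part at a red wavelength and at an infrared wavelength. The sketch below assumes that the 670 nm channel plays the red role and the 880 nm channel the infrared role. It uses peak-to-peak swing as a crude AC estimate. This is a generic illustration, not the study's method. The calibration constants `a` and `b` are hypothetical placeholders that a real system would fit against reference measurements:

```python
def ratio_of_ratios(red, ir):
    """Compute R = (AC_red / DC_red) / (AC_ir / DC_ir) from two intensity traces."""
    def ac_dc(trace):
        dc = sum(trace) / len(trace)   # steady (non-pulsatile) level
        ac = max(trace) - min(trace)   # peak-to-peak pulsatile swing (crude AC estimate)
        return ac, dc

    ac_r, dc_r = ac_dc(red)
    ac_i, dc_i = ac_dc(ir)
    return (ac_r / dc_r) / (ac_i / dc_i)

def spo2_estimate(r, a, b):
    """Hypothetical linear calibration SpO2 ~ a - b * R; a and b must come from calibration."""
    return a - b * r
```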

“We didn’t really develop new technology to do the measurements,” Huang says. “What we did is integrate them together very specifically for the Covid application, to analyze different vital signs at the same time.”

Continuous monitoring

In this study, the researchers performed the measurements on healthy volunteers, and they are now making plans to test their robotic approach in people who are showing symptoms of Covid-19, in a hospital emergency department.

While in the near term, the researchers plan to focus on triage applications, in the longer term, they envision that the robots could be deployed in patients’ hospital rooms. This would allow the robots to continuously monitor patients and also allow doctors to check on them, via tablet, without having to enter the room. Both applications would require approval from the U.S. Food and Drug Administration.

The research was funded by the MIT Department of Mechanical Engineering, the Karl van Tassel (1925) Career Development Professorship, and Boston Dynamics.


Can RL from pixels be as efficient as RL from state?

By Misha Laskin, Aravind Srinivas, Kimin Lee, Adam Stooke, Lerrel Pinto, Pieter Abbeel

A remarkable characteristic of human intelligence is our ability to learn tasks quickly. Most humans can learn reasonably complex skills like tool-use and gameplay within just a few hours, and understand the basics after only a few attempts. This suggests that data-efficient learning may be a meaningful part of developing broader intelligence.

On the other hand, Deep Reinforcement Learning (RL) algorithms can achieve superhuman performance on games like Atari, Starcraft, Dota, and Go, but they require large amounts of data to get there. Achieving superhuman performance on Dota took over 10,000 human years of gameplay. Unlike in simulation, skill acquisition in the real world is constrained by wall-clock time. To see breakthroughs similar to AlphaGo in real-world settings, such as robotic manipulation and autonomous vehicle navigation, RL algorithms need to be data-efficient: they need to learn effective policies within a reasonable amount of time.

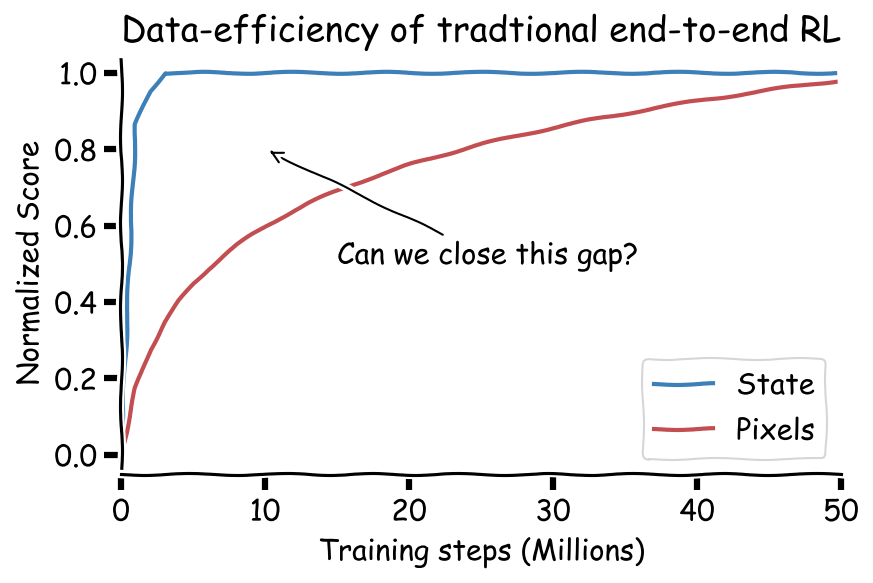

To date, it has been commonly assumed that RL operating on coordinate state is significantly more data-efficient than pixel-based RL. However, coordinate state is just a human-crafted representation of visual information. In principle, if the environment is fully observable, we should also be able to learn representations that capture the state.

Recent advances in data-efficient RL

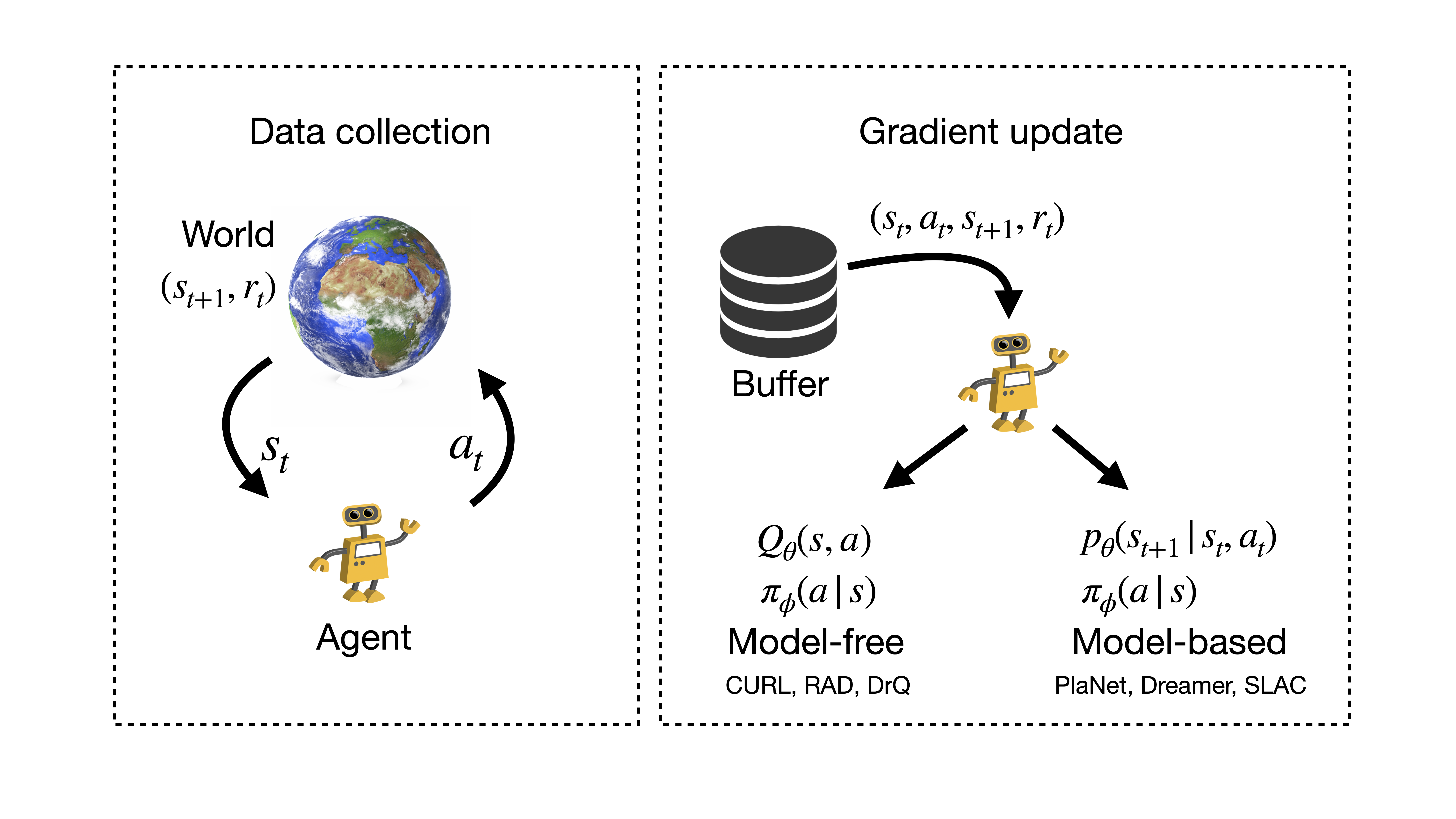

Recently, there have been several algorithmic advances in Deep RL that have improved learning policies from pixels. The methods fall into two categories: (i) model-free algorithms and (ii) model-based (MBRL) algorithms. The main difference between the two is that model-based methods learn a forward transition model $p(s_{t+1} \mid s_t, a_t)$, while model-free ones do not. Learning a model has several distinct advantages. The model can be used to plan through action sequences, to generate fictitious rollouts as a form of data augmentation, and to temporally shape the latent space.

However, a distinct disadvantage of model-based RL is complexity. Model-based methods operating on pixels require learning a model, an encoding scheme, a policy, various auxiliary tasks such as reward prediction, and stitching these parts together to make a whole algorithm. Visual MBRL methods have a lot of moving parts and tend to be less stable. On the other hand, model-free methods such as Deep Q Networks (DQN), Proximal Policy Optimization (PPO), and Soft Actor-Critic (SAC) learn a policy in an end-to-end manner optimizing for one objective. While traditionally, the simplicity of model-free RL has come at the cost of sample-efficiency, recent improvements have shown that model-free methods can in fact be more data-efficient than MBRL and, more surprisingly, result in policies that are as data efficient as policies trained on coordinate state. In what follows we will focus on these recent advances in pixel-based model-free RL.

Why now?

Over the last few years, two trends have converged to make data-efficient visual RL possible. First, end-to-end RL algorithms have become increasingly stable through algorithms like Rainbow DQN, TD3, and SAC. Second, there has been tremendous progress in label-efficient learning for image classification using contrastive unsupervised representations (CPCv2, MoCo, SimCLR) and data augmentation (MixUp, AutoAugment, RandAugment). In recent work from our lab at BAIR (CURL, RAD), we combined contrastive learning and data augmentation techniques from computer vision with model-free RL. This showed significant data-efficiency gains on common RL benchmarks like Atari, DeepMind control, ProcGen, and OpenAI gym.

Contrastive Learning in RL Setting

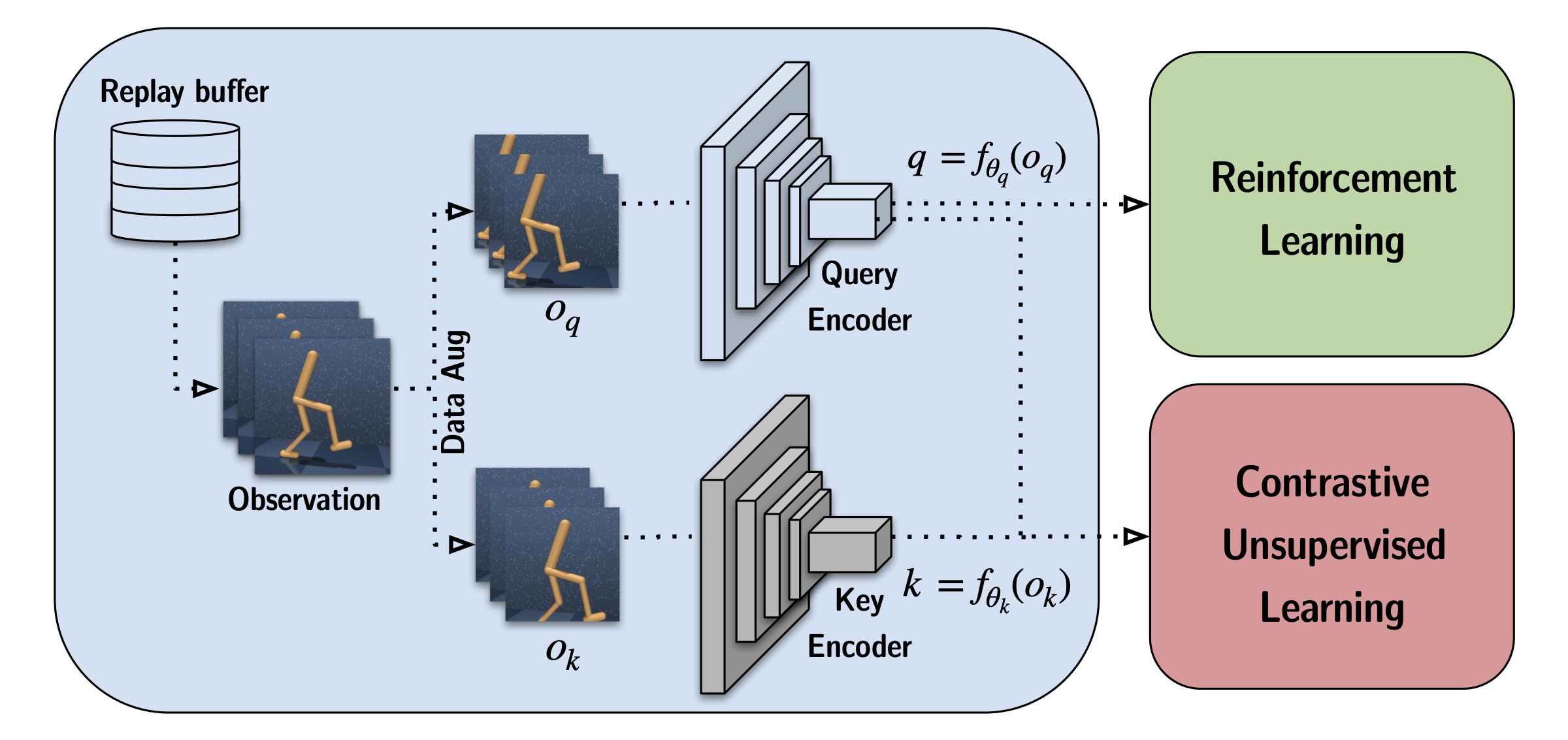

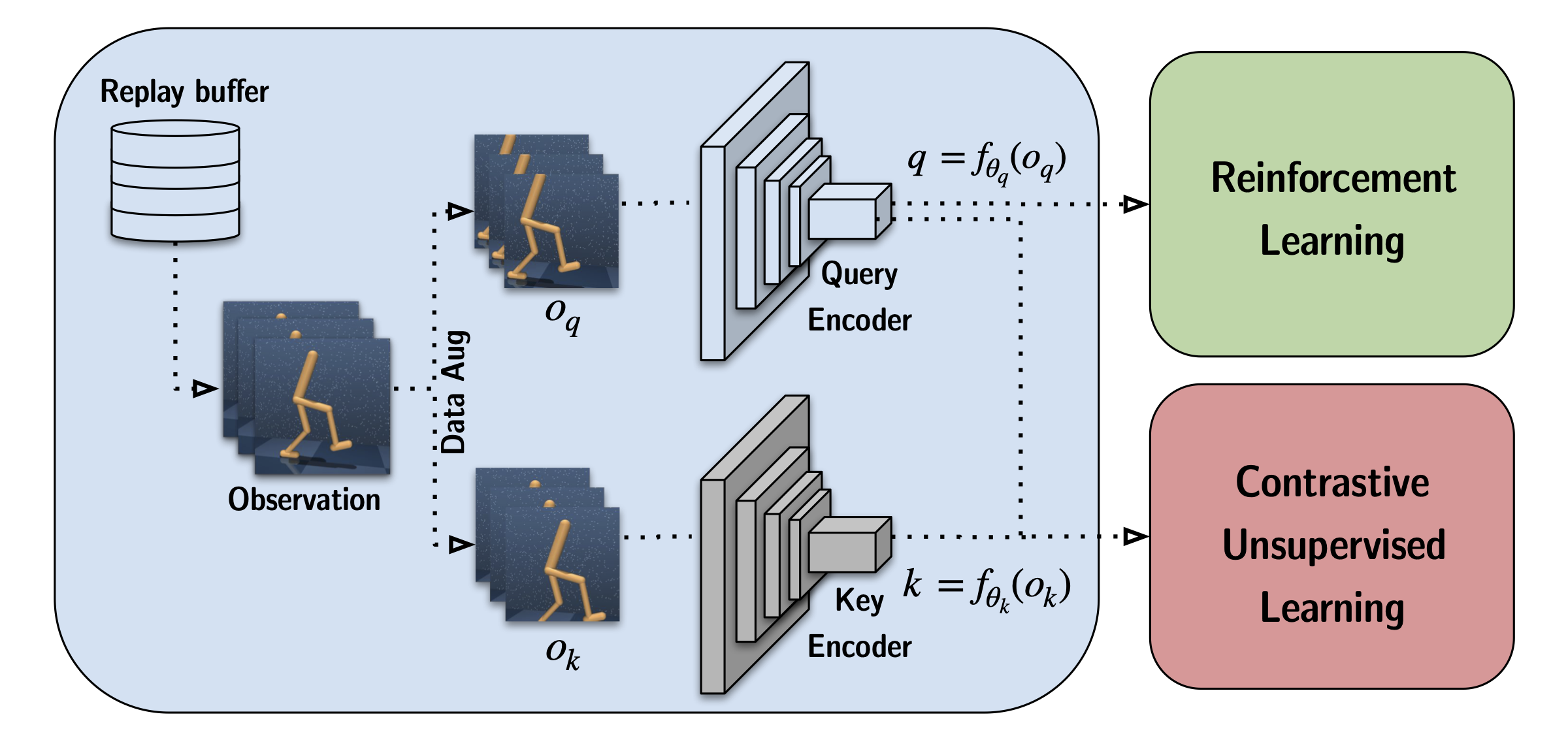

CURL was inspired by recent advances in contrastive representation learning in computer vision (CPC, CPCv2, MoCo, SimCLR). Contrastive learning aims to maximize / minimize similarity between two similar / dissimilar representations of an image. For example, in MoCo and SimCLR, the objective is to maximize agreement between two data-augmented versions of the same image and minimize it between all other images in the dataset, where optimization is performed with a Noise Contrastive Estimation loss. Through data augmentation, these representations internalize powerful inductive biases about invariance in the dataset.
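The contrastive objective can be sketched in a few lines. This is a minimal, framework-free InfoNCE-style loss. It assumes that `queries[i]` and `keys[i]` come from two augmentations of the same image, and that every other key in the batch serves as a negative. For simplicity it uses a plain dot product as the similarity. Real implementations work on batched tensors and may use a different similarity function:

```python
import math

def info_nce_loss(queries, keys):
    """Average InfoNCE-style loss over a batch of embedding pairs.

    queries[i] and keys[i] form the positive pair; keys[j] for j != i are negatives.
    """
    total = 0.0
    for i, q in enumerate(queries):
        # Similarity of this query to every key in the batch (dot product).
        logits = [sum(a * b for a, b in zip(q, k)) for k in keys]
        # Numerically stable log-sum-exp for the softmax normaliser.
        m = max(logits)
        log_norm = m + math.log(sum(math.exp(z - m) for z in logits))
        # Negative log-probability that the positive key is picked out.
        total += -(logits[i] - log_norm)
    return total / len(queries)
```

The loss is low when each query is most similar to its own augmented view, and high when it is closer to another image's key. This is the "maximize agreement / minimize agreement" behaviour described above.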

In the RL setting, we opted for a similar approach and adopted the momentum contrast (MoCo) mechanism, a popular contrastive learning method in computer vision that uses a moving average of the query encoder parameters (momentum) to encode the keys to stabilize training. There are two main differences in setup: (i) the RL dataset changes dynamically and (ii) visual RL is typically performed on stacks of frames to access temporal information like velocities. Rather than separating contrastive learning from the downstream task as done in vision, we learn contrastive representations jointly with the RL objective. Instead of discriminating across single images, we discriminate across the stack of frames.
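The momentum mechanism itself is an exponential moving average of the query encoder's parameters. The sketch below treats encoder parameters as flat lists of floats for readability; in practice these are tensors. The `momentum` value of 0.99 is a hypothetical choice:

```python
def momentum_update(key_params, query_params, momentum=0.99):
    """Return new key-encoder parameters as an exponential moving average.

    key <- momentum * key + (1 - momentum) * query, element by element.
    The key encoder is updated only by this rule, never by gradients.
    """
    return [momentum * k + (1.0 - momentum) * q for k, q in zip(key_params, query_params)]

# Usage: the key encoder slowly trails a query encoder whose weights jumped to 1.0.
key = [0.0, 0.0]
query = [1.0, 1.0]
for _ in range(500):
    key = momentum_update(key, query, momentum=0.99)
```

A momentum close to 1 makes the key encoder change slowly. This keeps the keys consistent from one training step to the next.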

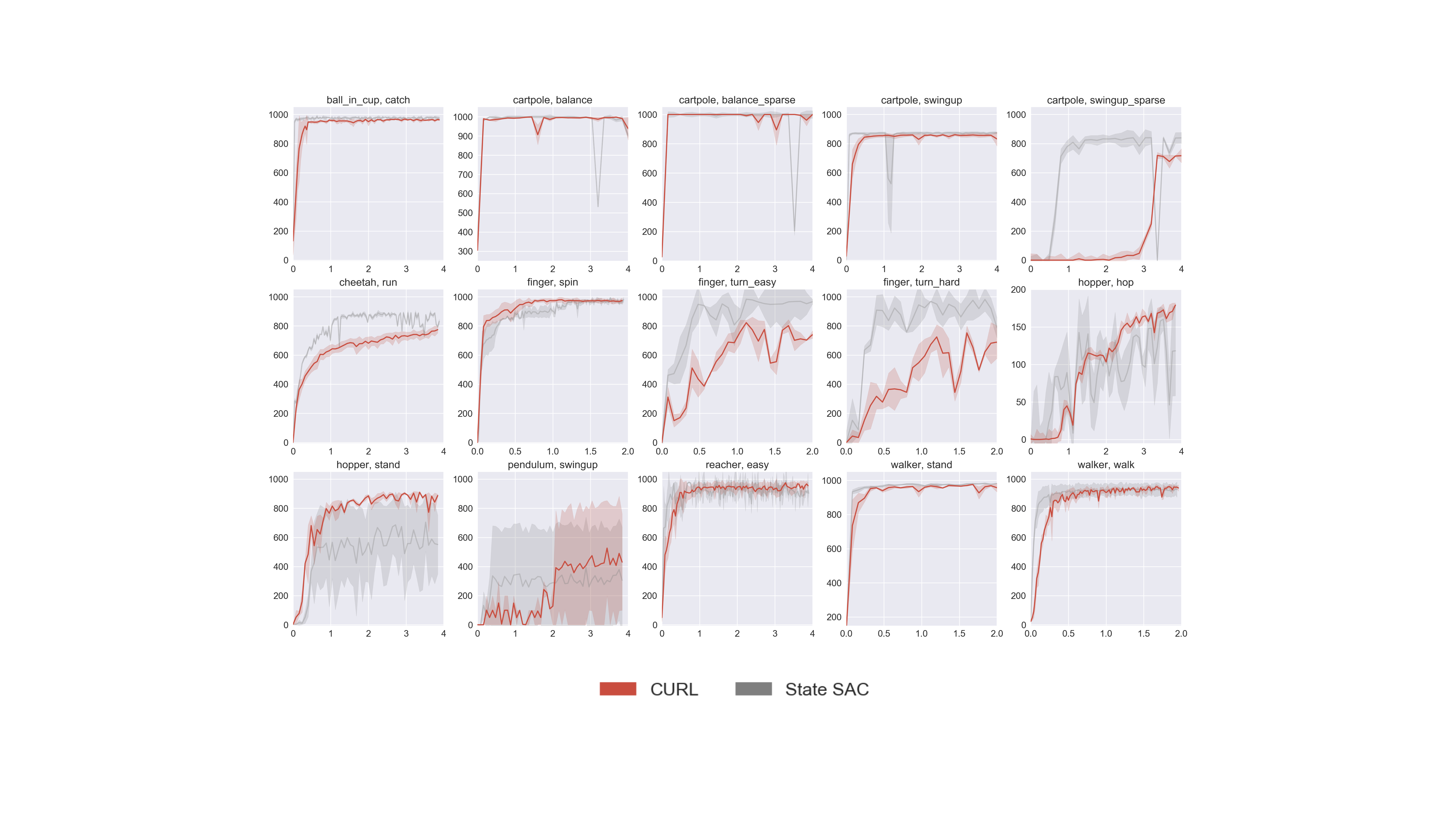

By combining contrastive learning with Deep RL in the above manner we found, for the first time, that pixel-based RL can be nearly as data-efficient as state-based RL on the DeepMind control benchmark suite. In the figure below, we show learning curves for DeepMind control tasks where contrastive learning is coupled with SAC (red) and compared to state-based SAC (gray).

We also demonstrate data-efficiency gains on the Atari 100k step benchmark. In this setting, we couple CURL with an Efficient Rainbow DQN (Eff. Rainbow) and show that CURL outperforms the prior state-of-the-art (Eff. Rainbow, SimPLe) on 20 out of 26 games tested.

RL with Data Augmentation

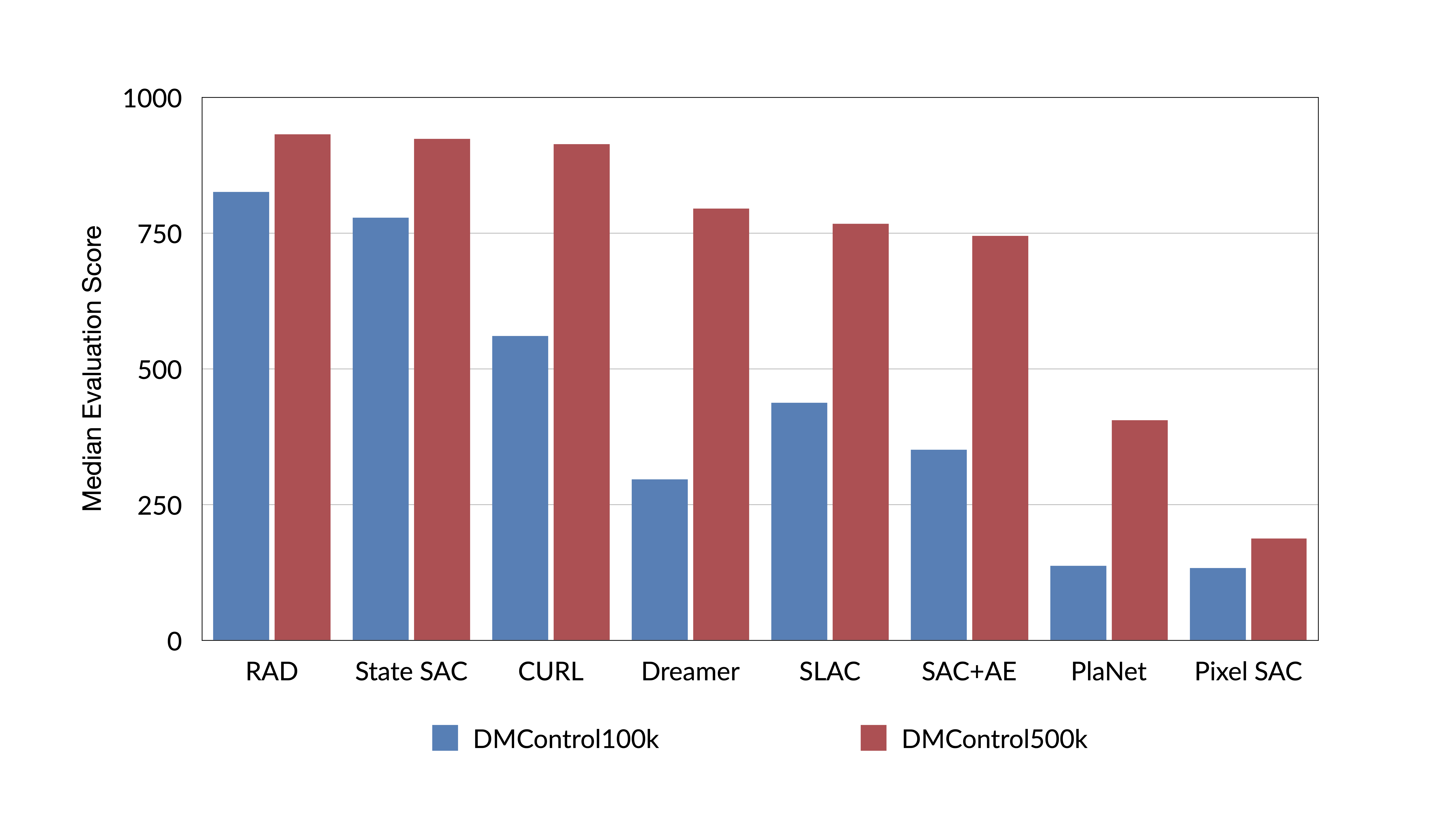

Given that random cropping was a crucial component in CURL, it is natural to ask: can we achieve the same results with data augmentation alone? In Reinforcement Learning with Augmented Data (RAD), we performed the first extensive study of data augmentation in Deep RL and found that for the DeepMind control benchmark the answer is yes. Data augmentation alone can outperform prior competing methods and can match, and sometimes surpass, the efficiency of state-based RL. Concurrent work (DrQ) showed similar results.
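Random cropping on a stack of frames can be sketched as follows. Here the crop offset is sampled once per stack and applied to every frame, which preserves the motion between frames. This choice is an assumption for the example. Frames are plain nested lists, and the function name is illustrative:

```python
import random

def random_crop_stack(frames, out_h, out_w, rng=random):
    """Crop every frame in a stack to out_h x out_w at one shared random offset.

    frames: list of 2D frames, each a list of rows of equal length.
    """
    h, w = len(frames[0]), len(frames[0][0])
    top = rng.randint(0, h - out_h)    # randint includes both endpoints
    left = rng.randint(0, w - out_w)
    return [[row[left:left + out_w] for row in frame[top:top + out_h]] for frame in frames]
```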

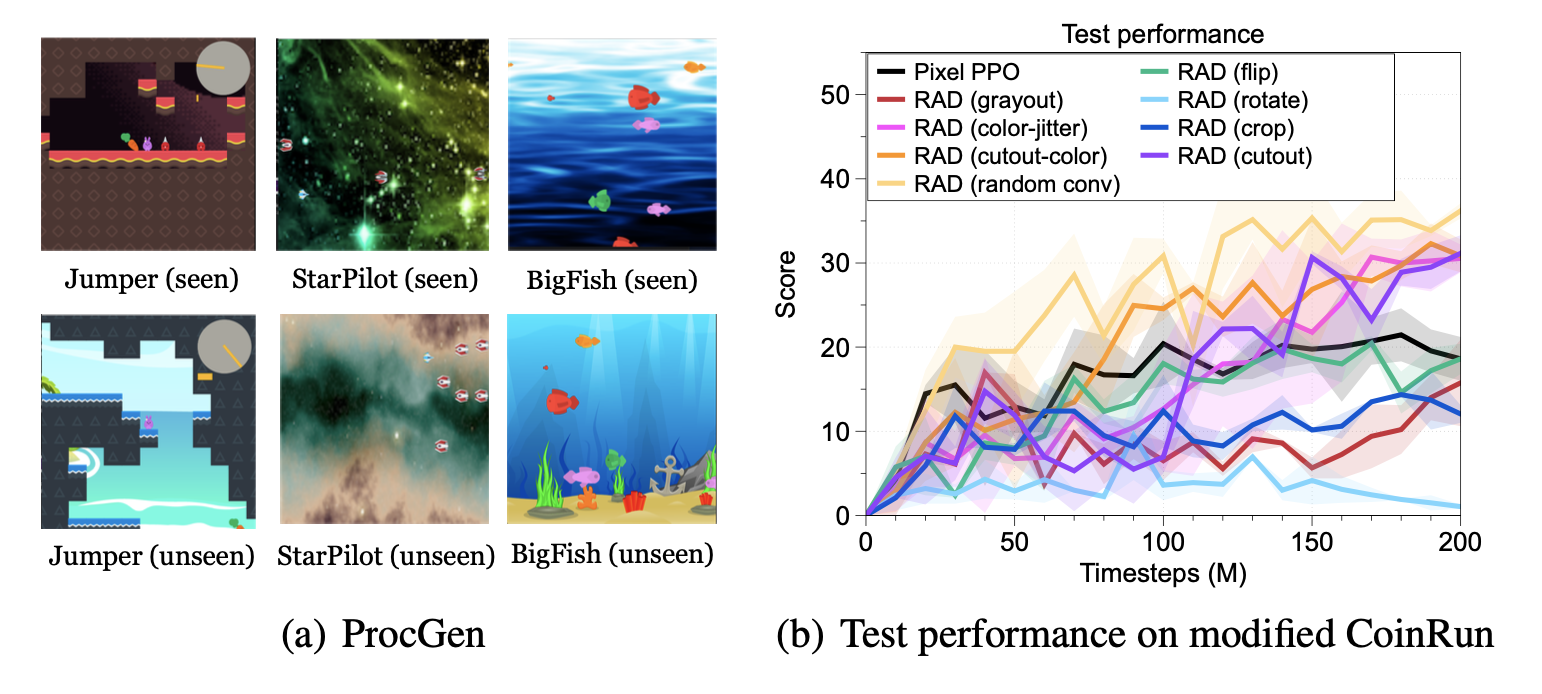

We found that RAD also improves generalization on the ProcGen game suite, showing that data augmentation is not limited to improving data-efficiency but also helps RL methods generalize to test-time environments.

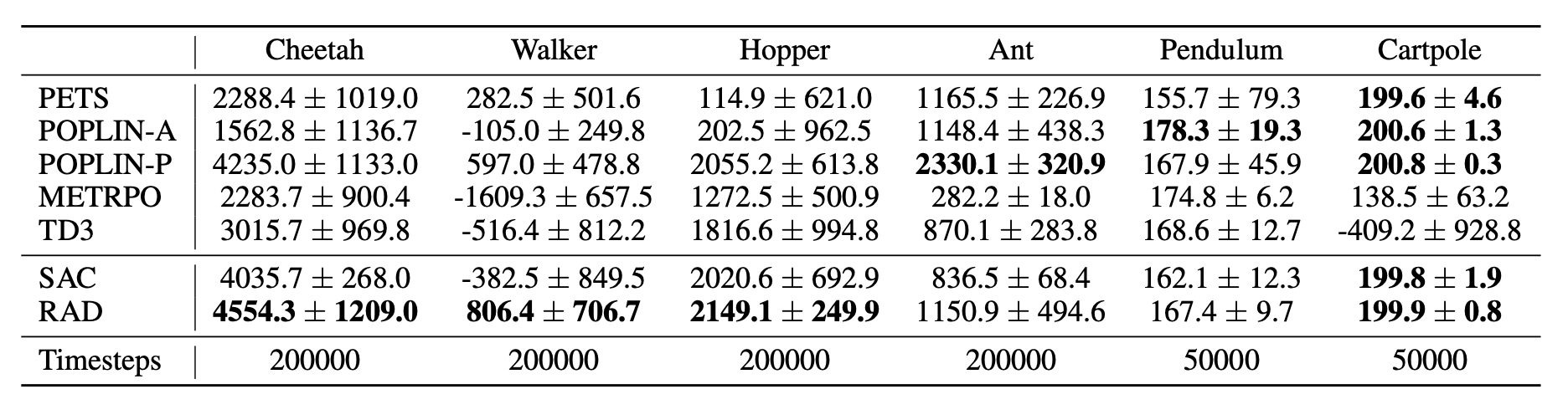

If data augmentation works for pixel-based RL, can it also improve state-based methods? We introduced a new state-based augmentation — random amplitude scaling — and showed that simple RL with state-based data augmentation achieves state-of-the-art results on OpenAI gym environments and outperforms more complex model-free and model-based RL algorithms.
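Random amplitude scaling can be sketched as multiplying the state vector by a random scalar. The sampling range of 0.6 to 1.2 and the use of a single scalar per state vector are assumptions for this example, not values taken from the post:

```python
import random

def random_amplitude_scale(state, low=0.6, high=1.2, rng=random):
    """Multiply every coordinate of a state vector by one scalar z ~ Uniform(low, high)."""
    z = rng.uniform(low, high)
    return [z * s for s in state]
```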

Contrastive Learning vs Data Augmentation

If data augmentation with RL performs so well, do we need unsupervised representation learning? RAD outperforms CURL because it only optimizes for what we care about, which is the task reward. CURL, on the other hand, jointly optimizes the reinforcement and contrastive learning objectives. If the metric used to evaluate and compare these methods is the score attained on the task at hand, a method that purely focuses on reward optimization is expected to be better, as long as it implicitly ensures similarity consistencies on the augmented views.

However, many problems in RL cannot be solved with data augmentations alone. For example, RAD would not be applicable to environments with sparse rewards or no rewards at all, because it learns similarity consistency implicitly through the observations coupled to a reward signal. On the other hand, the contrastive learning objective in CURL internalizes invariances explicitly. It is therefore able to learn semantic representations from high-dimensional observations gathered from any rollout, regardless of the reward signal. Unsupervised representation learning may therefore be a better fit for real-world tasks, such as robotic manipulation, where the environment reward is more likely to be sparse or absent.

This post is based on the following papers:

- CURL: Contrastive Unsupervised Representations for Reinforcement Learning. Michael Laskin*, Aravind Srinivas*, Pieter Abbeel. Thirty-seventh International Conference on Machine Learning (ICML), 2020. arXiv, Project Website

- Reinforcement Learning with Augmented Data. Michael Laskin*, Kimin Lee*, Adam Stooke, Lerrel Pinto, Pieter Abbeel, Aravind Srinivas. arXiv, Project Website

References

- Hafner et al. Learning Latent Dynamics for Planning from Pixels. ICML 2019.

- Hafner et al. Dream to Control: Learning Behaviors by Latent Imagination. ICLR 2020.

- Kaiser et al. Model-Based Reinforcement Learning for Atari. ICLR 2020.

- Lee et al. Stochastic Latent Actor-Critic: Deep Reinforcement Learning with a Latent Variable Model. arXiv 2019.

- Henaff et al. Data-Efficient Image Recognition with Contrastive Predictive Coding. ICML 2020.

- He et al. Momentum Contrast for Unsupervised Visual Representation Learning. CVPR 2020.

- Chen et al. A Simple Framework for Contrastive Learning of Visual Representations. ICML 2020.

- Kostrikov et al. Image Augmentation Is All You Need: Regularizing Deep Reinforcement Learning from Pixels. arXiv 2020.

This article was initially published on the BAIR blog, and appears here with the authors’ permission.

What is artificial intelligence? – The pragmatic definition

There’s no definitive or academically correct definition of artificial intelligence. The common ones usually define AI as computer models that perform tasks in a human-like manner and simulate intelligent behaviour.

The truth is that every expert in the field of AI you ask will give you a new variation of the definition. In some cases you might even get a near-religious lecture on how it’s only AI if the system contains deep learning models. Meanwhile, many startup founders will tell you their systems contain AI even though it’s just a very simple regression algorithm, like the ones we have had in Excel for ages. In fact, a VC fund recently found that less than half of startups claiming to be AI startups actually have any kind of AI.

So when is something AI? My definition is pretty pragmatic. For me, AI has a set of common features, and if they are present, I would define the system as AI. Especially when working with AI, these features will be very much in your face. In other words, if it looks like a duck, swims like a duck, and quacks like a duck, then it probably is a duck. The same goes for AI.

The common features I see:

- The system simulates a human act or task.

- The learning comes from examples instead of instructions. This entails the need for data.

- Before the system is ready, you cannot predict that input X will give you exactly output Y, only approximately.

So to me it’s not really important which underlying algorithm does the magic. If the common features are present, the system will feel the same to use and to develop, and you will face the same challenges.

It might seem that with this definition I’m taking AI to a simpler and less impactful place, but this only scratches the surface. The underlying human, technical and organizational challenges are enormous when working with AI.

Model-assisted labelling – For better or for worse?

For many AI projects, collecting data is without a doubt the most expensive part. Labelling data like images and pieces of text is hard and tedious work, and it does not scale well. If an AI project requires continuously updated or fresh data, this can become a high recurring cost that challenges the whole business case of an otherwise great project.

There are, though, a few strategies to lower the cost of labelling data. I have previously written about Active Learning, a data collection strategy that labels the most crucial data first, based on where the model’s confidence is weakest. This is a great strategy, but in most cases you still need to label a lot of data.
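The simplest form of the prioritization described above is "least confidence" sampling: the items whose top predicted probability is lowest go to the labellers first. This is a minimal sketch, assuming a hypothetical `predict_proba` callable that returns class probabilities for one item:

```python
def least_confident(unlabelled, predict_proba, k):
    """Return the k items whose highest predicted class probability is lowest."""
    # Pair each item's top-class confidence with its index, then sort ascending.
    scored = sorted((max(predict_proba(x)), i) for i, x in enumerate(unlabelled))
    return [unlabelled[i] for _, i in scored[:k]]
```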

Model-assisted labelling has emerged as a strategy to speed up the labelling process. The idea is simple. You train an AI in parallel with labelling, and as the AI starts to see a pattern in the data, it suggests labels to the labeller. In many cases, the labeller can then simply approve the pre-suggested label.

Model-assisted labelling can be done in two ways. You can train a model solely for the purpose of labelling, or you can put the actual production model in the labelling loop and let it suggest labels.
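One simple way to put a model into the labelling loop is to pre-fill a label only when the model is confident enough. Otherwise, the labeller sees a blank form. The following is a minimal sketch. The function name and the 0.8 default threshold are hypothetical and would need tuning against the project's quality target:

```python
def suggest_label(probs, labels, threshold=0.8):
    """Return the model's top label as a pre-fill suggestion, or None for a blank form."""
    best = max(range(len(probs)), key=probs.__getitem__)  # index of the most probable class
    return labels[best] if probs[best] >= threshold else None
```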

But is model-assisted labelling just a sure way to get data labelled more quickly, or are there downsides to the strategy? I have worked intensively with model-assisted labelling, and I know for sure that it has both pros and cons. If you’re not careful, you can end up doing more harm than good with this strategy. If you manage it correctly, it can work wonders and save you a ton of resources.

So let’s have a look at the pros and cons.

The Pros

The first and foremost advantage is that it’s faster for the person working with labelling to work with pre-labelled data. Approving the label with a single click for most cases and only having to manually select a label once in a while is just way faster. Especially when working with large documents or models with many potential labels the speed can increase significantly.

Another really useful benefit of model-assisted labelling is that you get an idea of the model’s weak points very early on. You get a hands-on understanding of which instances the model finds difficult and usually mislabels. This reflects the results you should expect in production, so you get an early chance to improve or work around these weak points. Weak points in the model also often suggest a lack of data volume or quality in those areas. This gives you insight into which kinds of data you should go out and label more of.

The cons

Now for the cons. As I mentioned, the cons can be pretty bad. The biggest issue with model-assisted labelling is that you run the risk of lowering the quality of your data. You may get more data labelled faster, but the lower quality can leave you with a model that performs worse than it would have without model-assisted labelling.

So how can model-assisted labelling lower the data quality? It’s actually very simple: humans tend to prefer defaults. The second you slip into autopilot, you start making mistakes, because you become more likely to choose the default or suggested label. I have seen this time and time again. The biggest source of mistakes in labelling tends to be accepting wrong suggestions, so you have to be very careful when suggesting labels.

Another downside arises when the pre-labelling quality is so low that correcting it takes the labeller more time than starting from a blank answer would. So you have to be careful not to enable pre-labelling too early.

A few tips for model-assisted labelling

I have a few tips for being more successful with model-assisted labelling.

The first tip is to set a target for data quality. You will never get 100% correct data anyway, so you will have to accept some number of wrong labels. If you set a target that is acceptable to train the model from, you can monitor whether model-assisted labelling is beginning to do more harm than good. The target also works well for aligning expectations across your team in general.

I’d also suggest labelling some samples without pre-labelling, to measure whether the results differ with and without it. You simply turn off the assisting model for a share of cases, for example one out of every ten. It’s easy, and it will reveal a lot.
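The holdout described above can be sketched as a random switch per labelling task, plus an accuracy comparison for each group. This assumes you have a reviewed "gold" label for a sample of tasks to compare against. The function names and the record format are hypothetical:

```python
import random

def assign_prelabel(rng=random, holdout_rate=0.1):
    """Return True if this task should show a pre-label, False for the blind holdout."""
    return rng.random() >= holdout_rate

def accuracy_by_arm(records):
    """records: (pre_labelled, label_given, gold_label) tuples -> accuracy per arm."""
    stats = {True: [0, 0], False: [0, 0]}  # arm -> [correct, total]
    for pre, given, gold in records:
        stats[pre][0] += int(given == gold)
        stats[pre][1] += 1
    return {arm: (correct / total if total else None) for arm, (correct, total) in stats.items()}
```

The pre-labelled group may score noticeably below the blind group. If so, the suggestions are likely pulling labellers toward wrong answers.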

Lastly, I will suggest one of my favorites: probabilistic programming models, which are very beneficial for model-assisted labelling. These models are typically Bayesian. As a result, they express uncertainty as distributions instead of scalars (single numbers), which makes it much easier to know whether a pre-label is likely to be correct.
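The following example illustrates why a distribution helps more than a single number. It is a small conjugate Bayesian sketch, not a full probabilistic program. It assumes you track, per label, how many suggestions were verified correct or incorrect (for example, from the holdout review). It models suggestion accuracy with a Beta posterior under a uniform prior. It pre-labels only when the posterior mean minus two standard deviations clears a target. The names, the target and the two-standard-deviation rule are hypothetical choices:

```python
import math

def accuracy_posterior(correct, incorrect, prior_a=1.0, prior_b=1.0):
    """Mean and standard deviation of Beta(prior_a + correct, prior_b + incorrect)."""
    a = prior_a + correct
    b = prior_b + incorrect
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, math.sqrt(var)

def should_prelabel(correct, incorrect, target=0.8, k=2.0):
    """Pre-label only if mean - k * sd of the accuracy posterior reaches the target."""
    mean, sd = accuracy_posterior(correct, incorrect)
    return mean - k * sd >= target
```

Both 9 correct out of 10 and 900 correct out of 1,000 give a raw rate of 90%. Only the second clears the bar here, because the posterior for the first is still wide.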

What is data operations (DataOps)?

When I write about AI I very often refer to data operations and how important a foundation it is for most AI solutions. Without proper data operations you can easily get to a point where handling the necessary data will be too difficult and costly for the AI business case to make sense. So to clarify a little I wanted to give you some insight on what it really means.

Data operations is the process of obtaining, cleaning, storing and delivering data in a secure and cost-effective manner. It’s a mix of business strategy, DevOps and data science, and it is the underlying supply chain for many big data and AI solutions.

The term data operations was originally coined in a Big Data context but has become more broadly used in recent years.

Data operations is the most important competitive advantage

As I have mentioned in a lot of previous posts, I see data operations as a higher priority than algorithm development when it comes to beating the competition. In most AI cases, the algorithms are standard AI algorithms from standard frameworks. They are fed data, trained and tuned a little before being deployed. Since the underlying algorithms are largely the same, the real difference is in the data. Getting good results from high-quality data takes almost no work compared with the work it takes when using mediocre data. Getting data at a lower cost than the competition is also a really important factor, especially in AI cases that require a continuous flow of new data. In these cases, constantly acquiring new data can become an economic burden that weighs down the business.

Data operations Paperflow example

To make this more concrete, I will use the AI company I co-founded, Paperflow, as an example. Paperflow is an AI company that receives invoices and other financial documents and captures data such as invoice date, amounts and invoice lines. Invoices can look very different, and their layouts change over time. So we need a lot of data, and we need more of it all the time. To make Paperflow a good business, we needed good data operations.

To be honest, we weren't that aware of the importance when we made these initial decisions, but luckily we got it right. Our first major decision in data operations was to collect all data in-house and build our own system for collecting it. That’s a costly choice. It requires a high initial investment in system development and carries a high recurring cost for the employees whose job is entering invoice data into the system. The competition had chosen another strategy: they had customers enter the invoice data into their system whenever their AI failed to make the right prediction. That’s a much cheaper strategy, and it can provide you with a lot of data. The only problem is that customers care about one thing only, which is solving their own problem. They do not care whether what they enter is correct in terms of what you need for training data.

So in Paperflow we found a way to get better data. But how do you get the costs down then?

A part of the solution was heavily investing in the system that was used for entering data and trying to make it as fast to use as possible. It was really trial and error and it took a lot of work. Without having the actual numbers I guess we invested more in the actual data operating systems than the AI.

Another part of the solution was to make sure we only collected the data we actually needed. This is a common challenge in data operations, since it’s very difficult to know what data you are going to need in the future. Our solution was to first collect a lot of data (too much, in fact) and then slowly narrow down the amount collected. Going the other way around can be difficult. If we had suddenly started to collect more data on each invoice, we would basically have needed to start over and discard all previously validated invoices.

We also worked a lot on understanding a very important metric. When are our AI’s guesses so likely to be correct that we can trust them and skip validating part of the data? We achieved this with a variety of tricks and technologies, one of them being probabilistic programming. Probabilistic programming has the advantage of delivering an uncertainty distribution instead of the single percentage that most machine learning algorithms output. Knowing how sure you are that you are right significantly lowers the risk of making mistakes.
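The "can we skip validation?" decision can be sketched as a routing rule on posterior samples. The sketch assumes the probabilistic model can produce a set of sampled predictions for each captured field; how those samples are drawn is out of scope here. It auto-accepts a field only when one value dominates the samples. The function name and the 0.99 target are hypothetical:

```python
from collections import Counter

def route_field(samples, target=0.99):
    """Decide whether a captured field can skip manual validation.

    samples: predicted values for one field, drawn from the model's posterior.
    Returns (most_likely_value, auto_accept).
    """
    value, count = Counter(samples).most_common(1)[0]
    # Share of posterior samples that agree with the most common value.
    return value, count / len(samples) >= target
```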

The strategy of collecting only the data you need most, by choosing the cases where your AI is most uncertain, is also known as active learning. If you are working on your data operations for AI, you should definitely look into it.

DevOps data operation challenges

On the more tech-heavy side, storing data effectively brings its own challenges. I’m not a DevOps expert, but I have seen in real life how data can suddenly grow faster than expected. That can be critical, because it quickly puts pressure on your ability to scale. If I could give one piece of advice here, it would be to involve a DevOps engineer early in the architecture work. Building on a scalable foundation is much more fun than constantly hunting for short-term fixes.

ROBOTS WITH COMMON SENSE AND COGNITIVE INTELLIGENCE: ARE WE THERE YET?

What Makes Us Superior To Robots When It Comes To Common Intelligence?

The debate about man vs robots is an evergreen one. Robots are viewed as enablers of a dystopian future brought about by digital disruption, but the main question that has baffled minds is how smart they really are. When it comes to human intelligence, no other living being or ‘mechanical or AI mind’ can match us. Yet robots powered by AI have been able to perform trivial, monotonous tasks with far better accuracy than us. It is important to note that this does not imply robots have acquired cognitive intelligence or common sense, which are intrinsic to humans, despite the recent marvels of robotics.

The main problem is that most of the algorithms written for robots are based on machine learning. Their models are trained on a particular type of data and under specific test conditions. Hence, when put in a situation covered by neither their code nor their training, robots can fail terribly or draw a conclusion that can be catastrophic. Stanley Kubrick’s landmark film 2001: A Space Odyssey highlighted this problem. The movie features a supercomputer, HAL 9000, which is informed by its creators of the purpose of the mission: to reach Jupiter and search for signs of extra-terrestrial intelligence. When HAL makes an error, it refuses to admit it and attributes it to human error. The astronauts therefore decide to shut HAL down, but the AI discovers their plot by lip-reading. Consequently, HAL arrives at a new conclusion that wasn’t part of its original programming and decides to save itself by systematically killing off the people on board.

Experts often mention another illustration. We can teach a robot how to open a door by training it on data from 500 different types of doors, yet the robot will still fail when asked to open the 501st door. This example is also the best way to explain why robots don’t share the typical thought process and intelligence of humans. Humans don’t need to be ‘trained’: they observe and learn, or they experiment out of curiosity. Further, we don’t open the door every time someone knocks, because there is always an unfriendly neighbor we would rather avoid. Nor do we need to be reminded to lock the door, whereas robots need a clear set of instructions. The same holds in other aspects of our lives. Robots and AI are trained on a particular set of data, so they function effectively when the input is something they have been trained or programmed for. Beyond that, things are different. For instance, if someone says “Hit the road” while driving a car, she is emphatically telling herself or the driver to get going. A robot that does not know the idiomatic meaning of the expression may believe the person is asking it to literally ‘hit’ the road. Such a misunderstanding can lead to accidents. Researchers are working hard, devising algorithms and running code, but we have yet to see a robot that understands the way humans converse, with all their accents, dialects, colloquialisms and jargon.

Michio Kaku, a futurist and theoretical physicist, once said that “Our robots today, have the collective intelligence and wisdom of a cockroach.” Today’s robots can make salads on command, and systems like Deep Blue and AlphaGo Zero can defeat humans at chess and Go respectively, but this does not necessarily qualify as ‘common sense’ or smartness. And let us not forget that Deep Blue and AlphaGo Zero were following instructions given by a team of ‘smart’ human scientists. These systems were designed by people who were smart enough to solve a seemingly impossible task. So, to sum up, robots are becoming smart enough to fold laundry or to impersonate a person on online dating sites, but they still lag behind when it comes to cognitive intelligence and common sense. It will be a long wait before we see robots like those in sci-fi movies, e.g. C-3PO, R2-D2 or WALL-E.