
Robo-Insight #4

Source: OpenAI’s DALL·E 2 with prompt “a hyperrealistic picture of a robot reading the news on a laptop at a coffee shop”

Welcome to the 4th edition of Robo-Insight, a biweekly robotics news update! In this post, we are excited to share a range of new advancements in the field and highlight robots’ progress in areas like mobile applications, cleaning, underwater mining, flexibility, human well-being, depression treatments, and human interactions.

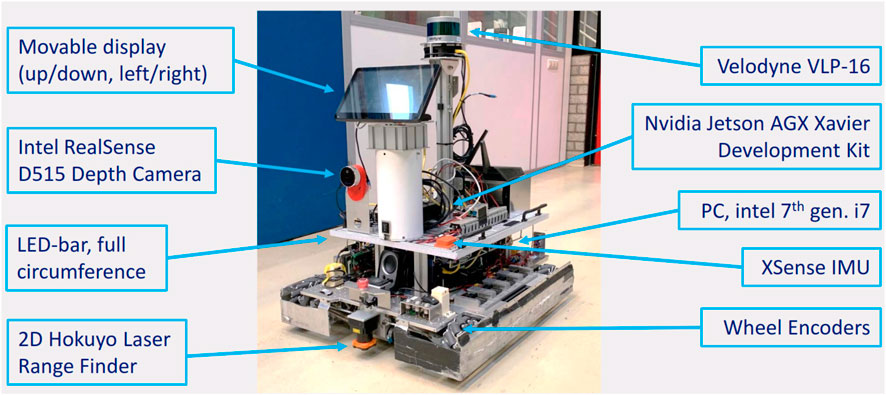

Simplified mobile robot behavior adaptations

In the area of behavior adaptation, researchers from Eindhoven University of Technology have introduced a methodology that bridges the gap between application developers and control engineers when adapting the behavior of mobile robots. The approach uses symbolic descriptions of a robot's behavior, known as “behavior semantics,” and translates them into control actions through a “semantic map.” It aims to simplify motion control programming for autonomous mobile robot applications and to ease integration across different vendors' control software. By establishing a structured interface between the application, interaction, and control layers, this methodology could streamline complex mobile robot applications, potentially leading to more efficient underground exploration and navigation systems.

Front view of the mobile platform, with hardware components marked by blue arrows. Source.
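To make the idea of a semantic map more concrete, the toy Python sketch below reduces it to regions of a floor plan, each tagged with a symbolic behavior that resolves to a set of controller limits. This is an illustrative assumption, not the Eindhoven group's implementation, which composes constraint-based controllers; the names `Constraints`, `BEHAVIORS`, `SEMANTIC_MAP`, `constraints_at`, and `limit_speed`, as well as every number, are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Constraints:
    """Hypothetical limits that one behavior semantic resolves to."""
    max_speed: float       # m/s, upper bound on forward speed
    wall_clearance: float  # m, minimum distance to keep from walls


# Hypothetical behavior semantics an application developer could choose from.
BEHAVIORS = {
    "cruise": Constraints(max_speed=1.0, wall_clearance=0.3),
    "cautious": Constraints(max_speed=0.3, wall_clearance=0.6),
}

# Hypothetical semantic map: axis-aligned regions (x0, y0, x1, y1) in meters,
# each tagged with the behavior that should apply inside it.
SEMANTIC_MAP = [
    ((0.0, 0.0, 10.0, 2.0), "cruise"),     # open corridor
    ((10.0, 0.0, 14.0, 2.0), "cautious"),  # doorway with foot traffic
]


def constraints_at(x: float, y: float, default: str = "cautious") -> Constraints:
    """Return the constraints of the first region containing (x, y)."""
    for (x0, y0, x1, y1), behavior in SEMANTIC_MAP:
        if x0 <= x < x1 and y0 <= y < y1:
            return BEHAVIORS[behavior]
    return BEHAVIORS[default]  # unmapped space: fall back to the safe behavior


def limit_speed(requested: float, x: float, y: float) -> float:
    """Clamp a requested forward speed to the limit active at (x, y)."""
    return min(requested, constraints_at(x, y).max_speed)
```

With this map, `limit_speed(0.8, 5.0, 1.0)` returns 0.8 in the corridor, while `limit_speed(0.8, 12.0, 1.0)` returns 0.3 in the doorway region. Only the map and the behavior table change between deployments while the controller code stays the same, which mirrors in miniature the separation between application developers and control engineers that the methodology targets.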

New robot for household clean-ups

Speaking of helpful robots, Princeton University has created a robot named TidyBot to address the challenge of household tidying. Unlike simple tasks such as moving objects, real-world cleanup requires a robot to differentiate between objects, place them correctly, and avoid damaging them. TidyBot accomplishes this through a combination of physical dexterity, visual recognition, and language understanding. Equipped with a mobile robotic arm, a vision model, and a language model, TidyBot can identify objects, place them in designated locations, and even infer proper actions with an 85% accuracy rate. The success of TidyBot demonstrates its potential to handle complex household tasks.

TidyBot at work. Source.

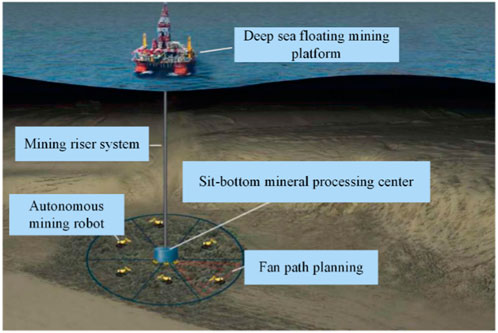

Deep sea mining robots

Shifting our focus to underwater environments, researchers are addressing the efficiency hurdles faced in deep-sea mining through innovative path planning for autonomous robotic mining vehicles. Deep-sea manganese nodules hold significant potential, and these robotic vehicles are essential for collecting them. By refining path planning methods, the researchers aim to improve how efficiently these vehicles traverse challenging underwater terrain while avoiding obstacles. This development could lead to more effective and responsible resource extraction from the ocean floor, contributing to the sustainable use of valuable mineral resources.

Diagram depicting the operational framework of the deep-sea mining system. Source.
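The summary does not say which path-planning method the researchers refined. As a generic illustration of grid-based planning with obstacle avoidance, here is textbook A* search in Python, assuming the seabed has been discretized into an occupancy grid (1 marks an obstacle) with unit-cost, 4-connected moves. The grid layout and the function name `astar` are hypothetical and are not taken from the mining study.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (1 = obstacle), or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None  # goal unreachable


# Hypothetical 3 x 4 seabed patch with a ridge of obstacles in the middle row.
seabed = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(astar(seabed, (0, 0), (2, 0)))
```

A real mining planner would add terrain-dependent costs, vehicle turning limits, and constraints from the lifting system; A* accommodates terrain costs by replacing the unit step cost, as long as the heuristic never overestimates the remaining cost.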

Advanced soft robots with dexterity and flexibility

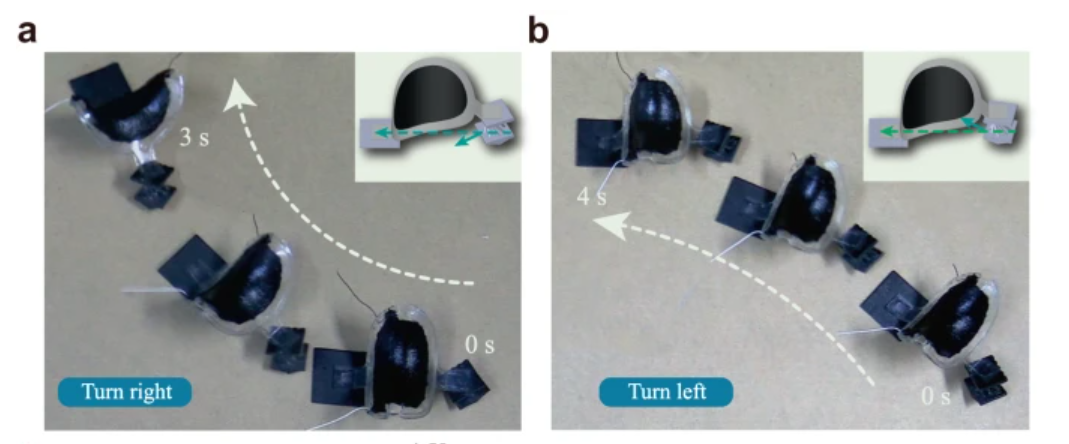

Regarding robotic motion, researchers from Shanghai Jiao Tong University have recently developed small-scale soft robots with remarkable dexterity, capable of immediate and reversible changes in motion direction and shape. These robots, powered by an active dielectric elastomer artificial muscle and a distinctive chiral-lattice foot design, can change direction during fast movement with a single voltage input. By adjusting the voltage frequency, the chiral-lattice foot generates various locomotion behaviors, including forward, backward, and circular motion. Combining this structural design with shape memory materials also allows the robots to perform complex tasks such as navigating narrow tunnels or tracing specific trajectories. This innovation opens the door to next-generation autonomous soft robots capable of versatile locomotion.

The soft robot achieves circular motion in either right or left directions by positioning the lattice foot towards the respective sides. Source.

Robotic dogs utilized to comfort patients

Turning our focus to robot use in the healthcare field, Stanford students, along with researchers and doctors, have partnered with AI and robotics industry leaders to showcase new robotic dogs designed to interact with pediatric patients at Lucile Packard Children’s Hospital. Patients at the hospital had the opportunity to engage with the playful robots, demonstrating the potential benefits of these mechanical pets for children’s well-being during their hospital stays. The robots, called Pupper, were developed by undergraduate engineering students and operated using handheld controllers. The goal of the demonstration was to study the interaction between the robots and pediatric patients, exploring ways to enhance the clinical experience and reduce anxiety.

A patient playing with the robotic dog. Source.

Robotic innovations could help with depression

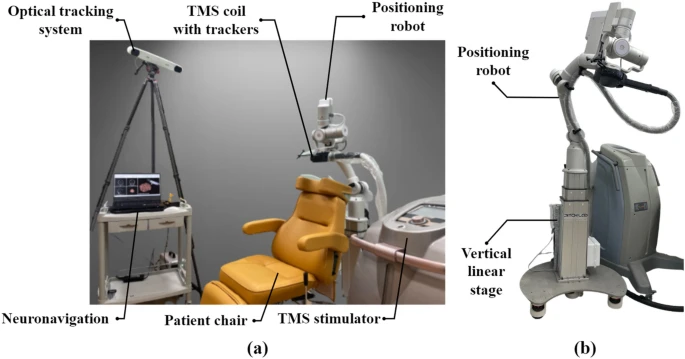

Staying with the theme of well-being, a recent pilot study has explored the potential benefits of using robotics in transcranial magnetic stimulation (TMS) for treating depression. Researchers led by Hyunsoo Shin developed a custom TMS robot designed to improve the accuracy of TMS coil placement on the brain, a critical aspect of effective treatment. With the robotic system, they reduced preparation time by 53% and significantly reduced errors in coil positioning. The study found comparable therapeutic effects on depression severity and regional cerebral blood flow (rCBF) between the robotic and manual TMS methods, shedding light on the potential of robotic assistance to enhance the precision and efficiency of TMS treatments.

Configuration of the robotic repetitive transcranial magnetic stimulation (rTMS) within the treatment facility, and robotic positioning device for automated coil placement. Source.

Advanced robotic eye research

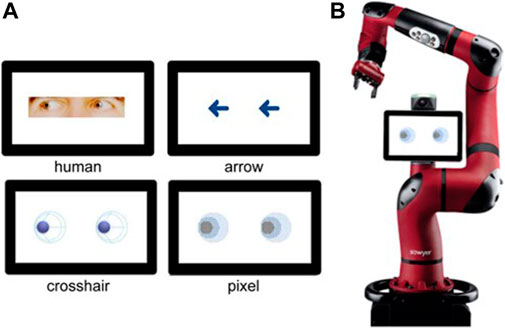

Finally, in the realm of human-robot interaction (HRI), a study by researchers from several institutions explored the potential of robot eyes as predictive cues. The study aimed to understand whether and how the design of predictive robot eyes could improve interactions between humans and robots. Four types of cues were tested: arrows, human eyes, and two anthropomorphic robot eye designs. The results indicated that abstract anthropomorphic robot eyes, which mimic certain aspects of human attention, were most effective at directing participants' attention and triggering reflexive shifts. These findings suggest that incorporating abstract anthropomorphic eyes into robot design could make robot movements more predictable and improve HRI.

The four types of stimuli. The first row showcases the human (left) and arrow (right) stimuli. The second row displays the abstract anthropomorphic robot eyes. Photograph of the questionnaire’s subject, the cooperative robot Sawyer. Source.

This steady stream of progress across diverse domains underscores how adaptable and fast-moving robotics technology is, revealing new pathways for its adoption across a spectrum of industries. These incremental advances reflect persistent research efforts and hint at what such strides might mean for the future.

Sources:

- Chen, H. L., Hendrikx, B., Torta, E., Bruyninckx, H., & van de Molengraft, R. (2023, July 10). Behavior adaptation for mobile robots via semantic map compositions of constraint-based controllers. Frontiers.

- Princeton Engineering – Engineers clean up with TidyBot. (n.d.). Princeton Engineering. Retrieved August 30, 2023.

- Xie, Y., Liu, C., Chen, X., Liu, G., Leng, D., Pan, W., & Shao, S. (2023, July 12). Research on path planning of autonomous manganese nodule mining vehicle based on lifting mining system. Frontiers.

- Wang, D., Zhao, B., Li, X., Dong, L., Zhang, M., Zou, J., & Gu, G. (2023). Dexterous electrical-driven soft robots with reconfigurable chiral-lattice foot design. Nature Communications, 14(1), 5067.

- Stanford University. (2023, August 1). Robo-dogs unleash joy at Stanford hospital. Stanford Report.

- Shin, H., Jeong, H., Ryu, W., Lee, G., Lee, J., Kim, D., Song, I.-U., Chung, Y.-A., & Lee, S. (2023). Robotic transcranial magnetic stimulation in the treatment of depression: a pilot study. Scientific Reports, 13(1), 14074.

- Onnasch, L., Schweidler, P., & Schmidt, H. (2023, July 3). The potential of robot eyes as predictive cues in HRI-an eye-tracking study. Frontiers.


MIT engineers use kirigami to make ultrastrong, lightweight structures

MIT researchers used kirigami, the art of Japanese paper cutting and folding, to develop ultrastrong, lightweight materials that have tunable mechanical properties, like stiffness and flexibility. These materials could be used in airplanes, automobiles, or spacecraft. Image: Courtesy of the researchers

By Adam Zewe | MIT News

Cellular solids are materials composed of many cells that have been packed together, such as a honeycomb. The shape of those cells largely determines the material’s mechanical properties, including its stiffness or strength. Bones, for instance, are filled with a natural material that enables them to be lightweight, but stiff and strong.

Inspired by bones and other cellular solids found in nature, humans have used the same concept to develop architected materials. By changing the geometry of the unit cells that make up these materials, researchers can customize the material’s mechanical, thermal, or acoustic properties. Architected materials are used in many applications, from shock-absorbing packing foam to heat-regulating radiators.

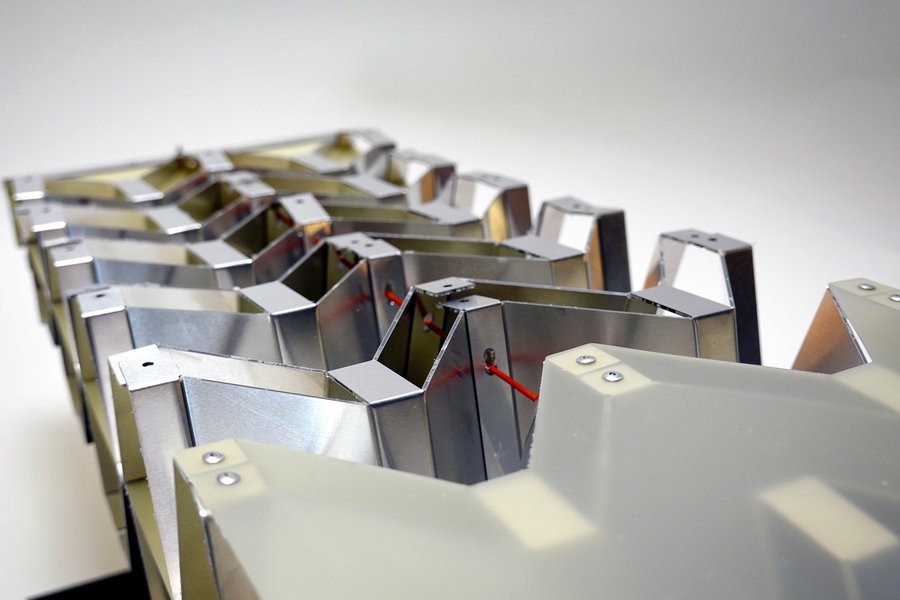

Using kirigami, the ancient Japanese art of folding and cutting paper, MIT researchers have now manufactured a type of high-performance architected material known as a plate lattice, on a much larger scale than scientists have previously been able to achieve by additive fabrication. This technique allows them to create these structures from metal or other materials with custom shapes and specifically tailored mechanical properties.

“This material is like steel cork. It is lighter than cork, but with high strength and high stiffness,” says Professor Neil Gershenfeld, who leads the Center for Bits and Atoms (CBA) at MIT and is senior author of a new paper on this approach.

The researchers developed a modular construction process in which many smaller components are formed, folded, and assembled into 3D shapes. Using this method, they fabricated ultralight and ultrastrong structures and robots that, under a specified load, can morph and hold their shape.

Because these structures are lightweight but strong, stiff, and relatively easy to mass-produce at larger scales, they could be especially useful in architectural, airplane, automotive, or aerospace components.

Joining Gershenfeld on the paper are co-lead authors Alfonso Parra Rubio, a research assistant in the CBA, and Klara Mundilova, an MIT electrical engineering and computer science graduate student; along with David Preiss, a graduate student in the CBA; and Erik D. Demaine, an MIT professor of computer science. The research will be presented at ASME’s Computers and Information in Engineering Conference.

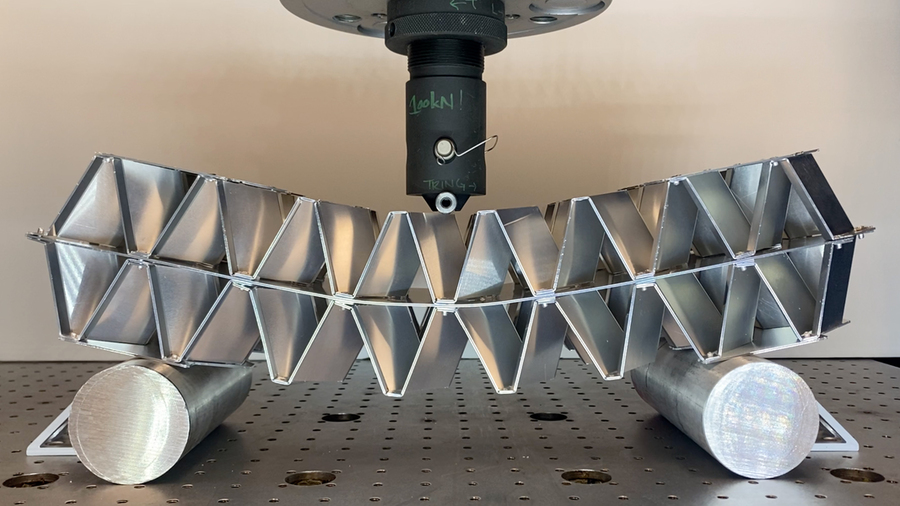

The researchers actuate a corrugated structure by tensioning steel wires across the compliant surfaces and then connecting them to a system of pulleys and motors, enabling the structure to bend in either direction. Image: Courtesy of the researchers

Fabricating by folding

Architected materials, like lattices, are often used as cores for a type of composite material known as a sandwich structure. To envision a sandwich structure, think of an airplane wing, where a series of intersecting, diagonal beams form a lattice core that is sandwiched between a top and bottom panel. This truss lattice has high stiffness and strength, yet is very lightweight.
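The airplane-wing picture can be quantified with the standard thin-face approximation for sandwich panels. In the Python sketch below, two faces of thickness t are separated so that their centroids sit a distance d apart, the core's own bending stiffness and shear deformation are neglected, and the result is compared with a solid plate made from the same total face material. The modulus and dimensions are illustrative assumptions rather than values from the MIT study, and the comparison ignores the mass of the core.

```python
def sandwich_rigidity(E_face, t_face, core_thickness):
    """Bending stiffness per unit width of a sandwich panel, thin-face approximation.

    D ~= E_face * t_face * d**2 / 2, where d = core_thickness + t_face is the
    distance between the centroids of the two faces. The core's own bending
    stiffness and its shear deformation are neglected.
    """
    d = core_thickness + t_face
    return E_face * t_face * d**2 / 2


def solid_rigidity(E, thickness):
    """Bending stiffness per unit width of a homogeneous beam/plate: D = E * h**3 / 12."""
    return E * thickness**3 / 12


# Illustrative numbers: aluminum-like faces (70 GPa), 1 mm thick, 20 mm core.
E_al = 70e9
panel = sandwich_rigidity(E_al, 1e-3, 20e-3)
plate = solid_rigidity(E_al, 2e-3)  # same total face material, no core
print(f"sandwich / solid stiffness ratio: {panel / plate:.1f}")
```

The ratio simplifies to 3 d^2 / (4 t^2), so two 1 mm faces on a 20 mm core come out roughly 330 times stiffer in bending than a single 2 mm plate. That leverage is why a core that is light yet stiff and strong, such as a truss or plate lattice, is worth the manufacturing effort.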

Plate lattices are cellular structures made from three-dimensional intersections of plates, rather than beams. These high-performance structures are even stronger and stiffer than truss lattices, but their complex shape makes them challenging to fabricate using common techniques like 3D printing, especially for large-scale engineering applications.

The MIT researchers overcame these manufacturing challenges using kirigami, a technique for making 3D shapes by folding and cutting paper that traces its history to Japanese artists in the 7th century.

Kirigami has been used to produce plate lattices from partially folded zigzag creases. But to make a sandwich structure, one must attach flat plates to the top and bottom of this corrugated core, at the narrow points formed by the zigzag creases. This often requires strong adhesives or welding techniques that can make assembly slow, costly, and difficult to scale.

The MIT researchers modified a common origami crease pattern, known as a Miura-ori pattern, so the sharp points of the corrugated structure are transformed into facets. The facets, like those on a diamond, provide flat surfaces to which the plates can be attached more easily, with bolts or rivets.

The MIT researchers modified a common origami crease pattern, known as a Miura-ori pattern, so the sharp points of the corrugated structure are transformed into facets. The facets, like those on a diamond, provide flat surfaces to which the plates can be attached more easily, with bolts or rivets. Image: Courtesy of the researchers
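The MIT team's contribution is a modification of the Miura-ori, so the sketch below covers only the standard, unmodified pattern. It uses one common parametrization of the Miura-ori unit cell (used, for example, in Schenk and Guest's geometric analysis): parallelogram facets with sides a and b and acute angle gamma, folded so each facet makes an angle theta with the horizontal. The function name `miura_cell` is hypothetical, and the faceted variant described above would need additional parameters.

```python
import math


def miura_cell(a, b, gamma, theta):
    """Folded dimensions of a standard Miura-ori unit cell.

    a, b  : side lengths of the parallelogram facets
    gamma : acute facet angle in radians, 0 < gamma < pi/2
    theta : angle between each facet and the horizontal, 0 (flat) to pi/2
    Returns (H, S, L, V): the cell spans 2L by 2S in plan and H in height,
    and V is the in-plane offset of the zigzag.
    """
    xi = math.sqrt(1 - (math.sin(theta) * math.sin(gamma)) ** 2)
    H = a * math.sin(theta) * math.sin(gamma)
    S = b * math.cos(theta) * math.sin(gamma) / xi
    L = a * xi
    V = b * math.cos(gamma) / xi
    return H, S, L, V


# Sweep the fold angle for a hypothetical cell with a = b = 10 mm, gamma = 60 deg.
for deg in (0, 30, 60, 85):
    H, S, L, V = miura_cell(10.0, 10.0, math.radians(60), math.radians(deg))
    print(f"theta={deg:2d} deg  height={H:5.2f}  plan={2 * L:5.2f} x {2 * S:5.2f}")
```

Because the facets are rigid, each edge keeps its length while folding (L^2 + H^2 = a^2 and S^2 + V^2 = b^2), and the sweep shows the cell gaining height as its plan width 2S shrinks. That single-parameter folding motion is what lets a crease pattern encode a target 3D shape.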

“Plate lattices outperform beam lattices in strength and stiffness while maintaining the same weight and internal structure,” says Parra Rubio. “Reaching the H-S upper bound for theoretical stiffness and strength has been demonstrated through nanoscale production using two-photon lithography. Plate lattices construction has been so difficult that there has been little research on the macro scale. We think folding is a path to easier utilization of this type of plate structure made from metals.”
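The “H-S upper bound” in the quote presumably refers to the Hashin-Shtrikman bound, the theoretical maximum stiffness of an isotropic material with a given volume fraction of solid. The Python sketch below evaluates the standard Hashin-Shtrikman upper bounds on bulk and shear modulus for a solid with empty pores, converts them to Young's modulus, and compares the result with the idealized stretch-dominated estimate often quoted for an octet truss (E roughly E_s f / 9). The function names and the parent-material values are illustrative assumptions, not data from the paper.

```python
def hs_upper_young(rel_density, E_s, nu_s):
    """Hashin-Shtrikman upper bound on Young's modulus of an isotropic porous solid.

    rel_density : solid volume fraction f (0 < f <= 1); the pores are empty.
    E_s, nu_s   : Young's modulus and Poisson's ratio of the parent material.
    """
    f = rel_density
    K = E_s / (3 * (1 - 2 * nu_s))  # bulk modulus of the solid
    G = E_s / (2 * (1 + nu_s))      # shear modulus of the solid
    K_hs = 4 * f * K * G / (4 * G + 3 * K * (1 - f))
    G_hs = f * G * (9 * K + 8 * G) / (15 * K + 20 * G - 6 * f * (K + 2 * G))
    return 9 * K_hs * G_hs / (3 * K_hs + G_hs)  # isotropic E from K and G


def octet_truss_young(rel_density, E_s):
    """Idealized low-density, stretch-dominated estimate for an octet truss: E ~ E_s * f / 9."""
    return E_s * rel_density / 9


# Illustrative aluminum-like parent material at 5% relative density.
E_al, nu_al, f = 70e9, 0.3, 0.05
print(f"H-S bound : {hs_upper_young(f, E_al, nu_al) / 1e9:.2f} GPa")
print(f"octet est.: {octet_truss_young(f, E_al) / 1e9:.2f} GPa")
```

For a Poisson's ratio of 0.3, the bound works out to roughly half of f times E_s at low relative density, several times the octet-truss estimate, which is one way to see why plate lattices that approach the bound are attractive.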

Customizable properties

Moreover, the way the researchers design, fold, and cut the pattern enables them to tune certain mechanical properties, such as stiffness, strength, and flexural modulus (the tendency of a material to resist bending). They encode this information, as well as the 3D shape, into a creasing map that is used to create these kirigami corrugations.
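Flexural modulus is commonly measured with a three-point bend test, using the relation found in standards such as ASTM D790: E_f = L^3 m / (4 b d^3), where L is the support span, b and d are the specimen width and depth, and m is the slope of the linear part of the load-deflection curve. The Python sketch below estimates m with a least-squares line and applies that formula; the function name and test data are hypothetical, and for a corrugated or sandwich specimen the result is an apparent, homogenized value rather than a true material property.

```python
def flexural_modulus(span, width, depth, deflections, loads):
    """Three-point-bend flexural modulus E_f = span**3 * m / (4 * width * depth**3).

    m is the slope of the load-deflection curve, estimated by a least-squares
    line through the data points (assumed to lie in the linear-elastic range).
    Units: meters and newtons in, pascals out.
    """
    n = len(deflections)
    mean_x = sum(deflections) / n
    mean_y = sum(loads) / n
    sxx = sum((x - mean_x) ** 2 for x in deflections)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(deflections, loads))
    m = sxy / sxx  # specimen bending stiffness, N/m
    return span**3 * m / (4 * width * depth**3)


# Hypothetical specimen: 100 mm span, 10 mm wide, 2 mm deep.
defl = [0.0, 0.0005, 0.001, 0.0015]      # m
loads = [0.0, 0.52, 0.99, 1.51]          # N (made-up readings)
print(f"E_f = {flexural_modulus(0.1, 0.01, 0.002, defl, loads) / 1e9:.2f} GPa")
```

Fitting a line rather than dividing a single load by a single deflection reduces the influence of noise and of any initial seating offset in the data.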

For instance, based on the way the folds are designed, some cells can be shaped so they hold their shape when compressed while others can be modified so they bend. In this way, the researchers can precisely control how different areas of the structure will deform when compressed.

Because the flexibility of the structure can be controlled, these corrugations could be used in robots or other dynamic applications with parts that move, twist, and bend.

To craft larger structures like robots, the researchers introduced a modular assembly process: they mass-produce smaller crease patterns and assemble them into ultralight and ultrastrong 3D structures. Smaller structures have fewer creases, which simplifies the manufacturing process.

Using the adapted Miura-ori pattern, the researchers create a crease pattern that will yield the desired shape and structural properties. They then use a Zund cutting table to score a flat metal panel, which they fold into the 3D shape.

“To make things like cars and airplanes, a huge investment goes into tooling. This manufacturing process is without tooling, like 3D printing. But unlike 3D printing, our process can set the limit for record material properties,” Gershenfeld says.

Using their method, they produced aluminum structures with a compression strength of more than 62 kilonewtons, but a weight of only 90 kilograms per square meter. (Cork weighs about 100 kilograms per square meter.) Their structures were so strong they could withstand three times as much force as a typical aluminum corrugation.

Using their method, researchers produced aluminum structures with a compression strength of more than 62 kilonewtons, but a weight of only 90 kilograms per square meter. Image: Courtesy of the researchers

The versatile technique could be used with many materials, such as steel and composites, making it well suited to producing lightweight, shock-absorbing components for airplanes, automobiles, or spacecraft.

However, the researchers found that their method can be difficult to model. So, in the future, they plan to develop user-friendly CAD design tools for these kirigami plate lattice structures. In addition, they want to explore methods to reduce the computational costs of simulating a design that yields desired properties.

“Kirigami corrugations holds exciting potential for architectural construction,” says James Coleman MArch ’14, SM ’14, co-founder of the design for fabrication and installation firm SumPoint, and former vice president for innovation and R&D at Zahner, who was not involved with this work. “In my experience producing complex architectural projects, current methods for constructing large-scale curved and doubly curved elements are material intensive and wasteful, and thus deemed impractical for most projects. While the authors’ technology offers novel solutions to the aerospace and automotive industries, I believe their cell-based method can also significantly impact the built environment. The ability to fabricate various plate lattice geometries with specific properties could enable higher performing and more expressive buildings with less material. Goodbye heavy steel and concrete structures, hello lightweight lattices!”

Parra Rubio, Mundilova, and other MIT graduate students also used this technique to create three large-scale folded artworks from aluminum composite, which are on display at the MIT Media Lab. Although each artwork is several meters long, each took only a few hours to fabricate.

“At the end of the day, the artistic piece is only possible because of the math and engineering contributions we are showing in our papers. But we don’t want to ignore the aesthetic power of our work,” Parra Rubio says.

This work was funded, in part, by the Center for Bits and Atoms Research Consortia, an AAUW International Fellowship, and a GWI Fay Weber Grant.