I recently chaired a UJA Tech Talk on “The Future Of Autonomous Cars” with former General Motors Vice-Chairman Steve Girsky. The auto executive enthusiastically shared his vision for the next 15-25 years of driving – a congestion-free world of automated wheeled capsules zipping commuters to and from work.

Girsky stated that connected cars with safety assist (autonomy-lite) features are moving much faster toward mass adoption than fully autonomous vehicles (sans steering wheels and pedals). In his opinion, the largest roadblocks toward a consumer-ready robocar are the current technical inefficiencies of prototypes on the road today, which burn huge amounts of energy supporting enhanced computing and arrays of sensors. This makes the sticker price closer to a 1972 Ferrari than a 2018 Prius.

As main street adoption relies heavily on converting combustion engines to electric at accessible pricing, Girsky’s sentiment was shared by many CES 2018 participants. NVIDIA, the leading chip manufacturer for autonomous vehicles, unveiled its latest technology, Xavier, with auto industry partner Volkswagen in Las Vegas. Xavier promises to be 15 times more energy-efficient than previous chip generations, delivering 30 trillion operations per second while drawing only 30 watts of power.

After the Xavier CES demonstration, Volkswagen CEO Herbert Diess exclaimed, “Autonomous driving, zero-emission mobility, and digital networking are virtually impossible without advances in AI and deep learning. Working with NVIDIA, the leader in AI technology, enables us to take a big step into the future.”

NVIDIA is becoming the industry standard as Volkswagen joins more than 320 companies and organizations working with the chip manufacturer on autonomous vehicles. While NVIDIA is leading the pack, Intel and Qualcomm are not far behind with their low-power solutions. Electric vehicle powerhouse Tesla is developing its own internal chip for the next generation of Autopilot. While these new chips represent a positive evolution in processors, there is still much work to be done, as current self-driving prototypes draw close to 2,500 watts.
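To put those figures side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above (30 trillion operations per second, 30 watts, a 15x efficiency gain, and roughly 2,500 watts for current prototypes). The one-hour drive is a hypothetical duration chosen for illustration, and the comparison is loose: the prototype figure covers a whole sensor and computing stack, not a single chip.

```python
# Figures quoted in the article (vendor claims and estimates, not measurements).
XAVIER_TOPS = 30          # trillion operations per second
XAVIER_WATTS = 30         # claimed power draw of the chip
EFFICIENCY_GAIN = 15      # claimed improvement over previous chip generations
PROTOTYPE_WATTS = 2_500   # approximate draw of current self-driving prototypes

# Assumption for illustration only: a one-hour drive at constant power draw.
DRIVE_HOURS = 1.0


def tops_per_watt(tops: float, watts: float) -> float:
    """Compute efficiency in trillion operations per second per watt."""
    return tops / watts


def energy_kwh(watts: float, hours: float) -> float:
    """Energy in kilowatt-hours consumed at a constant power draw."""
    return watts * hours / 1000


xavier_eff = tops_per_watt(XAVIER_TOPS, XAVIER_WATTS)
implied_prev_eff = xavier_eff / EFFICIENCY_GAIN   # implied by the 15x claim
power_ratio = PROTOTYPE_WATTS / XAVIER_WATTS

print(f"Xavier efficiency:           {xavier_eff:.2f} TOPS/W")
print(f"Implied previous generation: {implied_prev_eff:.3f} TOPS/W")
print(f"Prototype draw vs. Xavier:   {power_ratio:.0f}x")
print(f"Prototype energy per drive:  {energy_kwh(PROTOTYPE_WATTS, DRIVE_HOURS):.2f} kWh")
print(f"Xavier energy per drive:     {energy_kwh(XAVIER_WATTS, DRIVE_HOURS):.2f} kWh")
```

On these numbers, the quoted prototype draw is about 83 times the chip's claimed 30 watts, which gives a sense of the gap Girsky describes.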

Power Consumption a Tradeoff for Self-Driving Cars

The power-consumption problem was highlighted recently in a report published by the University of Michigan Center for Sustainable Systems. Its lead author, Greg Keoleian, questions whether the current autonomous car models will slow the overall adoption of electric vehicles. Keoleian’s team simulated a number of self-driving Ford Fusion models with different-sized computer configurations and engine designs. In sharing his findings, Keoleian said, “We knew there was going to be a tradeoff in terms of the energy and greenhouse gas emissions associated with the equipment and the benefits gained from operational efficiency. I was surprised that it was so significant.”

Keoleian’s conclusions challenged the premise that self-driving cars will accelerate the adoption of renewable energy. For years, advocates of autonomous vehicles have claimed that smart driving will reduce greenhouse gas emissions through the platooning of vehicles on highways and at intersections, the decrease of aerodynamic drag on freeways, and the overall reduction in urban congestion.

However, the University of Michigan tests showed only a “six to nine percent net energy reduction” over the vehicle’s lifecycle when running in autonomous mode. This went down by five percent when using a large Waymo rooftop sensor package (shown below), as it increased the aerodynamic drag. The report also stated that the greatest net efficiencies were in cars with gas drivetrains, which benefit the most from smart driving. Waymo currently uses a hybrid Chrysler Pacifica to run its complex fusion of sensors and processing units.

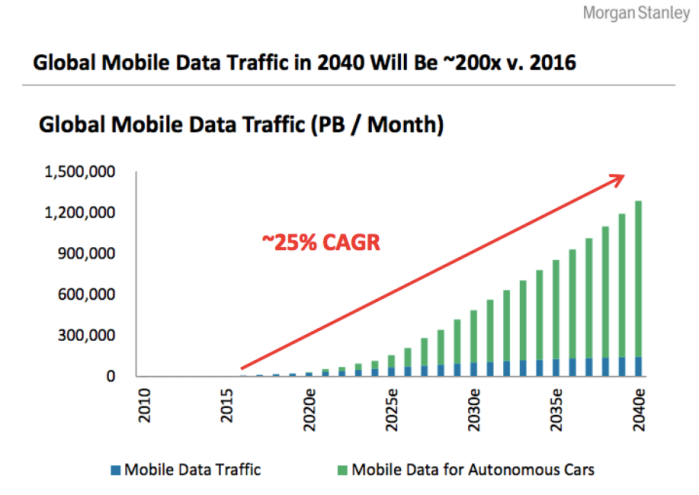

Keoleian told IEEE Spectrum that his modeling actually “overstates real impacts from future autonomous vehicles.” While he anticipates reductions in computing power draw and sensor drag, he is concerned that the impact of 5G communications has not been fully explored. The increased bandwidth will lead to greater data streams and boost power consumption for onboard systems and processors. In addition, he thinks that self-driving systems will lead to greater distances traveled as easier commutes let people move farther away from city centers. Keoleian explains, “There could be a rebound effect. They could induce travel, adding to congestion and fuel use.” Keoleian points to a confusing conclusion by the U.S. National Renewable Energy Laboratory that presents two possible outcomes of full autonomy:

- A reduction in greenhouse emissions by sixty percent with greater ride sharing options

- An increase of two hundred percent with increased driving distances

According to Wilko Stark, Mercedes-Benz’s Vice President of Strategy, it only makes sense for autonomous vehicles to be electric as the increased power requirements will go to the computers instead of the motors. “To put such a system into a combustion-engined car doesn’t make any sense, because the fuel consumption will go up tremendously,” explains Stark.

Girsky shares Stark’s view, as he predicted that the first large scale use cases for autonomy will be fleets of souped-up golf carts running low speed pre-planned shuttle routes. Also on view at CES were complimentary autonomous shared taxi rides around Las Vegas, courtesy of French startup Navya. Today, Navya boasts of 60 operating shuttles in more than 10 cities, including around the University of Michigan.

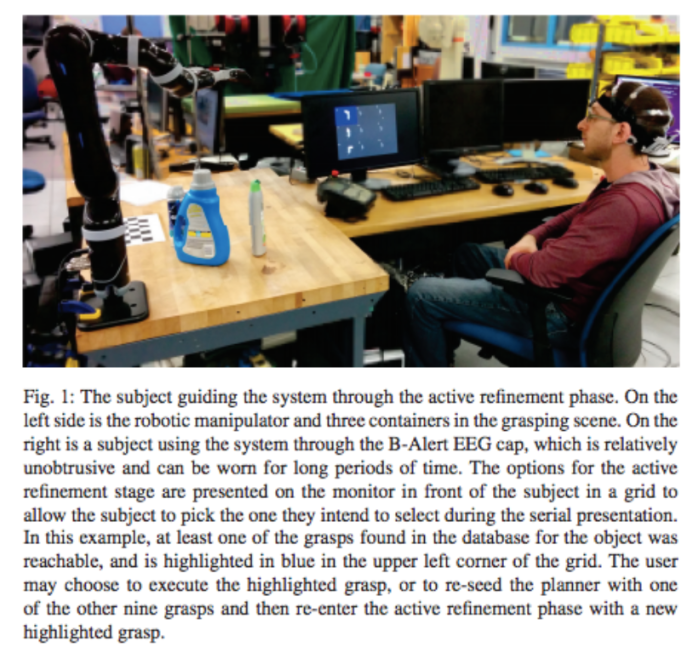

Fully autonomous cars might not be far behind, as Waymo has seen a ninety percent drop in component costs by bringing its sensor development in-house. The autonomous powerhouse recently passed the four-million-mile mark on public roads and plans to ditch its safety driver later this year in its Phoenix, Arizona test program. According to Dmitri Dolgov, Waymo’s Vice President of Engineering, “Sensors on our new generation of vehicles can see farther, sharper, and more accurately than anything available on the market. Instead of taking components that might have been designed for another application, we engineered everything from the ground up, specifically for the task of Level 4 autonomy.”

With increased roadside fatalities and rising CO2 emissions, the world can’t wait too much longer for affordable, energy-efficient autonomous transportation. Girsky and others remind us there is still a long road ahead, as industry experts estimate that the current gas-burning Waymo Chrysler Pacifica cruising around Arizona costs more than one hundred times the sticker price of the minivan. I guess until then there is always Citibike.

Boston Dynamics is not the only quadruped provider; recent upstarts have entered the market, using Raibert’s research as inspiration for their own bionic creatures. Chinese roboticist Xing Wang is unabashed in his admiration for the founder of Boston Dynamics. “Marc Raibert … is my idol,” he said in a recent interview with IEEE Spectrum Magazine. However, his veneration for Raibert has not stopped him from founding a competing startup.

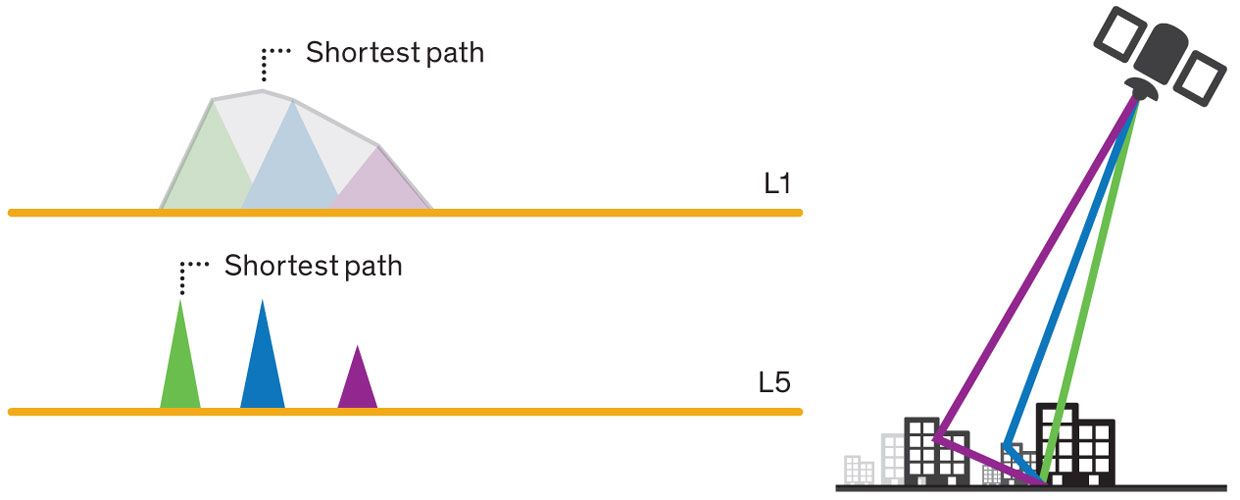

Satellites already provide millions of GPS coordinates for connected systems. However, GPS readings can be off by as much as 5 meters, which in a fully autonomous world could mean the difference between life and death. Chip manufacturer Broadcom aims to reduce the error margin to 30 centimeters. According to a press release this summer, Broadcom’s technology works better in concrete canyons like New York, which have plagued Uber drivers for years with wrong fare destinations. Using new L5 satellite signals, the chips are able to calculate receptions between points at a fast rate with lower power consumption (see diagram). Manuel del Castillo of Broadcom explained, “Up to now there haven’t been enough L5 satellites in orbit.” Currently there are approximately 30 L5 satellites in orbit. However, del Castillo suggests that could be enough to begin shipping the new chip next year: “[Even in a city’s] narrow window of sky you can see six or seven, which is pretty good. So now is the right moment to launch.”