iRobot Corp. unveiled new coding resources through iRobot Education that promote more inclusive, equitable access to STEM education and support social-emotional development. iRobot also updated its iRobot Coding App, adding Python coding support and a new 3D simulator environment for the Root coding robot that is well suited to hybrid and remote learning.

The updates coincide with the annual National Robotics Week, a time when kids, parents and teachers across the nation tap into the excitement of robotics for STEM learning.

Supporting Social and Emotional Learning

The events of the past year changed the traditional learning environment, with students, families and educators adapting to hybrid and remote classrooms. Conversations about diversity, equity and inclusion have also become more prominent in the classroom. To address this, iRobot Education has added social and emotional learning (SEL) lessons to its Learning Library that tie SEL competencies, such as peer interaction and responsible decision-making, into coding and STEM curriculum. These SEL lessons, including The Kind Playground, Seeing the Whole Picture and Navigating Conversations, give educators new resources to help students build emotional intelligence and become responsible global citizens through a STEM lens.

Language translations for the iRobot Coding App

More students can now use the free iRobot Coding App with the addition of Spanish, French, German, Czech and Japanese language support. iRobot’s mobile and web coding app offers three progressively challenging coding levels that advance users from graphical coding to hybrid coding, followed by full-text coding. Users around the world can now translate the graphical and hybrid block coding levels into their preferred language, helping beginners and experts alike hone their language and computational thinking skills.

Introducing Python coding language support

Python, one of the most popular coding languages, is now available to iRobot Coding App users in level 3, full-text coding. This gives students a path to more complex coding experience in a language used widely in both academic and professional settings, preparing the next generation for STEM curricula and careers.

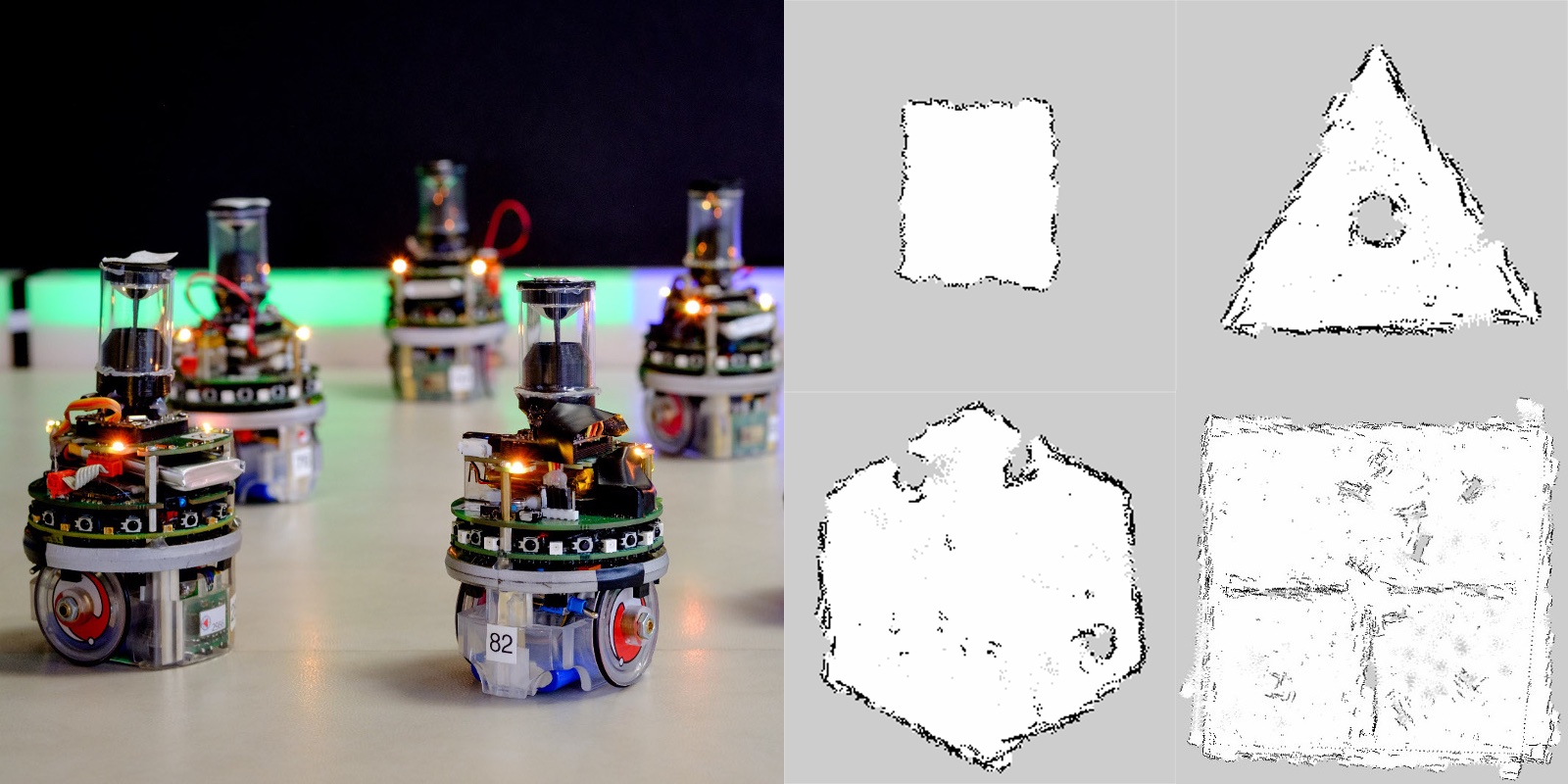

New Root Coding Robot 3D Simulator

Ready to code in 3D? The iRobot Coding App is designed to help kids learn coding at home and in school, and it includes a virtual Root coding robot at no cost. iRobot has updated this virtual Root SimBot with a fun, interactive 3D experience that lets students program and control a Root® coding robot right on the screen.

“The COVID-19 pandemic has had, and continues to have, a tangible impact on students who’ve been learning in remote environments, which is why we identified solutions to nurture and grow SEL skills in students,” said Colin Angle, chairman and CEO of iRobot. “The expansion of these new iRobot Education resources, which are free to anyone, will hopefully facilitate greater inclusivity and accessibility for those who want to grow their coding experience and pursue STEM careers.”

In celebration of National Robotics Week, iRobot Education will also release weekly coding challenges focused on learning how to use code to communicate. Each challenge can be completed online in the iRobot Coding App with the Root SimBot or in-person with a Root coding robot. The weekly challenges build upon each other and include guided questions to facilitate discussions about the coding process, invite reflections, and celebrate new learning.

For more information on iRobot Education, Root coding robots and the iRobot Coding App, visit: https://edu.irobot.com/.

Information about National Robotics Week can be found at www.nationalroboticsweek.org.