Watch these tube-shaped robots roll up stairs, carry carts, and race one another

Winged microchip is smallest-ever human-made flying structure

An autonomous system that can recharge mobile robots without interrupting their missions

Understanding Human-machine Interfaces (HMIs)

NASA robots compete in DARPA’s Subterranean Challenge final

Sense Think Act Podcast: Ryan Gariepy

In this episode, Audrow Nash interviews Ryan Gariepy, Chief Technology Officer of both Clearpath Robotics and OTTO Motors.

Surgeons to reach prostate with robot

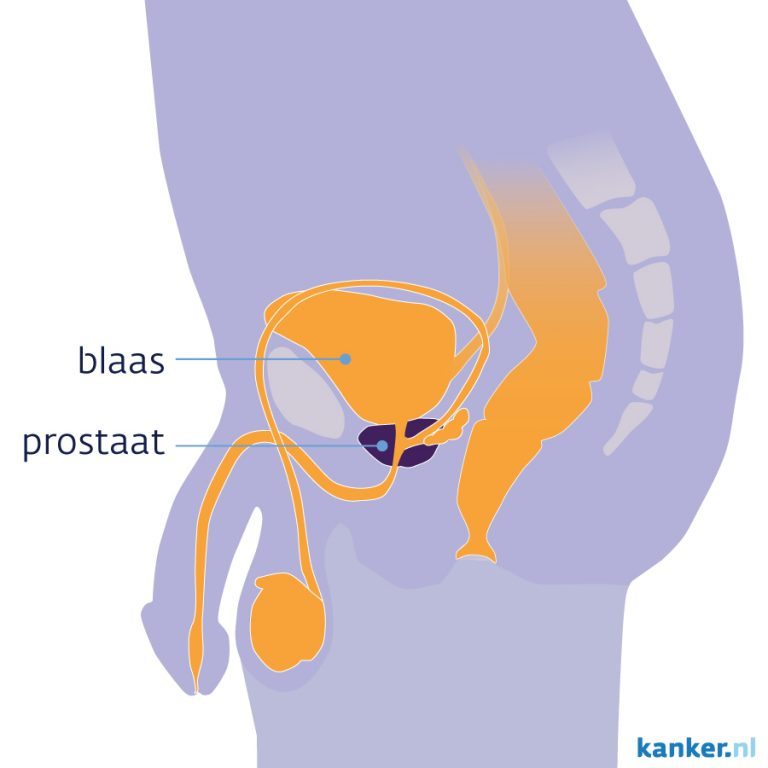

Prostate cancer is the most common form of cancer in men. Every year about 13,000 Dutch men are diagnosed with this disease. According to the Prostate Cancer Foundation, about 1 in 10 men suffers from prostate cancer at some point in their lives.

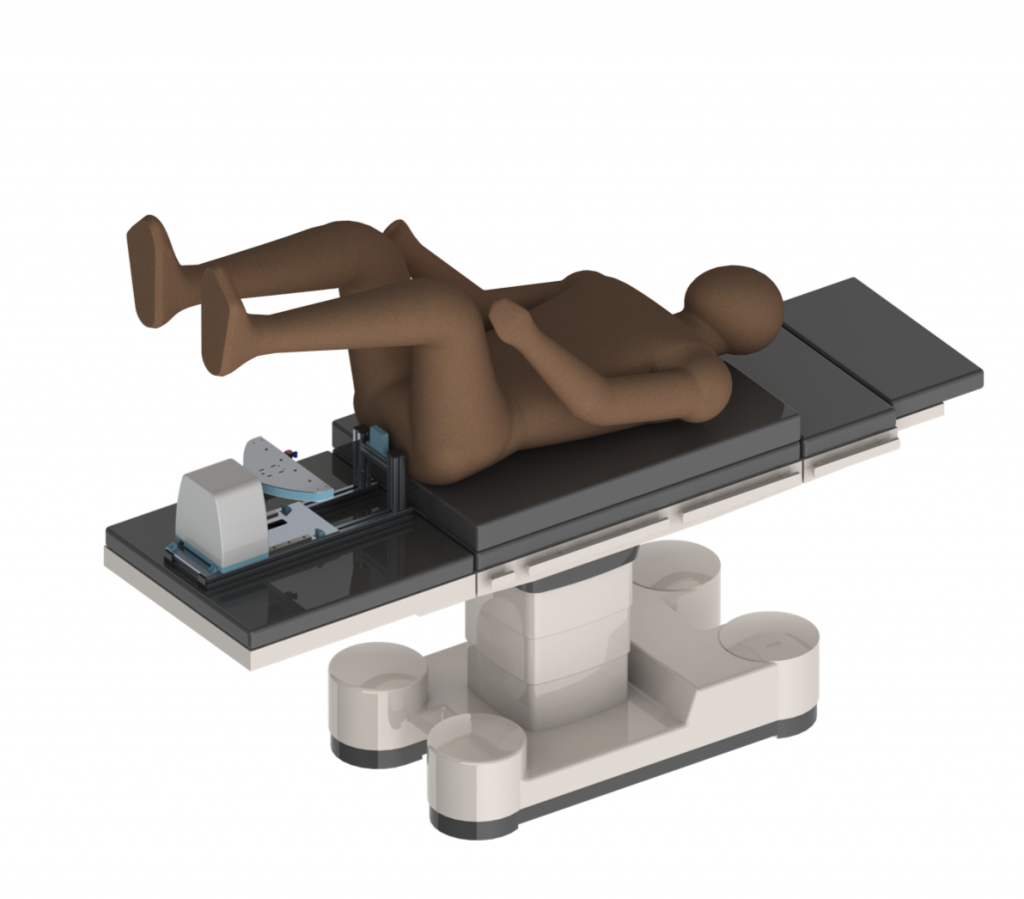

When an all-male TU Delft student team started working with PhD researcher Martijn de Vries to design a robot that can precisely place a radiation source in your body with a steerable needle, it took a while for these statistics to sink in. But once that happened, motivation shot up.

Pepijn van Kampen, mechanical engineering student and minor robotics graduate, says: “The first six months we spent on researching literature. Then we began to actually build our robot. And we started to wonder: are we going to see this robot again when we are 60 years old?”

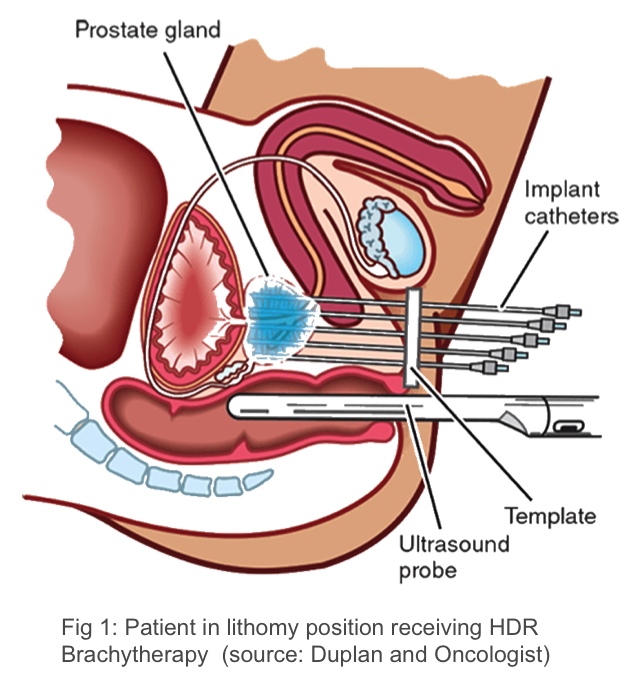

For a tumor located in the prostate, several treatments are available: surgery to remove the prostate, internal radiation therapy (also called brachytherapy or seed implantation), and external radiotherapy, which uses a machine outside the body to direct radiation beams at the cancer.

Brachytherapy places seeds, ribbons, or capsules that contain a radiation source near the tumor. It delivers a high dose of radiation directly to the tumor and helps spare nearby tissues. The treatment is most frequently applied to the prostate. It is also used to treat cancers of the head and neck, breast, cervix and eye.

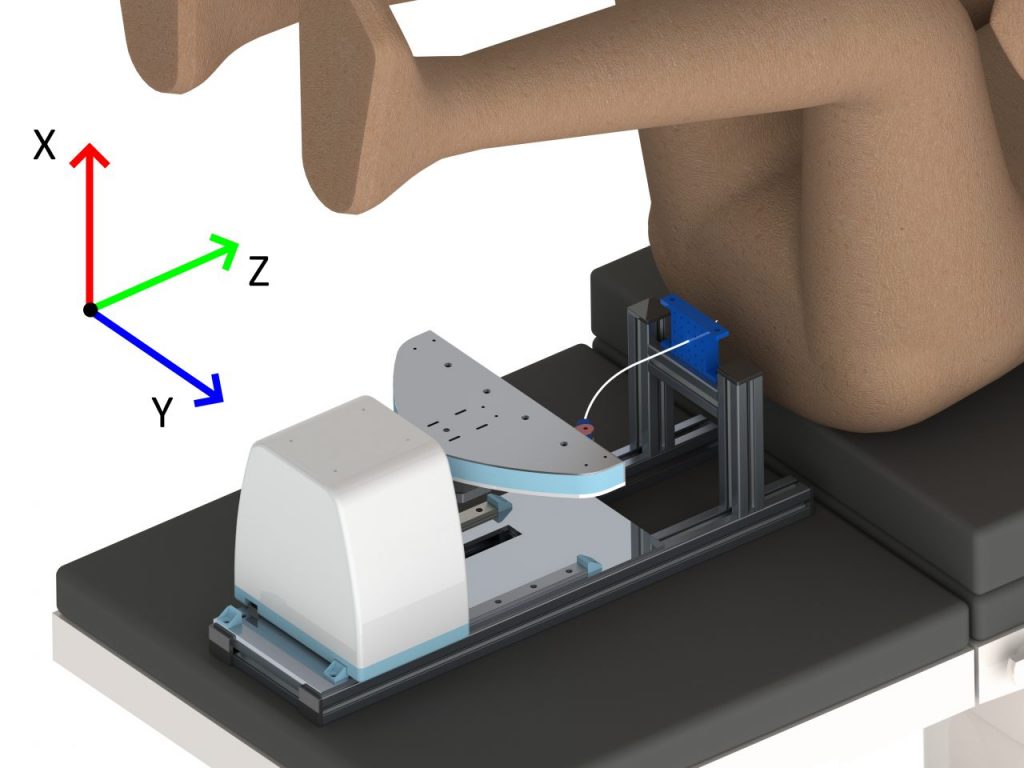

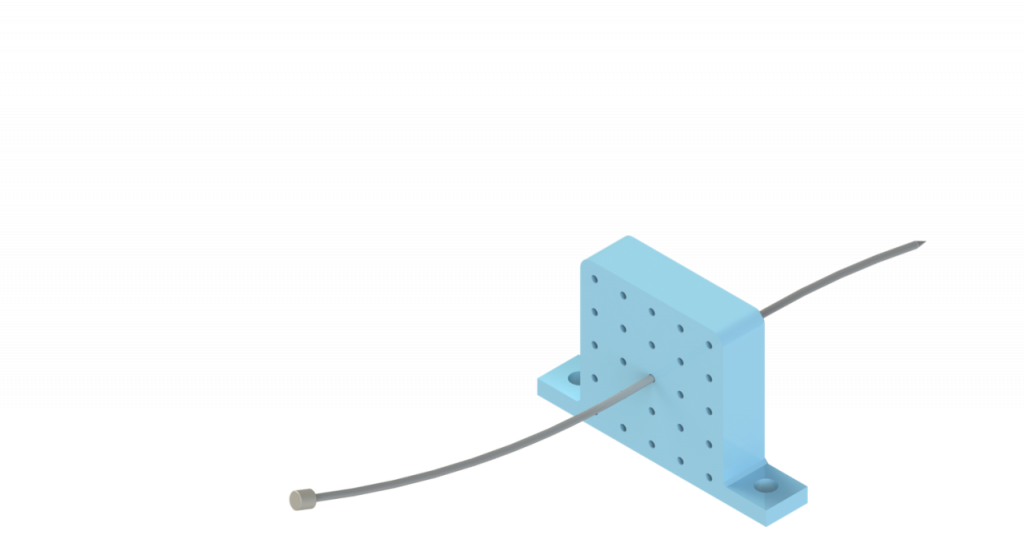

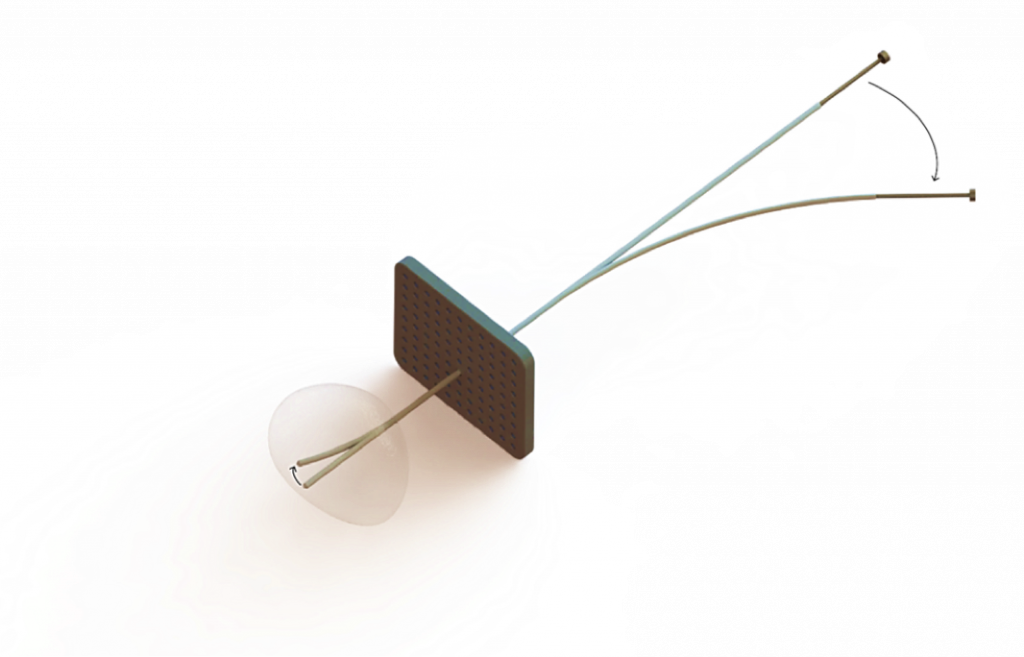

Brachytherapy is put in place through a catheter, which is a small stretchy tube. Current treatment of prostate cancer uses a straight, inflexible needle. This method is not always accurate. “The flexible needle designed by Martijn de Vries has four segments, enabling the surgeon to steer the tip in a range of directions,” says Pepijn van Kampen, of the Steerable Needle Project. “Our robotic system aims to enhance and augment its steering precision, like with power steering in a car. And this works, in principle. Needle buckling is still a challenge.”

The Steerable Needle Project has received high ratings. The student team graduated cum laude from their bachelor's programme. For less needle buckling and more control, they recommend some form of feedback loop in combination with high-end visualisation such as Magnetic Resonance Imaging (MRI). Accurate robotic control may also depend on predictive models to guide the interaction between needle and patient tissue. That is also how a surgeon’s hand-eye coordination works: our human body makes predictions that enable us to intervene in the world with agility and speed, apparently without the cumbersome calculations and feedback loops that most robots still rely on.

Intrigued? Follow Martijn de Vries and his collaborators to see where this project heads next.

The post Surgeons to reach prostate with robot appeared first on RoboHouse.