A new bioinspired earthworm robot for future underground explorations

Author: D.Farina. Credits: Istituto Italiano di Tecnologia – © IIT, all rights reserved

Researchers at Istituto Italiano di Tecnologia (IIT, the Italian Institute of Technology) in Genova have built a new soft robot inspired by the biology of earthworms. It crawls thanks to soft actuators that elongate when air is pumped into them and squeeze when air is drawn out. The prototype has been described in the international journal Scientific Reports, part of the Nature Portfolio, and it is the starting point for developing devices for underground exploration, as well as for search and rescue operations in confined spaces and the exploration of other planets.

Nature offers many examples of animals, such as snakes, earthworms, snails, and caterpillars, which use both the flexibility of their bodies and the ability to generate physical travelling waves along the length of their body to move and explore different environments. Some of their movements are also similar to those of plant roots.

Taking inspiration from nature and, at the same time, revealing new biological phenomena while developing new technologies is the main goal of the BioInspired Soft robotics lab coordinated by Barbara Mazzolai, and this earthworm-like robot is the latest invention coming from her group.

The creation of the earthworm-like robot was made possible through a thorough understanding and application of earthworm locomotion mechanics. Earthworms use alternating contractions of muscle layers to propel themselves both below and above the soil surface by generating retrograde peristaltic waves. The individual segments of their body (metameres) each hold a specific quantity of fluid that controls the internal pressure, allowing a segment to exert forces and perform independent, localized and variable movement patterns.

IIT researchers have studied the morphology of earthworms and have found a way to mimic their muscle movements, their constant volume coelomic chambers and the function of their bristle-like hairs (setae) by creating soft robotic solutions.

The team developed a peristaltic soft actuator (PSA) that implements the antagonistic muscle movements of earthworms; from a neutral position it elongates when air is pumped into it and compresses when air is extracted from it. The entire body of the robotic earthworm is made of five PSA modules in series, connected with interlinks. The current prototype is 45 cm long and weighs 605 grams.
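As a rough illustration of how a retrograde wave could be sequenced across five modules in series, here is a minimal Python sketch. The command names `elongate` and `compress`, the one-module-at-a-time pattern, and the function `retrograde_wave` are all hypothetical simplifications; the article does not describe the team's actual control scheme.

```python
from typing import Iterator, List

N_MODULES = 5  # from the article: five PSA modules in series


def retrograde_wave(n_modules: int = N_MODULES) -> Iterator[List[str]]:
    """Yield one command per module for each step of a single wave.

    Module 0 is the head. Exactly one module is elongated per step
    (hypothetical simplification), and the elongated position moves
    from head to tail, i.e. opposite to the direction of travel.
    """
    for active in range(n_modules):
        yield [
            "elongate" if i == active else "compress"
            for i in range(n_modules)
        ]


# Print the command table for one full wave.
for step, commands in enumerate(retrograde_wave()):
    print(step, commands)
```

In a real system each command would map to a positive or negative pressure set-point for the corresponding module, and the step timing would need to be tuned experimentally.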

Each actuator has an elastomeric skin that encapsulates a known amount of fluid, thus mimicking the constant volume of internal coelomic fluid in earthworms. The earthworm segment becomes shorter longitudinally and wider circumferentially and exerts radial forces as the longitudinal muscles of an individual constant volume chamber contract. Antagonistically, the segment becomes longer along the anterior–posterior axis and thinner circumferentially with the contraction of circumferential muscles, resulting in penetration forces along the axis.
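The trade-off between length and width at constant volume can be made concrete with an idealized model. The sketch below treats a segment as a perfect cylinder, which is an assumption and not the actuator's real geometry, and uses made-up resting dimensions purely for illustration.

```python
import math


def radius_at_length(r0_mm: float, l0_mm: float, l_mm: float) -> float:
    """Radius of an ideal cylinder after its length changes at constant volume.

    V = pi * r^2 * L is held fixed, so r = r0 * sqrt(L0 / L).
    """
    if r0_mm <= 0 or l0_mm <= 0 or l_mm <= 0:
        raise ValueError("dimensions must be positive")
    return r0_mm * math.sqrt(l0_mm / l_mm)


# Hypothetical resting dimensions, chosen only for illustration.
R0_MM, L0_MM = 20.0, 80.0

shorter = radius_at_length(R0_MM, L0_MM, L0_MM - 10.0)  # shortens, so it widens
longer = radius_at_length(R0_MM, L0_MM, L0_MM + 10.0)   # lengthens, so it thins
print(f"shortened: r = {shorter:.2f} mm, lengthened: r = {longer:.2f} mm")
```

The shortened segment comes out wider than its resting radius and the lengthened one thinner, which matches the qualitative behaviour described for a constant-volume chamber.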

Each actuator achieves a maximum elongation of 10.97 mm at 1 bar of positive pressure and a maximum compression of 11.13 mm at 0.5 bar of negative pressure. The design is unique in its ability to generate both longitudinal and radial forces in a single actuator module.

In order to propel the robot on a planar surface, small passive friction pads inspired by earthworms’ setae were attached to the ventral surface of the robot. With the pads, the robot demonstrated improved locomotion, reaching a speed of 1.35 mm/s.
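To put the reported figures in perspective, the short Python calculation below derives a few quantities from them. It assumes the elongation and compression are both measured from the same neutral length, and that the 1.35 mm/s speed is sustained; neither is stated explicitly in the article.

```python
# Figures reported in the article.
ELONGATION_MM = 10.97    # per actuator, at 1 bar positive pressure
COMPRESSION_MM = 11.13   # per actuator, at 0.5 bar negative pressure
BODY_LENGTH_MM = 450.0   # prototype length (45 cm)
SPEED_MM_S = 1.35        # crawling speed on a planar surface

# Peak-to-peak stroke of one actuator (assumes a shared neutral length).
stroke_mm = ELONGATION_MM + COMPRESSION_MM

# Time needed to travel one body length at the reported speed.
seconds_per_body_length = BODY_LENGTH_MM / SPEED_MM_S

# Speed expressed in body lengths per minute.
body_lengths_per_minute = SPEED_MM_S * 60.0 / BODY_LENGTH_MM

print(f"stroke per actuator: {stroke_mm:.2f} mm")
print(f"time per body length: {seconds_per_body_length:.1f} s")
print(f"speed: {body_lengths_per_minute:.2f} body lengths/min")
```

Under these assumptions each actuator has about 22 mm of total stroke, and the robot covers its own length in roughly five and a half minutes.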

This study not only proposes a new method for developing a peristaltic earthworm-like soft robot but also provides a deeper understanding of locomotion from a bioinspired perspective in different environments. The potential applications for this technology are vast, including underground exploration, excavation, search and rescue operations in subterranean environments and the exploration of other planets. This bioinspired burrowing soft robot is a significant step forward in the field of soft robotics and opens the door for further advancements in the future.

What is the hype cycle for robotics?

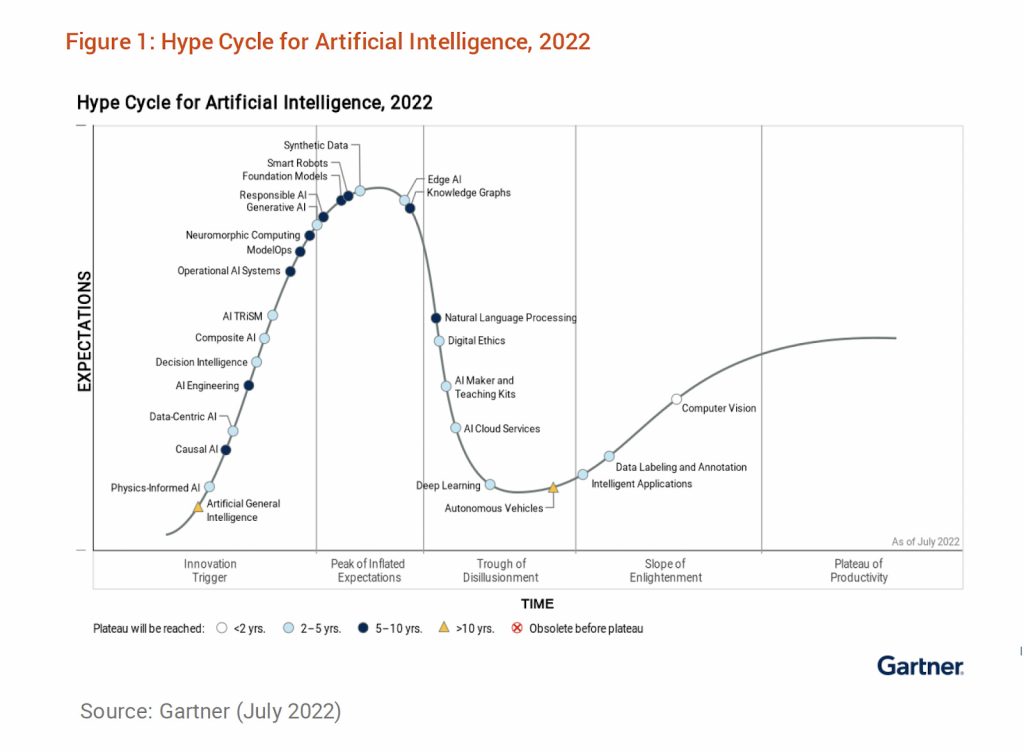

We’ve all seen or heard of the Hype Cycle. It’s a visual depiction of the lifecycle stages a technology goes through from initial development to commercial maturity. It’s a useful way to track which technologies are compatible with your organization’s needs. There are five stages of the Hype Cycle, which take us from the initial excitement trigger to the peak of inflated expectations, followed by disillusionment. It’s only as a product moves into more tangible market use, sometimes called ‘The Slope of Enlightenment’, that we start to reach full commercial viability.

Working with so many robotics startups, I see this stage as the transition into revenue generation in more than pilot use cases. This is the point where a startup no longer needs to nurture each customer deployment but can produce reference use cases and start to reliably scale. I think the Hype Cycle is a useful model, but Gartner’s classifications don’t do robotics justice.

For example, this recent Gartner chart puts Smart Robots at the top of the hype cycle. Robotics is a very fast moving field at the moment. The majority of new robotics companies are less than 5-10 years old. From the perspective of the end user, it can be very difficult to know when a company is moving out of the hype cycle and into commercial maturity because there aren’t many deployments or much marketing at first, particularly compared to the media coverage of companies at the peak of the hype cycle.

So, here’s where I think robotics technologies really fit on the Gartner Hype Cycle:

Innovation trigger

- Voice interfaces for practical applications of robots

- Foundational models applied to robotics

Peak of inflated expectations

- Large language models, although likely to progress very quickly

- Humanoids

Trough of disillusionment

- Quadrupeds

- Cobots

- Full self-driving cars and trucks

- Powered clothing/Exoskeletons

Slope of enlightenment

- Teleoperation

- Cloud fleet management

- Drones for critical delivery to remote locations

- Drones for civilian surveillance

- Waste recycling

- Warehouse robotics (pick and place)

- Hospital logistics

- Education robots

- Food preparation

- Rehabilitation

- AMRs in other industries

Plateau of productivity

- Robot vacuum cleaners (domestic and commercial)

- Surgical Robots

- Warehouse robotics (AMRs in particular)

- Factory automation (robot arms)

- 3D printing

- ROS

- Simulation

AI, in the form of large language models (e.g. ChatGPT, GPT-3 and Bard), is at peak hype, as are humanoid robots. Perhaps the peak of that hype is the idea of RoboGPT, or using LLMs to interpret human commands to robots. Just in the last year, four or five new humanoid robot companies have come out of stealth, from a field that includes Figure, Teslabot, Aeolus, Giant AI, Agility and Halodi. So far only Halodi has a commercial deployment, doing internal security augmentation for ADT.

Cobots are still in the Trough of Disillusionment, in spite of Universal Robots selling 50,000+ arms. People buy robot arms from companies like Universal Robots primarily because they are affordable, easy to set up, capable of industrial precision, and do not require safety guarding hardware. The full promise of collaborative robots has had trouble landing with end users. We don’t really deploy collaborative robots engaged in frequent hand-offs to humans. Perhaps we need more dual-armed cobots with better human-robot interaction before we really explore the possibilities.

Interestingly, the Trough of Disillusionment generates a lot of media coverage, but it’s usually negative. Self-driving cars and trucks are definitely at the bottom of the trough, whereas powered clothing, exoskeletons and quadrupeds are a little harder to place.

AMRs, or Autonomous Mobile Robots, are a form of self-driving cargo that is much more successful than self-driving cars or trucks traveling on public roads. AMRs are primarily deployed in warehouses, hospitals, factories, farms, retail facilities, airports and even on the sidewalk. Behind every successful robot deployment there is probably a cloud fleet management provider or a teleoperation provider, or monitoring service.

Finally, the Plateau of Productivity is where the world’s most popular robots are. The Roomba and other home robot vacuum cleaners are at peak popularity. Before Amazon announced its agreement to acquire the company, iRobot had sold more than 40 million Roombas and captured 20% of the domestic vacuum cleaner market. Now commercial cleaning fleets are switching to autonomy as well.

And of course Productivity (not Hype) is also where the workhorse industrial robot arms live, with ever-increasing deployments worldwide. The International Federation of Robotics (IFR) reports that more than half a million new industrial robot arms were deployed in 2021, up 31% from 2020. This figure has been rising pretty steadily since I first started tracking robotics back in 2010.

What does your robotics hype cycle look like? What technology would you like me to add to this chart? Contact andra@svrobo.org