
To swim like a tuna, robotic fish need to change how stiff their tails are in real time

By Daniel Quinn

Underwater vehicles haven’t changed much since the submarines of World War II. They’re rigid, fairly boxy and use propellers to move. And whether they are large manned vessels or small robots, most underwater vehicles have one cruising speed where they are most energy efficient.

Fish take a very different approach to moving through water: Their bodies and fins are very flexible, and this flexibility allows them to interact with water more efficiently than rigid machines. Researchers have been designing and building flexible fishlike robots for years, but they still trail far behind real fish in terms of efficiency.

What’s missing?

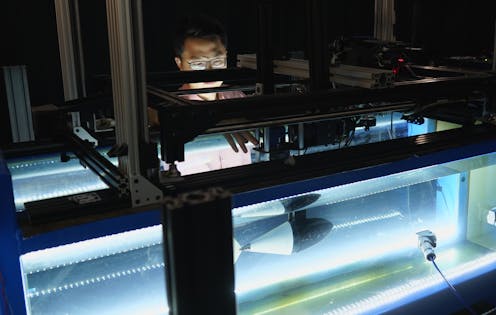

I am an engineer who studies fluid dynamics. My labmates and I wondered whether something in particular about the flexibility of fish tails allows fish to be so fast and efficient in the water. So we created a model and built a robot to study the effect of stiffness on swimming efficiency. We found that fish swim so efficiently over a wide range of speeds because they can change how rigid or flexible their tails are in real time.

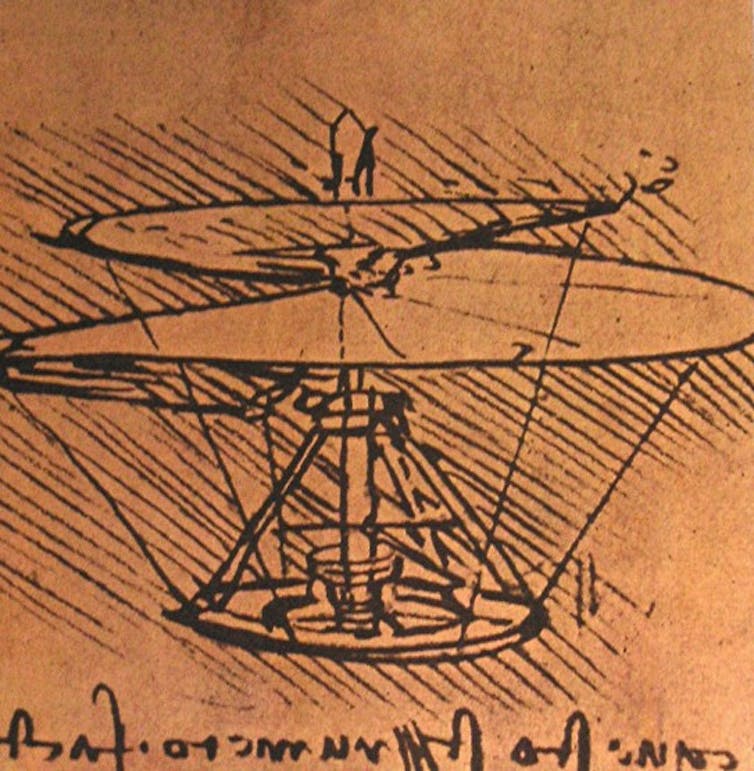

Leonardo da Vinci/Wikimedia Commons

Why are people still using propellers?

Fluid dynamics applies to both liquids and gases. Humans have been using rotating rigid objects to move vehicles for hundreds of years: Leonardo da Vinci incorporated the concept into his helicopter designs, and the first propeller-driven boats were built in the 1830s. Propellers are easy to make, and they work just fine at their designed cruise speed.

It has only been in the past couple of decades that advances in soft robotics have made actively controlled flexible components a reality. Now, marine roboticists are turning to flexible fish and their amazing swimming abilities for inspiration.

When engineers like me talk about flexibility in a swimming robot, we are usually referring to how stiff the tail is. The tail is the entire rear half of a fish's body that moves back and forth when it swims.

Consider tuna, which can swim up to 50 mph and are extremely energy efficient over a wide range of speeds.

The tricky part about copying the biomechanics of fish is that biologists don’t know how flexible they are in the real world. If you want to know how flexible a rubber band is, you simply pull on it. If you pull on a fish’s tail, the stiffness depends on how much the fish is tensing its various muscles.

The best that researchers can do to estimate flexibility is film a swimming fish and measure how its body shape changes.
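As a rough illustration of "measuring how body shape changes": suppose the fish's midline has already been digitized from each video frame as a list of (x, y) points (an assumption; the tracking step is not shown). Local bending can then be quantified as the curvature at each point. This minimal sketch uses the three-point (Menger) curvature; the function names are hypothetical and not taken from the study.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def menger_curvature(p0: Point, p1: Point, p2: Point) -> float:
    """Signed curvature (1/length) of the circle through three points; 0 if degenerate."""
    a = math.dist(p0, p1)
    b = math.dist(p1, p2)
    c = math.dist(p0, p2)
    if a == 0 or b == 0 or c == 0:
        return 0.0
    # 2D cross product = twice the signed triangle area (positive if counterclockwise).
    cross = (p1[0] - p0[0]) * (p2[1] - p0[1]) - (p1[1] - p0[1]) * (p2[0] - p0[0])
    # Curvature = 4 * area / (a * b * c) = 2 * cross / (a * b * c).
    return 2.0 * cross / (a * b * c)


def midline_curvature(midline: List[Point]) -> List[float]:
    """Curvature at each interior point of one digitized midline (hypothetical helper)."""
    return [
        menger_curvature(midline[i - 1], midline[i], midline[i + 1])
        for i in range(1, len(midline) - 1)
    ]
```

Applied frame by frame, these curvature profiles show how much and where the body bends. As the text notes, though, bending alone does not reveal how much of it comes from muscle tension versus passive flexibility.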

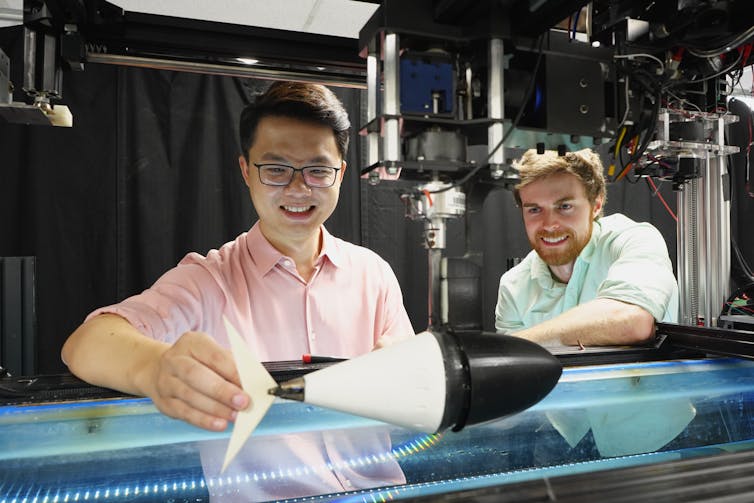

Qiang Zhong and Daniel Quinn, CC BY-ND

Searching for answers in the math

Researchers have built dozens of robots in an attempt to mimic the flexibility and swimming patterns of tuna and other fish, but none have matched the performance of the real things.

In my lab at the University of Virginia, my colleagues and I ran into the same questions as others: How flexible should our robot be? And if there’s no one best flexibility, how should our robot change its stiffness as it swims?

We looked for the answer in an old NASA paper about vibrating airplane wings. The report explains that when a plane's wings vibrate, the vibrations change the amount of lift the wings produce. Since fish fins and airplane wings have similar shapes, the same math works well to model how much thrust fish tails produce as they flap back and forth.
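Classical unsteady wing theories of this kind (Theodorsen's flutter theory is the best-known example; the text does not name the specific report) characterize how strongly oscillation alters lift using a dimensionless reduced frequency, k = π f c / U, where f is the flapping frequency, c the chord and U the flow speed. A minimal sketch; the tail dimensions in the usage lines are hypothetical.

```python
import math


def reduced_frequency(flap_hz: float, chord_m: float, speed_m_s: float) -> float:
    """Reduced frequency k = pi * f * c / U (equivalently omega * b / U with semichord b = c / 2)."""
    if speed_m_s <= 0:
        raise ValueError("speed must be positive")
    return math.pi * flap_hz * chord_m / speed_m_s


# Hypothetical tail: 0.1 m chord flapping at 2 Hz, at three swimming speeds.
for u in (0.5, 1.0, 2.0):
    print(f"U = {u} m/s -> k = {reduced_frequency(2.0, 0.1, u):.3f}")
```

Values of k near zero mean the flow is nearly steady; larger values mean the wake and added-mass effects that these theories capture matter more.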

Using the old wing theory, postdoctoral researcher Qiang Zhong and I created a mathematical model of a swimming fish and added a spring and pulley to the tail to represent the effects of a tensing muscle. We discovered a surprisingly simple hypothesis hiding in the equations. To maximize efficiency, muscle tension needs to increase as the square of swimming speed. So, if swimming speed doubles, stiffness needs to increase by a factor of four. To swim three times faster while maintaining high efficiency, a fish or fish-like robot needs to pull on its tendon about nine times harder.
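The quadratic rule can be written as k(U) = k_ref · (U / U_ref)², where (U_ref, k_ref) is a calibration point at which the tail is already tuned for peak efficiency. The text gives no constant of proportionality, so the calibration point below is a hypothetical placeholder that would depend on the fish or robot. A minimal sketch that reproduces the text's arithmetic:

```python
def required_stiffness(speed: float, ref_speed: float, ref_stiffness: float) -> float:
    """Stiffness that keeps efficiency high if stiffness must scale with speed squared.

    ref_speed / ref_stiffness: hypothetical calibration point where the tail is tuned.
    """
    if ref_speed <= 0:
        raise ValueError("ref_speed must be positive")
    return ref_stiffness * (speed / ref_speed) ** 2


# Speed multiples relative to the calibration speed (both normalized to 1).
for factor in (1, 2, 3):
    print(f"{factor}x speed -> {required_stiffness(factor, 1.0, 1.0):g}x stiffness")
```

This prints 1x, 4x and 9x, matching the doubling and tripling examples. One intuition, offered as an interpretation rather than as the authors' stated derivation: fluid forces on a flapping fin scale with dynamic pressure, which grows as U², so keeping the tail's bending the same relative to those forces calls for stiffness that also grows as U².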

To confirm our theory, we simply added an artificial tendon to one of our tunalike robots and then programmed the robot to vary its tail stiffness based on speed. We then put our new robot into our test tank and ran it through various “missions” – like a 200-meter sprint where it had to dodge simulated obstacles. With the ability to vary its tail’s flexibility, the robot used about half as much energy on average across a wide range of speeds compared to robots with a single stiffness.
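In practice, "vary its tail stiffness based on speed" amounts to a gain schedule: look up the commanded speed and send the corresponding tendon tension. The sketch below is hypothetical, since the text does not describe the robot's actual controller. It applies the quadratic rule to tendon tension, uses placeholder calibration values, and adds a saturation step because any real actuator has a maximum pull.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TendonController:
    """Hypothetical speed-scheduled tendon controller (illustrative only)."""

    ref_speed: float    # m/s, calibration speed (assumed)
    ref_tension: float  # N, tension tuned for peak efficiency at ref_speed (assumed)
    max_tension: float  # N, actuator limit (assumed)

    def tension_for(self, speed: float) -> float:
        # Quadratic speed schedule, clamped to the most the actuator can pull.
        target = self.ref_tension * (speed / self.ref_speed) ** 2
        return min(target, self.max_tension)

    def schedule(self, speeds: List[float]) -> List[float]:
        # One tension command per segment of a mission speed profile.
        return [self.tension_for(u) for u in speeds]


ctrl = TendonController(ref_speed=0.5, ref_tension=2.0, max_tension=12.0)
print(ctrl.schedule([0.25, 0.5, 1.0, 1.5]))  # [0.5, 2.0, 8.0, 12.0]
```

The last command saturates: with these placeholder numbers, above roughly 1.22 m/s the tail could no longer stiffen enough to follow the quadratic curve, so a real robot's efficient speed range would be bounded by its actuator.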

Yicong Fu, CC BY-ND

Why it matters

While it is great to build one excellent robot, the thing my colleagues and I are most excited about is that our model is adaptable. We can tweak it based on body size, swimming style or even fluid type. It can be applied to animals and machines whether they are big or small, swimmers or flyers.

For example, our model suggests that dolphins have a lot to gain from the ability to vary their tails’ stiffness, whereas goldfish don’t get much benefit due to their body size, body shape and swimming style.

The model has applications for robotic design, too. Higher energy efficiency when swimming or flying, which also means quieter robots, would enable radically new missions for vehicles and robots that currently have only one efficient cruising speed. In the short term, this could help biologists study riverbeds and coral reefs more easily, enable researchers to track wind and ocean currents at unprecedented scales or allow search and rescue teams to operate farther and longer.

In the long term, I hope our research could inspire new designs for submarines and airplanes. Humans have only been working on swimming and flying machines for a couple of centuries, while animals have been perfecting their skills for millions of years. There's no doubt there is still a lot to learn from them.


Daniel Quinn receives funding from The National Science Foundation and The Office of Naval Research.

This article appeared in The Conversation.


Robert Wood’s Plenary Talk: Soft robotics for delicate and dexterous manipulation

Robotic grasping and manipulation has historically been dominated by rigid grippers, force/form closure constraints, and extensive grasp trajectory planning. The advent of soft robotics offers new avenues to diverge from this paradigm by using strategic compliance to passively conform to grasped objects in the absence of active control, and with minimal chance of damage to the object or surrounding environment. However, while the reduced emphasis on sensing, planning, and control complexity simplifies grasping and manipulation tasks, precision and dexterity are often lost.
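To see why compliance reduces the chance of damage without active control, model each finger as a linear spring (Hooke's law): the force on the object is the finger's stiffness times how far the commanded position overshoots the object's surface. This is a deliberately simplified, hypothetical illustration, not a model from the talk, and the stiffness values are placeholders.

```python
def contact_force(stiffness_n_per_m: float, position_error_m: float) -> float:
    """Contact force from a finger modeled as a linear spring (Hooke's law).

    A positive position_error means the commanded finger position lies inside
    the object's surface, so the finger presses on it; otherwise there is no contact.
    """
    return stiffness_n_per_m * max(position_error_m, 0.0)


# Hypothetical stiffnesses: a stiff metal finger vs. a soft elastomer finger.
RIGID_K = 50_000.0  # N/m (assumed)
SOFT_K = 200.0      # N/m (assumed)

error = 0.005  # 5 mm overshoot from an imperfect estimate of the object's position
print(f"rigid: {contact_force(RIGID_K, error):.1f} N, soft: {contact_force(SOFT_K, error):.1f} N")
```

The same 5 mm error that the soft finger absorbs with about 1 N produces about 250 N with the stiff finger. The flip side is the loss of precision the abstract mentions: a soft finger also deflects under task loads, so the object's final pose is less certain.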

This talk will discuss efforts to increase the robustness of soft grasping and the dexterity of soft robotic manipulators, with particular emphasis on grasping tasks that are challenging for more traditional robot hands. These include compliant objects, thin flexible sheets, and delicate organisms. Examples will be drawn from manipulation of everyday objects and field studies of deep-sea sampling using soft end effectors.

Bio: Robert Wood is the Charles River Professor of Engineering and Applied Sciences in the Harvard John A. Paulson School of Engineering and Applied Sciences and a National Geographic Explorer. Prof. Wood completed his M.S. and Ph.D. degrees in the Dept. of Electrical Engineering and Computer Sciences at the University of California, Berkeley. His current research interests include new micro- and meso-scale manufacturing techniques, bioinspired microrobots, biomedical microrobots, control of sensor-limited and computation-limited systems, active soft materials, wearable robots, and soft grasping and manipulation. He is the winner of multiple awards for his work, including the DARPA Young Faculty Award, NSF Career Award, ONR Young Investigator Award, Air Force Young Investigator Award, Technology Review's TR35, and multiple best paper awards. In 2010 Wood received the Presidential Early Career Award for Scientists and Engineers from President Obama for his work in microrobotics. In 2012 he was selected for the Alan T. Waterman award, the National Science Foundation's most prestigious early career award. In 2014 he was named one of National Geographic's "Emerging Explorers", and in 2018 he was an inaugural recipient of the Max Planck-Humboldt Medal. Wood's group is also dedicated to STEM education, using novel robots to motivate young students to pursue careers in science and engineering.

Sense Think Act Podcast: Dave Coleman

In this episode, Audrow Nash interviews Dave Coleman, Chief Executive Officer at PickNik Robotics. Dave gives a high-level overview of what MoveIt is and which problems it helps roboticists solve. They also discuss supervised autonomy, including a collaboration with NASA and MoveIt Studio, and Dave talks about MoveIt 3.0.

Episode links

- Download the episode

- Dave Coleman’s LinkedIn profile

- PickNik’s website

- MoveIt’s website

- MoveIt Studio’s website



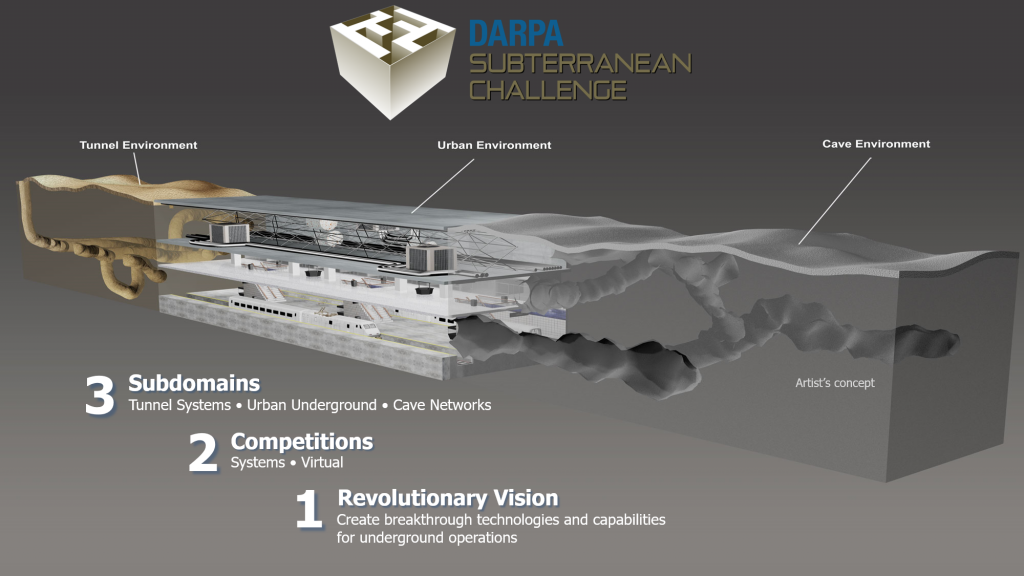

Team CERBERUS wins the DARPA Subterranean Challenge

The DARPA Subterranean Challenge aimed to develop novel approaches to rapidly map, explore and search underground environments in time-sensitive operations critical to both the civilian and military domains. For the Final Event, DARPA designed an environment with branches representing all three circuit environments: the "Tunnel Circuit", the "Urban Circuit" and the "Cave Circuit". Robots had to explore, search for objects of interest ("artifacts"), and accurately report their locations within underground tunnels, subway-like infrastructure, and natural caves and passages with extremely confined geometries, rough terrain, and severe visual degradation (including dense smoke).

Team CERBERUS deployed a diverse set of robots, with four ANYmal C legged robots as its primary systems. In the Prize Round of the Final Event, the team won the competition with 23 points, correctly detecting and localizing 23 of the 40 artifacts DARPA had placed inside the environment. The second-place team, "CSIRO Data61", also scored 23 points but reported its last artifact to DARPA slightly later, so the tiebreaker went in favor of Team CERBERUS. The third-place team, "MARBLE", scored 18 points.
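The ranking rule described here (score first, then the timing of the last artifact report as the tiebreaker) can be expressed as a two-key sort. A minimal sketch: the team names and times are hypothetical placeholders, not the official Final Event data, and DARPA's full rulebook may include further tiebreak criteria that are not modeled here.

```python
from typing import List, NamedTuple


class Result(NamedTuple):
    team: str
    score: int              # artifacts correctly detected and localized
    last_report_min: float  # time of the last scored artifact report, minutes into the run


def rank(results: List[Result]) -> List[Result]:
    # Higher score first; among equal scores, the team whose last scored
    # artifact was reported earlier wins the tiebreak.
    return sorted(results, key=lambda r: (-r.score, r.last_report_min))


# Hypothetical teams and times, for illustration only.
field = [
    Result("Team B", 23, 59.5),
    Result("Team C", 18, 40.0),
    Result("Team A", 23, 58.0),
]
for place, r in enumerate(rank(field), start=1):
    print(place, r.team, r.score)
```

Negating the score lets a single ascending sort handle both keys: the highest score comes first, and for tied scores the earlier (smaller) report time wins.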

The DARPA Subterranean Challenge was one of the rare global robotics competitions pushing the frontiers of resilient autonomy, calling on teams to develop novel and innovative solutions that can help critical sectors such as search and rescue, as well as industries such as mining. The level of Team CERBERUS's achievement is best understood by looking at all the competitors in the "Systems Competition" of the Final Event. The participating teams included members from top international institutions:

- CERBERUS (Score = 23): University of Nevada, Reno, ETH Zurich, NTNU, University of California Berkeley, Oxford Robotics Institute, Flyability, Sierra Nevada Corporation

- CSIRO Data61 (Score = 23): CSIRO, Emesent, Georgia Institute of Technology

- MARBLE (Score = 18): University of Colorado Boulder, University of Colorado Denver, Scientific Systems Company, University of California Santa Cruz

- Explorer (Score = 17): Carnegie Mellon University, Oregon State University

- CoSTAR (Score = 13): NASA Jet Propulsion Laboratory, California Institute of Technology, MIT, KAIST, Luleå University of Technology

- CTU-CRAS-NORLAB (Score = 7): Czech Technical University, Université Laval

- Coordinated Robotics (Score = 2): Coordinated Robotics, California State University Channel Islands, Oke Onwuka, Sequoia Middle School

- Robotika (Score = 2): Robotika International, Robotika.cz, Czech University of Life Sciences, Centre for Field Robotics, Cogito Team

We congratulate all members of the team and we are proud of this incredible and historic achievement! Most importantly, we are excited to be part of this amazing community pushing the frontier of resilient robotic autonomy in extreme environments.