Researchers leveraging AI to train (robotic) dogs to respond to their masters

5 Ways AI Is Revolutionizing the Automotive Industry

The automobile has profoundly reshaped American culture for over a century. Innovations in automotive technology have allowed people to travel farther, faster, and on less fuel than previous generations. In fact, the […]

The post 5 Ways AI Is Revolutionizing the Automotive Industry appeared first on TechSpective.

New video test for Parkinson’s uses AI to track how the disease is progressing

Fast Hexapod Improves Aircraft Manufacturing Process

Development of ‘living robots’ needs regulation and public debate

Bio-inspired lizard robot reveals what’s needed for optimum locomotion

Bio-hybrid robotics need regulation and public debate, say researchers

Won’t Get Fooled Again

ChatGPT-Generated Exam Answers Dupe Profs

Looks like college take-home tests are destined to suffer the same fate as the dodo.

Instructors at a U.K. university learned as much after a slew of take-home exams with answers generated by ChatGPT passed with flying colors, all while arousing virtually no suspicion of cheating.

Observes writer Richard Adams: “Researchers at the University of Reading fooled their own professors by secretly submitting AI-generated exam answers that went undetected and got better grades than real students.

“The university’s markers – who were not told about the project – flagged only one of the 33 entries.”

Observes Karen Yeung, a professor at the University of Birmingham: “The publication of this real-world quality assurance test demonstrates very clearly that the generative AI tools — freely and openly available — enable students to cheat take-home examinations without difficulty.”

In other news and analysis on AI writing:

*In-Depth Guide: Lovo AI Text-to-Voice: Writers looking for a reliable text-to-voice solution may want to give Lovo AI a whirl, according to Sharqa Hameed.

Hameed’s guide on the product is extremely valuable in that it offers scores of step-by-step screenshots that truly give you a detailed look at how Lovo AI works.

Hameed’s verdict on the app: “Overall, I’d rate it 4 out of 5.

“It offers various valuable features, including Genny, Auto Subtitle Generator, Text to Speech, Online Video Editor, AI Art Generator, AI Writer and more.

“However, its free version limits you to convert up to 20 minutes of text to audio.”

*Free-for-All: Open Source Promises Wide Array of AI Writing Tools: Facebook founder Mark Zuckerberg predicts that writers and others will continue to have a number of AI choices as the tech grows ever more sophisticated.

A key player in AI writing/chat tech, Zuckerberg's Meta has released its AI code as open source, available to any and all to use and alter.

Observes Zuckerberg: “I don’t think that AI technology is a thing that should be kind of hoarded and — that one company gets to use it to build whatever central, single product that they’re building.”

*Grand Claims, Meh Results: Google’s AI Falls Short: Apparently, Google’s Gemini — the AI that powers its direct competition to ChatGPT — is not all it’s cracked up to be.

Observes writer Kyle Wiggers: “In press briefings and demos, Google has repeatedly claimed that the models can accomplish previously impossible tasks thanks to their ‘long context.’”

Those tasks include summarizing multiple hundred-page documents or searching across scenes in film footage.

“But new research suggests that the models aren’t, in fact, very good at those things,” Wiggers adds.

*Freelance Writing Dreams Disappearing in a Puff of Code: Add freelancers to the growing list of workers discovering that AI is less a ‘helpful buddy’ and more a ruthless job stealer.

Case in point: Since the advent of ChatGPT, job opportunities in freelance writing have declined 21%, according to a newly updated study.

Observes writer Laura Bratton: “Research shows that easily-automated writing and (computer) coding jobs are being replaced by AI.”

*Privacy Ninja: New AI Email Promises to Guard Your Secrets: Proton, an email provider long-prized for its heavy emphasis on privacy, has added AI to its mix.

Specifically, its newly released AI writing assistant ‘Proton Scribe’ is designed to help users auto-write and proofread their emails.

Observes writer Paul Sawers: “Proton Scribe can be deployed entirely at the local device level — meaning user data doesn’t leave the device.

“Moreover, Proton promises that its AI assistant won’t learn from user data — a particularly important feature for enterprise use cases, where privacy is paramount.”

*Forget Solitaire: Claude Turns AI Writing into a Collaborative Party Game: ChatGPT competitor Claude has a new feature that lets users publish and share the AI writing and other content they generate, and remix what others have shared.

Observes writer Eric Hal Schwartz: “Essentially, you can open published ‘Artifacts’ created by others and modify or build upon them through conversations with Claude.

“Anthropic is pitching it as a way to foster a collaborative environment.”

*Robo Lawyer: For Many Attorneys, AI Still a Boogeyman: Despite its considerable benefits to the legal community, AI is viewed warily by many lawyers and other legal professionals.

Specifically, 77% of professionals recently surveyed by Thomson Reuters saw AI as a threat to lawyers.

Observes Artificial Lawyer: “While some very innovative lawyers are comfortable with AI and have few worries about the legal world’s imminent demise, there are plenty of lawyers out there who still feel very uncertain about what this all means for them, the profession, and their firms.”

*ChatGPT Mind-Meld: New Hope For the Paralyzed: A man slowly succumbing to paralysis has been given new hope with ChatGPT, which is enabling him to text using his brain waves.

Using a brain implant, the man is able to translate his thoughts into text commands — generated by ChatGPT — which he uses to operate computerized communication devices.

Observes the patient: “You get choices of how you might respond in several different ways.

“So rather than me typing single words, I’m hitting one or two buttons or clicks, if you will, and I’ve got the majority of a sentence done.”

*AI Big Picture: AI Gold Rush Still Runs Hot: Nearly 20 months after ChatGPT introduced a stunned world to the potential of AI, businesses across the world are still clamoring to bring the newly commercialized tech onboard.

Observes writer Ben Dickson, citing a new survey of 200 IT leaders: “Most organizations are spending hefty amounts to either explore generative AI use cases or have already implemented them in production.”

“Nearly three-fourths (73%) of respondents plan to spend more than $500,000 on generative AI in the next 12 months, with almost half (46%) allocating more than $1 million,” Dickson adds.

Share a Link: Please consider sharing a link to https://RobotWritersAI.com from your blog, social media post, publication or emails. More links leading to RobotWritersAI.com help everyone interested in AI-generated writing.

–Joe Dysart is editor of RobotWritersAI.com and a tech journalist with 20+ years of experience. His work has appeared in 150+ publications, including The New York Times and the Financial Times of London.

The post Won’t Get Fooled Again appeared first on Robot Writers AI.

An overview of classifier-free guidance for diffusion models

#RoboCup2024 – daily digest: 21 July

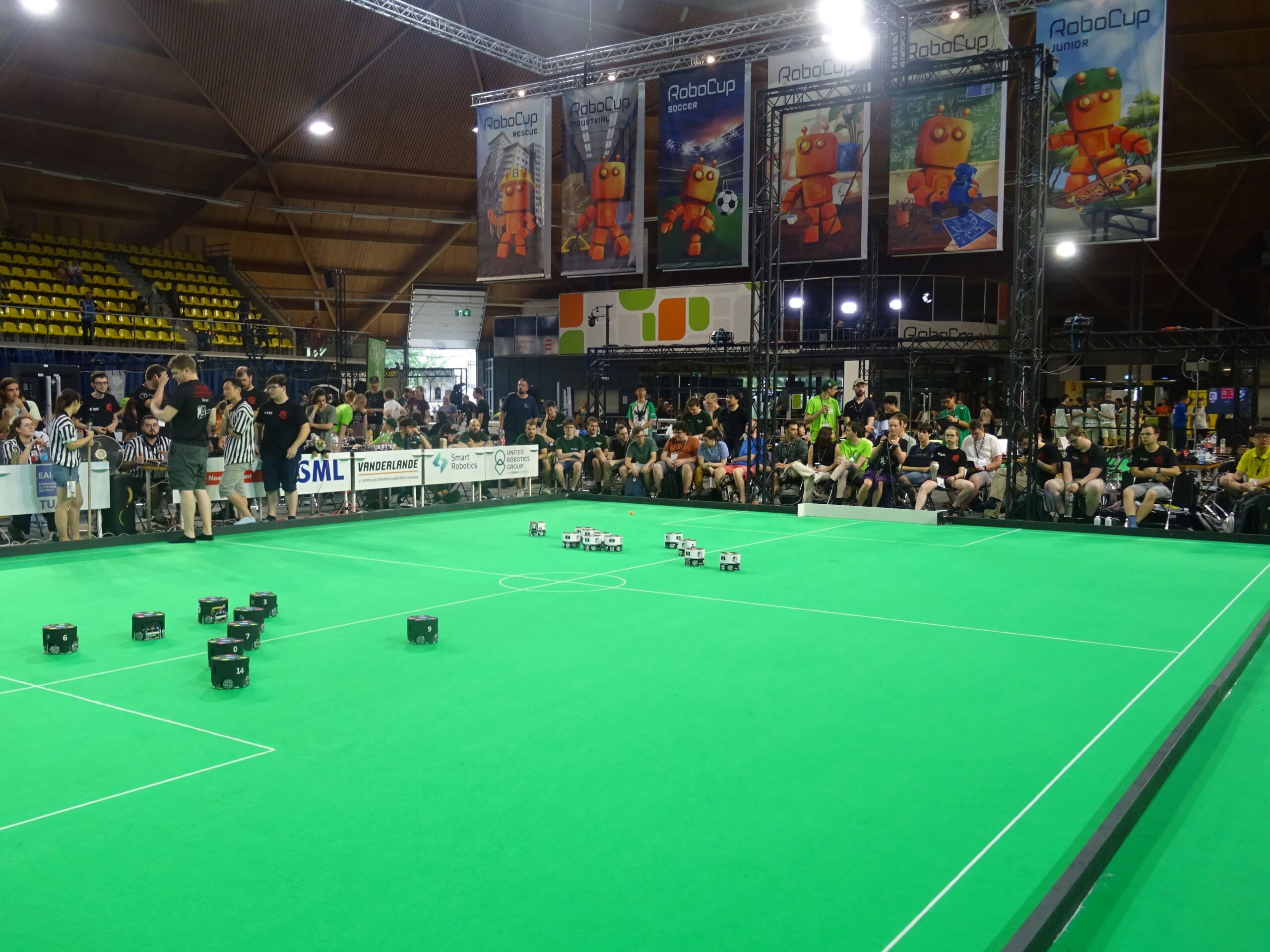

A break in play during a Small Size League match.


Today, 21 July, saw the competitions draw to a close in a thrilling finale. In the third and final of our round-up articles, we provide a flavour of the action from this last day. If you missed them, you can find our first two digests here: 19 July | 20 July.

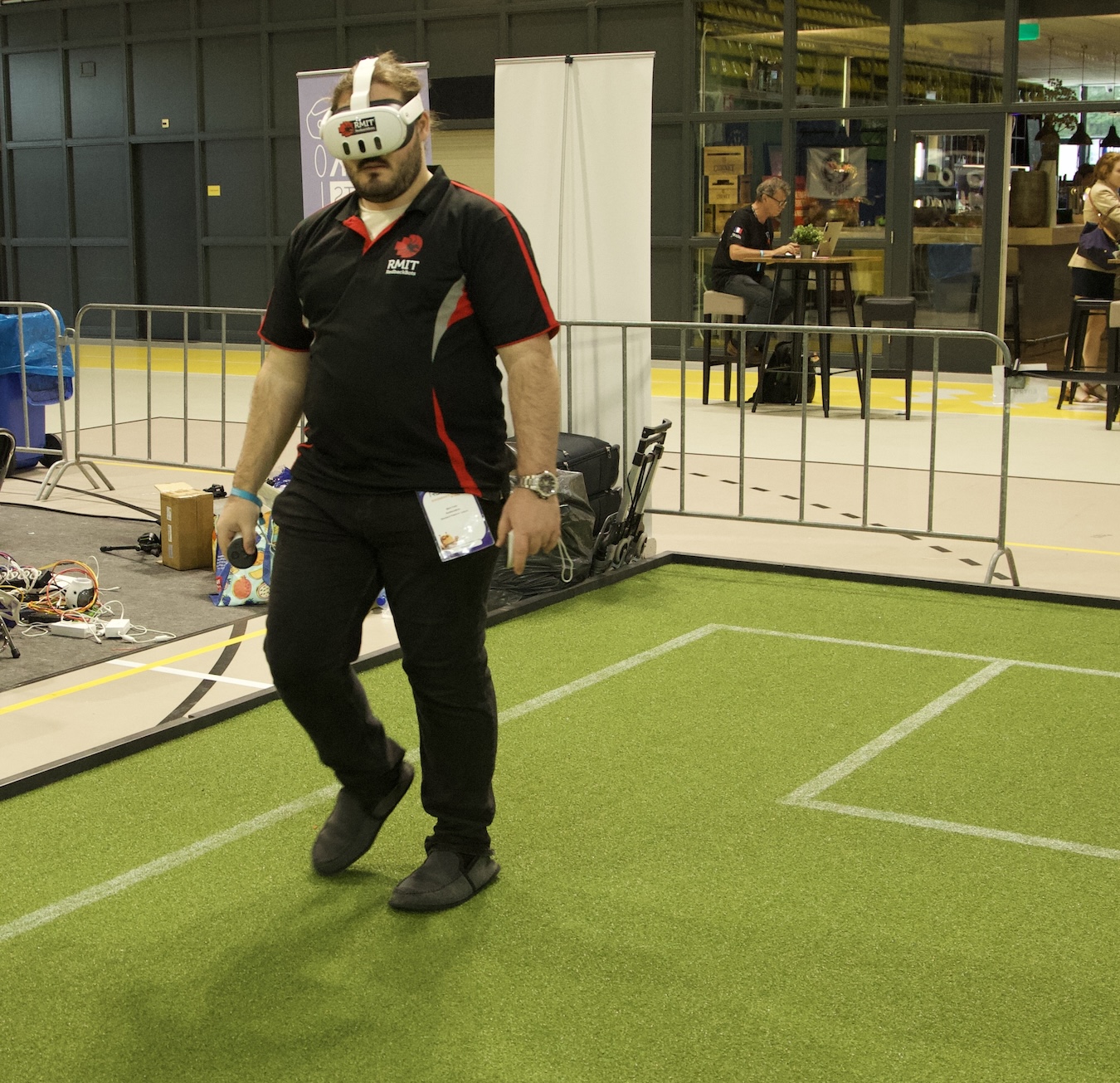

My first port of call this morning was the Standard Platform League, where Dr Timothy Wiley and Tom Ellis from Team RedbackBots, RMIT University, Melbourne, Australia, demonstrated an exciting advancement that is unique to their team. They have developed an augmented reality (AR) system with the aim of enhancing the understanding and explainability of the on-field action.

The RedbackBots travelling team for 2024 (L-to-R: Murray Owens, Sam Griffiths, Tom Ellis, Dr Timothy Wiley, Mark Field, Jasper Avice Demay). Photo credit: Dr Timothy Wiley.


Timothy, the academic leader of the team, explained: “What our students proposed at the end of last year’s competition, to make a contribution to the league, was to develop an augmented reality (AR) visualization of what the league calls the team communication monitor. This is a piece of software that gets displayed on the TV screens to the audience and the referee, and it shows you where the robots think they are, information about the game, and where the ball is. We set out to make an AR system of this because we think it’s so much better to view it overlaid on the field. What the AR lets us do is project all of this information live on the field as the robots are moving.”

The team has been demonstrating the system to the league at the event, with very positive feedback. In fact, one of the teams found an error in their software during a game whilst trying out the AR system. Tom said that they’ve received a lot of ideas and suggestions from the other teams for further developments. This is one of the first (if not the first) AR systems to be trialled across the competition, and the first time such a system has been used in the Standard Platform League. I was lucky enough to get a demo from Tom, and it definitely added a new level to the viewing experience. It will be very interesting to see how the system evolves.

Mark Field setting up the Meta Quest 3 to use the augmented reality system. Photo credit: Dr Timothy Wiley.


From the main soccer area I headed to the RoboCupJunior zone, where Rui Baptista, an Executive Committee member, gave me a tour of the arenas and introduced me to some of the teams that have been using machine learning models to assist their robots. RoboCupJunior is a competition for schoolchildren, and is split into three leagues: Soccer, Rescue and OnStage.

I first caught up with four teams from the Rescue league, in which robots identify “victims” within recreated disaster scenarios, ranging in complexity from line-following on a flat surface to negotiating paths through obstacles on uneven terrain. The league has three strands: 1) Rescue Line, where robots follow a black line that leads them to a victim; 2) Rescue Maze, where robots explore a maze and identify victims; and 3) Rescue Simulation, a simulated version of the maze competition.

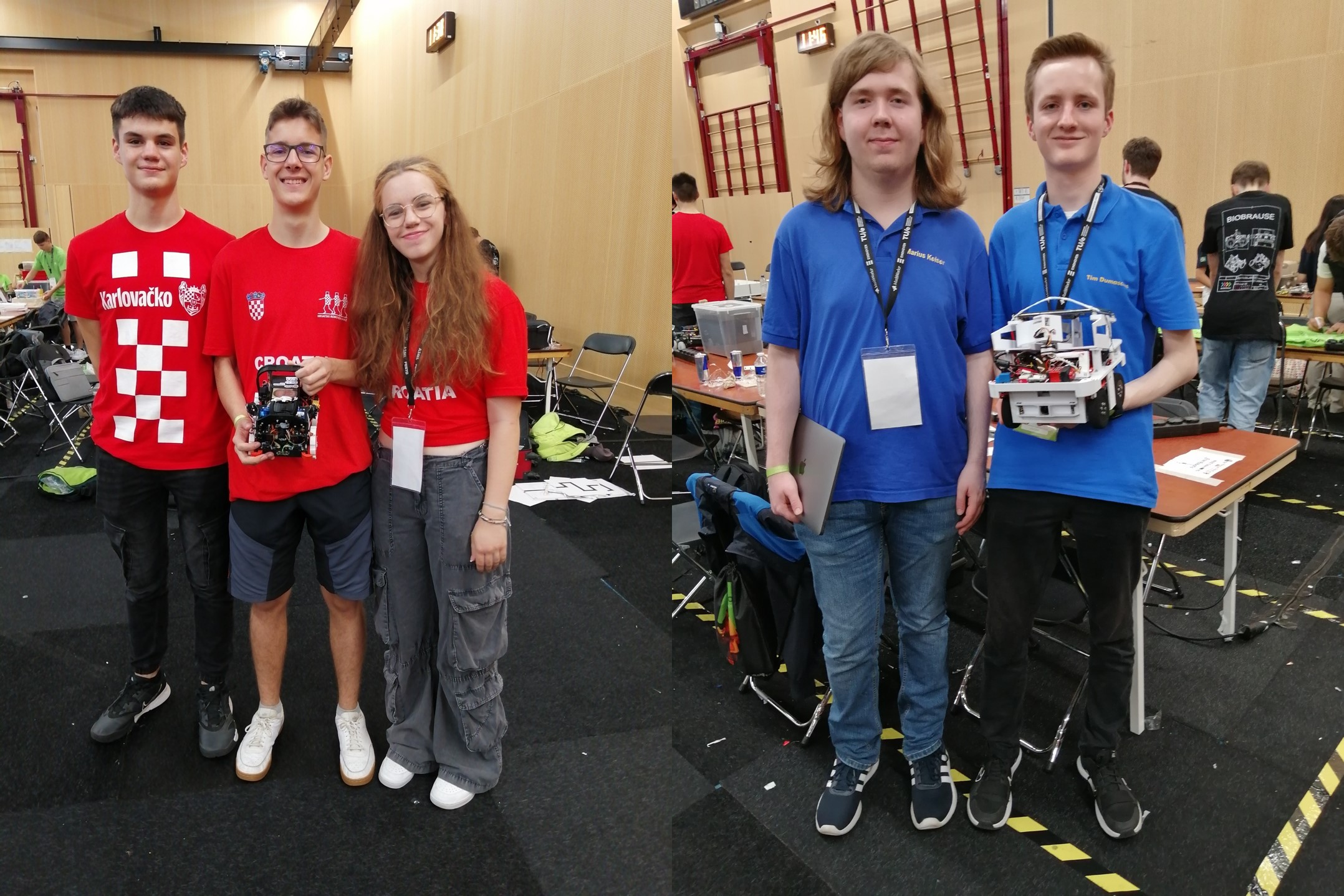

Team Skollska Knijgia, taking part in the Rescue Line, used a YOLO v8 neural network to detect victims in the evacuation zone. They trained the network themselves with about 5000 images. Also competing in the Rescue Line event were Team Overengeniering2. They also used YOLO v8 neural networks, in this case for two elements of their system. They used the first model to detect victims in the evacuation zone and to detect the walls. Their second model is utilized during line following, and allows the robot to detect when the black line (used for the majority of the task) changes to a silver line, which indicates the entrance of the evacuation zone.

Left: Team Skollska Knijgia. Right: Team Overengeniering2.


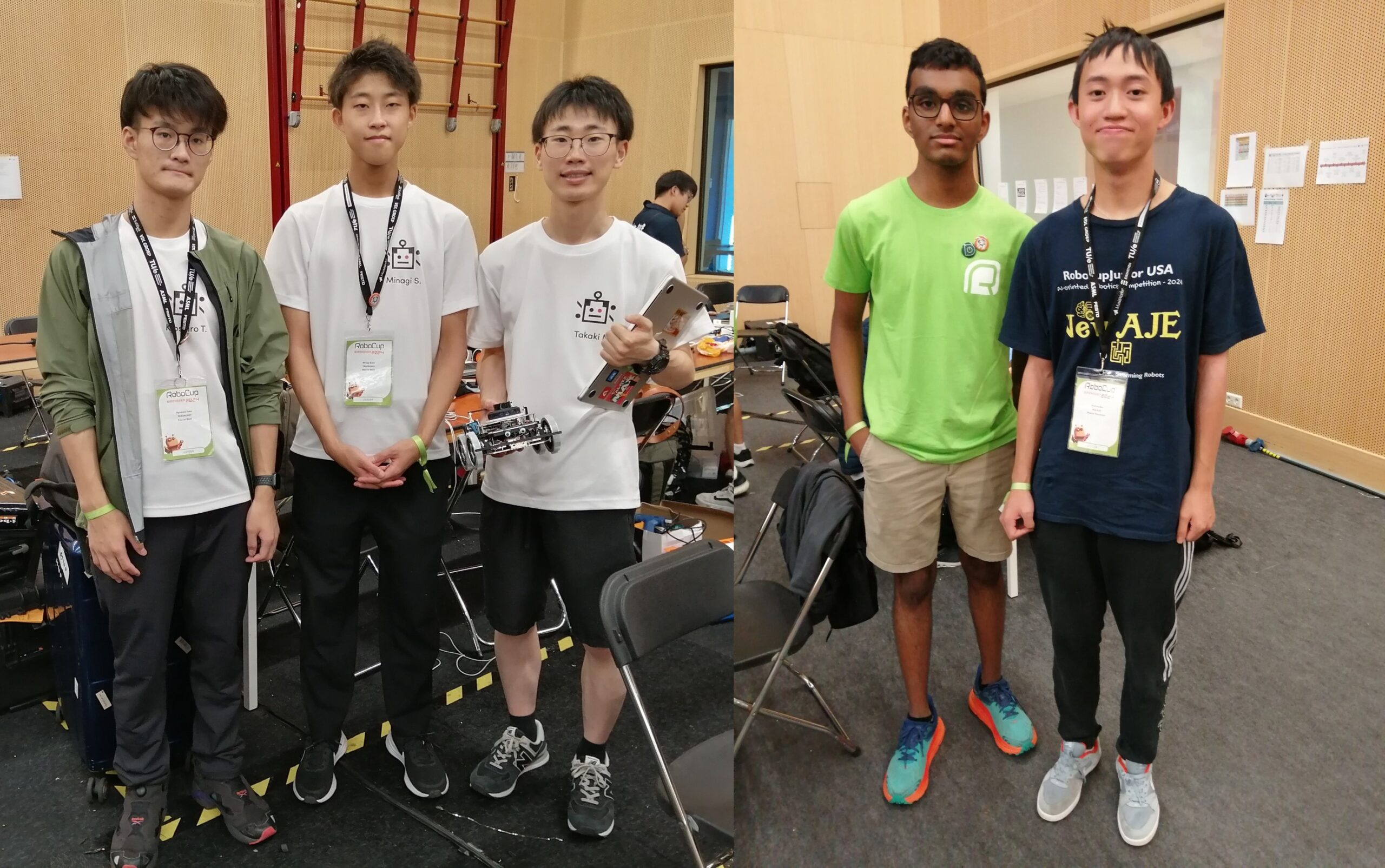

Team Tanorobo! were taking part in the maze competition. They also used a machine learning model for victim detection, training on 3000 photos for each type of victim (these are denoted by different letters in the maze). They also took photos of walls and obstacles to avoid misclassification. Team New Aje were taking part in the simulation contest. They used a graphical user interface to train their machine learning model and to debug their navigation algorithms. They have three navigation algorithms, each with a different computational cost, and switch between them depending on where they are in the maze and how complex that area is.

Left: Team Tanorobo! Right: Team New Aje.


I met two of the teams who had recently presented in the OnStage event. Team Medic’s performance was based on a medical scenario and included two machine learning elements: voice recognition, for communicating with the “patient” robots, and image recognition, to classify X-rays. Team Jam Session’s robot reads American Sign Language signs and uses them to play a piano. The team used the MediaPipe detection algorithm to find different points on the hand, and random forest classifiers to determine which sign was being displayed.

Left: Team Medic Bot. Right: Team Jam Session.

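To give a flavour of how a landmark-plus-random-forest pipeline like Team Jam Session’s can work, here is a minimal sketch of one common preprocessing step. It assumes the input is the 21 (x, y) hand landmarks that MediaPipe Hands reports, with index 0 being the wrist; the function name `landmarks_to_features` is hypothetical, and this is not the team’s code. Making the features independent of where the hand is in the frame and how large it appears lets a classifier focus on hand shape.

```python
import math

# MediaPipe Hands reports 21 landmarks per hand; index 0 is the wrist.
WRIST = 0
NUM_LANDMARKS = 21


def landmarks_to_features(landmarks):
    """Convert 21 (x, y) hand landmarks into a translation- and
    scale-invariant feature vector for a classifier.

    `landmarks` is assumed to be a list of 21 (x, y) tuples, e.g. the x and y
    fields of MediaPipe's normalized landmarks (hypothetical input format).
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError("expected 21 hand landmarks")
    wx, wy = landmarks[WRIST]
    # Translate so the wrist sits at the origin.
    shifted = [(x - wx, y - wy) for x, y in landmarks]
    # Divide by the largest wrist-to-landmark distance so that hand size and
    # distance from the camera matter less.
    scale = max(math.hypot(x, y) for x, y in shifted)
    if scale == 0:
        raise ValueError("degenerate hand: all landmarks coincide")
    # Flatten to [x0, y0, x1, y1, ...], giving 42 values.
    return [c / scale for point in shifted for c in point]
```

Vectors like these, each labelled with the sign it shows, could then be used to train something such as scikit-learn’s `RandomForestClassifier` (an assumption about tooling; the article only says random forests were used).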

Next stop was the Humanoid League, where the final match was in progress. The arena was packed to the rafters with crowds eager to see the action.

Standing room only to see the Adult Size Humanoids.


The finals continued with the Middle Size League, with the home team Tech United Eindhoven beating BigHeroX by a convincing 6-1 scoreline. You can watch the livestream of the final day’s action here.

The grand finale featured the winners of the Middle Size League (Tech United Eindhoven) against five RoboCup trustees. The humans ran out 5-2 winners, their superior passing and movement too much for Tech United.

Landmark Study Reveals Wearable Robotics Significantly Boost Safety and Efficiency in Industrial Environments

#RoboCup2024 – daily digest: 20 July

The Standard Platform Soccer League in action.


This is the second of our daily digests from RoboCup2024 in Eindhoven, The Netherlands. If you missed the first digest, which gives some background to RoboCup, you can find it here.

Competitions continued across all the leagues today, with participants vying for a place in Sunday’s finals.

The RoboCup@Work league focusses on robots in work-related scenarios, utilizing ideas and concepts from other RoboCup competitions to tackle open research challenges in industrial and service robotics.

I arrived at the arena in time to catch the advanced navigation test. Robots have to autonomously navigate, picking up and placing objects at different work stations. In this advanced test, caution tape is added to the arena floor, which the robots should avoid travelling over. There is also a complex placing element where teams have to put an object that they’ve collected into a slot: get the orientation or placement of the object slightly wrong and it won’t fall into the slot.

The RoboCup@Work arena just before competition start.


Eight teams are taking part in the league this year. Executive Committee member Asad Norouzi said that there are plans to introduce a sub-league which would provide an entry point for new teams or juniors to get into the league proper.

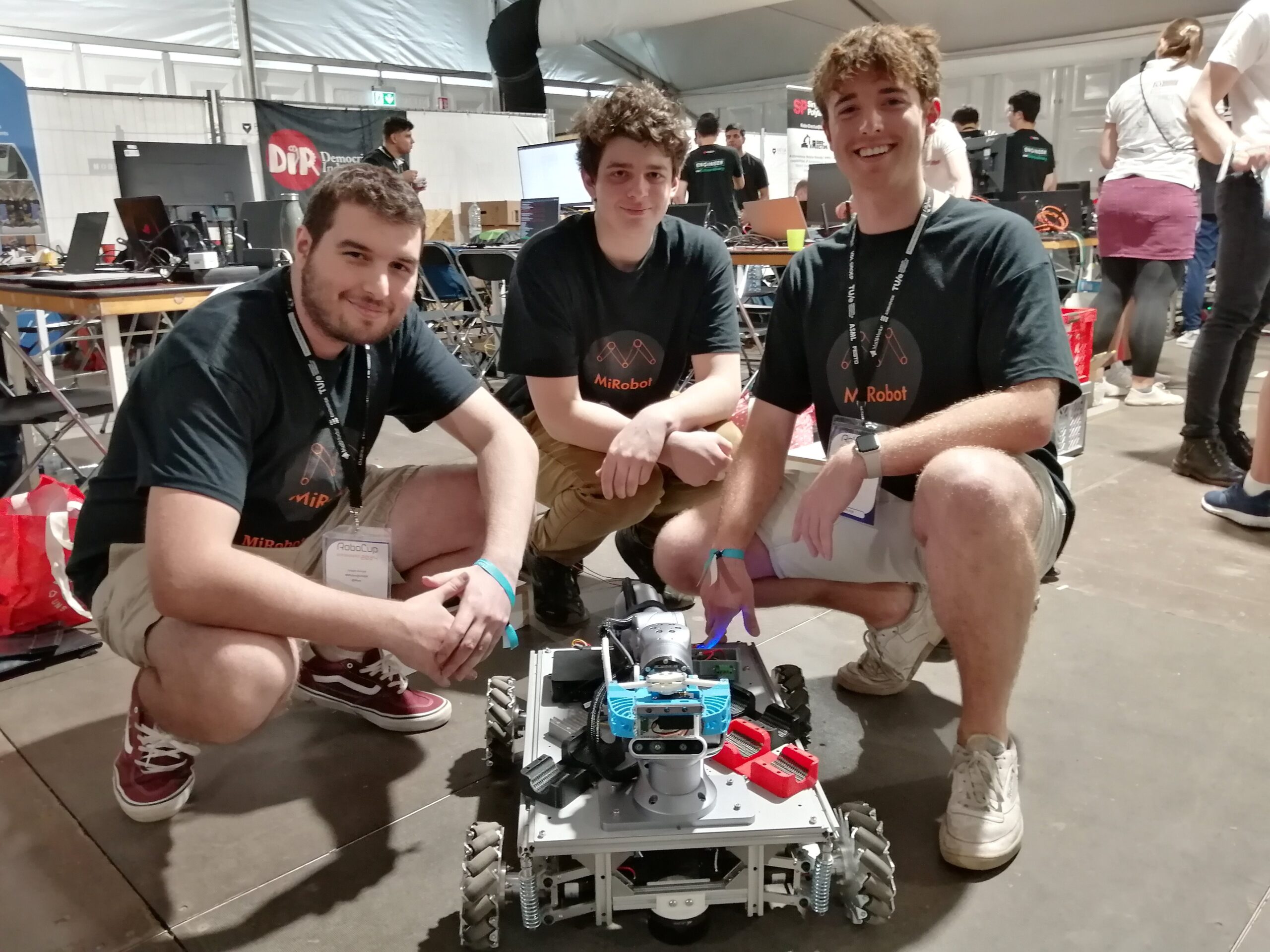

I caught up with Harrison Burns, Mitchell Torok and Jasper Arnold from Team MiRobot. They are based at the University of New South Wales and are attending RoboCup for the first time.

Team MiRobot from UNSW.


The team actually only started six months ago, so final preparations have been a bit stressful. However, the experience has been great fun, and the competition has gone well so far. Like most teams, they’ve had to make many refinements as the competition has progressed, leading to some late nights.

One notable feature of the team’s robot is the bespoke, in-house-designed grasping mechanism on the end of the arm. The team note that “it has good flexible jaws, so when it grabs round objects it actually pulls the object directly into it. Because it uses a linear motion, compared to a lot of other rotating jaws, it has a lot better reliability for picking up objects”.

Here is some footage from the task, featuring Team b-it-bots and Team Singapore.

Team b-it-bots take on the RoboCup@Work advanced navigation test, picking up a drill bit #RoboCup2024 pic.twitter.com/QfijZpxaOK

— AIhub (@aihuborg) July 20, 2024

Team Singapore placing an object in the RoboCup@Work advanced navigation test pic.twitter.com/SvgLBOaVo7

— AIhub (@aihuborg) July 20, 2024

In the Middle Size Soccer League (MSL), teams of five fully autonomous robots play with a standard-size FIFA ball. Teams are free to design their own hardware, but all sensors have to be on board, and the robots must stay within maximum size and weight limits (the weight limit is 40 kg). The research focus is on mechatronics design, control and multi-agent cooperation at plan and perception levels. Nine teams are competing this year.

Action from the Middle Size League at #RoboCup2024.

Falcons vs Robot Club Toulon pic.twitter.com/GHcNLOx2nV

— AIhub (@aihuborg) July 20, 2024

I spoke to António Ribeiro, who is a member of the technical committee and part of Team LAR@MSL from the University of Minho, Portugal. The team started in 1998, but António and most of his colleagues on the current team have only been involved in the MSL since September 2022. The robots have evolved as the competition has progressed, and further improvements are in progress. Refinements so far have included communication, the detection system, and the control system. They are pleased with the improvements from the previous RoboCup. “Last year we had a lot of hardware issues, but this year the hardware seems pretty stable. We also changed our coding architecture and it is now much easier and faster for us to develop code because we can all work on the code at the same time on different modules”.

António cited versatility and cost-effective solutions as strengths of the team. “Our robot is actually very cheap compared to other teams. We use a lot of old chassis, and our solutions always go to the lowest cost possible. Some teams have multiple thousand dollar robots, but, for example, our vision system is around $70-80. It works pretty well – we need to improve the way we handle it, but it seems stable”.

Team LAR@MSL


The RoboCup@Home league aims to develop service and assistive robot technology with high relevance for future personal domestic applications. A set of benchmark tests is used to evaluate the robots’ abilities and performance in a realistic non-standardized home environment setting. These tests include helping to prepare breakfast, clearing the table, and storing groceries.

I arrived in time to watch the “stickler for the rules” challenge, where robots have to navigate different rooms and make sure that the people inside (“guests” at a party) are sticking to four rules: 1) there is one forbidden room, and if a guest is in there the robot must alert them and ask them to follow it into another room; 2) everyone must have a drink in their hand, and if not, the robot directs them to a shelf with drinks; 3) no shoes are to be worn in the house; 4) there should be no rubbish left on the floor.
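The four rules of this challenge amount to a small checklist that the robot runs against what it has observed. The sketch below assumes perception has already been done and simply produces a to-do list of responses; the `Guest` fields, the `rubbish_rooms` input and the action wording for rules 3 and 4 are hypothetical, since the text only says what those rules forbid, not how the robot should respond.

```python
from dataclasses import dataclass


# Hypothetical world model: in the real challenge the robot must perceive
# all of this itself (finding people, spotting shoes, detecting rubbish).
@dataclass
class Guest:
    name: str
    room: str
    has_drink: bool
    wearing_shoes: bool


def check_rules(guests, forbidden_room, rubbish_rooms):
    """Return (target, action) pairs for every rule violation found.

    Rules: 1) nobody in the forbidden room, 2) everyone holds a drink,
    3) no shoes in the house, 4) no rubbish on the floor.
    `rubbish_rooms` lists rooms where rubbish was spotted (hypothetical input).
    """
    actions = []
    for g in guests:
        if g.room == forbidden_room:  # rule 1
            actions.append((g.name, "ask guest to follow robot out of the forbidden room"))
        if not g.has_drink:  # rule 2
            actions.append((g.name, "guide guest to the drinks shelf"))
        if g.wearing_shoes:  # rule 3 (response wording is hypothetical)
            actions.append((g.name, "ask guest to take off their shoes"))
    for room in rubbish_rooms:  # rule 4 (response wording is hypothetical)
        actions.append((room, "pick up rubbish from the floor"))
    return actions
```

For example, a shoe-wearing guest with no drink standing in the forbidden room would produce three actions, one for each rule broken.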

After watching an attempt from the LAR@Home robot, Tiago from the team told me a bit about the robot. “The goal is to develop a robot capable of multi general-purpose tasks in home and healthcare environments.” With the exception of the robotic arm, all of the hardware was built by the team. The robot has two RGBD cameras, two LIDARs, a tray (where the robot can store items that it needs to carry), and two emergency stop buttons that deactivate all moving parts. Four omnidirectional wheels allow the robot to move in any direction at any time. The wheels have independent suspension systems, which ensure that all four stay on the ground at all times, even if there are bumps and cables on the venue floor. A tablet acts as a visual interface, and a microphone and speakers enable communication between humans and the robot, which is done entirely by speaking and listening.
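Why four omnidirectional wheels let a robot move in any direction can be seen from the inverse kinematics of such a base. This is a minimal sketch assuming an “X” layout, with wheels at 45°, 135°, 225° and 315° around the centre, each rolling tangentially to the circle it sits on; the article does not describe LAR@Home’s actual geometry, and `omni_wheel_speeds` is a hypothetical name.

```python
import math


def omni_wheel_speeds(vx, vy, omega, radius, angles_deg=(45, 135, 225, 315)):
    """Inverse kinematics for a four-omni-wheel base (assumed X layout).

    vx, vy: desired body velocity in m/s, in the robot's own frame
    omega: desired turn rate in rad/s (counter-clockwise positive)
    radius: distance from the robot's centre to each wheel, in metres
    angles_deg: angular position of each wheel around the centre; each
        wheel is assumed to roll along the tangent to that circle.
    Returns the rim speed (m/s) that each wheel must produce.
    """
    speeds = []
    for a in angles_deg:
        t = math.radians(a)
        # Project the body velocity onto the wheel's rolling direction
        # (-sin t, cos t), then add the tangential speed due to rotation.
        speeds.append(-math.sin(t) * vx + math.cos(t) * vy + radius * omega)
    return speeds
```

Any combination of `vx`, `vy` and `omega` maps to a valid set of wheel speeds, which is what makes the base holonomic. Dividing each rim speed by the wheel’s own radius gives the required wheel angular velocity.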

Tiago told me that the team have talked to a lot of healthcare practitioners to find out the main problems faced by elderly people, and this inspired one of their robot features. “They said that the two main injury sources are from when people are trying to sit down or stand up, and when they are trying to pick something up from the floor. We developed a torso that can pick objects from the floor one metre away from the robot”.

The LAR@Home team.


You can keep up with the latest news direct from RoboCup here.

Click here to see all of our content pertaining to RoboCup.

Drones could revolutionize the construction industry, supporting a new UK housing boom

Are We Ready for Multi-Image Reasoning? Launching VHs: The Visual Haystacks Benchmark!

Humans excel at processing vast arrays of visual information, a skill that is crucial for achieving artificial general intelligence (AGI). Over the decades, AI researchers have developed Visual Question Answering (VQA) systems to interpret scenes within single images and answer related questions. While recent advances in foundation models have significantly narrowed the gap between human and machine visual processing, conventional VQA has been restricted to reasoning about a single image at a time rather than about whole collections of visual data.

This limitation poses challenges in more complex scenarios. Take, for example, discerning patterns in collections of medical images, monitoring deforestation through satellite imagery, mapping urban changes using autonomous navigation data, analyzing thematic elements across large art collections, or understanding consumer behavior from retail surveillance footage. Each of these scenarios requires not only visual processing across hundreds or thousands of images but also cross-image reasoning to combine the findings. To address this gap, this project focuses on the “Multi-Image Question Answering” (MIQA) task, which is beyond the reach of traditional VQA systems.

Visual Haystacks: the first "visual-centric" Needle-In-A-Haystack (NIAH) benchmark designed to rigorously evaluate Large Multimodal Models (LMMs) in processing long-context visual information.