ROS Awards 2022 results

The ROS Awards are the Oscars of the ROS world! Their purpose is to recognize contributions to the ROS community and to the development of the ROS-based robot industry, and to raise awareness of those contributions.

Conditions

- Selection of the winners is made by anonymous online voting over a period of 2 weeks

- Anybody in the ROS community can vote through the voting website

- Organizers of the awards provide an initial list of 10 possible projects for each category, but anybody can add to the list at any time during the voting period

- Since the Awards are organized by The Construct, none of its products or developers can be voted for

- Winners are announced at the annual ROS Developers Day conference

- New in the 2022 edition: winners of previous editions cannot win again, so that the focus does not always stay on the same projects. Remember, with these awards we want to help spread all ROS projects!

Voting

- Every person can only vote once in each category

- You cannot change your answers once you have submitted your vote

- Voting closes 3 days before the conference, and the finalists for each category are announced the same week

- Voters must not exploit flaws in the system to influence the vote. Any detected attempt to trick the system will disqualify the votes involved. You can, though, promote your favorite among your networks so others vote for it.

Measures have been taken to prevent batch voting by a single person as far as possible.

Categories

Best ROS Software

The Best ROS Software category comprises any software that runs with ROS. It can be a package published in the ROS.org repository or simply a piece of software that uses ROS libraries. Both open-source and closed-source software are eligible.

Finalists

- Ignition Gazebo, by Open Robotics

- Groot Behavior Tree, by Davide Faconti

- Webots, by Cyberbotics

- SMACC2, by Brett Aldrich

- ros2_control, by several ROS developers

- PlotJuggler, by Davide Faconti

Winner: Webots, by Cyberbotics

Learn more about the winner in this video:

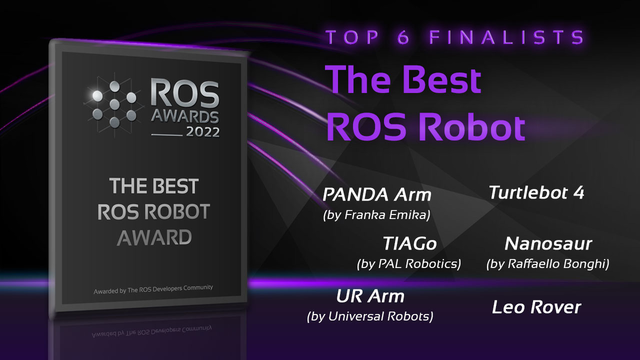

Best ROS-Based Robot

The Best ROS-Based Robot category includes any robot that runs ROS inside it: robotics products, robots for research, or robots for education. In all cases, ROS must be running on the robot itself.

Finalists

- Panda robot arm, by Franka Emika

- TIAGo, by PAL Robotics

- UR robot arm, by Universal Robots

- TurtleBot 4, by Clearpath

- Nanosaur, by Raffaello Bonghi

- Leo Rover, by Leo Rover

Winner: Nanosaur, by Raffaello Bonghi

Best ROS Developer

Developers are the ones who create all the ROS software we love. The Best ROS Developer category allows you to vote for any developer who has contributed to ROS development in one way or another.

Finalists

- Francisco Martín

- Davide Faconti

- Raffaello Bonghi

- Brett Aldrich

- Victor Mayoral Vilches

- Pradheep Krishna

Winner: Francisco Martín

Insights from the 2022 Edition

- This year, in the third edition of the awards, the total number of votes increased by 500%, so we can say that the winners are a good representation of the community's sentiment.

- Again this year, winners of previous editions received many votes. Fortunately, the new rule barring previous winners from winning again gave other ROS projects room to gain the community's attention, helping to create a rich ROS ecosystem.

Conclusions

The ROS Awards started in 2020 with a first edition whose winners were some of the best and best-known projects in the ROS world. In this third edition, the number of votes increased massively compared with the previous edition. We expect the awards to keep helping good ROS projects spread.

See you again at ROS Awards 2023!

ep.357: Origin Story of the OAK-D, with Brandon Gilles

Brandon Gilles, Founder and CEO of Luxonis, tells us his story about how Luxonis designed one of the most versatile perception platforms on the market.

Brandon took the lessons he learned at Ubiquiti, which transformed networking with network-on-a-chip architectures, and applied his mastery of embedded hardware and software to the OAK-D camera and the broader OAK line of products.

Calling the OAK-D a stereo vision camera tells only part of the story. Aside from depth sensing, the OAK-D leverages the Intel Myriad X to perform perception computations directly on the camera in a highly power-efficient architecture.

Customers can also instantly leverage a wide array of open-source computer vision and AI packages that are pre-calibrated to the optics system.

Additionally, by leveraging a system-on-a-module design, the Luxonis team easily churns out a multitude of variations of the hardware platform to fit the wide variety of customer use cases. Tune in for more.

Brandon Gilles

Brandon Gilles is the Founder and CEO of Luxonis, maker of the OAK-D line of cameras. Brandon comes from a background in electrical and RF engineering. He spent his early career as UniFi Lead at Ubiquiti, where his team helped bring Ubiquiti’s highly performant and power-efficient UniFi products to market.

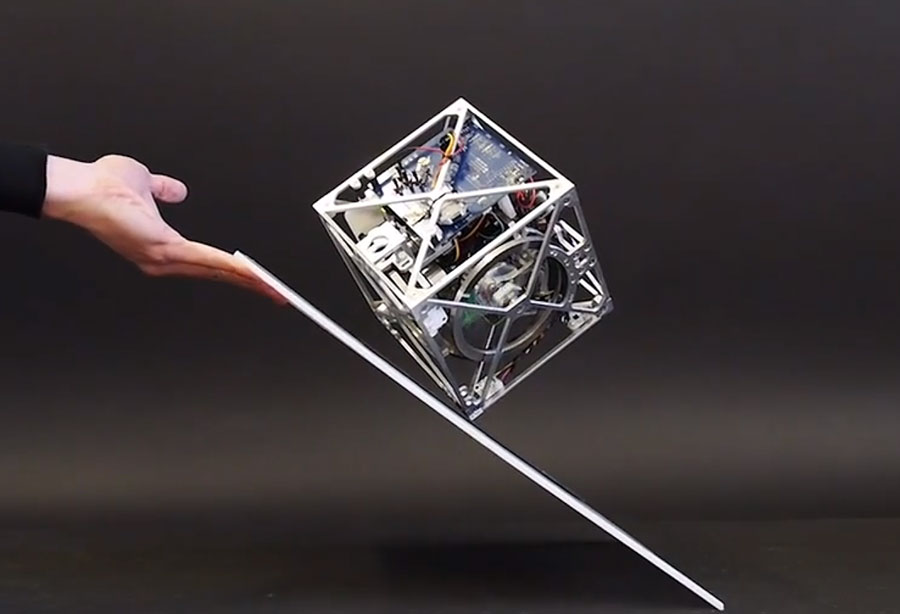

The one-wheel Cubli

Researchers Matthias Hofer, Michael Muehlebach and Raffaello D’Andrea have developed the one-wheel Cubli, a three-dimensional pendulum system that can balance on its pivot using a single reaction wheel. How is it possible to stabilize the two tilt angles of the system with only a single reaction wheel?

The key is to design the system so that its inertia about one tilt axis is higher than about the other, which is achieved by attaching two masses far from the center. As a consequence, the system moves faster in the direction with the lower inertia and slower in the direction with the higher inertia. The controller can leverage this property to stabilize both directions simultaneously.
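To illustrate why unequal inertias matter, here is a minimal sketch. It is not the researchers' model but a linearized toy version built on stated assumptions. Each tilt axis is treated as an independent inverted pendulum, and the single wheel torque `u` is assumed to act on both axes through hypothetical projection factors `cos(alpha)` and `sin(alpha)`. The function `controllability_rank` and all parameter values are illustrative only. When both axes have the same inertia, their dynamics are identical and the controllability matrix loses rank, so one combination of the two tilt angles cannot be influenced by the wheel. With unequal inertias, the rank is full.

```python
import numpy as np


def controllability_rank(I1, I2, m=1.0, g=9.81, l=0.1, alpha=np.pi / 4):
    """Rank of the controllability matrix of a hypothetical, linearized
    one-wheel balancing model (a toy model, not the ETH Zurich one).

    Assumed dynamics per tilt axis i (small angles, axes decoupled):
        I_i * theta_i'' = m * g * l * theta_i - c_i * u
    where u is the single reaction-wheel torque and c_i is its assumed
    projection onto axis i (c_1 = cos(alpha), c_2 = sin(alpha)).
    """
    a1, a2 = m * g * l / I1, m * g * l / I2          # gravity term per axis
    b1, b2 = np.cos(alpha) / I1, np.sin(alpha) / I2  # wheel-torque effect per axis

    # State x = [theta1, theta1_dot, theta2, theta2_dot]
    A = np.array([[0.0, 1.0, 0.0, 0.0],
                  [a1, 0.0, 0.0, 0.0],
                  [0.0, 0.0, 0.0, 1.0],
                  [0.0, 0.0, a2, 0.0]])
    B = np.array([[0.0], [-b1], [0.0], [-b2]])

    # Controllability matrix [B, AB, A^2 B, A^3 B]
    blocks = [B]
    for _ in range(3):
        blocks.append(A @ blocks[-1])
    C = np.hstack(blocks)

    # Relative tolerance so that rounding noise is not counted as rank
    return np.linalg.matrix_rank(C, tol=1e-9 * np.abs(C).max())


# Unequal inertias: one axis is "heavier", as with the two added masses
print(controllability_rank(0.01, 0.03))  # 4 -> both tilt angles controllable
# Equal inertias: identical dynamics on both axes
print(controllability_rank(0.02, 0.02))  # 2 -> one tilt mode is uncontrollable
```

In this simplified model, the rank drops exactly when `m*g*l/I1 == m*g*l/I2`. That matches the intuition above: the single wheel can only tell the two directions apart if they respond at different speeds.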

This work was carried out at the Institute for Dynamic Systems and Control, ETH Zurich, Switzerland.

Almost a decade has passed since the first Cubli

The Cubli robot started with a simple idea: can we build a cube with 15 cm sides that can jump up, balance on its corner, and walk across our desk using off-the-shelf motors, batteries, and electronic components? The educational article "Cubli – A cube that can jump up, balance, and walk across your desk" shows all the design principles and prototypes that led to the development of the robot.

Cubli, from ETH Zurich.