AI for the board game Diplomacy

Robot helps researchers achieve a new record at the world’s deepest cave pit

Robotic pipe crawlers using maxon DC motors

Countering Luddite politicians with life (and cost) saving machines

Earlier this month, Candy Crush celebrated its tenth birthday by hosting a free party in lower Manhattan. The evening culminated in a drone light display of 500 Unmanned Aerial Vehicles (UAVs) illustrating the whimsical characters of the popular mobile game over the Hudson. Rather than applauding the decision, New York lawmakers banished the aerial wonders to New Jersey. In the words of Democratic State Senator Brad Hoylman, “Nobody owns New York City’s skyline – it is a public good and to allow a private company to reap profits off it is in itself offensive.” The complimentary event followed the model of Macy’s New York fireworks, which have illuminated the Hudson skies since 1958. Unlike the department store’s pyrotechnics, which release dangerous greenhouse gases into the atmosphere, drones are a quiet, climate-friendly choice. Still, Luddite politicians plan to introduce legislation to ban the technology as a public nuisance, citing its impact on migratory birds, which are often more spooked by the skyscrapers in Hoylman’s district.

Beyond aerial tricks, drones are now being deployed in novel ways to fill the labor gap in menial jobs whose workers have not returned since the pandemic. Andrew Ashur’s Lucid Drones, founded in 2018, has been power-washing buildings throughout the United States for close to five years. As the founder told me: “I saw window washers hanging off the side of the building on a swing stage and it was a mildly windy day. You saw this platform get caught in the wind and all of a sudden the platform starts slamming against the side of the building. The workers were up there, hanging on for dear life, and I remember having two profound thoughts in this moment. The first one, thank goodness that’s not me up there. And then the second one was how can we leverage technology to make this a safer, more efficient job?” At the time, Ashur was a junior at Davidson College, where he played baseball. The self-starter knew he was onto a big market opportunity.

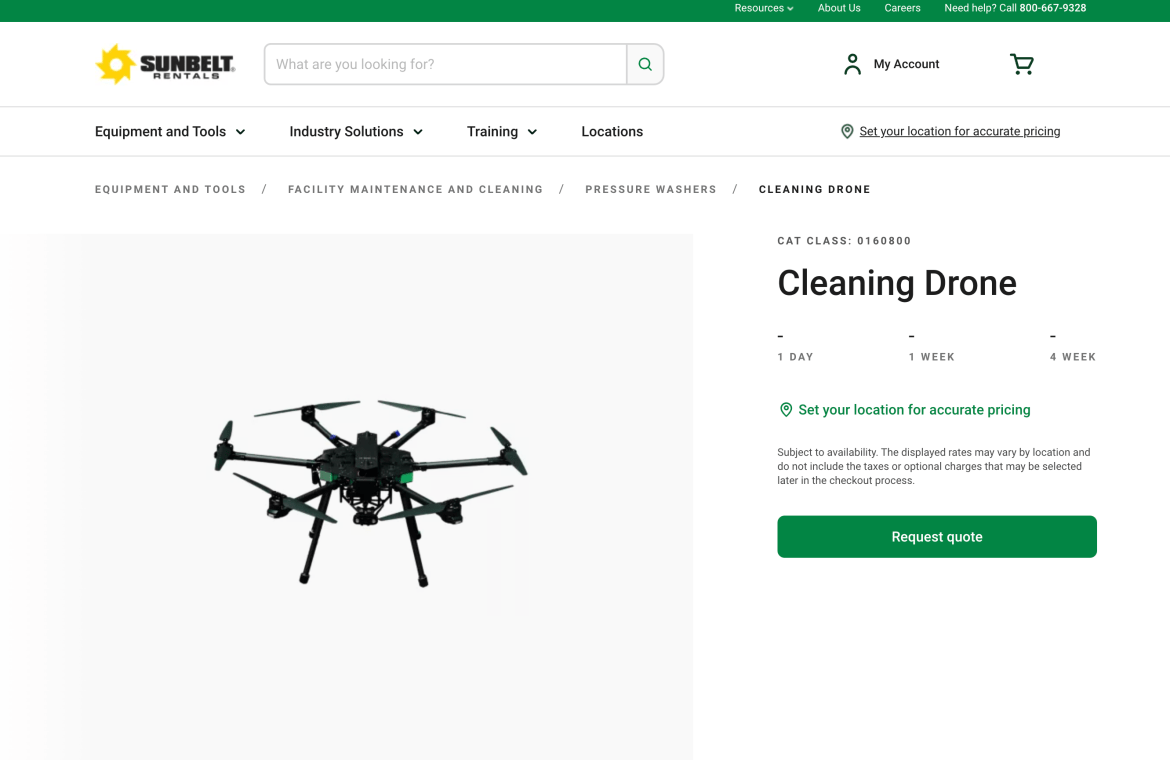

Each year, falls from ladders cause more than 160,000 emergency room injuries and 300 deaths in the United States. Entrepreneurs like Ashur understood that drones were uniquely qualified to free humans from such dangerous work. This first required building a sturdy tethered quadcopter, capable of spraying at 300 psi and connected to a tank for power and cleaning fluid, for less than the annual salary of one window cleaner. After overcoming the technical hurdle, the even harder task was gaining sales traction. Unlike many hardware companies that set out to disrupt the market and sell directly to end customers, Lucid partnered with existing building maintenance operators. “Our primary focus is on existing cleaning companies. And the way to think about it is we’re now the shiniest tool in their toolkit that helps them do more jobs with less time and less liability to make more revenue,” explains Ashur. This relationship was further enhanced this past month with the announcement of a partnership with Sunbelt Rentals, servicing its 1,000 locations throughout California, Florida, and Texas. Lucid’s drones are now within driving distance of the majority of the 86,000 facade cleaning companies in America.

According to the Commercial Buildings Energy Consumption Survey, there are 5.9 million commercial office buildings in the United States, with an average height of 16 floors. This means there is room for many robot cleaning providers. Competing directly with Lucid are several other drone operators, including Apellix, Aquiline Drones, Alpha Drones, and a handful of local upstarts. In addition, there are several winch-powered companies, such as Skyline Robotics, HyCleaner, Serbot, Erlyon, Kite Robotics, and SkyPro. Facade cleaning is ripe for automation as it is a dangerous, costly, repetitive task that can be safely accomplished by an uncrewed system. As Ashur boasts, “You improve that overall profitability because it’s fewer labor hours. You’ve got lower insurance on ground cleaner versus an above ground cleaner as well as the other equipment.” His system, which is tethered, operated from the ground, and free of ladders, is the safest way to power wash a multistory office building. He elaborated further on the cost savings: “It lowers insurance cost, especially when you look at how workers comp is calculated… we had a customer, one of their workers missed the bottom rung of the ladder, the bottom rung, he shattered his ankle. OSHA classifies it as a hazardous workplace injury. Their workers comp rates are projected to increase by an annual $25,000 over the next five years. So it’s a six-figure expense for just that one business from missing one single bottom rung of the ladder and unfortunately, you hear stories of people falling off a roof or other terrible accidents that are life changing or in some cases life lost. So that’s the number one thing you get to eliminate with the drone by having people on the ground.”

Five ways drones will change the way buildings are designed

Six-dimensional force and torque sensor helps robots feel and touch

Wearable sensor can help unlock the potential of exosuits in real-world environments

DENSO Increases Efficiencies, Improves Morale with Fleet of MiR250 Autonomous Mobile Robots

Call for robot holiday videos 2022

That’s right! You better not run, you better not hide, you better watch out for brand new robot holiday videos on Robohub!

Drop your submissions down our chimney at daniel.carrillozapata@robohub.org and share the spirit of the season.

For inspiration, here are our lists from previous years.