Patrick Bennett, CC BY-ND

By Eran Klein, University of Washington and Katherine Pratt, University of Washington

In the 1995 film “Batman Forever,” the Riddler used 3-D television to secretly access viewers’ most personal thoughts in his hunt for Batman’s true identity. By 2011, the metrics company Nielsen had acquired NeuroFocus and had created a “consumer neuroscience” division that uses integrated conscious and unconscious data to track customer decision-making habits. What was once a nefarious scheme in a Hollywood blockbuster seems poised to become a reality.

Recent announcements by Elon Musk and Facebook about brain-computer interface (BCI) technology are just the latest headlines in an ongoing science-fiction-becomes-reality story.

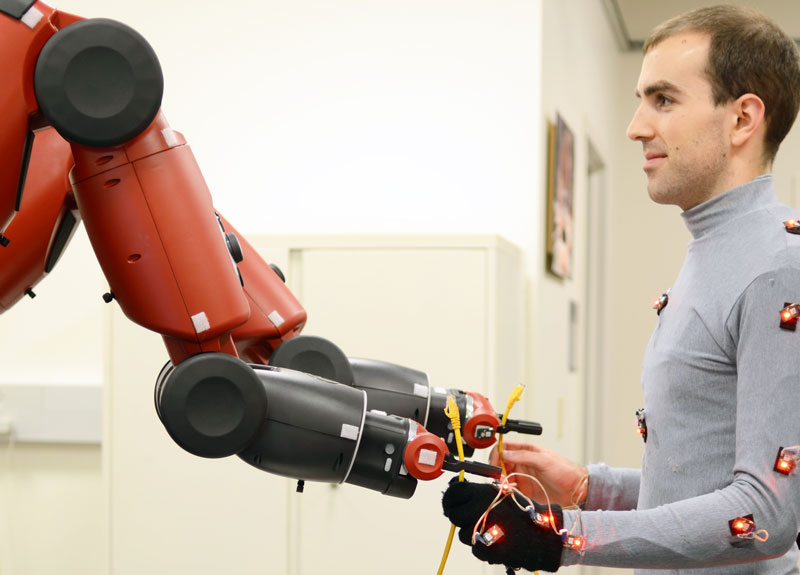

BCIs use brain signals to control objects in the outside world. They’re a potentially world-changing innovation – imagine being paralyzed but able to “reach” for something with a prosthetic arm just by thinking about it. But the revolutionary technology also raises concerns. Here at the University of Washington’s Center for Sensorimotor Neural Engineering (CSNE) we and our colleagues are researching BCI technology – and a crucial part of that includes working on issues such as neuroethics and neural security. Ethicists and engineers are working together to understand and quantify risks and develop ways to protect the public now.

Picking up on P300 signals

All BCI technology relies on being able to collect information from a brain that a device can then use or act on in some way. There are numerous places from which signals can be recorded, as well as countless ways the data can be analyzed, so there are many possibilities for how a BCI can be used.

Some BCI researchers zero in on one particular kind of regularly occurring brain signal that alerts us to important changes in our environment. Neuroscientists call these signals “event-related potentials.” In the lab, they help us identify a reaction to a stimulus.

In particular, we capitalize on one of these specific signals, called the P300. It’s a positive voltage peak, typically strongest toward the back of the head, that occurs about 300 milliseconds after the stimulus is shown. The P300 alerts the rest of your brain to an “oddball” that stands out from the rest of what’s around you.
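Because a single P300 is small compared with ongoing brain activity, it is usually revealed by averaging many stimulus-locked recordings ("epochs"). The sketch below illustrates that idea on simulated data; the sampling rate, epoch length, bump shape, amplitude, and noise level are all hypothetical values chosen for illustration, not parameters from the authors' lab.

```python
import math
import random

FS_HZ = 250      # hypothetical sampling rate
EPOCH_MS = 800   # hypothetical epoch length after stimulus onset

def simulated_epoch(is_oddball, rng):
    """One simulated single-channel epoch in microvolts: background noise plus,
    for oddball stimuli, a positive bump centred near 300 ms (hypothetical shape)."""
    n_samples = FS_HZ * EPOCH_MS // 1000
    epoch = []
    for i in range(n_samples):
        t_ms = 1000 * i / FS_HZ
        bump = 5.0 * math.exp(-(((t_ms - 300) / 50) ** 2)) if is_oddball else 0.0
        epoch.append(bump + rng.gauss(0.0, 10.0))
    return epoch

def average_epochs(epochs):
    """Sample-by-sample average across trials: noise that is not time-locked to the
    stimulus tends to cancel, while the time-locked P300 remains."""
    return [sum(values) / len(values) for values in zip(*epochs)]

def peak_latency_ms(waveform):
    """Latency of the largest positive value in a waveform."""
    i_peak = max(range(len(waveform)), key=lambda i: waveform[i])
    return 1000 * i_peak / FS_HZ

def window_mean(waveform, start_ms, end_ms):
    """Mean amplitude within a latency window, a simple single-number P300 measure."""
    lo = start_ms * FS_HZ // 1000
    hi = end_ms * FS_HZ // 1000
    return sum(waveform[lo:hi]) / (hi - lo)

rng = random.Random(0)
oddball_avg = average_epochs([simulated_epoch(True, rng) for _ in range(200)])
standard_avg = average_epochs([simulated_epoch(False, rng) for _ in range(200)])

latency = peak_latency_ms(oddball_avg)
print(f"oddball peak latency: {latency:.0f} ms")
print(f"250-350 ms mean, oddball:  {window_mean(oddball_avg, 250, 350):.2f} uV")
print(f"250-350 ms mean, standard: {window_mean(standard_avg, 250, 350):.2f} uV")
```

In the averaged oddball waveform the peak should land near 300 ms, while the average of non-oddball epochs stays close to zero in the same window.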

For example, you don’t stop and stare at each person’s face when you’re searching for your friend at the park. But if we were recording your brain signals as you scanned the crowd, there would be a detectable P300 response when you saw someone who could be your friend. The P300 carries an unconscious message alerting you to something important that deserves attention. These signals are part of a brain pathway, still not fully understood, that aids in detecting things and focusing attention.

Reading your mind using P300s

P300s reliably occur any time you notice something rare or out of place, like when you find the shirt you were looking for in your closet or your car in a parking lot. Researchers can use the P300 in an experimental setting to determine what is important or relevant to you. That’s led to the creation of devices like spellers that allow paralyzed individuals to type using their thoughts, one character at a time.
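One widely used speller design flashes the rows and columns of a character grid; the user attends to the desired letter, so only the row and the column containing it evoke a P300. A minimal sketch of the final decoding step follows, assuming per-row and per-column P300 scores (for example, mean amplitude around 300 ms averaged over repeated flashes) have already been computed. The 6×6 layout and the scores are hypothetical.

```python
# Hypothetical 6x6 speller grid; each string is one row.
MATRIX = [
    "ABCDEF",
    "GHIJKL",
    "MNOPQR",
    "STUVWX",
    "YZ1234",
    "56789_",
]

def decode_character(row_scores, col_scores):
    """Return the character at the intersection of the row and the column whose
    flashes produced the strongest P300-like response."""
    best_row = max(range(len(row_scores)), key=lambda r: row_scores[r])
    best_col = max(range(len(col_scores)), key=lambda c: col_scores[c])
    return MATRIX[best_row][best_col]

# Hypothetical scores averaged over repeated flashes of each row and column.
row_scores = [0.4, 0.2, 3.1, 0.5, 0.1, 0.3]  # row 2 ("MNOPQR") stands out
col_scores = [0.3, 2.8, 0.6, 0.2, 0.4, 0.1]  # column 1 stands out
print(decode_character(row_scores, col_scores))  # prints "N"
```

Spelling one character at a time in this way is slow precisely because each row and column usually has to be flashed several times before the scores separate reliably.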

It also can be used to determine what you know, in what’s called a “guilty knowledge test.” In the lab, subjects are asked to choose an item to “steal” or hide, and are then repeatedly shown images of both related and unrelated items. For instance, subjects choose between a watch and a necklace, and are then shown typical items from a jewelry box; a P300 appears when the subject is presented with the image of the item they took.
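A guilty knowledge test ultimately asks whether responses to the “stolen” item are reliably larger than responses to the irrelevant items. One way to frame that comparison is a resampling test; the sketch below uses a one-sided permutation test as an illustrative stand-in, not as the analysis used by any particular lab. The per-trial amplitudes are simulated, hypothetical numbers.

```python
import random

def mean(xs):
    return sum(xs) / len(xs)

def permutation_p_value(probe, irrelevant, n_perm=2000, seed=0):
    """One-sided permutation test: the fraction of random relabellings of the pooled
    trials whose probe-minus-irrelevant mean difference is at least the observed one."""
    rng = random.Random(seed)
    observed = mean(probe) - mean(irrelevant)
    pooled = list(probe) + list(irrelevant)
    k = len(probe)
    at_least_as_large = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if mean(pooled[:k]) - mean(pooled[k:]) >= observed:
            at_least_as_large += 1
    # The +1 terms count the observed labelling itself as one of the permutations.
    return (at_least_as_large + 1) / (n_perm + 1)

# Hypothetical single-trial P300 amplitudes (microvolts): the item the subject
# "stole" (probe) versus the other jewelry-box items (irrelevant).
data_rng = random.Random(42)
probe_trials = [data_rng.gauss(8.0, 3.0) for _ in range(30)]
irrelevant_trials = [data_rng.gauss(3.0, 3.0) for _ in range(120)]

p = permutation_p_value(probe_trials, irrelevant_trials)
print(f"p = {p:.4f}")
```

A small p-value says the probe item’s responses are unlikely to be larger by chance alone; it does not by itself establish what the subject knows, which is part of why such evidence remains contested.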

Everyone’s P300 is unique. In order to know what they’re looking for, researchers need “training” data. These are previously obtained brain signal recordings that researchers are confident contain P300s; they’re then used to calibrate the system. Since the test measures an unconscious neural signal that you don’t even know you have, can you fool it? Maybe, if you know that you’re being probed and what the stimuli are.
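The role of subject-specific training data can be illustrated with a deliberately simple classifier: average the labelled calibration epochs into a “P300 present” and a “P300 absent” template, then label each new epoch by whichever template it is closer to. Practical systems often use linear classifiers such as linear discriminant analysis instead; template matching here is a simpler stand-in, and all signal parameters are hypothetical.

```python
import math
import random

N_SAMPLES = 200   # 800 ms at a hypothetical 250 Hz
SAMPLE_MS = 4

def simulated_epoch(has_p300, rng, amplitude=5.0, noise_sd=10.0):
    """Hypothetical single-channel epoch: Gaussian noise plus, if has_p300, a bump near 300 ms."""
    return [
        (amplitude * math.exp(-(((i * SAMPLE_MS - 300) / 50) ** 2)) if has_p300 else 0.0)
        + rng.gauss(0.0, noise_sd)
        for i in range(N_SAMPLES)
    ]

def train_templates(epochs, labels):
    """Calibration step: average this user's labelled training epochs into one
    'P300 present' template and one 'P300 absent' template."""
    def average(group):
        return [sum(v) / len(group) for v in zip(*group)]
    target = average([e for e, y in zip(epochs, labels) if y])
    nontarget = average([e for e, y in zip(epochs, labels) if not y])
    return target, nontarget

def classify(epoch, target_tpl, nontarget_tpl):
    """Return True if the epoch is closer (squared Euclidean distance) to the P300 template."""
    def dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return dist(epoch, target_tpl) < dist(epoch, nontarget_tpl)

rng = random.Random(7)
train_labels = [i % 2 == 0 for i in range(200)]
train_epochs = [simulated_epoch(y, rng) for y in train_labels]
target_tpl, nontarget_tpl = train_templates(train_epochs, train_labels)

test_labels = [i % 2 == 0 for i in range(200)]
test_epochs = [simulated_epoch(y, rng) for y in test_labels]
correct = sum(classify(e, target_tpl, nontarget_tpl) == y
              for e, y in zip(test_epochs, test_labels))
accuracy = correct / len(test_labels)
print(f"single-trial accuracy: {accuracy:.2f}")
```

With these made-up settings single-trial accuracy is well above chance but far from perfect, which is why calibration quality and repeated trials matter.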

Techniques like these are still considered unreliable and unproven, and thus U.S. courts have resisted admitting P300 data as evidence.

Imagine that instead of using a P300 signal to solve the mystery of a “stolen” item in the lab, someone used this technology to extract information about what month you were born or which bank you use – without your telling them. Our research group has collected data suggesting this is possible. Just using an individual’s brain activity – specifically, their P300 response – we could determine a subject’s preferences for things like favorite coffee brand or favorite sports.

But we could do it only when subject-specific training data were available. What if we could figure out someone’s preferences without previous knowledge of their brain signal patterns? Without the need for training, users could simply put on a device and go, skipping the step of loading a personal training profile or spending time in calibration. Research on trained and untrained devices is the subject of continuing experiments at the University of Washington and elsewhere.

It’s when the technology is able to “read” someone’s mind who isn’t actively cooperating that ethical issues become particularly pressing. After all, we willingly trade bits of our privacy all the time – when we open our mouths to have conversations or use GPS devices that allow companies to collect data about us. But in these cases we consent to sharing what’s in our minds. The difference with next-generation P300 technology under development is that the protection consent gives us may be bypassed altogether.

What if it’s possible to decode what you’re thinking or planning without you even knowing? Will you feel violated? Will you feel a loss of control? Privacy implications may be wide-ranging. Maybe advertisers could know your preferred brands and send you personalized ads – which may be convenient or creepy. Or maybe malicious entities could determine where you bank and your account’s PIN – which would be alarming.

With great power comes great responsibility

The potential ability to determine individuals’ preferences and personal information using their own brain signals has spawned a number of difficult but pressing questions: Should we be able to keep our neural signals private? That is, should neural security be a human right? How do we adequately protect and store all the neural data being recorded for research, and soon for leisure? How do consumers know if any protective or anonymization measures are being applied to their neural data? As of now, neural data collected for commercial uses are not subject to the same legal protections covering biomedical research or health care. Should neural data be treated differently?

Mark Stone, University of Washington, CC BY-ND

These are the kinds of conundrums that are best addressed by neural engineers and ethicists working together. Putting ethicists in labs alongside engineers – as we have done at the CSNE – is one way to ensure that privacy and security risks of neurotechnology, as well as other ethically important issues, are an active part of the research process instead of an afterthought. For instance, Tim Brown, an ethicist at the CSNE, is “housed” within a neural engineering research lab, allowing him to have daily conversations with researchers about ethical concerns. He’s also easily able to interact with – and, in fact, interview – research subjects about their ethical concerns about brain research.

There are important ethical and legal lessons to be drawn about technology and privacy from other areas, such as genetics and neuromarketing. But there seems to be something important and different about reading neural data. They’re more intimately connected to the mind and who we take ourselves to be. As such, ethical issues raised by BCI demand special attention.

Working on ethics while tech’s in its infancy

As we wrestle with how to address these privacy and security issues, there are two features of current P300 technology that will buy us time.

First, most commercially available devices use dry electrodes, which rely solely on skin contact to conduct electrical signals. This technology is prone to a low signal-to-noise ratio, meaning that we can extract only relatively basic forms of information from users. The brain signals we record are also known to be highly variable (even for the same person) due to things like electrode movement and the constantly changing nature of brain signals themselves. Second, electrodes are not always in ideal locations to record.

All together, this inherent lack of reliability means that BCI devices are not nearly as ubiquitous today as they may be in the future. As electrode hardware and signal processing continue to improve, it will become easier to use devices like these continuously, and also easier to extract personal information from an unknowing individual. The safest advice would be to not use these devices at all.

The goal should be that the ethical standards and the technology will mature together to ensure future BCI users are confident their privacy is being protected as they use these kinds of devices. It’s a rare opportunity for scientists, engineers, ethicists and eventually regulators to work together to create even better products than were originally dreamed of in science fiction.

The second panel featured talks on sector-based solutions, starting with the International Federation of the Red Cross (IFRC). The Federation (Aarathi) spoke about their joint project with WeRobotics, which looks at cross-sectoral needs for various robotics solutions in the South Pacific. IFRC is exploring the possibility of launching a South Pacific Flying Labs with a strong focus on women and girls. Pix4D (Lorenzo) addressed the role of aerial robotics in agriculture, giving concrete examples of successful applications while providing guidance to our Flying Labs Coordinators.

Panel number three addressed the transformation of transportation. UNICEF (Judith) highlighted the field tests they have been carrying out in Malawi, using cargo robotics to transport HIV samples in order to accelerate HIV testing and thus treatment. UNICEF has also launched an air corridor in Malawi to enable further field-testing of flying robots. MSF (Oriol) shared their approach to cargo delivery using aerial robotics. They shared examples from Papua New Guinea

I enjoyed talking at the Visual Localization session (another packed out session with standing room only) on our paper, “

The social functions were good and the conference hotel was incredible, especially the infinity pool at the top of the hotel, 57 floors up, looking over the Marina. The night safari was fun too.

Overall, a great week and always great to reconnect with hundreds of colleagues and collaborators from around the world. See you next year in Brisbane!

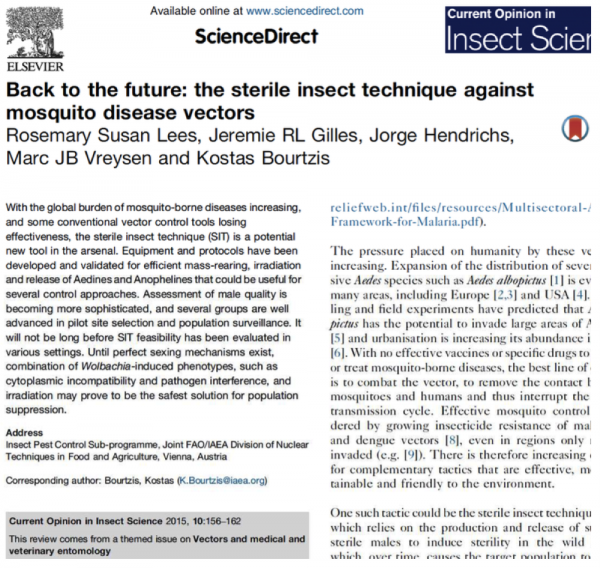

Mosquitos kill more humans every year than any other animal on the planet and conventional methods to reduce mosquito-borne illnesses haven’t worked as well as many hoped. So we’ve been hard at work since receiving this USAID grant six months ago to reduce Zika incidence and related threats to public health.

Our approach seeks to complement and extend (not replace) these existing delivery methods. The challenge with manned aircraft is that they are expensive to operate and maintain. They may also not be able to target areas with great accuracy given the altitudes they have to fly at.

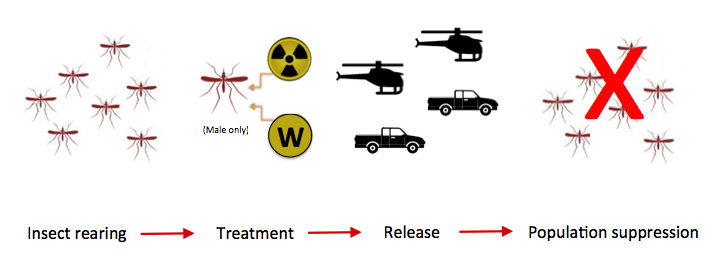

Cars are less expensive, but they rely on ground infrastructure. This can be a challenge in some corners of the world when roads become unusable due to rainy seasons or natural disasters. What’s more, not everyone lives on or even close to a road.

Our IAEA colleagues thus envision establishing small mosquito breeding labs in strategic regions in order to release sterilized male mosquitos and reduce the overall mosquito population in select hotspots. The idea would be to use both ground and aerial release methods with cars and flying robots.

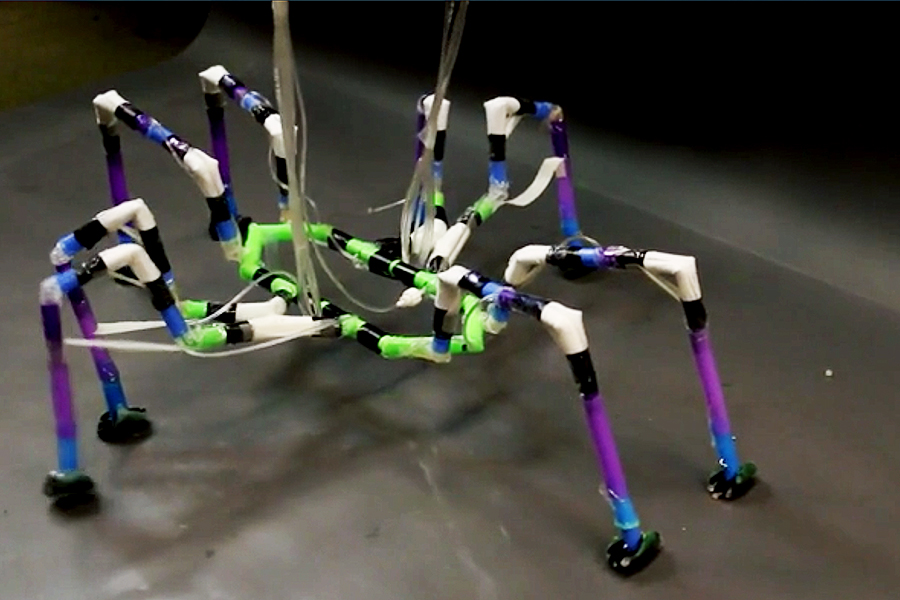

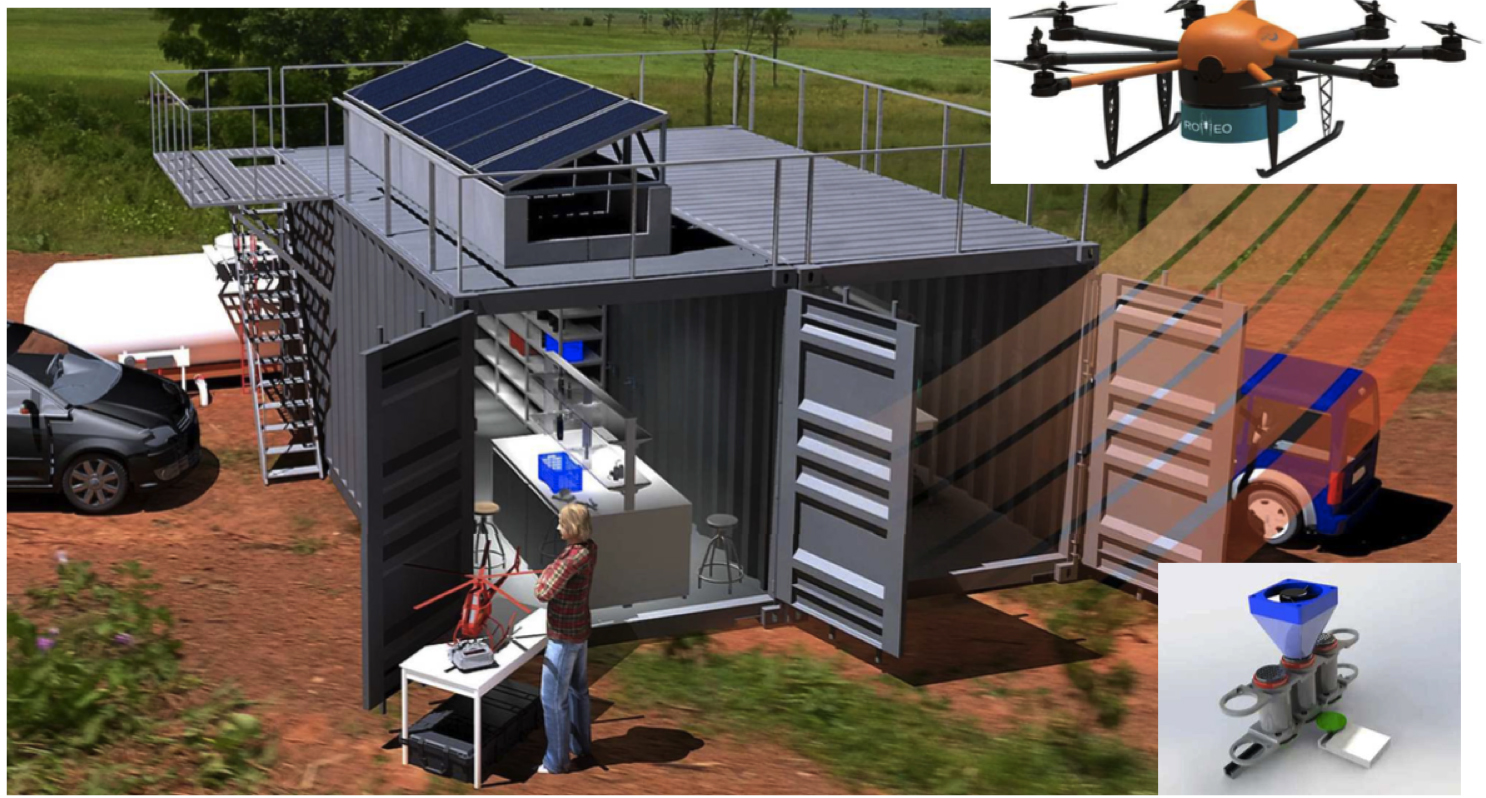

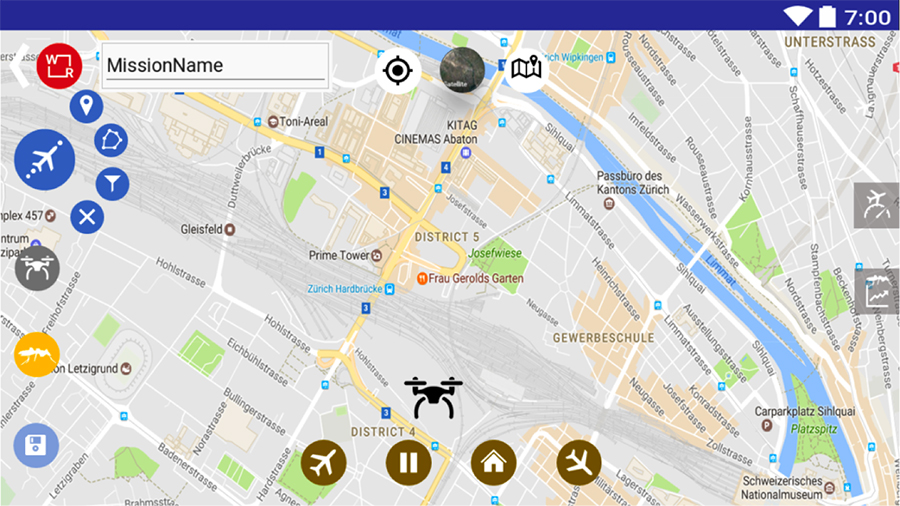

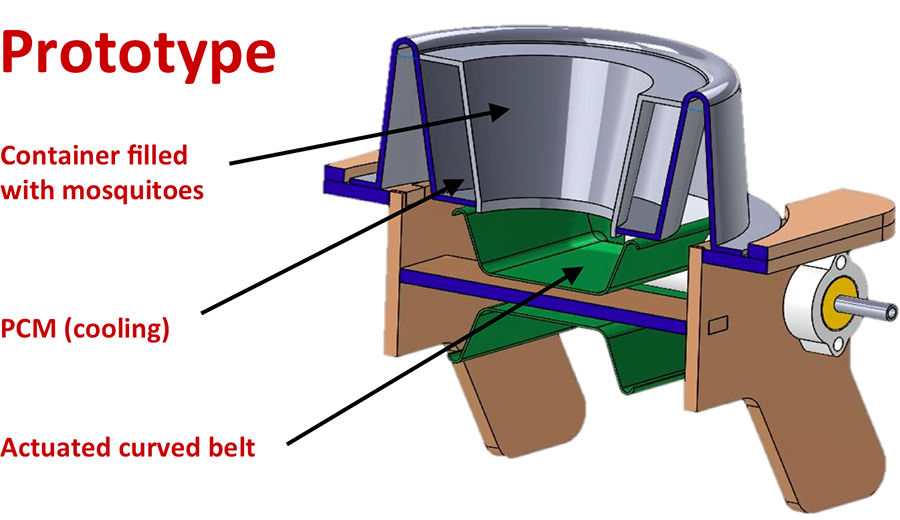

The real technical challenge here, besides breeding millions of sterilized mosquitos, is actually not the flying robot (drone/UAV) but rather the engineering that goes into developing a release mechanism that attaches to the flying robot. In fact, we’re more interested in developing a release mechanism that will work with any number of flying robots, rather than one that works with one and only one drone/UAV. Aerial robotics is evolving quickly, and it is inevitable that drones/UAVs available in 6-12 months will have greater range and payload capacity than today’s. So we don’t want to lock our release mechanism into a platform that may be obsolete by the end of the year. For now, we’re simply using a DJI Matrice M600 Pro so we can focus on engineering the release mechanism.

Developing this release mechanism is anything but trivial. Ironically, mosquitos are particularly fragile, so if they get damaged while being released, game over. What’s more, to pack one million mosquitos (about 2.5 kg) into a confined space, they need to be chilled; otherwise they’ll get into a brawl and damage each other, i.e., game over. (Recall the last time you were stuck in the middle seat in Economy class on a transcontinental flight.) This means that the release mechanism has to include a reliable cooling system. But wait, there’s more. We also need to control the rate of release, i.e., how many thousands of mosquitos are released per unit of space and time, in order to drop them in a targeted and homogeneous manner. Adding to the challenge, the mosquitos need time to warm up during free fall so they can fly away and do their thing before they hit the ground; otherwise, game over.
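Some back-of-the-envelope arithmetic shows how these constraints interact. The sketch below uses the post’s figure of about 2.5 kg per million mosquitos; the target density, flight speed, swath width, and release altitude are hypothetical numbers chosen for illustration, and the fall time ignores air resistance (drag only slows the fall, so it is a lower bound).

```python
import math

PAYLOAD_KG = 2.5                # from the post: ~2.5 kg per million mosquitos
MOSQUITOS_PER_LOAD = 1_000_000

def release_rate_per_s(target_per_ha, speed_m_s, swath_m):
    """Mosquitos per second needed so that a strip of width swath_m, flown at
    speed_m_s, receives target_per_ha mosquitos per hectare (1 ha = 10,000 m^2)."""
    return target_per_ha * speed_m_s * swath_m / 10_000

def min_fall_time_s(altitude_m, g=9.81):
    """Fall time with no air resistance: a lower bound on how long a released
    mosquito has to recover before reaching the ground."""
    return math.sqrt(2 * altitude_m / g)

mass_mg = PAYLOAD_KG * 1e6 / MOSQUITOS_PER_LOAD       # kg -> mg, per mosquito
rate = release_rate_per_s(target_per_ha=5000, speed_m_s=10, swath_m=50)  # hypothetical
minutes_to_empty = MOSQUITOS_PER_LOAD / rate / 60
hectares_per_load = MOSQUITOS_PER_LOAD / 5000

print(f"{mass_mg:.1f} mg per mosquito")                         # 2.5 mg per mosquito
print(f"{rate:.0f} mosquitos/s")                                # 250 mosquitos/s
print(f"{minutes_to_empty:.1f} min to empty one load")          # 66.7 min to empty one load
print(f"{hectares_per_load:.0f} ha per load")                   # 200 ha per load
print(f"{min_fall_time_s(100):.2f} s minimum fall from 100 m")  # 4.52 s minimum fall from 100 m
```

With these made-up numbers a single load would take over an hour to release, so in practice density, speed, swath width, and load size would all have to be traded off against the drone’s flight time, which is one reason precise rate control matters.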

We’ve already started testing our early prototype using “mosquito substitutes” like cumin and anise, which came recommended by mosquito experts. Next month, we’ll be at the FAO/IAEA Pest Control Lab in Vienna to test the release mechanism indoors using dead and live mosquitos. We’ll then have 3 months to develop a second version of the prototype before heading to Latin America to field-test the release mechanism with our Peru Flying Labs. One of these tests will involve integrating the flying robot and the release mechanism in terms of both hardware and software. In other words, we’ll be testing the integrated system over different types of terrain and weather conditions in Peru specifically.

For now, though, our WeRobotics Engineering Team is busy developing the prototype out of our Zurich office. So if you happen to be passing through, definitely let us know; we’d love to show you the latest and give you a demo. We’ll also be reaching out to the Technical University of Peru, who are members of our Peru Flying Labs, to engage with their engineers as we get closer to the field tests in country.

As an aside, our USAID colleagues recently encouraged us to consider an entirely separate follow-up project, fully independent of IAEA, in which we’d be giving rides to Wolbachia-treated mosquitos. Wolbachia is a genus of bacteria used to infect male mosquitos so that their matings with wild females produce no offspring. IAEA does not focus on Wolbachia at all, but other USAID grantees do. Point being, the release mechanism could have multiple applications. For example, instead of releasing mosquitos, the mechanism could scatter seeds. Sound far-fetched?