Navigating the Complexities of the Semiconductor Supply Chain

In a significant development that underscores the strategic importance of semiconductors in the global economy, the White House has recently announced a groundbreaking agreement with Taiwan Semiconductor Manufacturing Company (TSMC). The deal will see the U.S. government extend $11 billion in grants and loans to TSMC for the chip manufacturer to establish three advanced semiconductor factories in Arizona. The ambitious goal is to have 20% of the world’s leading-edge semiconductors manufactured on American soil by 2030.

This move is not merely about enhancing the United States’ semiconductor capabilities but is also a strategic maneuver to mitigate the risks associated with the heavy concentration of chip manufacturing in East Asia, particularly in Taiwan. As we highlighted in our previous article, Semiconductor Titans: Inside the World of AI Chip Manufacturing and Design, the dominance of TSMC in chip manufacturing and NVIDIA in chip design presents a significant concentration risk. However, the complexities of the semiconductor supply chain extend far beyond these giants’ dominance.

The semiconductor supply chain is a labyrinthine network of interconnected processes, each with its own set of vulnerabilities. In this article, we will delve into the key risks that threaten this vital supply chain. We will explore the major concentrations that pose significant challenges and highlight some of the most prominent choke points that can disrupt the flow of semiconductor production. Our aim is to provide a comprehensive understanding of the intricacies involved in the semiconductor supply chain and the critical importance of ensuring its resilience in the face of evolving global challenges.

Key Risk Areas in the Semiconductor Supply Chain

The semiconductor supply chain, essential for the modern world’s functioning, faces numerous risks that could significantly disrupt its operations. These risks include geopolitical tensions, climate and environmental factors, product complexity, critical shortages and disruptions, a shortage of specialized labor, and a complex regulatory environment.

Geopolitical Tensions

The semiconductor industry is deeply entangled in the web of global geopolitics, with tensions between China and Taiwan representing a particularly acute threat. Taiwan’s strategic importance in the semiconductor supply chain is unparalleled, as it is home to 92% of the world’s most sophisticated semiconductor manufacturing capabilities (< 10 nanometers). Any conflict between China and Taiwan could have devastating repercussions for the global semiconductor supply chain, disrupting the production and supply of these critical components.

Compounding the issue are the trade barriers and restrictions implemented by the U.S. and China. Given that each of these economic powerhouses accounts for a quarter of global semiconductor consumption, any trade measures they impose can have significant ripple effects throughout the industry.

Climate and Environmental Factors

The semiconductor supply chain is also vulnerable to disruptions caused by natural disasters, such as earthquakes, heat waves, and flooding. A survey of 100 senior decision-makers in leading semiconductor companies revealed that more than half (53%) consider climate change and environmental factors as significant influences on supply chain risks. Furthermore, 31% of respondents identified environmental changes as underlying factors contributing to supply chain vulnerabilities.

A major concern is the geographical concentration of major suppliers in areas prone to extreme weather events and natural disasters. As the frequency and severity of such events increase due to climate change, the semiconductor industry must adapt and bolster its resilience to safeguard against these environmental challenges.

Product Complexity

One of the most pressing challenges is the increasing complexity of semiconductor products. According to the survey mentioned earlier, 31% of respondents identified this as the primary factor underlying supply chain risks. Producing a single semiconductor requires contributions from thousands of companies worldwide that supply a myriad of raw materials and components. Over the course of production, a chip can cross international borders more than 70 times and travel approximately 25,000 miles, which shows just how complex the supply chain is.

This intricate network makes it difficult to pinpoint vulnerabilities and develop strategies to mitigate them. A staggering 81% of executives in the semiconductor industry admitted that a lack of data, knowledge, and understanding poses significant challenges to addressing risks in the coming years.

Additionally, the relentless demand for increased functionality in semiconductor products further complicates the manufacturing process and the supply chain.

Critical Shortages and Disruptions

The issue of product complexity is further compounded by critical shortages and disruptions in the supply chain. A significant portion of senior decision-makers in leading semiconductor companies (43%) believe that ongoing shortages of raw materials will have the most significant impact on their businesses in the next two years, closely followed by energy and other service interruptions (40%). These shortages and disruptions can have wide-reaching consequences, affecting everything from production timelines to market availability.

In the subsequent sections of this article, we will delve deeper into the major danger points for shortages and disruptions in the semiconductor supply chain.

Shortage of Specialized Labor

The scarcity of skilled technical talent is a critical issue that chip manufacturers are facing and is expected to intensify over the next three years. This shortage is not just a local issue but a global one, affecting the industry’s ability to keep pace with the ever-increasing demand for semiconductors.

A notable example of this challenge is TSMC’s experience in the United States. The company has had to postpone the opening of its facilities, including the first fabrication plant in Arizona, due to the lack of specialized labor in the U.S. In an attempt to address this shortfall, TSMC considered bringing in foreign labor, a move that was met with strong opposition from local unions.

Complex Regulatory Environment

Another significant hurdle for the semiconductor industry is navigating the complex regulatory landscape, especially when it comes to environmental regulations. Chip factories are known for their high water usage and greenhouse gas emissions, making them subject to stringent environmental laws.

In the U.S., companies looking to establish facilities must comply with several regulations, including the National Environmental Policy Act, the Clean Water Act, and the Clean Air Act. For many chip companies, especially those relocating operations from overseas, adhering to these laws can be a daunting task.

The challenge lies in balancing the need for environmental protection with the demands of semiconductor manufacturing, a task that requires careful planning and execution.

Major Concentration Areas in the Semiconductor Supply Chain

The global semiconductor supply chain is a complex and intricate network that is crucial for a multitude of industries worldwide. However, this network is characterized by significant concentrations of production and expertise in specific geographic regions, posing potential risks and vulnerabilities.

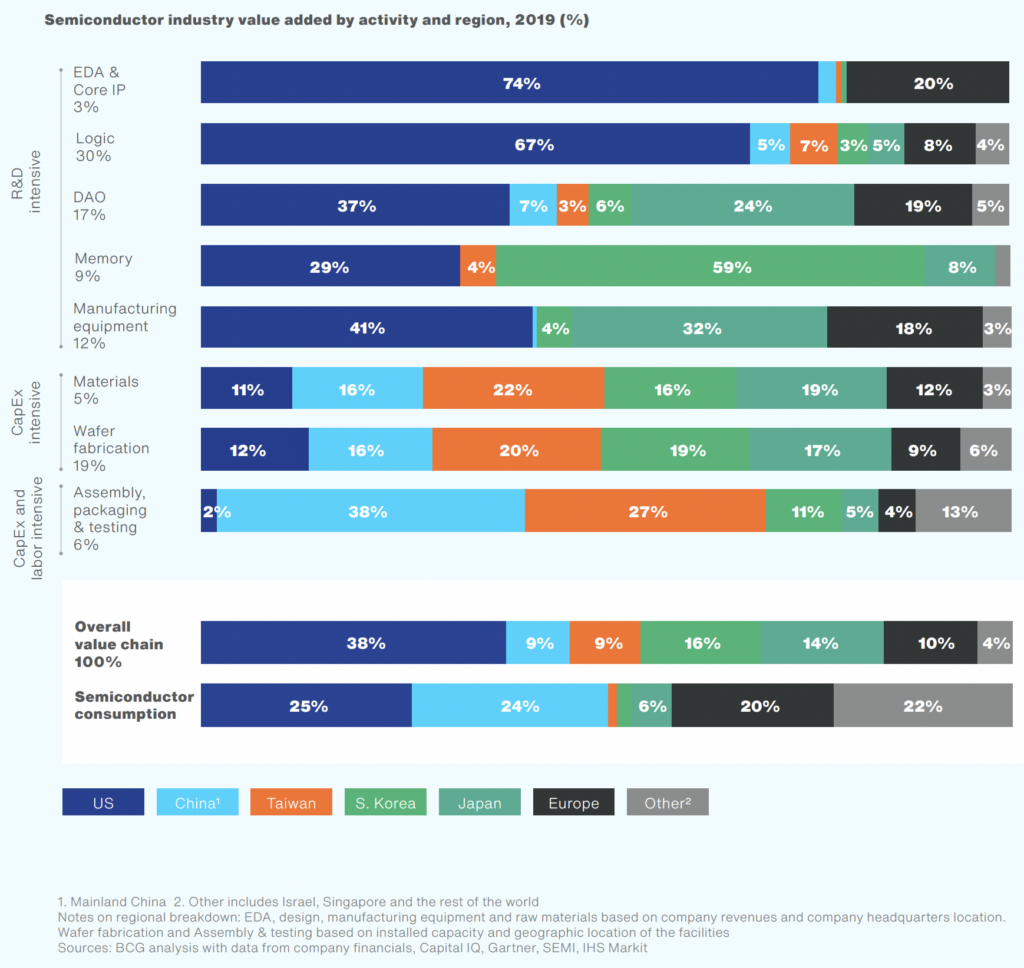

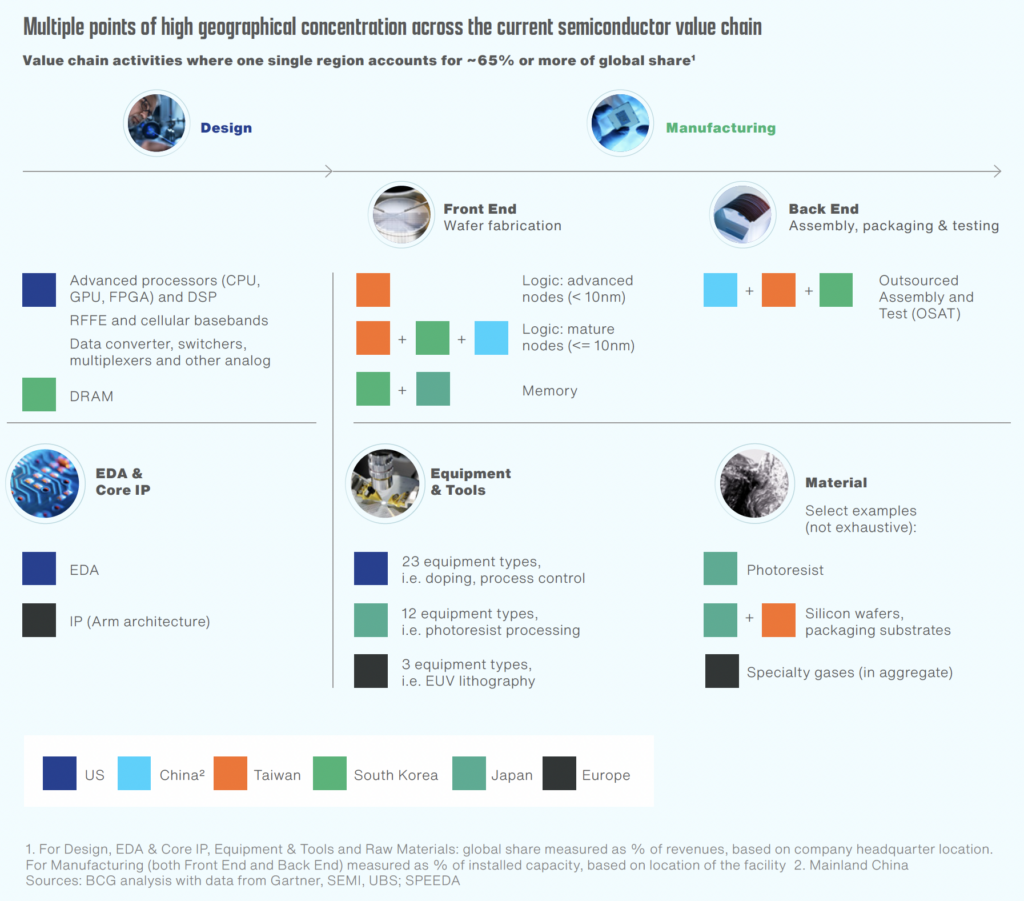

An examination of the semiconductor value chain reveals more than 50 points across the network where a single region holds over 65% of the global market share. This concentration is not evenly distributed: different countries dominate different segments of the supply chain.

The statistics from the graph above may be slightly outdated, but they still illustrate the key trends that remain relevant. For example, approximately 75% of the world’s semiconductor manufacturing capacity is located in China and East Asia. In terms of cutting-edge technology, 100% of the world’s most advanced semiconductor manufacturing capacity (< 10 nanometers) is found in Taiwan (92%) and South Korea (8%). These advanced semiconductors are not just components in consumer electronics; they are pivotal to the economy, national security, and critical infrastructure of any country, highlighting the strategic importance of these capabilities.

At the same time, we need to consider that this region, while being a hub of semiconductor activity, is also exposed to a high degree of seismic activity and geopolitical tensions, further accentuating the risks associated with this concentration.

The concentration in the semiconductor industry extends beyond manufacturing capabilities. The United States is at the forefront of activities that are heavily reliant on R&D, accounting for approximately three-quarters of electronic design automation (EDA) and core IP. Additionally, U.S. firms have a dominant presence in the equipment market, holding more than a 50% share in five major categories of manufacturing process equipment. These categories include deposition tools, dry/wet etch and cleaning equipment, doping equipment, process control systems, and testers.

A high degree of geographic concentration is also present in the supply of certain materials crucial to semiconductor manufacturing, including silicon wafers and photoresist, as well as some chemicals and specialty gases. The concentrated sources of these materials pose additional risks to the stability of the global supply chain. Let’s review a few of the most prominent potential choke points in the semiconductor industry.

Potential Choke Points in the Semiconductor Supply Chain

The semiconductor supply chain is a complex network that relies on a variety of specialized materials and equipment. Certain key components and raw materials have highly concentrated sources of supply, creating potential choke points that could disrupt the entire industry. Below are a few specific examples.

- Lithography Equipment. Advanced lithography machines are crucial for patterning intricate circuits onto silicon wafers. ASML, based in the Netherlands, dominates this niche market as the sole supplier of extreme ultraviolet (EUV) lithography machines. These machines are indispensable for producing the most advanced semiconductor chips, making ASML’s role in the supply chain critically important.

- Neon Gas. Ukraine is a major producer of neon gas, an essential raw material for semiconductor manufacturing. Before the conflict in the region, the country accounted for up to 70% of the global supply of neon gas. The disruptions caused by Russia’s war have led to uncertainty and potential shortages, underscoring the vulnerability associated with depending on a single region for critical materials.

- C4F6 Gas. C4F6 gas is crucial for manufacturing 3D NAND memory and some advanced logic chips. Once a manufacturing plant is calibrated to use C4F6, the gas cannot easily be substituted. The top three suppliers of C4F6 are located in Japan (~40%), Russia (~25%), and South Korea (~23%). A severe disruption in any of these countries could lead to significant losses in the semiconductor industry, with potential revenue losses of $10 to $18 billion for NAND alone. Recovering from such a disruption could take 2-3 years, as new capacity would need to be developed and made ready for mass production.

- Photoresist Materials. Japan holds a dominant position in the photoresist processing market, with over a 90% share. Photoresist materials are vital for the lithography process, making Japan’s role in the supply chain crucial.

- Polysilicon. China is a major player in the silicon market, accounting for 79% of global raw silicon and 70% of global silicon production. The concentration of polysilicon production in China poses a risk to the semiconductor supply chain, as any disruption in the region could have far-reaching effects.

- Critical Minerals. China is also the main source country for many critical minerals required in semiconductor manufacturing, including rare earth elements (REEs), gallium, germanium, arsenic, and copper. The reliance on China for these essential materials adds another layer of vulnerability to the supply chain.

As evident from the above examples, the integrity of the semiconductor supply chain is closely linked to specialized suppliers and materials concentrated in specific geographical regions. This dependence creates vulnerable choke points that could significantly affect the industry’s global operations. Recognizing these vulnerabilities highlights the critical need for diversifying supply sources and implementing comprehensive risk mitigation strategies.

The post Navigating the Complexities of the Semiconductor Supply Chain appeared first on TOPBOTS.

Hyper-V Unveiled: Best Practices for Efficient Hyper-V Backup

Virtualization has become a cornerstone of modern IT infrastructure, enabling organizations to run multiple virtual machines (VMs) on a single physical server. In the field of virtualization, Microsoft’s Hyper-V stands out as a robust, scalable solution for creating and managing virtual machines. However, the advantages of digitization come with the responsibility of ensuring that your...

The post Hyper-V Unveiled: Best Practices for Efficient Hyper-V Backup appeared first on 1redDrop.

Nearly 70% of Newsrooms Using AI

Most newsrooms across the U.S. and Europe are all-in on AI, according to a new study from the Associated Press, which found that nearly 70% of those surveyed are already using AI in some way.

“It’s an exciting moment for journalism and technology, maybe a little too exciting, which makes it difficult to plan for the next year let alone what may transpire in the next 10 years,” says Aimee Rinehart, co-author of the AP study.

Primary uses of AI in newsrooms right now include auto-generating story drafts, social media posts, newsletters and headlines, translating text and transcribing reporter interviews, according to the study.

Adds Hannes Cools, study co-author: “I do believe that generative AI is here to stay, and it will — if it hasn’t already — be present in many aspects of our daily lives.”

In other AI-generated news and analysis:

*In-Depth Guide: Custom-Fit Alternatives to Copy.ai: Writer Sam Dawson offers his take on some of the best AI writers currently available — based on your personal use case.

Writesonic is great for auto-generating blog posts and similar copy, while Word.ai is a good pick for rewrites and Frase is more suited to writers looking to SEO-optimize their copy, according to Dawson.

Bottom line: This is a great piece to check out to ensure you’re bringing on board an AI writer that is specially designed for your particular writing needs.

*ChatGPT Just Got Smarter: Writers looking for writing from ChatGPT that is more direct, less verbose — and opts for more conversational language — will be happy with the tool’s new upgrade.

The enhanced writing capability, available with ChatGPT’s paid versions Plus, Team and Enterprise, is powered by a new AI engine for the tool, GPT-4 Turbo.

The GPT-4 Turbo AI engine also offers users improved chops in math, logical reasoning and coding.

Plus, GPT-4 Turbo gives ChatGPT an upgraded knowledge base that’s current to December 2023.

*Key Players in AI Writing: More Than Just ChatGPT Clones: Infinity Business Insights has released its list of the top players in AI writing in 2024.

The list forms the framework for IBI’s new study, “AI Writing Tool Market Insights, Extending to 2031.”

Many of the companies listed by IBI use ChatGPT’s underlying AI engine, or similar AI tech, to power their AI writing.

They attempt to distinguish their auto-writers from ChatGPT and similar AI engines by layering on a custom dashboard of additional writing tools.

AI writing companies that made IBI’s list include:

Jasper

Sudowrite

Anyword

INK

Scalenut

Neuraltext

Writesonic

Wordtune

Sapling

Notion Labs

Copy.ai

Rytr

Chibi AI

Surfer

Article Forge

WordAI

AI Writer

Hypotenuse AI

Longshot

CreaitorAI

CopySmith

OpenAI

Writer

GrowthBar

Closerscopy

ParagraphAI

Frase

*Ultimate Persuader: AI Bests Humans at Winning Hearts and Minds: It’s official: If you’re looking to persuade people with an op-ed piece, marketing copy or similar text, your best bet is to turn to AI to auto-generate the piece, according to a new study.

Researchers found that an AI opinion piece written using ChatGPT’s underlying AI engine GPT-4 was preferred over human-written copy 82% of the time.

Observes writer Matthias Bastian: “The study suggests that such AI-driven persuasion strategies could have a major impact in sensitive online environments such as social media.”

*Street Hustle: AI Competitors Still Nipping at ChatGPT’s Heels: In the wake of ChatGPT’s new upgrade, fierce competitors like Google and Mistral have doubled down on their chase.

Observes The Guardian: “OpenAI, Google, and the French artificial intelligence startup Mistral have all released new versions of their frontier AI models within 12 hours of one another as the industry prepares for a burst of activity over the summer.”

Meanwhile, Facebook parent Meta is expected to release a significant overhaul to its own AI engine this summer.

*When Some of the Best Things in AI Are Free: While the maker of ChatGPT is still the top choice for corporate customers looking to add AI, a number of open-source AI makers are giving the leader a run for its money.

Says writer Sharon Goldman: “The landscape now includes unicorn startups such as Mistral and Together AI — and boasts a constant barrage of new open-source AI models that are getting ever-closer to beating OpenAI’s flagship GPT-4.

“Just over the past couple of weeks, there were also open source releases of Large Language Models [AI engines] from top companies like Databricks, Cerebras, AI21 and Cohere.”

Proponents of open source release their AI engines to the public for free, reasoning that corporate customers will still pay for customized versions of the basic AI code.

At the same time, free access to the generic AI engines enables AI researchers across the globe to learn from the latest AI research and continually improve on it.

*Free Journalism + AI Online Fest, April 17-21: Writers and editors looking to stream free, live online presentations on AI and journalism will want to check out a free AI festival this week.

Dubbed the International Journalism Festival, the in-person and online fest offers a number of tracks in English.

Plus, the gathering offers still more tracks featuring automated, simultaneous translation into English and other popular languages.

AI tracks in the fest include:

~Applying AI in Small Newsrooms

~Can Journalism Survive AI

~Freelance Journalism in the Age of AI

*Walmart Online Powers Up With AI Writing: Third-party sellers on the Walmart.com site can now auto-generate product descriptions using ChatGPT and similar AI tech.

The new tool, dubbed ContentHubGPT, also auto-suggests keywords for those product descriptions, along with other search engine optimization suggestions.

Says Sumit Kapoor, managing partner, Zorang — maker of ContentHubGPT: “Walmart Marketplace is a leader and it gives me tremendous pride to collaborate with such a storied name.”

*AI Big Picture: Pizza Hut Goes ‘AI First:’ You know the world has reached critical mass in AI when brands like Pizza Hut, Taco Bell and Kentucky Fried Chicken all boldly declare they are now ‘AI First’ companies.

Says Joe Park, chief digital and technology officer, Yum Brands — the parent company behind Pizza Hut et al: Our vision “is that an AI-first mentality works every step of the way.

“If you think about the major journeys within a restaurant that can be AI-powered, we believe it’s endless.”

–Joe Dysart is editor of RobotWritersAI.com and a tech journalist with 20+ years of experience. His work has appeared in 150+ publications, including The New York Times and the Financial Times of London.

The post Nearly 70% of Newsrooms Using AI appeared first on Robot Writers AI.