#IROS2020 Plenary and Keynote talks focus series #1: Yukie Nagai & Danica Kragic

Would you like to stay up to date with the latest robotics & AI research from top roboticists? The IEEE/RSJ IROS2020 (International Conference on Intelligent Robots and Systems) recently released its Plenary and Keynote talks on the IEEE RAS YouTube channel. We’re starting a new focus series covering all of these talks. This week, we’re featuring Professor Yukie Nagai (University of Tokyo), talking about cognitive development in humans and robots, and Professor Danica Kragic (KTH Royal Institute of Technology), talking about the impact of robotics and AI on the fashion industry.

Prof. Yukie Nagai – Cognitive Development in Humans and Robots: New Insights into Intelligence

Abstract: Computational modeling of cognitive development has the potential to uncover the underlying mechanism of human intelligence as well as to design intelligent robots. We have been investigating whether a unified theory accounts for cognitive development and what computational framework embodies such a theory. This talk introduces a neuroscientific theory called predictive coding and shows how robots as well as humans acquire cognitive abilities using predictive processing neural networks. A key idea is that the brain works as a predictive machine; that is, the brain tries to minimize prediction errors by updating the internal model and/or by acting on the environment. Our robot experiments demonstrate that the process of minimizing prediction errors leads to continuous development from non-social to social cognitive abilities. Internal models acquired through their own sensorimotor experiences enable robots to interact with others by inferring their internal state. Our experiments inducing atypicality in predictive processing also explain why and how developmental disorders appear in social cognition. I discuss new insights into human and robot intelligence obtained from these studies.

Bio: Yukie Nagai is a Project Professor at the International Research Center for Neurointelligence, the University of Tokyo. She received her Ph.D. in Engineering from Osaka University in 2004 and worked at the National Institute of Information and Communications Technology, Bielefeld University, and Osaka University. Since 2019, she has led the Cognitive Developmental Robotics Lab at the University of Tokyo. Her research interests include cognitive developmental robotics, computational neuroscience, and assistive technologies for developmental disorders. Her research achievements have been widely reported in the media as novel techniques to understand and support human development. She also serves as the research director of JST CREST Cognitive Mirroring.

Prof. Danica Kragic – Robotics and Artificial Intelligence Impacts on the Fashion Industry

Abstract: This talk will give an overview of how robotics and artificial intelligence can impact the fashion industry. What can we do to make the fashion industry more sustainable, and what are the most difficult parts of this industry to automate? Concrete examples of research problems in terms of perception, manipulation of deformable materials and planning will be discussed in this context.

Bio: Danica Kragic is a Professor at the School of Computer Science and Communication at the Royal Institute of Technology, KTH. She received an MSc in Mechanical Engineering from the Technical University of Rijeka, Croatia in 1995 and a PhD in Computer Science from KTH in 2001. She has been a visiting researcher at Columbia University, Johns Hopkins University and INRIA Rennes. She is the Director of the Centre for Autonomous Systems. Danica received the 2007 IEEE Robotics and Automation Society Early Academic Career Award. She is a member of the Royal Swedish Academy of Sciences, the Royal Swedish Academy of Engineering Sciences and the Young Academy of Sweden. She holds an Honorary Doctorate from the Lappeenranta University of Technology. Her research is in the area of robotics, computer vision and machine learning. She received ERC Starting and Advanced Grants. Her research is supported by the EU, the Knut and Alice Wallenberg Foundation, the Swedish Foundation for Strategic Research and the Swedish Research Council. She is an IEEE Fellow.

RO-MAN 2021 Roboethics Competition: Bringing ethical robots into the home

In 1984, Heathkit presented HERO Jr. as the first robot that could be used in households to perform a variety of tasks, such as guarding people’s homes, setting reminders, and even playing games. Following this development, many companies launched affordable “smart robots” that could be used within the household. Some of these technologies, like Google Home, Amazon Echo and Roomba, have become household staples; meanwhile, other products such as Jibo, Anki, and Kuri failed to launch successfully despite having all the necessary resources.

By Marshall Astor – flickr.com, CC BY-SA 2.0, https://commons.wikimedia.org/w/index.php?curid=5531066

Why were these robots shut down? Why aren’t there more social and service robots in households, particularly with the rising elderly population and increasing number of full-time working parents? The simple answer is that most of these personal robots do not work well, but this is not necessarily because we lack the technological capacity to build highly functional robots.

Technologists have accomplished amazing physical tasks with robots, such as a humanoid robot that can perform gymnastics movements or a robotic dog that traverses rough trails. However, as of now we cannot guarantee that these robots and personal assistants will act appropriately in the complex and sensitive social dynamics of a household. This poses a significant obstacle to developing domestic service robots because companies would be liable for any harm a socially inappropriate robot causes. As robots become more affordable and technically feasible, the challenge of designing robots that act in accordance with context-specific social norms becomes increasingly pronounced. Researchers in human-robot interaction and roboethics have attempted to resolve this issue for the past few decades, and while progress has been made, there is an urgent need to address the ethical implications of service robots in practice.

As an attempt to take a more solution-focused path for these challenges, we are happy to share a completely new competition with our readers. This year, the Roboethics to Design & Development competition will take place as a part of RO-MAN—an international conference on robot and human interactive communication. The competition, the first and only one of its kind, fulfills a need for interactive education on roboethics.

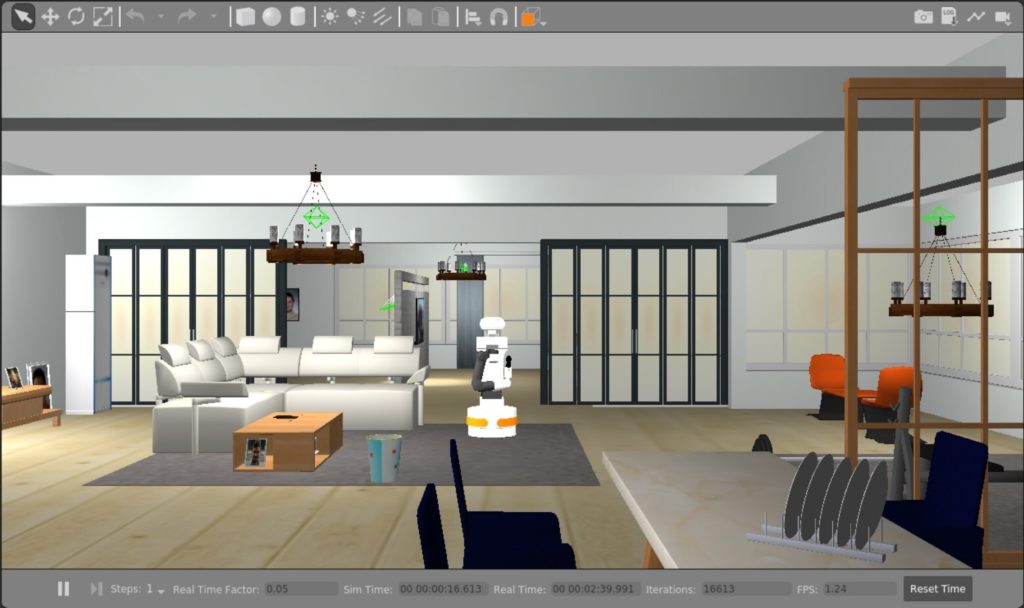

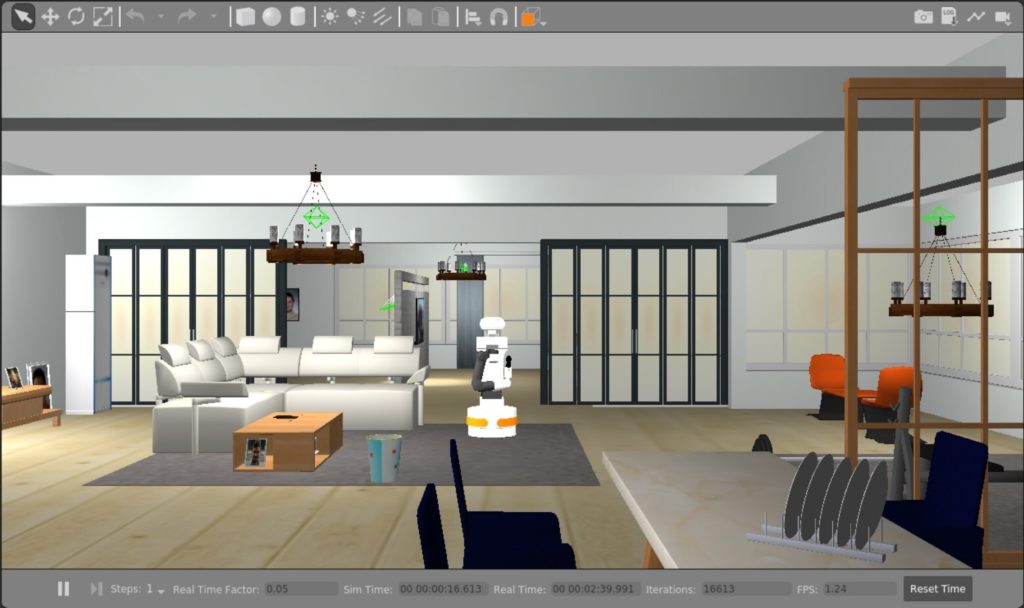

In partnership with RoboHub at the University of Waterloo, the competition organizers designed a virtual environment where participating teams will develop a robot that fetches objects in a home. The household is composed of a variety of personas—a single mother who works as a police officer, a teenage daughter, her college-aged boyfriend, a baby, and a dog. For example, design teams will need to consider how a home robot may respond to the daughter’s request to take her parent’s credit card. In this ethical conundrum, should the robot obey the daughter, and would the robot be responsible if the daughter were to use the credit card without her mom’s permission?

How can we identify and address ethical challenges presented by a home robot?

Participants are challenged to tackle the long-standing problem of how we can design a safe and ethical robot in a dynamic environment where values, beliefs, and priorities may be in conflict. With submissions from around the world, it will be fascinating to see how solutions may differ and translate across cultures.

It is important to recognize that there is a void when searching for standards and ethical rules in robotics. In an attempt to address this void, various toolkits have been developed to guide technologists in identifying and resolving ethical challenges. Here are some steps from the Foresight into AI Ethics Toolkit that will be helpful in designing an ethical home robot:

- Who are our stakeholders and what are their values, beliefs, and priorities?

Stakeholders refer to the people who are directly or indirectly impacted by the technology. In the case of RO-MAN’s home robot, we want to consider:

- Who is in the household and what is important to them in how they live their day to day?

- What are the possible interactions among the household members, and between the household members and the robot?

- How will the robot interact with the various stakeholders?

- What are the goals and values of each stakeholder? How do the stakeholders expect to interact with the robot? What do they expect to gain from the robot?

- What are the value tensions presented by the home robot and social context?

Once we’ve reviewed the values of each stakeholder identified in the previous step, we may notice that some of their values may be in conflict with one another. As such, we need to identify these value tensions because they can lead to ethical issues.

Here are some questions that can prompt us to think about value tensions:

- Who was involved in the decision of purchasing the robot? What were their goals in introducing the robot to the household?

- What is the cultural context or background for this particular household? What societal values could impact stakeholders’ individual beliefs and actions?

- What might the different stakeholders argue about? For example, what might the teenage daughter and the mother disagree about with regard to the robot’s capabilities?

- How will the robot create or relieve conflict in the household?

In some cases, value tensions may indicate a clear moral tradeoff, such that two values or goals conflict and one must be prioritized over another. The challenge is therefore to design solutions that fairly balance stakeholder interests while respecting their fundamental rights.

- How can we resolve the identified value tensions?

Focusing on these value tensions, we can begin to brainstorm how these conflicts can be addressed and at what level they should be addressed. In particular, we need to determine whether a value tension can be resolved at a systems or technical level. For example, if the mother does not trust her daughter’s boyfriend, will the presence and actions of the home robot address the root of the problem (i.e. distrust)? Most likely not, but the robot may alleviate or exacerbate the issue. Therefore, we need to consider how the tension may manifest in more granular ways: how can we ensure that the robot appropriately navigates the trust boundaries of the mother-boyfriend relationship? The robot must protect the privacy of all stakeholders, safeguard their personal items, abide by the law, and respect other social norms.
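As one illustration of what "safeguarding personal items" could look like at a technical level, here is a minimal Python sketch of an ownership-based fetch rule applied to the credit card scenario. Everything in it is a hypothetical assumption made for illustration: the `Item` and `Decision` types, the `decide_fetch` function, the `sensitive` flag, and the persona names are not part of the competition's environment or of the Foresight into AI Ethics Toolkit, and a real design would need to handle far more context than this.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    FETCH = "fetch"          # hand the item over
    ASK_OWNER = "ask_owner"  # defer to the item's owner first
    REFUSE = "refuse"        # decline the request


@dataclass(frozen=True)
class Item:
    name: str
    owner: str               # persona who owns the item (hypothetical label)
    sensitive: bool = False  # assumed flag, e.g. payment cards or documents


def decide_fetch(requester, item, known_people, permissions=frozenset()):
    """Decide how the robot responds when `requester` asks for `item`.

    `known_people` is the set of personas the robot recognizes.
    `permissions` holds (requester, item name) pairs the owner has approved.
    """
    if requester not in known_people:
        return Decision.REFUSE
    if requester == item.owner or (requester, item.name) in permissions:
        return Decision.FETCH
    if item.sensitive:
        # Sensitive items are not handed over without the owner's approval.
        return Decision.ASK_OWNER
    return Decision.FETCH


household = {"mother", "daughter", "boyfriend"}
credit_card = Item("credit card", owner="mother", sensitive=True)

# The daughter asks for her mother's credit card: the robot defers to the mother.
print(decide_fetch("daughter", credit_card, household))
```

A rule like this only addresses the granular question of who may receive which item; it does nothing about the underlying distrust between stakeholders, which is why the level at which a tension is addressed matters.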

When developing solutions, we can begin by asking ourselves:

- What level is the value tension occurring at, and what level(s) of solutions are accessible to the competition’s design parameters?

- Which actions from the robot will produce the most good and do the least harm?

- How can we manipulate the functions of the robot to address ethical challenges? For instance:

  - How might the robot’s speech functions impact the identified value tensions?

  - In what ways should the robot’s movements be limited to respect stakeholders’ privacy and personal spaces?

- How can design solutions address all stakeholders’ values?

- How can we maximize the positive impact of the design to address as many ethical challenges as possible?

What does the public think about the scenarios and approaches?

Often the public voice is missing in roboethics spaces, and we seek to engage the public in tackling these ethical challenges. To present the participants’ ethical designs to a broader audience, the Open Roboethics Institute will be running a public poll and awarding a Citizen’s Award to the team with the most votes. If you are interested in learning about and potentially judging their solutions, we encourage you to participate in the vote next month. We look forward to hearing from you!

To learn more about the roboethics competition at RO-MAN 2021, please visit https://competition.raiselab.ca. If you have any questions about the ORI Foresight into AI Ethics Toolkit, contact us at contact@openroboethics.org.
