Self-supervised learning of visual appearance solves fundamental problems of optical flow

Flying insects as inspiration to AI for small drones

How do honeybees land on flowers or avoid obstacles? One would expect such questions to be mostly of interest to biologists. However, the rise of small electronics and robotic systems has also made them relevant to robotics and Artificial Intelligence (AI). For example, small flying robots are extremely restricted in terms of the sensors and processing that they can carry onboard. If these robots are to be as autonomous as the much larger self-driving cars, they will have to use an extremely efficient type of artificial intelligence – similar to the highly developed intelligence possessed by flying insects.

Optical flow

One of the main tricks up the insect’s sleeve is the extensive use of ‘optical flow’: the way in which objects move in their view. They use it to land on flowers and avoid obstacles or predators. Insects use surprisingly simple and elegant optical flow strategies to tackle complex tasks. For example, for landing, honeybees keep the optical flow divergence (how quickly things get bigger in view) constant when going down. By following this simple rule, they automatically make smooth, soft landings.
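To see why this simple rule produces a soft touchdown, here is a minimal sketch. It assumes an idealised drone that can set its descent speed directly, and it defines divergence as descent speed divided by height. The function names, step size and numbers are illustrative assumptions and are not taken from the article.

```python
import math


def constant_divergence_descent(h0, divergence, dt=0.01, duration=10.0):
    """Simulate a descent in which (descent speed / height) is held constant.

    Returns a list of (time, height, descent_speed) tuples.
    """
    h = h0
    trajectory = [(0.0, h, divergence * h)]
    steps = int(round(duration / dt))
    for i in range(1, steps + 1):
        speed = divergence * h  # speed needed to keep the divergence constant
        h -= speed * dt         # simple Euler integration step
        trajectory.append((i * dt, h, divergence * h))
    return trajectory


def analytic_height(h0, divergence, t):
    """Closed-form solution of dh/dt = -divergence * h."""
    return h0 * math.exp(-divergence * t)


trajectory = constant_divergence_descent(h0=10.0, divergence=0.5)
t_end, h_end, speed_end = trajectory[-1]
print(f"after {t_end:.1f} s: height {h_end:.3f} m, descent speed {speed_end:.3f} m/s")
```

In this idealised model the height decays exponentially, so the descent speed shrinks in proportion to the remaining height and the drone arrives at the surface with almost no vertical speed.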

I started my work on optical flow control from enthusiasm about such elegant, simple strategies. However, developing the control methods to actually implement these strategies in flying robots turned out to be far from trivial. For example, when I first worked on optical flow landing, my flying robots would not actually land. Instead, they started to oscillate, continuously going up and down just above the landing surface.

Fundamental problems

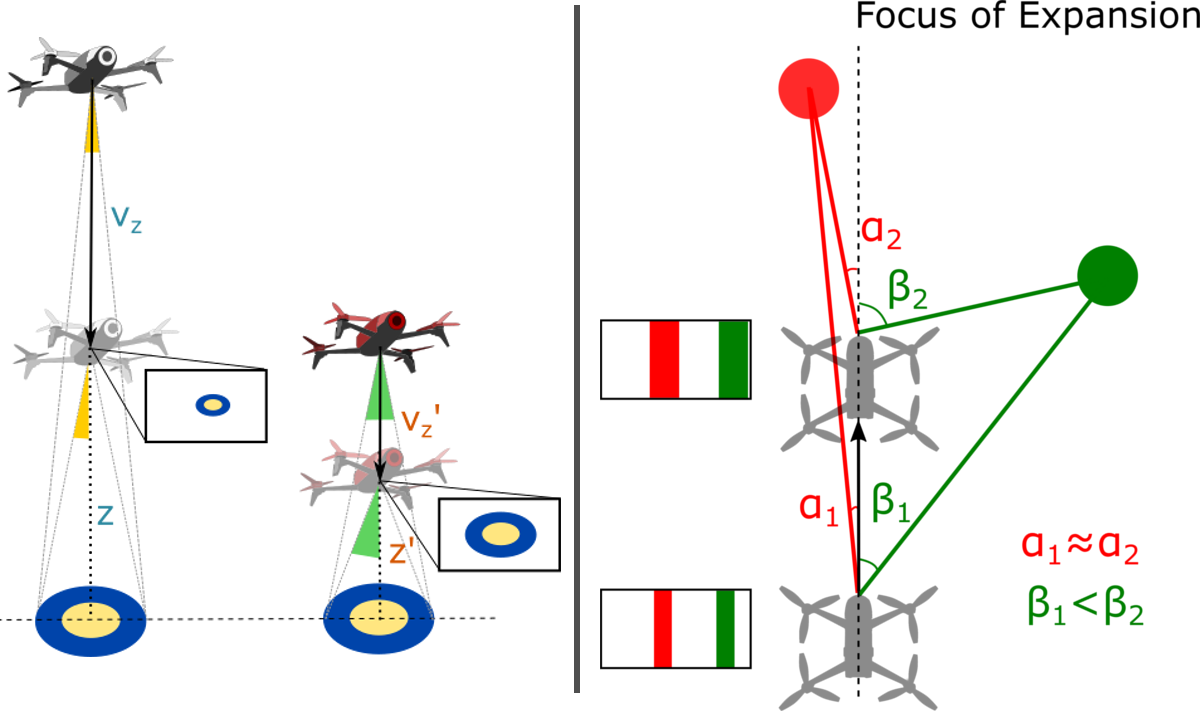

Optical flow has two fundamental problems that have been widely described in the growing literature on bio-inspired robotics. The first problem is that optical flow only provides mixed information on distances and velocities – and not on distance or velocity separately. To illustrate, if there are two landing drones and one of them flies twice as high and twice as fast as the other drone, then they experience exactly the same optical flow. However, for good control these two drones should actually react differently to deviations in the optical flow divergence. If a drone does not adapt its reactions to the height when landing, it will never arrive; instead, it will start to oscillate above the landing surface.
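Both halves of this first problem can be reproduced in a few lines. The sketch below is a toy model under stated assumptions: divergence is taken as descent speed divided by height, and the controller is a plain proportional loop whose command is held for one control period `dt`. The names `divergence` and `simulate_hover`, the gain and the time step are hypothetical choices for illustration, not the controller used in the article.

```python
def divergence(height, descent_speed):
    """Optical flow divergence of a downward-looking camera, in 1/s."""
    return descent_speed / height


def simulate_hover(height, gain, dt=0.1, v0=0.01, steps=10):
    """Toy discrete-time loop that tries to drive the divergence to zero.

    Returns the descent speed at every control step (positive = descending).
    """
    h, v = height, v0
    speeds = [v]
    for _ in range(steps):
        accel = -gain * divergence(h, v)  # proportional reaction to divergence
        v += accel * dt                   # command is held for one period
        h -= v * dt
        speeds.append(v)
    return speeds


# Twice as high and twice as fast: the observed divergence is identical.
print(divergence(1.0, 0.5), divergence(2.0, 1.0))

# Same gain, different heights: a small disturbance dies out at 4 m
# but grows into an oscillation at 0.4 m.
print(simulate_hover(height=4.0, gain=10.0)[-1])
print(simulate_hover(height=0.4, gain=10.0)[-1])
```

In this toy model each step multiplies the speed error by roughly `1 - gain * dt / height`, so the error grows and flips sign once `gain * dt / height` exceeds 2. A gain that works well high up therefore causes oscillations close to the surface, unless it is reduced as the height decreases.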

The second problem is that optical flow is very small, and hence hardly informative, in the direction in which a robot is moving. This is very unfortunate for obstacle avoidance, because it means that the obstacles straight ahead of the robot are the hardest ones to detect! The problems are illustrated in the figures below.

Right: Problem 2: The drone moves straight forward, in the direction of its forward-looking camera. Hence, the focus of expansion is straight ahead. Objects close to this direction, like the red obstacle, have very little flow. This is illustrated by the red lines in the figure: The angles of these lines with respect to the camera are very similar. Objects further from this direction, like the green obstacle, have considerable flow. Indeed, the green lines show that the angle quickly gets bigger when the drone moves forward.
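The geometry in this caption can be checked numerically. The sketch below places two static obstacles at the same distance, one almost straight ahead and one well off to the side, and computes how much their viewing angle changes after a short forward step. The distances, angles and function names are assumptions chosen for illustration.

```python
import math


def bearing_deg(lateral, forward):
    """Angle between the flight direction and the line of sight, in degrees."""
    return math.degrees(math.atan2(lateral, forward))


def bearing_change_deg(distance, bearing0_deg, step):
    """Change in bearing of a static point after flying `step` metres ahead."""
    lateral = distance * math.sin(math.radians(bearing0_deg))
    forward = distance * math.cos(math.radians(bearing0_deg))
    return bearing_deg(lateral, forward - step) - bearing0_deg


# Both obstacles start 5 m away; the drone flies 0.5 m straight ahead.
red = bearing_change_deg(distance=5.0, bearing0_deg=2.0, step=0.5)
green = bearing_change_deg(distance=5.0, bearing0_deg=30.0, step=0.5)
print(f"red obstacle: {red:.2f} deg, green obstacle: {green:.2f} deg")
```

The obstacle near the flight direction barely moves in the image, while the one off to the side shifts by an order of magnitude more, even though both are equally close.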

Learning visual appearance as the solution

In an article published in Nature Machine Intelligence today [1], we propose a solution to both problems. The main idea was that both problems of optical flow would disappear if the robots were able to interpret not only optical flow, but also the visual appearance of objects in their environment. This solution becomes evident from the above figures. The rectangular insets show the images captured by the drones. For the first problem it is evident that the image perfectly captures the difference in height between the white and red drone: The landing platform is simply larger in the red drone’s image. For the second problem the red obstacle is as large as the green one in the drone’s image. Given their identical size, the obstacles are equally close to the drone.

Exploiting visual appearance as captured by an image would allow robots to see distances to objects in the scene similarly to how we humans can estimate distances in a still picture. This would allow drones to immediately pick the right control gain for optical flow control and it would allow them to see obstacles in the flight direction. The only question was: How can a flying robot learn to see distances like that?

The key to this question lay in a theory I devised a few years back [2], which showed that flying robots can actively induce optical flow oscillations to perceive distances to objects in the scene. In the approach proposed in the Nature Machine Intelligence article the robots use such oscillations in order to learn what the objects in their environment look like at different distances. In this way, the robot can for example learn how fine the texture of grass is when looking at it from different heights during landing, or how thick tree barks are at different distances when navigating in a forest.
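As a rough illustration of this self-supervised idea, the sketch below lets a simulated robot pair one appearance cue with the control gain at which oscillations start, and then predict that gain from appearance alone. Everything in it is a hypothetical toy: the pinhole camera constants, the single cue (apparent platform width in pixels), the stability limit `2 * height / DT` and the inverse-width model are assumptions for illustration, not the features or learner used in the article.

```python
FOCAL_PX = 300.0   # hypothetical focal length, in pixels
PLATFORM_M = 1.0   # hypothetical landing platform width, in metres
DT = 0.1           # control period of the toy model, in seconds


def platform_width_px(height):
    """Appearance cue: apparent platform width under a pinhole camera model."""
    return FOCAL_PX * PLATFORM_M / height


def critical_gain(height):
    """Toy stand-in for the gain at which the robot starts to oscillate."""
    return 2.0 * height / DT


def fit_inverse_width_model(samples):
    """Least-squares fit of gain = k / width_px (a line through the origin)."""
    num = sum(gain / width for width, gain in samples)
    den = sum((1.0 / width) ** 2 for width, _ in samples)
    return num / den


# Training: the robot only records what it sees and the gain at which it
# oscillated; the height itself is never given to the learner.
samples = [(platform_width_px(h), critical_gain(h)) for h in (1.0, 2.0, 4.0, 8.0)]
k = fit_inverse_width_model(samples)


def predict_gain(width_px):
    """Predict the oscillation gain from a single image measurement."""
    return k / width_px


print(predict_gain(platform_width_px(3.0)), critical_gain(3.0))
```

After training, a still image is enough to pick a gain, so the robot no longer needs to oscillate to find out how strongly it may react.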

Relevance to robotics and applications

Implementing this learning process on flying robots led to much faster, smoother optical flow landings than we ever achieved before. Moreover, for obstacle avoidance, the robots were now also able to see obstacles in the flight direction very clearly. This not only improved obstacle detection performance, but also allowed our robots to speed up. We believe that the proposed methods will be very relevant to resource-constrained flying robots, especially when they operate in a rather confined environment, such as flying in greenhouses to monitor crops or keeping track of the stock in warehouses.

It is interesting to compare our way of distance learning with recent methods in the computer vision domain for single camera (monocular) distance perception. In the field of computer vision, self-supervised learning of monocular distance perception is done with the help of projective geometry and the reconstruction of images. This results in impressively accurate, dense distance maps. However, these maps are still “unscaled” – they can show that one object is twice as far as another one but cannot convey distances in an absolute sense.

In contrast, our proposed method provides “scaled” distance estimates. Interestingly, the scaling is not in terms of meters but in terms of control gains that would lead the drone to oscillate. This makes it very relevant for control. This feels very much like the way in which we humans perceive distances. For us, too, it may be more natural to reason in terms of actions (“Is an object within reach?”, “How many steps do I roughly need to get to a place?”) than in terms of meters. It is hence very reminiscent of the perception of “affordances”, a concept put forward by Gibson, who introduced the concept of optical flow [3].
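The difference between unscaled and scaled distance estimates can be made concrete with a small sketch. An unscaled map fixes only the ratios between distances, so a single absolute reference is enough to scale the whole map. The function name and the numbers below are illustrative assumptions.

```python
def rescale(relative_depths, reference_index, reference_distance):
    """Turn an unscaled depth map into a scaled one using one known distance."""
    scale = reference_distance / relative_depths[reference_index]
    return [scale * d for d in relative_depths]


# Unscaled: the second object is twice as far as the first, the third four times.
relative = [1.0, 2.0, 4.0]

# Knowing that the first object is 1.5 units away scales all the others.
print(rescale(relative, reference_index=0, reference_distance=1.5))
```

The unit of `reference_distance` is free to choose: it could be meters, or, as in the approach described above, a control gain.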

Relevance to biology

The findings are not only relevant to robotics, but also provide a new hypothesis for insect intelligence. Typical honeybee experiments start with a learning phase, in which honeybees exhibit various oscillatory behaviors when they get acquainted with a new environment and related novel cues like artificial flowers. The final measurements presented in articles typically take place after this learning phase has finished and focus predominantly on the role of optical flow. The presented learning process forms a novel hypothesis on how flying insects improve their navigational skills over their lifetime. This suggests that we should set up more studies to investigate and report on this learning phase.

On behalf of all authors,

Guido de Croon, Christophe De Wagter, and Tobias Seidl.

References

- [1] Enhancing optical-flow-based control by learning visual appearance cues for flying robots. G.C.H.E. de Croon, C. De Wagter, and T. Seidl, Nature Machine Intelligence, 3(1), 2021.

- [2] Monocular distance estimation with optical flow maneuvers and efference copies: a stability-based strategy. G.C.H.E. de Croon, Bioinspiration & Biomimetics, 11(1), 016004, 2016.

- [3] The Ecological Approach to Visual Perception. J.J. Gibson, 1979.

WSR: A new Wi-Fi-based system for collaborative robotics

Getting the Right Grip: Designing Soft and Sensitive Robotic Fingers

#326: Deep Sea Mining, with Benjamin Pietro Filardo

In this episode, Abate follows up with Benjamin Pietro Filardo, founder of Pliant Energy Systems and NACROM, the North American Consortium for Responsible Ocean Mining. Pietro talks about the deep sea mining industry, an untapped market with a massive potential for growth. He discusses the currently proposed solutions for deep sea mining, which are environmentally destructive, and offers an alternative solution using swarm robots that could mine the depths of the ocean while creating minimal disturbance to this mysterious habitat.

Benjamin “Pietro” Filardo

After several years in the architectural profession, Pietro founded Pliant Energy Systems to explore renewable energy concepts he first pondered while earning his first degree in marine biology and oceanography. With funding from four federal agencies he has broadened the application of these concepts into marine propulsion and a highly novel robotics platform.

A technique that allows robots to estimate the pose of objects by touching them

MakinaRocks, Hyundai Robotics sign MOU to ‘advance AI-based industrial robot arm anomaly detection’

Women in Robotics Update: introducing our 2021 Board of Directors

Women in Robotics is a grassroots community involving women from across the globe. Our mission is supporting women working in robotics and women who would like to work in robotics. We formed an official 501(c)(3) non-profit organization in 2020, headquartered in Oakland, California. We’d like to introduce our 2021 Board of Directors:

Andra Keay, Women in Robotics President

Managing Director at Silicon Valley Robotics | Visiting Scholar at CITRIS People and Robots Lab | Startup Advisor & Investor

Andra Keay founded Women in Robotics originally under the umbrella of Silicon Valley Robotics, the non-profit industry group supporting innovation and commercialization of robotics technologies. Andra’s background is in human-robot interaction and communication theory. She is a trained futurist, founder of the Robot Launch global startup competition, Robot Garden maker space, Women in Robotics and is a mentor, investor and advisor to startups, investors, accelerators and think tanks, with a strong interest in commercializing socially positive robotics and AI. Andra speaks regularly at leading technology conferences, and is Secretary-General of the International Alliance of Robotics Associations. She is also a Visiting Scholar with the UC’s CITRIS People and Robots Research Group.

Allison Thackston

Roboticist, Software Engineer & Manager– Waymo

Allison Thackston is the Chair of Women in Robotics Website SubCommittee and CoChair of our New Chapter Formation SubCommittee. She is also a Founding Member of the ROS2 Technical Steering Committee. Prior to working at Waymo, she worked at Nuro and was the Manager of Shared Autonomy at Toyota Research Institute, and Principal Research Scientist Intelligent Manipulation. She has an MS in Robotics and MechEng from the University of Hawaii and a BS in EEng from Georgia Tech. With a passion for robots and robotic technologies, she brings energy, dedication, and smarts to all the challenges she faces.

Ariel Anders

Roboticist – Robust.AI

Ariel Anders is a black feminist roboticist who enjoys spending time with her family and artistic self-expression. Anders is the first roboticist hired at Robust.AI, an early stage robotics startup building the world’s first industrial grade cognitive engine. Anders received a BS in Computer Engineering from UC Santa Cruz and her Doctorate in Computer Science from MIT, where she taught project-based collaborative robotics courses, developed an iOS app for people with vision impairment, and received a grant to install therapy lamps across campus. Her research focused on reliable robotic manipulation with the vision of enabling household helpers.

Cynthia Yeung

Robotics Executive & COO, Advisor, Speaker

Cynthia Yeung is the Chair of the Women in Robotics Mentoring Program SubCommittee, which will be piloting shortly. She is also a mentor and advisor to robotics companies, accelerators and venture capital firms, and speaks at leading technology conferences. Cynthia studied Entrepreneurship at Stanford, Systems Engineering at UPenn, and did a triple major at The Wharton School, UPenn, where she was a Benjamin Franklin Scholar and a Joseph Wharton Scholar. She has led Strategic or International Partnerships at organizations like Google and Capital One, led Product Partnerships at SoftBank Robotics and Checkmate.io, and was COO of CafeX. In her own words, “I practice radical candor. I build teams to make myself obsolete. I create value to better human society. I edit robotics research papers for love.”

Hallie Siegel

Associate Director, Strategy & Operations at University of Toronto

Hallie Siegel is the driving force behind the emerging robotics network in Canada, centered at the University of Toronto. She is a communications professional serving the technology, innovation and research sectors, specifically robotics, automation and AI. She has a Masters in Strategic Foresight and Innovation from OCADU, where she was Dean’s Scholar. Hallie was also the first Managing Editor at Robohub.org, the site for robotics news and views, after doing science communications for Raffaello D’Andrea’s lab at ETH Zurich. In her spare time, she is a multidisciplinary artist, and Chair of the Women in Robotics Vision Workshops.

Kerri Fetzer-Borelli

Head of Diversity, Equity, Inclusion & Community Engagement at Toyota Research Institute

Kerri Fetzer-Borelli is the CoChair for the Women in Robotics New Chapter Formation SubCommittee. They have worked as Scientific Data Collector for the military, as a Welder in nuclear power plants, and as the Manager of Autonomous Vehicle Testing, then Prototyping and Robotics Operations at Toyota Research Institute where they now lead DEI and Community Engagement. Kerri mobilizes cross functional teams to solve complex, abstract problems by distilling strategic, actionable items and workflows from big ideas.

Laura Stelzner

Robotics Software Engineer at RIOS

Laura Stelzner is the Chair of the Women in Robotics Community Management SubCommittee increasing activity and engagement in our online community. By day, she is in charge of software for emerging robotics startup RIOS which provides factory automation as a service, deploying AI powered and dexterous robots on factory assembly lines. Prior to RIOS, Laura worked at Toyota Research Institute, Space Systems Loral, Amazon Labs, Electric Movement and Raytheon. She has a BS in Computer Engineering from UC Santa Cruz and an MS in Computer Science from Stanford.

Laurie Linz, Women in Robotics Treasurer

Software Development Engineer in Test at Alteryx

Laurie Linz is the Women in Robotics Treasurer, as well as founder of the Boulder/Denver Colorado WiR Chapter. When not working as a software developer or QA tester, Laurie can be found with her hands on an Arduino, or a drone, or a camera. As she says, “I like to build things, break things and solve puzzles all day! Thankfully development and testing allows me to do that. Fred Brooks was right when he wrote that the programmer gains the ‘sheer joy of making things’ and he talks of ‘castles in the air, from air’ as we are only limited by the bounds of human imagination.”

Lisa Winter

Head of Hardware at Quartz

A roboticist since childhood, Lisa has over 20 years of experience designing and building robots. She has competed in Robot Wars and BattleBots competitions since 1996, and is a current judge on BattleBots. She currently holds the position of Head of Hardware at Quartz, an early stage startup working on the future of construction. Her rugged hardware can be seen attached to tower cranes all around California. In her free time she likes to volunteer her prototyping skills to the Marine Mammal Center to aid in the rehab of hundreds of animals each year. She is a Founding Board Member of Women in Robotics and Chair of the Artwork/Swag SubCommittee.

Sue Keay, Women in Robotics Secretary

CEO at Queensland AI Hub and Chair of the Board of Directors of Robotics Australia Group

Currently CEO of Queensland AI Hub, after leading cyber-physical systems research for CSIRO’s Data61. Previously Sue set up the world’s first robotic vision research centre. She led the development of Australia’s first robotics roadmap, the Robotics Australia Network and the Queensland Robotics Cluster. A Graduate of the Australian Institute of Company Directors, she founded and Chairs the Board of Robotics Australia Group. Sue also serves on the Boards of CRC ORE, Queensland AI Hub and represents Australia in the International Alliance of Robotics Associations.

With such a go-getting Board of Directors, you can be assured that Women in Robotics is preparing for an active 2021. As of 1/1/21, we had 1270 members in our online community, 900 additional newsletter subscribers, and six active chapters in the USA, Canada, UK and Australia. All Women in Robotics events abide by our Code of Conduct and we offer it for use at any robotics event or conference.

Our focus for 2021 is on:

- Project Inspire – our annual 30 women in robotics you need to know about list, plus regular updates, spotlights, and Wikipedia pages for women in robotics.

- Project Connect – forming new chapters, promoting our online community, and enjoying regular member led activities and events, under a Code of Conduct.

- Project Advance – piloting a mentoring program, providing educational resources for women in robotics, and improving accountability metrics in our workplaces.

We’d also like to thank our two Founding Board Members, Sabine Hauert, of the Hauert Lab at the University of Bristol, UK, and Founder of Robohub.org, and Sarah Osentoski, SVP of Engineering at Iron Ox, who are leaving the WiR Board but who will be leading our new Women in Robotics Advisory Board, another new initiative for 2021.

You can subscribe to our newsletter to keep updated on our activities, to sign up for our speaker database or volunteering opportunities, or to show your support as an ally. Please support our activities with a one-off or recurring donation (tax deductible in the USA).

Robotics trends at #CES2021

Even massive events like the 54th edition of the Consumer Electronics Show (CES) have gone virtual due to the current pandemic. Since 1967, the Consumer Technology Association (CTA), which is the North American trade association for the consumer technology industry, has been organising the fair, and this year was not going to be any different (well, except that they had to take the almost 300,000 m² from CES 2020 to the cloud). In this post, I mainly focus on current and future hardware/robotics trends presented at CES 2021 (because we all love to make predictions, even during uncertain times).

“Innovation accelerates and bunches up during economic downturns only to be unleashed as the economy begins to recover, ushering in powerful waves of technological change”, in the words of British economist Christopher Freeman. With this quote, I start the first session on ‘my show’ of CES 2021, ‘Tech trends to watch’ by CTA (see their slides here). There are not-that-surprising trends such as Artificial Intelligence/Machine Learning or services like cloud computing, video streaming or remote learning, but let’s kick off with hardware/robotics. Steve Koenig (Vice President of Research for CTA) highlighted a recent study from Gartner that predicts Robotic Process Automation will become a \$2 billion global industry in 2021, and will continue growing at double-digit rates through 2024.

Dual Arm Robot System (DARS) from ITRI

Lesley Rohrbaugh (Director of Research for CTA) also presented some other hardware/robotics trends for 2021, including digital health wearables going beyond your wrists (for example, the Oura ring can measure your body temperature or respiratory rate, and generate a health score based on the data collected during the day, making it a potential tool to detect early COVID-19 symptoms) or robot triage helpers to provide support during high influxes of patients at hospitals and to reduce the exposure rate of hospital workers. We all know this past year has been marked by COVID-19. In a more focused session on ‘robots to the rescue’ chaired by Eugene Demaitre (Senior Editor at the Robot Report), he stated that “this year, the definition of what a frontline worker is has changed”. In fact, the field of rescue robots has expanded with the current pandemic. Nowadays they are applied not only to assist in disaster situations, but also in areas such as autonomous delivery of goods, automatic cleaning for indoor sanitising (such as ADIBOT) or even cooking (such as the kitchen robot by Moley), as Lesley showed in her session.

“Delivery is actually the largest unautomated industry in the world”, Ahti Heinla (CEO of Starship Technologies) said. “From the consumers’ perspective, delivery today kind of works. You pay a couple bucks and you get what you want, or maybe you don’t pay these couple of bucks at all. It appears to be free. Well, guess what? It isn’t free, and while it might be working for the consumer, it isn’t always working for the company doing the delivery. They are looking for solutions.”

What’s more, there’s a shortage of drivers to cope with the exponential growth of deliveries, Kathy Winter (Vice President at the IoT group at Intel) mentioned. But automation comes with a price, and the management of autonomous delivery fleets isn’t straightforward. In relation to drone delivery, James Burgess (CEO of Wing) said that “data is one of the key elements here. There’s so much to keep track of, both individual robots or airplanes as you have, but also the environment, the weather, the traffic, the other systems that are moving through.” One of the biggest challenges is to actually develop the platform that manages the fleets. But also, “you need to build hardware, build software, think about regulations, think about safety, think about the consumer adoption and value proposition. You also need to build an app”, Ahti expressed. When it comes to regulations (whether traffic regulations for autonomous vehicles or standards for safety on sidewalks), the technology is far more advanced than the rules that govern it, Kathy said. In her opinion, we need a common standard that certifies safety of autonomous ground/aerial vehicles to avoid having different safety levels depending on the vehicle.

As in recent years at CES, vehicle tech takes a huge part of the whole event. With the rise of 5G connectivity (expected to really kick off during 2021), not only are self-driving cars in the trends conversation, but also connectivity via Cellular V2X (Vehicle to Everything communication). This is especially remarkable, as the area of smart cities is also a current trend in development under the umbrella of the Internet of Things.

Hans Vestberg’s keynote at CES 2021 (CEO of Verizon)

As shown above, research into consumer habits and future technology trends is a huge part of the work that CTA does. Some preexisting tech has skyrocketed as a result of the current health crisis, as CTA’s latest research reveals. Indeed, the tech industry grew by 5.5% to \$442 billion during 2020 in the United States.

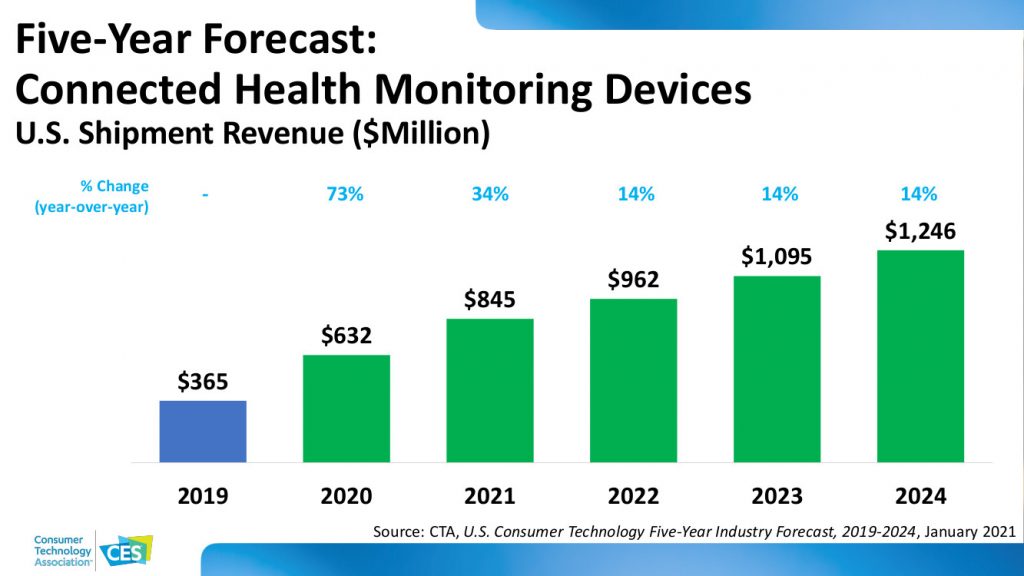

While hardware-related tech, which represents three quarters of the industry’s retail value, saw flat growth, services grew by a substantial 31%. It is not surprising that the overall five key trends including hardware, software and services that CTA found are: 1) remote learning (educational robots, AR/VR, STEM products), 2) digital entertainment (e.g. audio/video platforms or gaming), 3) smart homes (tech to improve energy efficiency, air/water quality, etc.), 4) online shopping (as exemplified above, autonomous grocery delivery is becoming a thing), and 5) personal vehicles & travel tech (did I mention autonomous vehicles already?). When it comes to their hardware forecast, their prediction for the short run points to smart homes technology (with home robots being a very popular choice) and digital health, an area worth \$845 million, with a great opportunity for health-monitoring devices (e.g. wearables).

Dallara IL-15 racecar, the autonomous car designed for the Indy Autonomous Challenge in October, 2021

There was also room at CES 2021 for diversity, equity and inclusion (DEI). In the session chaired by Tiffany Moore (Senior Vice President, Political and Industry Affairs at CTA), invited speakers Dawn Jones (Acting Chief Diversity and Inclusion Officer & Global Director of Social Impact communications, policy and strategy at Intel) and Monica Poindexter (Head of Employee Relations and Inclusion & Diversity at Lyft) commented on the findings from the reports on diversity, inclusion and racial equity launched recently by Intel and Lyft. As stressed by both Dawn and Monica, retention and progression of employees from underrepresented groups is key for successful DEI in the long run. In fact, it can take at least one or two years before the outcomes of DEI policies start to show up, Monica pointed out. Another crucial aspect both speakers shared is the support from the C-suite and middle managers. They all have to believe in the same DEI goals across the organisation. Active listening and the implementation of mechanisms for bottom-up feedback where employees can anonymously express their opinion and raise their concerns have also helped both companies improve their DEI. However, the two reports show there are still DEI barriers to break down (e.g. women held no more than 21.3% of positions at senior, director or executive levels at Intel in 2020, and there was an overall loss of 1.9% of the Black/African American employees at Lyft last year). That is why the work done by organisations such as Women in Robotics and Blacks in Robotics is vital to improve DEI inside companies. Still, there is a lot of work to be done.

Let’s hope #CES2022 returns to Las Vegas (because this will mean the pandemic is over). See you there!

An algorithm for optimizing the cost and efficiency of human-robot collaborative assembly lines

Cybathlon: A Success for the Maxon Teams

How to keep drones flying when a motor fails

Robotics researchers at the University of Zurich show how onboard cameras can be used to keep damaged quadcopters in the air and flying stably – even without GPS.

As anxious passengers are often reassured, commercial aircraft can easily continue to fly even if one of the engines stops working. But for drones with four propellers – also known as quadcopters – the failure of one motor is a bigger problem. With only three rotors working, the drone loses stability and inevitably crashes unless an emergency control strategy sets in.

Researchers at the University of Zurich and the Delft University of Technology have now found a solution to this problem: They show that information from onboard cameras can be used to stabilize the drone and keep it flying autonomously after one rotor suddenly gives out.

Spinning like a ballerina

“When one rotor fails, the drone begins to spin on itself like a ballerina,” explains Davide Scaramuzza, head of the Robotics and Perception Group at UZH and of the Rescue Robotics Grand Challenge at NCCR Robotics, which funded the research. “This high-speed rotational motion causes standard controllers to fail unless the drone has access to very accurate position measurements.” In other words, once it starts spinning, the drone is no longer able to estimate its position in space and eventually crashes.

One way to solve this problem is to provide the drone with a reference position through GPS. But there are many places where GPS signals are unavailable. In their study, the researchers solved this issue for the first time without relying on GPS, instead using visual information from different types of onboard cameras.

Event cameras work well in low light

The researchers equipped their quadcopters with two types of cameras: standard ones, which record images several times per second at a fixed rate, and event cameras, which are based on independent pixels that are only activated when they detect a change in the light that reaches them.
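The difference between the two camera types can be sketched in a few lines. The snippet below uses a common simplified model of an event camera, assumed here and not taken from the study: a pixel fires an event when its log-intensity changes by at least a fixed threshold, and the event carries the pixel position and the sign of the change. The function name, the threshold value and the tiny frames are hypothetical.

```python
import math


def events_between(prev_frame, next_frame, threshold=0.2):
    """Return (x, y, polarity) events for pixels whose brightness changed.

    Frames are lists of rows of strictly positive intensities.
    Polarity is +1 for brighter and -1 for darker.
    """
    events = []
    for y, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for x, (p, n) in enumerate(zip(prev_row, next_row)):
            change = math.log(n) - math.log(p)  # change in log-intensity
            if abs(change) >= threshold:
                events.append((x, y, 1 if change > 0 else -1))
    return events


previous = [[100, 100],
            [100, 100]]
current = [[100, 150],
           [60, 100]]

# Only the two pixels that changed produce output; static pixels stay silent.
print(events_between(previous, current))
```

A standard camera would report all four pixels in every frame, whereas in this model only the pixels that see a change generate data.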

The research team developed algorithms that combine information from the two sensors and use it to track the quadrotor’s position relative to its surroundings. This enables the onboard computer to control the drone as it flies – and spins – with only three rotors. The researchers found that both types of cameras perform well in normal light conditions. “When illumination decreases, however, standard cameras begin to experience motion blur that ultimately disorients the drone and crashes it, whereas event cameras also work well in very low light,” says first author Sihao Sun, a postdoc in Scaramuzza’s lab.

Increased safety to avoid accidents

The problem addressed by this study is a relevant one, because quadcopters are becoming widespread and rotor failure may cause accidents. The researchers believe that this work can improve quadrotor flight safety in all areas where GPS signal is weak or absent.