AI comes in many shapes and sizes. That applies to the use cases, the underlying technologies and the approaches to adopting AI in your organization. As more organizations look to adopt AI, leaders in all industries are increasingly asking for tangible frameworks for understanding the technology from a business perspective.

Some of the key questions leaders ask are simple. How much time and money does it take to adopt AI and solve business problems with it, and what returns do we get for those efforts? These are more than reasonable questions, but answering them has been difficult for two reasons. First, the answers have been a moving target: with the technology developing exponentially, the answers of yesterday seem antiquated today. Second, the intangible and exploratory nature of AI has made it hard to provide such answers at all.

But as AI has matured as a technology and been packaged into products and ready-to-use solutions, these questions are ready to be answered. The products and solutions come at different levels of abstraction, but they are nevertheless ready to be applied to business problems without much hassle.

The three main AI approaches

To make the efforts and outcomes of AI easier to understand, AI adoption can be divided into three core approaches: Off-the-shelf-AI, AutoAI and Custom AI. The idea is simple. AI has reached a point where some solutions are ready to use out of the box while others need a lot of work before they can be applied. Each approach comes with its own benefits and drawbacks, so the trick is to understand these properties and know when to apply which one. These core AI adoption strategies provide a more concrete foundation for predicting costs, risks and returns when applying AI.

Off-the-shelf-AI

Some AI solutions are ready to use out of the box and need little to no adjustment. Examples include Siri on your iPhone, invoice capture software and speech-to-text solutions. These solutions take minutes to get started with, and the business model is often AI-as-a-Service, which keeps the initial investment low. The services are often priced per use, which also keeps the risk low. The challenge, of course, is that you get what you get and you shouldn’t get upset. The options for adjusting the solution to fit the business problem are usually as low as the costs. More and more of these AI services are appearing in the AI ecosystem, with large cloud providers such as Google and Microsoft taking the lead.

AutoAI

Also known by the more technical name AutoML, this is the hybrid approach: it gives a certain freedom to shape the AI as one wishes without having to reinvent the wheel. With AutoAI a business can use its own data, such as documents, customer data or even pictures of products. This data is then used to train AI models in pre-made environments that are able to pick the right algorithms for the job and deploy the AI ready to use in the cloud. Because data can be costly to acquire, AutoAI does require some effort, but at least it rarely requires a small army of data scientists. The drawback is the inflexibility that is inherent in standardized tools. AutoAI will also be challenged when the aim is an AI with the highest possible accuracy.
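To make the idea of an environment that picks the right algorithm a little more concrete, here is a minimal Python sketch of the principle: train several candidate models on the same data, score each one on data it has not seen, and keep the best. It is an illustration only and not how any particular AutoML product works. The function names, the two toy models and the numbers are all made up for this example.

```python
from statistics import mean


def fit_mean(xs, ys):
    """Baseline model: always predict the average of the training targets."""
    avg = mean(ys)
    return lambda x: avg


def fit_line(xs, ys):
    """Least-squares straight line through the training points."""
    x_bar, y_bar = mean(xs), mean(ys)
    spread = sum((x - x_bar) ** 2 for x in xs)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / spread
    intercept = y_bar - slope * x_bar
    return lambda x: slope * x + intercept


def mean_abs_error(model, xs, ys):
    """Average size of the prediction error on the given data."""
    return mean(abs(model(x) - y) for x, y in zip(xs, ys))


def auto_select(candidates, train, holdout):
    """Train every candidate and keep the one with the lowest hold-out error."""
    scores = {}
    for name, fit in candidates.items():
        model = fit(*train)
        scores[name] = mean_abs_error(model, *holdout)
    best = min(scores, key=scores.get)
    return best, scores


# Hypothetical data: month number -> units sold (invented for illustration).
train = ([1, 2, 3, 4, 5, 6], [12, 19, 31, 42, 48, 61])
holdout = ([7, 8], [70, 79])

candidates = {"baseline_mean": fit_mean, "straight_line": fit_line}
best_name, scores = auto_select(candidates, train, holdout)
print(best_name, scores)
```

Real AutoAI tools search over far more algorithms and settings, but the trade-off is visible even here: the business supplies the data, and the tool decides which model to keep.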

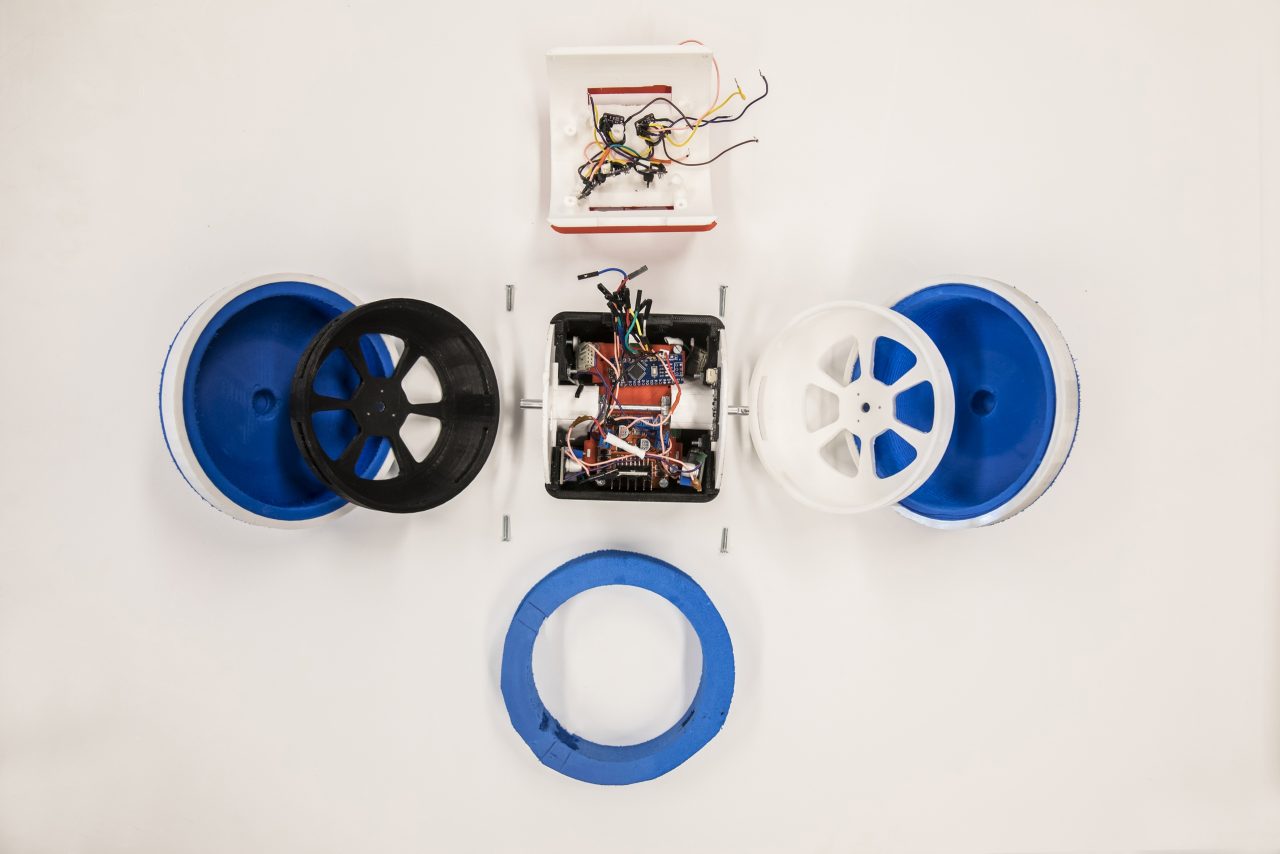

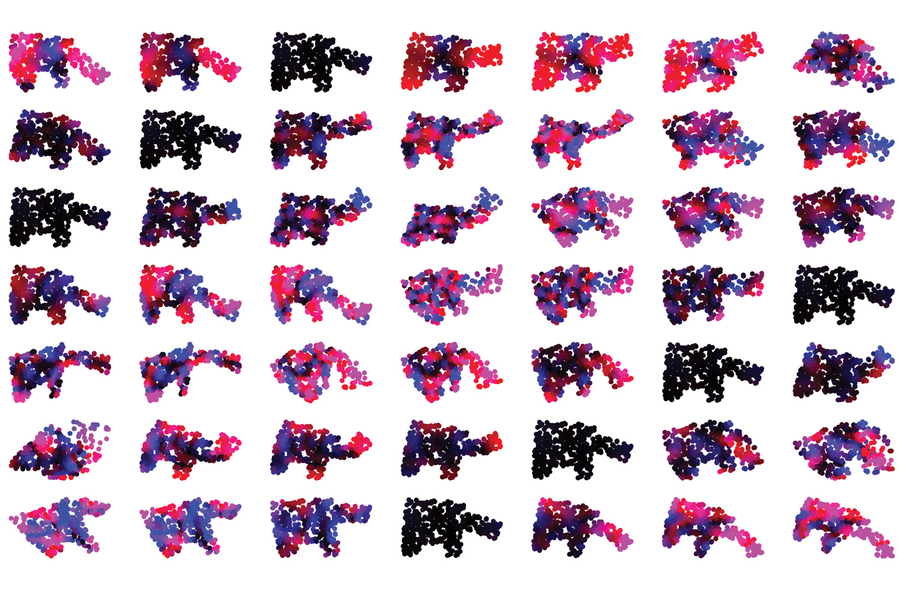

Custom AI

With Custom AI almost everything is built from scratch. It is a job for data scientists and machine learning engineers, and more of a task for R&D than for any other part of the organization. The Custom AI approach is usually the weapon of choice when extremely high accuracy is required. Everything can be built and the possibilities are endless. This also usually means months or even years of work and experiments, which makes it costly and time-consuming. As AutoAI and Off-the-shelf-AI become more available and more advanced, Custom AI is becoming most suitable for companies building AI solutions that compete with other AI solutions. With all these extra efforts you might get the small edge that will win you the market.

A final note

When it comes to solving problems with AI, the most common approach is shifting towards Off-the-shelf-AI and AutoAI. An even more likely future is that a combination of the two will be the favorite choice for many organizations adopting AI.

The concept of these approaches is not unique to AI. Almost all other technologies have been through the same natural progression, and now the time has come for AI. It is a sign of maturity, and a sign that AI has become public property and is no longer hidden behind the ivy-covered walls of the top universities.

Of course these approaches are not set in stone, and the boundaries between them are fluid and inconsistent. But bringing this framework into the conversation when adopting AI helps turn the almost magical aura of artificial intelligence into something closer to a tool in the business toolbox. And that is where the value starts mounting.