#IJCAI in tweets – tutorials and workshops day 2

Here’s our daily update in tweets, live from IJCAI (International Joint Conference on Artificial Intelligence) in Macau. Like yesterday, we’ll be covering tutorials and workshops.

Tutorials

Now attending the #tutorial "Argumentation and Machine Learning: When the Whole is Greater than the Sum of its Parts" by @CeruttiFederico, & learning about #ML mechanisms that create, annotate, analyze & evaluate arguments expressed in natural language. #AI #IJCAI2019 @IJCAIconf pic.twitter.com/XGW0msJ9xX

— Dagmar Monett (@dmonett) August 12, 2019

Getting dirty hands on the new @TensorFlow 2.0 in the Deep Learning Tutorial at #IJCAI2019 by @random_forests using Google Colab #deeplearning #machinelearning #tensorflow pic.twitter.com/dVeFiuz2PE

— Daniele Di Mitri (@dimstudi0) August 12, 2019

Workshops

Bridging2019

Now: "Dialogues with Socially Aware Robot Agents – Knowledge & Reasoning using Natural Language," an invited #IJCAI2019 talk by Prof. Kristiina Jokinen

Her start: "The quality of #intelligence possessed by humans and #AI is fundamentally different." #Bridging2019 @IJCAIconf pic.twitter.com/rBBxFpg9Tj

— Dagmar Monett (@dmonett) August 12, 2019

At the #Bridging2019 #IJCAI2019 workshop now.

The second talk by Rodolfo A. Fiorini: "New Approach to Computational Cognition and Predicative Competence."

On his second slide: #AGI "needs fresh methods with cognitive architectures and philosophy of mind." #AI @IJCAIconf pic.twitter.com/4sRnelDnSZ

— Dagmar Monett (@dmonett) August 12, 2019

HAI19

#HAI19 is now over! Amazing day with lots of great discussions, talks, poster sessions and so on!

Congratulations to Shane and Sharmila for winning the best paper and best lightning talk award!

Thank you everyone, and see you again next year! @IJCAIconf pic.twitter.com/f5tQHgseIV

— Puneet Agrawal (@puneet1622) August 12, 2019

#HAI19 is half done! Great keynotes from Rui Yan and @danmcduff – you guys gave nice insights! Very interesting Lightning Talks followed! After lunch, a poster session is lined up followed by more invited talks from @vdignum @rafal_rzepka and Pascale Fung. @IJCAIconf pic.twitter.com/XpDd5KHBQ8

— Puneet Agrawal (@puneet1622) August 12, 2019

SCAI Workshop

#SCAI_Workshop poster session is happening in the Hall A. Here is an approximate map on how to get there from the workshop room (2501): go behind the escalator and turn right. pic.twitter.com/mgCkUprIWp

— SCAI Workshop (@scai_workshop) August 12, 2019

Looking forward for an exciting program tomorrow at #SCAI_Workshop @IJCAIconf ! Great speakers from academia and industry! Full schedule is available at https://t.co/EyrDcGjqLN

See you all in the room 2501, right next to the escalators (detailed map available in tho Whova app).

— SCAI Workshop (@scai_workshop) August 11, 2019

Xiaoxue Zang from @GoogleAI presenting the work on multi-domain conversations #SCAI_Workshop @IJCAIconf #ijcai2019 pic.twitter.com/1Ys4Ew0fW0

— SCAI Workshop (@scai_workshop) August 12, 2019

Done with the presentation in SocialNLP 2019 @ IJCAI-2019, Macau, China. #SocialNLP @IJCAIconf #ijcai2019 @PurdueCS

Presentation Slide: https://t.co/HveK1IMgYQ

Paper link: https://t.co/43VGQllCdP pic.twitter.com/5qlHs9BaEw

— Tunazzina Islam (@Tunaz_Islam) August 12, 2019

Line: yet another messager most people in the West don't know about. 200M users and the right expertise to build a conversational system w/ Naver #SCAI_Workshop #ijcai2019 pic.twitter.com/JBMpc5tRws

— Aleksandr Chuklin (@varphi) August 12, 2019

AI4SocialGood

Tomorrow is our #IJCAI2019 workshop on #AIforSocialGood! Invited talks by @5harad, Lilian Tang, and @ermonste. https://t.co/5Vxedbh06x. Co-organized with @erichorvitz @RecklessCoding @ezinne_nwa @nhaghtal. @MilindTambe_AI @HCRCS

— Bryan Wilder (@brwilder) August 11, 2019

Thanks to @5harad a great invited talk on the difficulty of formalizing fairness! #AIforSocialGood at #IJCAI2019 pic.twitter.com/Vie7aEsk1p

— Bryan Wilder (@brwilder) August 12, 2019

#ijcai2019 #day3 workshop on ‘AI for social good’ Invited talk on ‘The Measure and Mismeasure of Fairness: A Critical Review of Fair Machine Learning’ by Sharad Goel(Stanford)

Are decisions made by algorithms fair? To ensure fairness, mainly … https://t.co/oL5Y5mYBB7

— Woontack Woo (우운택) (@wwoo_ct) August 12, 2019

Liz Bondi on game theory for conservation at the #IJCAI2019 workshop on #AIforSocialGood. @MilindTambe_AI @BDilkina @fangf07 pic.twitter.com/vZ2Q9dqVpE

— Bryan Wilder (@brwilder) August 12, 2019

Talk on fairness and efficiency in ridesharing by Xiaohui Bei at #IJCAI2019 workshop on #AIforSocialGood pic.twitter.com/xvlcAWKcYQ

— Bryan Wilder (@brwilder) August 12, 2019

. @frankdignum in the #AI4SocialGood WS #ijcai2019 talking about #AI in #healthcare. pic.twitter.com/hzcimS1Mug

— Andreas Theodorou #IJCAI2019 (@RecklessCoding) August 12, 2019

AI Safety

The Human side of AI safety: presentation by @raja_chatila at #AIsafety2019 #ijcai2019 @IJCAI_2019 pic.twitter.com/rJwq2l3tIN

— Virginia Dignum (@vdignum) August 12, 2019

DeLBP

Accepted paper 1. Implicitly Learning to Reason in First-Order Logic. Vaishak Belle and Brendan Juba #DeLBP #ijcai2019 pic.twitter.com/wNAfdCPzU7

— Guo, Quan (@guoquan_) August 12, 2019

Accepted paper 7. Query-driven PAC-learning for reasoning. Brendan Juba #DeLBP #ijcai2019 pic.twitter.com/t6uvCpcWUY

— Guo, Quan (@guoquan_) August 12, 2019

Semdeep5

The #semdeep5 workshop is happening right now at @IJCAIconf #ijcai2019! Proceedings are now online at the ACL Anthology website (@read_papers)! Regular papers + WiC submissions @CamachoCollados @tpilehvar @Dagmar_G @ThierryDeclerck https://t.co/vcXQVxa3vK

— Luis (@luisanke) August 12, 2019

Stay tuned as I’ll be covering the conference as an AIhub ambassador.

The future of rescue robotics

By Nicola Nosengo

Current research is aligned with the needs of rescue workers, but robustness and ease of use remain significant barriers to adoption, NCCR Robotics researchers find after reviewing the field and consulting with field operators.

Robots for search and rescue are developing at an impressive pace, but they must become more robust and easier to use in order to be widely adopted, and researchers in the field must devote more effort to these aspects in the future. This is one of the main findings by a group of NCCR Robotics researchers who focus on search-and-rescue applications. After reviewing the recent developments in technology and interviewing rescue workers, they have found that the work by the robotics research community is well aligned with the needs of those who work in the field. Consequently, although current adoption of state-of-the-art robotics in disaster response is still limited, it is expected to grow quickly in the future. However, more work is needed from the research community to overcome some key barriers to adoption.

The analysis is the result of a group effort from researchers who participate in the Rescue Robotics Grand Challenge, one of the main research units of NCCR Robotics, and has been published in the Journal of Field Robotics.

With this paper, the researchers wanted to take stock of the current state-of-the-art of research on rescue robotics, and in particular of the advancements published between 2014 and 2018, a period that had not yet been covered by previous scientific reviews.

“Although previous surveys were only a few years old, the rapid pace of development in robotics research and robotic deployment in search and rescue means that the state-of-the-art is already very different than these earlier assessments,” says Jeff Delmerico, first author of the paper and formerly an NCCR Robotics member in Davide Scaramuzza’s Robotics and Perception Group at the University of Zurich. “More importantly, rather than just documenting the current state of the research, or the history of robot deployments after real-world disasters, we were trying to analyze what is missing from the output of the research community in order to target real-world needs and provide the maximum benefit to actual rescuers.”

The paper offers a comprehensive review of research on legged, wheeled, flying and amphibious robots, as well as of perception, control systems and human-robot interfaces. Among many recent advancements, it highlights how learning algorithms and modular designs have been applied to legged robots to make them capable of adapting to different missions and of being resilient to damage; how wheeled and tracked robots are being tested in new applications such as telepresence for interacting with victims or for remote firefighting; and how a number of strategies are being investigated for making drones more easily transportable and able to change locomotion mode when necessary. It details advancements in using cameras for localization and mapping in areas not covered by GPS signals, and new strategies for human-robot interaction that allow users to pilot a drone by pointing a finger or with the movements of the torso.

To confirm whether these research directions are aligned with the demands coming from the field, the study authors interviewed seven rescue experts from key agencies in the USA, Italy, Switzerland, Japan and the Netherlands. The interviews revealed that the key factors guiding adoption decisions are robustness and ease of use.

“Disaster response workers are reluctant to adopt new technologies unless they can really depend on them in critical situations, and even then, these tools need to add new capabilities or outperform humans at the same task,” Delmerico explains. “So the bar is very high for technology before it will be deployed in a real disaster. Our goal with this article was to understand the gap between where our research is now and where it needs to be to reach that bar. This is critical to understand in order to work towards the technologies that rescue workers actually need, and to ensure that new developments from our NCCR labs, and the robotics research community in general, can move quickly out of the lab and into the hands of rescue professionals.”

Robotic research platforms have features that are absent from commercial platforms and that are highly appreciated by rescue workers, such as the possibility to generate 3D maps of a disaster scene. Academic efforts to develop new human-robot interfaces that reduce the operator’s attention load are also consistent with the needs of stakeholders. Another key finding is that field operators see robotic systems as tools to support and enhance their performance rather than as autonomous systems to replace them. Finally, an important aspect of existing research work is the emphasis on human-robot teams, which meets the desire of stakeholders to maintain a human in the loop during deployments in situations where priorities may change quickly.

On the critical side, though, robustness keeps rescuers from adopting technologies that are “hot” for researchers but are not yet considered reliable enough, such as Artificial Intelligence. Similarly, the development of integrated, centrally organized robot teams is interesting for researchers, but not so much for SAR personnel, who prefer individual systems that can more easily be deployed independently of each other.

NCCR Robotics researchers note that efforts to develop systems that are robust and capable enough for real-world rescue scenarios have so far been insufficient. “While it is unrealistic to expect robotic systems with a high technology readiness level to come directly from the academic domain without involvement from other organizations,” they write, “more emphasis on robustness during the research phase may accelerate the process of reaching a high level for use in deployment.” Ease of use, endurance, and the capability to collect and quickly transmit data to rescuers are also important barriers to adoption that the research community must focus on in the future.

Literature

J. Delmerico, S. Mintchev, A. Giusti, B. Gromov, K. Melo, T. Horvat, C. Cadena, M. Hutter, A. Ijspeert, D. Floreano, L. M. Gambardella, R. Siegwart, D. Scaramuzza, “The current state and future outlook of rescue robotics”, Journal of Field Robotics, DOI: 10.1002/rob.21887

#IJCAI2019 in tweets – tutorials and workshops

The first two days at IJCAI (International Joint Conference on Artificial Intelligence) in Macau were focussed on workshops and tutorials. Here’s an overview in tweets.

Welcome

First demo at #ijcai2019: grad students are still superior to robots at reception desks pic.twitter.com/49beH9qBcl

— Emmanuel Hebrard (@EmmanuelHebrard) August 10, 2019

Papers

#ijcai2019 proceedings are now indexed by #dblp – https://t.co/wpB1UDDdJn

— dblp (@dblp_org) August 8, 2019

Workshops

AI Multimodal Analytics for Education

Starting off the workshop AI Multimodal Analytics 4 Education #AIMA4Edu @ #IJCAI2019 pic.twitter.com/OpxznIq6Jh

— Daniele Di Mitri (@dimstudi0) August 11, 2019

SDGs&AI

First talk of SDGs&AI workshop at #IJCAI2019 Takayoki Ito pic.twitter.com/DjfACOoayY

— Serge Stinckwich (@SergeStinckwich) August 11, 2019

AI Safety

Yang Liu "Last year Europe implementated GDPR. And we want to follow it. China and America implementated stricter privacy rules due to it." @AISafetyWS #IJCAI2019 pic.twitter.com/lh0sqJdIoH

— Andreas Theodorou #IJCAI2019 (@RecklessCoding) August 11, 2019

EduAI

@cynthiabreazeal speaks about ai education projects in MIT – I known a little belgian researcher Who would love to meet all the concerned research teams

#eduai2019 #ijcai2019 pic.twitter.com/4PwMXIJOZ3

— Julie Henry (@HenryJulie) August 11, 2019

Tutorials

The AI Universe of “Actions”: Agency, Causality, Commonsense and Deception

#ijcai2019 #day2 Tutorial on ‘The AI Universe of “Actions”: Agency, Causality, Commonsense and Deception’ by Chitta Baral (ASU) and Tran Cao Son (NMSU)

Understanding constantly changing actions is central across all areas of AI, including situation awareness, planning, commonsense and causal reasoning … https://t.co/qQIzqLaLkW

— Woontack Woo (우운택) (@wwoo_ct) August 11, 2019

An Introduction to Formal Argumentation

Martin Caminada explaining the "preferred discussion game" at @IJCAIconf tutorial "an introduction to formal argumentation" #ijcai2019 #argumentation pic.twitter.com/2eSeKoZdbQ

— Shohreh (@ShohrehHd) August 11, 2019

AI Ethics

Lots of applications rise #ethical concerns, @razzrboy show and comment some of them. #AI #AIEthics #IJCAI_2019 @IJCAIconf pic.twitter.com/OOr2EUUsBE

— Dagmar Monett (@dmonett) August 11, 2019

Interesting tutorial about #AIEthics at #IJCAI2019 pic.twitter.com/GnjDuj6OFN

— Nedjma Ousidhoum (@nedjmaou) August 11, 2019

Last Q&A part running now.

"As #AI has a growing impact on society, important #ethical issues arise, [but they] are tricky, lack of right methodological tools."

"Speculation about the future of #AI is fun, but not very productive." @razzrboy #AIEthics @IJCAIconf #IJCAI2019 pic.twitter.com/IYAImvWFG6

— Dagmar Monett (@dmonett) August 11, 2019

Congratulations to the authors of the announced best workshop papers!

Surprised and happy to receive the *best paper award* at the AIMA4EDU workshop at #IJCAI2019 today in Macao. Thanks to @zpardos and @kpthaiphd for hosting and to @SuperHeroBooth @marcuspecht and @HDrachsler for the support! #Multimodal #Pipeline wins again :-) pic.twitter.com/KahgrDsLZ0

— Daniele Di Mitri (@dimstudi0) August 11, 2019

Congratulations @aloreggia #NickMattei @frossi_t #KBrentVenable! Well-deserved! @CSERCambridge @AAIP_York @IJCAIconf #ijcai2019 #aiethics #AIFATES #aies #AIandSociety https://t.co/v0WU9tP2lp

— William H. Hsu (@banazir) August 11, 2019

Stay tuned as I’ll be covering the conference as an AIhub ambassador.

Live coverage of #IJCAI2019

IJCAI, the 28th International Joint Conference on Artificial Intelligence, is happening from the 10th to 16th August in Macao, China. We’ll be posting updates throughout the week thanks to the AIhub Ambassadors on the ground. Stay tuned.

You can follow the tweets at #IJCAI2019 or below.

Guided by AI, robotic platform automates molecule manufacture

By Becky Ham

Guided by artificial intelligence and powered by a robotic platform, a system developed by MIT researchers moves a step closer to automating the production of small molecules that could be used in medicine, solar energy, and polymer chemistry.

The system, described in the August 8 issue of Science, could free up bench chemists from a variety of routine and time-consuming tasks, and may suggest possibilities for how to make new molecular compounds, according to the study co-leaders Klavs F. Jensen, the Warren K. Lewis Professor of Chemical Engineering, and Timothy F. Jamison, the Robert R. Taylor Professor of Chemistry and associate provost at MIT.

The technology “has the promise to help people cut out all the tedious parts of molecule building,” including looking up potential reaction pathways and building the components of a molecular assembly line each time a new molecule is produced, says Jensen.

“And as a chemist, it may give you inspirations for new reactions that you hadn’t thought about before,” he adds.

Other MIT authors on the Science paper include Connor W. Coley, Dale A. Thomas III, Justin A. M. Lummiss, Jonathan N. Jaworski, Christopher P. Breen, Victor Schultz, Travis Hart, Joshua S. Fishman, Luke Rogers, Hanyu Gao, Robert W. Hicklin, Pieter P. Plehiers, Joshua Byington, John S. Piotti, William H. Green, and A. John Hart.

From inspiration to recipe to finished product

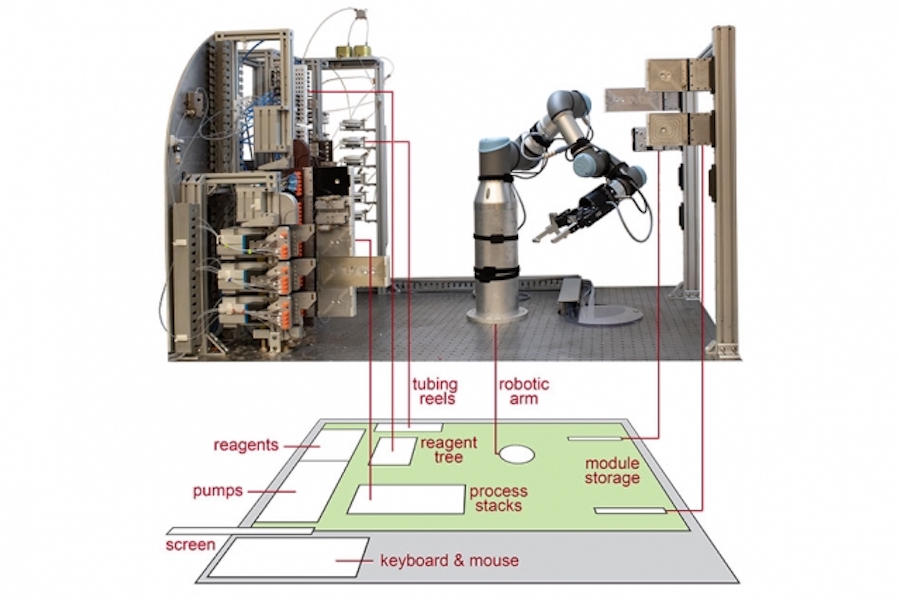

The new system combines three main steps. First, software guided by artificial intelligence suggests a route for synthesizing a molecule, then expert chemists review this route and refine it into a chemical “recipe,” and finally the recipe is sent to a robotic platform that automatically assembles the hardware and performs the reactions that build the molecule.

Coley and his colleagues have been working for more than three years to develop the open-source software suite that suggests and prioritizes possible synthesis routes. At the heart of the software are several neural network models, which the researchers trained on millions of previously published chemical reactions drawn from the Reaxys and U.S. Patent and Trademark Office databases. The software uses these data to identify the reaction transformations and conditions that it believes will be suitable for building a new compound.

“It helps make high-level decisions about what kinds of intermediates and starting materials to use, and then slightly more detailed analyses about what conditions you might want to use and if those reactions are likely to be successful,” says Coley.

“One of the primary motivations behind the design of the software is that it doesn’t just give you suggestions for molecules we know about or reactions we know about,” he notes. “It can generalize to new molecules that have never been made.”

Chemists then review the suggested synthesis routes produced by the software to build a more complete recipe for the target molecule. The chemists sometimes need to perform lab experiments or tinker with reagent concentrations and reaction temperatures, among other changes.

“They take some of the inspiration from the AI and convert that into an executable recipe file, largely because the chemical literature at present does not have enough information to move directly from inspiration to execution on an automated system,” Jamison says.

The final recipe is then loaded on to a platform where a robotic arm assembles modular reactors, separators, and other processing units into a continuous flow path, connecting pumps and lines that bring in the molecular ingredients.

“You load the recipe — that’s what controls the robotic platform — you load the reagents on, and press go, and that allows you to generate the molecule of interest,” says Thomas. “And then when it’s completed, it flushes the system and you can load the next set of reagents and recipe, and allow it to run.”

Unlike the continuous flow system the researchers presented last year, which had to be manually configured after each synthesis, the new system is entirely configured by the robotic platform.

“This gives us the ability to sequence one molecule after another, as well as generate a library of molecules on the system, autonomously,” says Jensen.

The design for the platform, which is about two cubic meters in size — slightly smaller than a standard chemical fume hood — resembles a telephone switchboard and operator system that moves connections between the modules on the platform.

“The robotic arm is what allowed us to manipulate the fluidic paths, which reduced the number of process modules and fluidic complexity of the system, and by reducing the fluidic complexity we can increase the molecular complexity,” says Thomas. “That allowed us to add additional reaction steps and expand the set of reactions that could be completed on the system within a relatively small footprint.”

Toward full automation

The researchers tested the full system by creating 15 different medicinal small molecules of different synthesis complexity, with processes taking anywhere between two hours for the simplest creations to about 68 hours for manufacturing multiple compounds.

The team synthesized a variety of compounds: aspirin and the antibiotic secnidazole in back-to-back processes; the painkiller lidocaine and the antianxiety drug diazepam in back-to-back processes using a common feedstock of reagents; the blood thinner warfarin and the Parkinson’s disease drug safinamide, to show how the software could design compounds with similar molecular components but differing 3-D structures; and a family of five ACE inhibitor drugs and a family of four nonsteroidal anti-inflammatory drugs.

“I’m particularly proud of the diversity of the chemistry and the kinds of different chemical reactions,” says Jamison, who said the system handled about 30 different reactions compared to about 12 different reactions in the previous continuous flow system.

“We are really trying to close the gap between idea generation from these programs and what it takes to actually run a synthesis,” says Coley. “We hope that next-generation systems will increase further the fraction of time and effort that scientists can focus their efforts on creativity and design.”

The research was supported, in part, by the U.S. Defense Advanced Research Projects Agency (DARPA) Make-It program.

Carnegie Mellon Robot, Art Project To Land on Moon in 2021

June 6, 2019

CMU Becomes Space-Faring University With Payloads Aboard Astrobotic Lander

PITTSBURGH—Carnegie Mellon University is going to the moon, sending a robotic rover and an intricately designed arts package that will land in July 2021.

The four-wheeled robot is being developed by a CMU team led by William “Red” Whittaker, professor in the Robotics Institute. Equipped with video cameras, it will be one of the first American rovers to explore the moon’s surface. Although NASA landed the first humans on the moon almost 50 years ago, the U.S. space agency has never launched a robotic lunar rover.

The arts package, called MoonArk, is the creation of Lowry Burgess, space artist and professor emeritus in the CMU School of Art. The eight-ounce MoonArk has four elaborate chambers that contain hundreds of images, poems, music, nano-objects, mechanisms and earthly samples intertwined through complex narratives that blur the boundaries between worlds seen and unseen.

“Carnegie Mellon is one of the world’s leaders in robotics. It’s natural that our university would expand its technological footprint to another world,” said J. Michael McQuade, CMU’s vice president of research. “We are excited to expand our knowledge of the moon and develop lunar technology that will assist NASA in its goal of landing astronauts on the lunar surface by 2024.”

Both payloads will be delivered to the moon by a Peregrine lander, built and operated by Astrobotic Inc., a CMU spinoff company in Pittsburgh. NASA last week awarded a $79.5 million contract to Astrobotic to deliver 14 scientific payloads to the lunar surface, making the July 2021 mission possible. CMU independently negotiated with Astrobotic to hitch a ride on the lander’s first mission.

“CMU robots have been on land, on the sea, in the air, underwater and underground,” said Whittaker, Fredkin University Research Professor and director of the Field Robotics Center. “The next frontier is the high frontier.”

For more than 30 years at the Robotics Institute, Whittaker has led the creation of a series of robots that developed technologies intended for planetary rovers — robots with names such as Ambler, Nomad, Scarab and Andy. And CMU software has helped NASA’s Mars rovers navigate on their own.

“We’re more than techies — we’re scholars of the moon,” Whittaker said.

The CMU robot headed to the moon is modest in size and form; Whittaker calls it “a shoebox with wheels.” It weighs only a little more than four pounds, but it carries large ambitions. Whittaker sees it as the first of a new family of robots that will make planetary robotics affordable for universities and other private entities.

The Soviet Union put large rovers on the moon fifty years ago, and China has a robot on the far side of the moon now, but these were massive programs affordable only by huge nations. The concept of CMU’s rover is similar to that of CubeSats. These small, inexpensive satellites revolutionized missions to Earth’s orbit two decades ago, enabling even small research groups to launch experiments.

Miniaturization is a big factor in affordability, Whittaker said. Whereas the Soviet robots each weighed as much as a buffalo and China’s rover is the weight of a panda bear, CMU’s rover weighs half as much as a house cat.

The Astrobotic landing will be on the near side of the moon in the vicinity of Lacus Mortis, or Lake of Death, which features a large pit the size of Pittsburgh’s Heinz Field that is of considerable scientific interest. The rover will serve largely as a mobile video platform, providing the first ground-level imagery of the site.

The MoonArk has been assembled by an international team of professionals within the arts, humanities, science and technology communities. Mark Baskinger, associate professor in the CMU School of Design, is co-leading the initiative with Burgess.

The MoonArk team includes CMU students, faculty and alumni who worked with external artists and professionals involved with emerging media, new and ancient technologies, and hybrid processes. The team members hold degrees and faculty appointments in design, engineering, architecture, chemistry, poetry, music composition and visual art, among others. Their efforts have been coordinated by the Frank-Ratchye STUDIO for Creative Inquiry in CMU’s College of Fine Arts.

Baskinger calls the ark and its contents a capsule of life on earth, meant to help illustrate a vital part of the human existence: the arts.

“If this is the next step in space exploration, let’s put that exploration into the public consciousness,” he said. “Why not get people to look up and think about our spot in the universe, and think about where we are in the greater scheme of things?”

Carnegie Mellon University

5000 Forbes Ave.

Pittsburgh, PA 15213

412-268-2900

Fax: 412-268-6929

Contact: Byron Spice

412-268-9068

Pam Wigley

412-268-1047

————————————————————

Press release above was provided to us by Carnegie Mellon University

The post Carnegie Mellon Robot, Art Project To Land on Moon in 2021 appeared first on Roboticmagazine.

Intelligent Robot Vision Solutions for Your Industry 4.0 Processes

Guiding the Future of Autonomous Commercial Cleaning

Agile Automation for Small-Batch Manufacturing

2019 Robot Launch startup competition is open!

It’s time for Robot Launch 2019 Global Startup Competition! Applications are now open until September 2nd 6pm PDT. Finalists may receive up to $500k in investment offers, plus space at top accelerators and mentorship at Silicon Valley Robotics co-work space.

Winners in previous years include high-profile robotics startups and acquisitions:

2018: Anybotics from ETH Zurich, with Sevensense and Hebi Robotics as runners-up.

2017: Semio from LA, with Appellix, Fotokite, Kinema Systems, BotsAndUs and Mothership Aeronautics as runners-up in Seed and Series A categories.

Flexibility of Mobile Robots Supports Lean Manufacturing Initiatives and Continuous Optimizations of Internal Logistics at Honeywell

How Drones Are Disrupting The Insurance Industry

#292: Robot Operating System (ROS) & Gazebo, with Brian Gerkey

In this episode, Audrow Nash interviews Brian Gerkey, CEO of Open Robotics, about the Robot Operating System (ROS) and Gazebo. Both ROS and Gazebo are open source and are widely used in the robotics community. ROS is a set of software libraries and tools, and Gazebo is a 3D robotics simulator. Gerkey explains ROS and Gazebo and talks about how they are used in robotics, as well as some of the design decisions of the second version of ROS, ROS2.

Brian Gerkey

Brian Gerkey is the CEO of Open Robotics, which seeks to develop and drive the adoption of open source software in robotics. Before Open Robotics, Brian was the Director of Open Source Development at Willow Garage, a computer scientist in the SRI Artificial Intelligence Center, and a post-doctoral scholar in Sebastian Thrun’s group in the Stanford Artificial Intelligence Lab. Brian did his PhD with Maja Matarić in the USC Interaction Lab.

Links