| Paper ID |

Title |

Authors |

Virtual Session Link |

| 1 |

Planning and Execution using Inaccurate Models with Provable Guarantees |

Anirudh Vemula (Carnegie Mellon University)*; Yash Oza (CMU); J. Bagnell (Aurora Innovation); Maxim Likhachev (CMU) |

Virtual Session #1 |

| 2 |

Swoosh! Rattle! Thump! – Actions that Sound |

Dhiraj Gandhi (Carnegie Mellon University)*; Abhinav Gupta (Carnegie Mellon University); Lerrel Pinto (NYU/Berkeley) |

Virtual Session #1 |

| 3 |

Deep Visual Reasoning: Learning to Predict Action Sequences for Task and Motion Planning from an Initial Scene Image |

Danny Driess (Machine Learning and Robotics Lab, University of Stuttgart)*; Jung-Su Ha (); Marc Toussaint () |

Virtual Session #1 |

| 4 |

Elaborating on Learned Demonstrations with Temporal Logic Specifications |

Craig Innes (University of Edinburgh)*; Subramanian Ramamoorthy (University of Edinburgh) |

Virtual Session #1 |

| 5 |

Non-revisiting Coverage Task with Minimal Discontinuities for Non-redundant Manipulators |

Tong Yang (Zhejiang University)*; Jaime Valls Miro (University of Technology Sydney); Yue Wang (Zhejiang University); Rong Xiong (Zhejiang University) |

Virtual Session #1 |

| 6 |

LatticeNet: Fast Point Cloud Segmentation Using Permutohedral Lattices |

Radu Alexandru Rosu (University of Bonn)*; Peer Schütt (University of Bonn); Jan Quenzel (University of Bonn); Sven Behnke (University of Bonn) |

Virtual Session #1 |

| 7 |

A Smooth Representation of Belief over SO(3) for Deep Rotation Learning with Uncertainty |

Valentin Peretroukhin (University of Toronto)*; Matthew Giamou (University of Toronto); W. Nicholas Greene (MIT); David Rosen (MIT Laboratory for Information and Decision Systems); Jonathan Kelly (University of Toronto); Nicholas Roy (MIT) |

Virtual Session #1 |

| 8 |

Leading Multi-Agent Teams to Multiple Goals While Maintaining Communication |

Brian Reily (Colorado School of Mines)*; Christopher Reardon (ARL); Hao Zhang (Colorado School of Mines) |

Virtual Session #1 |

| 9 |

OverlapNet: Loop Closing for LiDAR-based SLAM |

Xieyuanli Chen (Photogrammetry & Robotics Lab, University of Bonn)*; Thomas Läbe (Institute for Geodesy and Geoinformation, University of Bonn); Andres Milioto (University of Bonn); Timo Röhling (Fraunhofer FKIE); Olga Vysotska (Autonomous Intelligent Driving GmbH); Alexandre Haag (AID); Jens Behley (University of Bonn); Cyrill Stachniss (University of Bonn) |

Virtual Session #1 |

| 10 |

The Dark Side of Embodiment – Teaming Up With Robots VS Disembodied Agents |

Filipa Correia (INESC-ID & University of Lisbon)*; Samuel Gomes (IST/INESC-ID); Samuel Mascarenhas (INESC-ID); Francisco S. Melo (IST/INESC-ID); Ana Paiva (INESC-ID U of Lisbon) |

Virtual Session #1 |

| 11 |

Shared Autonomy with Learned Latent Actions |

Hong Jun Jeon (Stanford University)*; Dylan Losey (Stanford University); Dorsa Sadigh (Stanford) |

Virtual Session #1 |

| 12 |

Regularized Graph Matching for Correspondence Identification under Uncertainty in Collaborative Perception |

Peng Gao (Colorado School of Mines)*; Rui Guo (Toyota Motor North America); Hongsheng Lu (Toyota Motor North America); Hao Zhang (Colorado School of Mines) |

Virtual Session #1 |

| 13 |

Frequency Modulation of Body Waves to Improve Performance of Limbless Robots |

Baxi Zhong (Georgia Tech)*; Tianyu Wang (Carnegie Mellon University); Jennifer Rieser (Georgia Institute of Technology); Abdul Kaba (Morehouse College); Howie Choset (Carnegie Mellon University); Daniel Goldman (Georgia Institute of Technology) |

Virtual Session #1 |

| 14 |

Self-Reconfiguration in Two-Dimensions via Active Subtraction with Modular Robots |

Matthew Hall (The University of Sheffield)*; Anil Ozdemir (The University of Sheffield); Roderich Gross (The University of Sheffield) |

Virtual Session #1 |

| 15 |

Singularity Maps of Space Robots and their Application to Gradient-based Trajectory Planning |

Davide Calzolari (Technical University of Munich (TUM), German Aerospace Center (DLR))*; Roberto Lampariello (German Aerospace Center); Alessandro Massimo Giordano (Deutsches Zentrum für Luft- und Raumfahrt) |

Virtual Session #1 |

| 16 |

Grounding Language to Non-Markovian Tasks with No Supervision of Task Specifications |

Roma Patel (Brown University)*; Ellie Pavlick (Brown University); Stefanie Tellex (Brown University) |

Virtual Session #1 |

| 17 |

Fast Uniform Dispersion of a Crash-prone Swarm |

Michael Amir (Technion – Israel Institute of Technology)*; Freddy Bruckstein (Technion) |

Virtual Session #1 |

| 18 |

Simultaneous Enhancement and Super-Resolution of Underwater Imagery for Improved Visual Perception |

Md Jahidul Islam (University of Minnesota Twin Cities)*; Peigen Luo (University of Minnesota-Twin Cities); Junaed Sattar (University of Minnesota) |

Virtual Session #1 |

| 19 |

Collision Probabilities for Continuous-Time Systems Without Sampling |

Kristoffer Frey (MIT)*; Ted Steiner (Charles Stark Draper Laboratory, Inc.); Jonathan How (MIT) |

Virtual Session #1 |

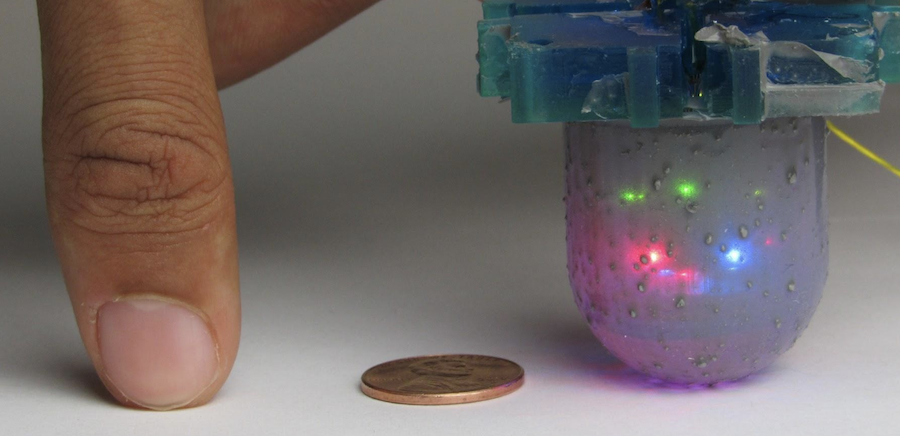

| 20 |

Event-Driven Visual-Tactile Sensing and Learning for Robots |

Tasbolat Taunyazov (National University of Singapore); Weicong Sng (National University of Singapore); Brian Lim (National University of Singapore); Hian Hian See (National University of Singapore); Jethro Kuan (National University of Singapore); Abdul Fatir Ansari (National University of Singapore); Benjamin Tee (National University of Singapore); Harold Soh (National University of Singapore)* |

Virtual Session #1 |

| 21 |

Resilient Distributed Diffusion for Multi-Robot Systems Using Centerpoint |

Jiani Li (Vanderbilt University)*; Waseem Abbas (Vanderbilt University); Mudassir Shabbir (Information Technology University); Xenofon Koutsoukos (Vanderbilt University) |

Virtual Session #1 |

| 22 |

Pixel-Wise Motion Deblurring of Thermal Videos |

Manikandasriram Srinivasan Ramanagopal (University of Michigan)*; Zixu Zhang (University of Michigan); Ram Vasudevan (University of Michigan); Matthew Johnson Roberson (University of Michigan) |

Virtual Session #1 |

| 23 |

Controlling Contact-Rich Manipulation Under Partial Observability |

Florian Wirnshofer (Siemens AG)*; Philipp Sebastian Schmitt (Siemens AG); Georg von Wichert (Siemens AG); Wolfram Burgard (University of Freiburg) |

Virtual Session #1 |

| 24 |

AVID: Learning Multi-Stage Tasks via Pixel-Level Translation of Human Videos |

Laura Smith (UC Berkeley)*; Nikita Dhawan (UC Berkeley); Marvin Zhang (UC Berkeley); Pieter Abbeel (UC Berkeley); Sergey Levine (UC Berkeley) |

Virtual Session #1 |

| 25 |

Provably Constant-time Planning and Re-planning for Real-time Grasping Objects off a Conveyor Belt |

Fahad Islam (Carnegie Mellon University)*; Oren Salzman (Technion); Aditya Agarwal (CMU); Maxim Likhachev (Carnegie Mellon University) |

Virtual Session #1 |

| 26 |

Online IMU Intrinsic Calibration: Is It Necessary? |

Yulin Yang (University of Delaware)*; Patrick Geneva (University of Delaware); Xingxing Zuo (Zhejiang University); Guoquan Huang (University of Delaware) |

Virtual Session #1 |

| 27 |

A Berry Picking Robot With A Hybrid Soft-Rigid Arm: Design and Task Space Control |

Naveen Kumar Uppalapati (University of Illinois at Urbana Champaign)*; Benjamin Walt (University of Illinois at Urbana Champaign); Aaron Havens (University of Illinois Urbana Champaign); Armeen Mahdian (University of Illinois at Urbana Champaign); Girish Chowdhary (University of Illinois at Urbana Champaign); Girish Krishnan (University of Illinois at Urbana Champaign) |

Virtual Session #1 |

| 28 |

Iterative Repair of Social Robot Programs from Implicit User Feedback via Bayesian Inference |

Michael Jae-Yoon Chung (University of Washington)*; Maya Cakmak (University of Washington) |

Virtual Session #1 |

| 29 |

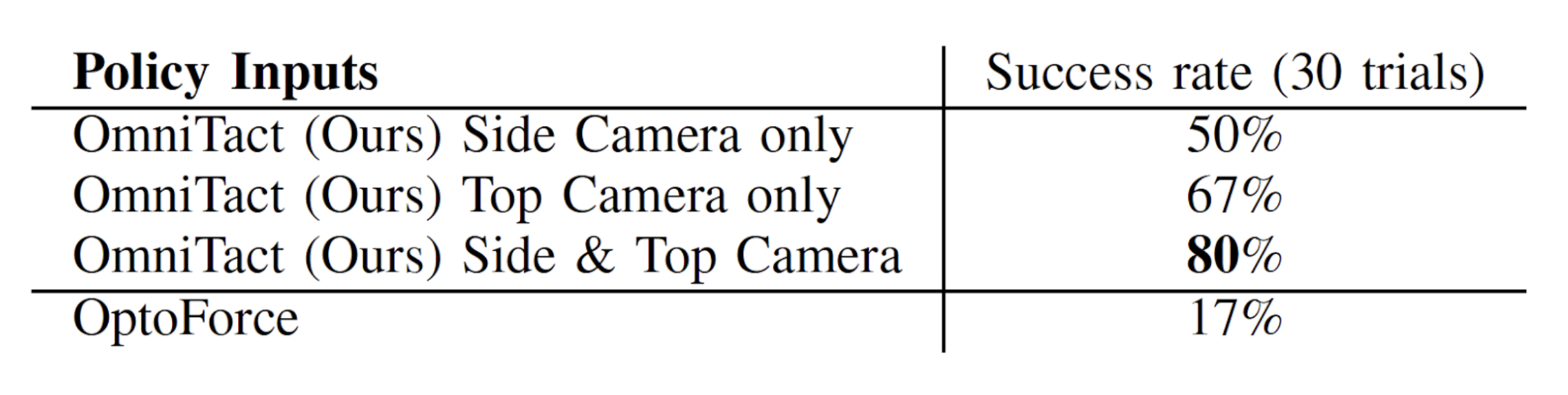

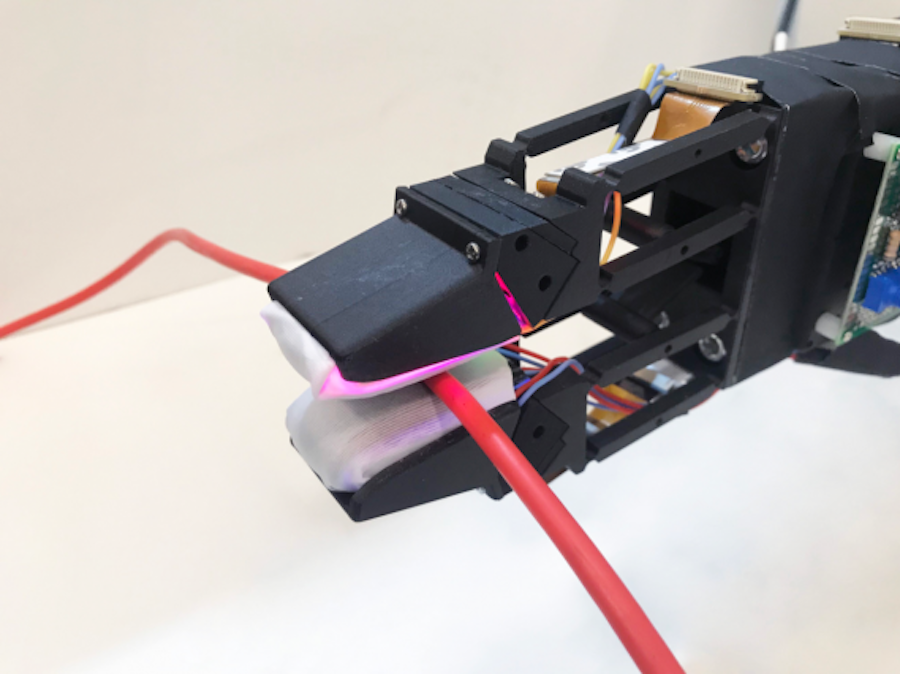

Cable Manipulation with a Tactile-Reactive Gripper |

Siyuan Dong (MIT); Shaoxiong Wang (MIT); Yu She (MIT)*; Neha Sunil (Massachusetts Institute of Technology); Alberto Rodriguez (MIT); Edward Adelson (MIT, USA) |

Virtual Session #1 |

| 30 |

Automated Synthesis of Modular Manipulators’ Structure and Control for Continuous Tasks around Obstacles |

Thais Campos de Almeida (Cornell University)*; Samhita Marri (Cornell University); Hadas Kress-Gazit (Cornell) |

Virtual Session #1 |

| 31 |

Learning Memory-Based Control for Human-Scale Bipedal Locomotion |

Jonah Siekmann (Oregon State University)*; Srikar Valluri (Oregon State University); Jeremy Dao (Oregon State University); Francis Bermillo (Oregon State University); Helei Duan (Oregon State University); Alan Fern (Oregon State University); Jonathan Hurst (Oregon State University) |

Virtual Session #1 |

| 32 |

Multi-Fidelity Black-Box Optimization for Time-Optimal Quadrotor Maneuvers |

Gilhyun Ryou (Massachusetts Institute of Technology)*; Ezra Tal (Massachusetts Institute of Technology); Sertac Karaman (Massachusetts Institute of Technology) |

Virtual Session #1 |

| 33 |

Manipulation Trajectory Optimization with Online Grasp Synthesis and Selection |

Lirui Wang (University of Washington)*; Yu Xiang (NVIDIA); Dieter Fox (NVIDIA Research / University of Washington) |

Virtual Session #1 |

| 34 |

VisuoSpatial Foresight for Multi-Step, Multi-Task Fabric Manipulation |

Ryan Hoque (UC Berkeley)*; Daniel Seita (University of California, Berkeley); Ashwin Balakrishna (UC Berkeley); Aditya Ganapathi (University of California, Berkeley); Ajay Tanwani (UC Berkeley); Nawid Jamali (Honda Research Institute); Katsu Yamane (Honda Research Institute); Soshi Iba (Honda Research Institute); Ken Goldberg (UC Berkeley) |

Virtual Session #1 |

| 35 |

Spatial Action Maps for Mobile Manipulation |

Jimmy Wu (Princeton University)*; Xingyuan Sun (Princeton University); Andy Zeng (Google); Shuran Song (Columbia University); Johnny Lee (Google); Szymon Rusinkiewicz (Princeton University); Thomas Funkhouser (Princeton University) |

Virtual Session #2 |

| 36 |

Generalized Tsallis Entropy Reinforcement Learning and Its Application to Soft Mobile Robots |

Kyungjae Lee (Seoul National University)*; Sungyub Kim (KAIST); Sungbin Lim (UNIST); Sungjoon Choi (Disney Research); Mineui Hong (Seoul National University); Jaein Kim (Seoul National University); Yong-Lae Park (Seoul National University); Songhwai Oh (Seoul National University) |

Virtual Session #2 |

| 37 |

Learning Labeled Robot Affordance Models Using Simulations and Crowdsourcing |

Adam Allevato (UT Austin)*; Elaine Short (Tufts University); Mitch Pryor (UT Austin); Andrea Thomaz (UT Austin) |

Virtual Session #2 |

| 38 |

Towards Embodied Scene Description |

Sinan Tan (Tsinghua University); Huaping Liu (Tsinghua University)*; Di Guo (Tsinghua University); Xinyu Zhang (Tsinghua University); Fuchun Sun (Tsinghua University) |

Virtual Session #2 |

| 39 |

Reinforcement Learning based Control of Imitative Policies for Near-Accident Driving |

Zhangjie Cao (Stanford University); Erdem Biyik (Stanford University)*; Woodrow Wang (Stanford University); Allan Raventos (Toyota Research Institute); Adrien Gaidon (Toyota Research Institute); Guy Rosman (Toyota Research Institute); Dorsa Sadigh (Stanford) |

Virtual Session #2 |

| 40 |

Deep Drone Acrobatics |

Elia Kaufmann (ETH / University of Zurich)*; Antonio Loquercio (ETH / University of Zurich); Rene Ranftl (Intel Labs); Matthias Müller (Intel Labs); Vladlen Koltun (Intel Labs); Davide Scaramuzza (University of Zurich & ETH Zurich, Switzerland) |

Virtual Session #2 |

| 41 |

Active Preference-Based Gaussian Process Regression for Reward Learning |

Erdem Biyik (Stanford University)*; Nicolas Huynh (École Polytechnique); Mykel Kochenderfer (Stanford University); Dorsa Sadigh (Stanford) |

Virtual Session #2 |

| 42 |

A Bayesian Framework for Nash Equilibrium Inference in Human-Robot Parallel Play |

Shray Bansal (Georgia Institute of Technology)*; Jin Xu (Georgia Institute of Technology); Ayanna Howard (Georgia Institute of Technology); Charles Isbell (Georgia Institute of Technology) |

Virtual Session #2 |

| 43 |

Data-driven modeling of a flapping bat robot with a single flexible wing surface |

Jonathan Hoff (University of Illinois at Urbana-Champaign)*; Seth Hutchinson (Georgia Tech) |

Virtual Session #2 |

| 44 |

Safe Motion Planning for Autonomous Driving using an Adversarial Road Model |

Alex Liniger (ETH Zurich)*; Luc Van Gool (ETH Zurich) |

Virtual Session #2 |

| 45 |

A Motion Taxonomy for Manipulation Embedding |

David Paulius (University of South Florida)*; Nicholas Eales (University of South Florida); Yu Sun (University of South Florida) |

Virtual Session #2 |

| 46 |

Aerial Manipulation Using Hybrid Force and Position NMPC Applied to Aerial Writing |

Dimos Tzoumanikas (Imperial College London)*; Felix Graule (ETH Zurich); Qingyue Yan (Imperial College London); Dhruv Shah (Berkeley Artificial Intelligence Research); Marija Popovic (Imperial College London); Stefan Leutenegger (Imperial College London) |

Virtual Session #2 |

| 47 |

A Global Quasi-Dynamic Model for Contact-Trajectory Optimization in Manipulation |

Bernardo Aceituno-Cabezas (MIT)*; Alberto Rodriguez (MIT) |

Virtual Session #2 |

| 48 |

Vision-Based Goal-Conditioned Policies for Underwater Navigation in the Presence of Obstacles |

Travis Manderson (McGill University)*; Juan Camilo Gamboa Higuera (McGill University); Stefan Wapnick (McGill University); Jean-François Tremblay (McGill University); Florian Shkurti (University of Toronto); David Meger (McGill University); Gregory Dudek (McGill University) |

Virtual Session #2 |

| 49 |

Spatio-Temporal Stochastic Optimization: Theory and Applications to Optimal Control and Co-Design |

Ethan Evans (Georgia Institute of Technology)*; Andrew Kendall (Georgia Institute of Technology); Georgios Boutselis (Georgia Institute of Technology); Evangelos Theodorou (Georgia Institute of Technology) |

Virtual Session #2 |

| 50 |

Kernel Taylor-Based Value Function Approximation for Continuous-State Markov Decision Processes |

Junhong Xu (Indiana University)*; Kai Yin (Vrbo, Expedia Group); Lantao Liu (Indiana University, Intelligent Systems Engineering) |

Virtual Session #2 |

| 51 |

HMPO: Human Motion Prediction in Occluded Environments for Safe Motion Planning |

Jaesung Park (University of North Carolina at Chapel Hill)*; Dinesh Manocha (University of Maryland at College Park) |

Virtual Session #2 |

| 52 |

Motion Planning for Variable Topology Truss Modular Robot |

Chao Liu (University of Pennsylvania)*; Sencheng Yu (University of Pennsylvania); Mark Yim (University of Pennsylvania) |

Virtual Session #2 |

| 53 |

Emergent Real-World Robotic Skills via Unsupervised Off-Policy Reinforcement Learning |

Archit Sharma (Google)*; Michael Ahn (Google); Sergey Levine (Google); Vikash Kumar (Google); Karol Hausman (Google Brain); Shixiang Gu (Google Brain) |

Virtual Session #2 |

| 54 |

Compositional Transfer in Hierarchical Reinforcement Learning |

Markus Wulfmeier (DeepMind)*; Abbas Abdolmaleki (Google DeepMind); Roland Hafner (Google DeepMind); Jost Tobias Springenberg (DeepMind); Michael Neunert (Google DeepMind); Noah Siegel (DeepMind); Tim Hertweck (DeepMind); Thomas Lampe (DeepMind); Nicolas Heess (DeepMind); Martin Riedmiller (DeepMind) |

Virtual Session #2 |

| 55 |

Learning from Interventions: Human-robot interaction as both explicit and implicit feedback |

Jonathan Spencer (Princeton University)*; Sanjiban Choudhury (University of Washington); Matt Barnes (University of Washington); Matthew Schmittle (University of Washington); Mung Chiang (Princeton University); Peter Ramadge (Princeton); Siddhartha Srinivasa (University of Washington) |

Virtual Session #2 |

| 56 |

Fourier movement primitives: an approach for learning rhythmic robot skills from demonstrations |

Thibaut Kulak (Idiap Research Institute)*; Joao Silverio (Idiap Research Institute); Sylvain Calinon (Idiap Research Institute) |

Virtual Session #2 |

| 57 |

Self-Supervised Localisation between Range Sensors and Overhead Imagery |

Tim Tang (University of Oxford)*; Daniele De Martini (University of Oxford); Shangzhe Wu (University of Oxford); Paul Newman (University of Oxford) |

Virtual Session #2 |

| 58 |

Probabilistic Swarm Guidance Subject to Graph Temporal Logic Specifications |

Franck Djeumou (University of Texas at Austin)*; Zhe Xu (University of Texas at Austin); Ufuk Topcu (University of Texas at Austin) |

Virtual Session #2 |

| 59 |

In-Situ Learning from a Domain Expert for Real World Socially Assistive Robot Deployment |

Katie Winkle (Bristol Robotics Laboratory)*; Severin Lemaignan (); Praminda Caleb-Solly (); Paul Bremner (); Ailie Turton (University of the West of England); Ute Leonards () |

Virtual Session #2 |

| 60 |

MRFMap: Online Probabilistic 3D Mapping using Forward Ray Sensor Models |

Kumar Shaurya Shankar (Carnegie Mellon University)*; Nathan Michael (Carnegie Mellon University) |

Virtual Session #2 |

| 61 |

GTI: Learning to Generalize across Long-Horizon Tasks from Human Demonstrations |

Ajay Mandlekar (Stanford University); Danfei Xu (Stanford University)*; Roberto Martín-Martín (Stanford University); Silvio Savarese (Stanford University); Li Fei-Fei (Stanford University) |

Virtual Session #2 |

| 62 |

Agbots 2.0: Weeding Denser Fields with Fewer Robots |

Wyatt McAllister (University of Illinois)*; Joshua Whitman (University of Illinois); Allan Axelrod (University of Illinois); Joshua Varghese (University of Illinois); Girish Chowdhary (University of Illinois at Urbana Champaign); Adam Davis (University of Illinois) |

Virtual Session #2 |

| 63 |

Optimally Guarding Perimeters and Regions with Mobile Range Sensors |

Siwei Feng (Rutgers University)*; Jingjin Yu (Rutgers Univ.) |

Virtual Session #2 |

| 64 |

Learning Agile Robotic Locomotion Skills by Imitating Animals |

Xue Bin Peng (UC Berkeley)*; Erwin Coumans (Google); Tingnan Zhang (Google); Tsang-Wei Lee (Google Brain); Jie Tan (Google); Sergey Levine (UC Berkeley) |

Virtual Session #2 |

| 65 |

Learning to Manipulate Deformable Objects without Demonstrations |

Yilin Wu (UC Berkeley); Wilson Yan (UC Berkeley)*; Thanard Kurutach (UC Berkeley); Lerrel Pinto (); Pieter Abbeel (UC Berkeley) |

Virtual Session #2 |

| 66 |

Deep Differentiable Grasp Planner for High-DOF Grippers |

Min Liu (National University of Defense Technology)*; Zherong Pan (University of North Carolina at Chapel Hill); Kai Xu (National University of Defense Technology); Kanishka Ganguly (University of Maryland at College Park); Dinesh Manocha (University of North Carolina at Chapel Hill) |

Virtual Session #2 |

| 67 |

Ergodic Specifications for Flexible Swarm Control: From User Commands to Persistent Adaptation |

Ahalya Prabhakar (Northwestern University)*; Ian Abraham (Northwestern University); Annalisa Taylor (Northwestern University); Millicent Schlafly (Northwestern University); Katarina Popovic (Northwestern University); Giovani Diniz (Raytheon); Brendan Teich (Raytheon); Borislava Simidchieva (Raytheon); Shane Clark (Raytheon); Todd Murphey (Northwestern Univ.) |

Virtual Session #2 |

| 68 |

Dynamic Multi-Robot Task Allocation under Uncertainty and Temporal Constraints |

Shushman Choudhury (Stanford University)*; Jayesh Gupta (Stanford University); Mykel Kochenderfer (Stanford University); Dorsa Sadigh (Stanford); Jeannette Bohg (Stanford) |

Virtual Session #2 |

| 69 |

Latent Belief Space Motion Planning under Cost, Dynamics, and Intent Uncertainty |

Dicong Qiu (iSee); Yibiao Zhao (iSee); Chris Baker (iSee)* |

Virtual Session #2 |

| 70 |

Learning of Sub-optimal Gait Controllers for Magnetic Walking Soft Millirobots |

Utku Culha (Max Planck Institute for Intelligent Systems); Sinan Ozgun Demir (Max Planck Institute for Intelligent Systems); Sebastian Trimpe (Max Planck Institute for Intelligent Systems); Metin Sitti (Carnegie Mellon University)* |

Virtual Session #3 |

| 71 |

Nonparametric Motion Retargeting for Humanoid Robots on Shared Latent Space |

Sungjoon Choi (Disney Research)*; Matthew Pan (Disney Research); Joohyung Kim (University of Illinois Urbana-Champaign) |

Virtual Session #3 |

| 72 |

Residual Policy Learning for Shared Autonomy |

Charles Schaff (Toyota Technological Institute at Chicago)*; Matthew Walter (Toyota Technological Institute at Chicago) |

Virtual Session #3 |

| 73 |

Efficient Parametric Multi-Fidelity Surface Mapping |

Aditya Dhawale (Carnegie Mellon University)*; Nathan Michael (Carnegie Mellon University) |

Virtual Session #3 |

| 74 |

Towards neuromorphic control: A spiking neural network based PID controller for UAV |

Rasmus Stagsted (University of Southern Denmark); Antonio Vitale (ETH Zurich); Jonas Binz (ETH Zurich); Alpha Renner (Institute of Neuroinformatics, University of Zurich and ETH Zurich); Leon Bonde Larsen (University of Southern Denmark); Yulia Sandamirskaya (Institute of Neuroinformatics, University of Zurich and ETH Zurich, Switzerland)* |

Virtual Session #3 |

| 75 |

Quantile QT-Opt for Risk-Aware Vision-Based Robotic Grasping |

Cristian Bodnar (University of Cambridge)*; Adrian Li (X); Karol Hausman (Google Brain); Peter Pastor (X); Mrinal Kalakrishnan (X) |

Virtual Session #3 |

| 76 |

Scaling data-driven robotics with reward sketching and batch reinforcement learning |

Serkan Cabi (DeepMind)*; Sergio Gómez Colmenarejo (DeepMind); Alexander Novikov (DeepMind); Ksenia Konyushova (DeepMind); Scott Reed (DeepMind); Rae Jeong (DeepMind); Konrad Zolna (DeepMind); Yusuf Aytar (DeepMind); David Budden (DeepMind); Mel Vecerik (DeepMind); Oleg Sushkov (DeepMind); David Barker (DeepMind); Jonathan Scholz (DeepMind); Misha Denil (DeepMind); Nando de Freitas (DeepMind); Ziyu Wang (Google Research, Brain Team) |

Virtual Session #3 |

| 77 |

MPTC – Modular Passive Tracking Controller for stack of tasks based control frameworks |

Johannes Englsberger (German Aerospace Center (DLR))*; Alexander Dietrich (DLR); George Mesesan (German Aerospace Center (DLR)); Gianluca Garofalo (German Aerospace Center (DLR)); Christian Ott (DLR); Alin Albu-Schaeffer (Robotics and Mechatronics Center (RMC), German Aerospace Center (DLR)) |

Virtual Session #3 |

| 78 |

NH-TTC: A gradient-based framework for generalized anticipatory collision avoidance |

Bobby Davis (University of Minnesota Twin Cities)*; Ioannis Karamouzas (Clemson University); Stephen Guy (University of Minnesota Twin Cities) |

Virtual Session #3 |

| 79 |

3D Dynamic Scene Graphs: Actionable Spatial Perception with Places, Objects, and Humans |

Antoni Rosinol (MIT)*; Arjun Gupta (MIT); Marcus Abate (MIT); Jingnan Shi (MIT); Luca Carlone (Massachusetts Institute of Technology) |

Virtual Session #3 |

| 80 |

Robot Object Retrieval with Contextual Natural Language Queries |

Thao Nguyen (Brown University)*; Nakul Gopalan (Georgia Tech); Roma Patel (Brown University); Matthew Corsaro (Brown University); Ellie Pavlick (Brown University); Stefanie Tellex (Brown University) |

Virtual Session #3 |

| 81 |

AlphaPilot: Autonomous Drone Racing |

Philipp Foehn (ETH / University of Zurich)*; Dario Brescianini (University of Zurich); Elia Kaufmann (ETH / University of Zurich); Titus Cieslewski (University of Zurich & ETH Zurich); Mathias Gehrig (University of Zurich); Manasi Muglikar (University of Zurich); Davide Scaramuzza (University of Zurich & ETH Zurich, Switzerland) |

Virtual Session #3 |

| 82 |

Concept2Robot: Learning Manipulation Concepts from Instructions and Human Demonstrations |

Lin Shao (Stanford University)*; Toki Migimatsu (Stanford University); Qiang Zhang (Shanghai Jiao Tong University); Kaiyuan Yang (Stanford University); Jeannette Bohg (Stanford) |

Virtual Session #3 |

| 83 |

A Variable Rolling SLIP Model for a Conceptual Leg Shape to Increase Robustness of Uncertain Velocity on Unknown Terrain |

Adar Gaathon (Technion – Israel Institute of Technology)*; Amir Degani (Technion – Israel Institute of Technology) |

Virtual Session #3 |

| 84 |

Interpreting and Predicting Tactile Signals via a Physics-Based and Data-Driven Framework |

Yashraj Narang (NVIDIA)*; Karl Van Wyk (NVIDIA); Arsalan Mousavian (NVIDIA); Dieter Fox (NVIDIA) |

Virtual Session #3 |

| 85 |

Learning Active Task-Oriented Exploration Policies for Bridging the Sim-to-Real Gap |

Jacky Liang (Carnegie Mellon University)*; Saumya Saxena (Carnegie Mellon University); Oliver Kroemer (Carnegie Mellon University) |

Virtual Session #3 |

| 86 |

Manipulation with Shared Grasping |

Yifan Hou (Carnegie Mellon University)*; Zhenzhong Jia (SUSTech); Matthew Mason (Carnegie Mellon University) |

Virtual Session #3 |

| 87 |

Deep Learning Tubes for Tube MPC |

David Fan (Georgia Institute of Technology)*; Ali Agha (Jet Propulsion Laboratory); Evangelos Theodorou (Georgia Institute of Technology) |

Virtual Session #3 |

| 88 |

Reinforcement Learning for Safety-Critical Control under Model Uncertainty, using Control Lyapunov Functions and Control Barrier Functions |

Jason Choi (UC Berkeley); Fernando Castañeda (UC Berkeley); Claire Tomlin (UC Berkeley); Koushil Sreenath (Berkeley)* |

Virtual Session #3 |

| 89 |

Fast Risk Assessment for Autonomous Vehicles Using Learned Models of Agent Futures |

Allen Wang (MIT)*; Xin Huang (MIT); Ashkan Jasour (MIT); Brian Williams (Massachusetts Institute of Technology) |

Virtual Session #3 |

| 90 |

Online Domain Adaptation for Occupancy Mapping |

Anthony Tompkins (The University of Sydney)*; Ransalu Senanayake (Stanford University); Fabio Ramos (NVIDIA, The University of Sydney) |

Virtual Session #3 |

| 91 |

ALGAMES: A Fast Solver for Constrained Dynamic Games |

Simon Le Cleac’h (Stanford University)*; Mac Schwager (Stanford, USA); Zachary Manchester (Stanford) |

Virtual Session #3 |

| 92 |

Scalable and Probabilistically Complete Planning for Robotic Spatial Extrusion |

Caelan Garrett (MIT)*; Yijiang Huang (MIT Department of Architecture); Tomas Lozano-Perez (MIT); Caitlin Mueller (MIT Department of Architecture) |

Virtual Session #3 |

| 93 |

The RUTH Gripper: Systematic Object-Invariant Prehensile In-Hand Manipulation via Reconfigurable Underactuation |

Qiujie Lu (Imperial College London)*; Nicholas Baron (Imperial College London); Angus Clark (Imperial College London); Nicolas Rojas (Imperial College London) |

Virtual Session #3 |

| 94 |

Heterogeneous Graph Attention Networks for Scalable Multi-Robot Scheduling with Temporospatial Constraints |

Zheyuan Wang (Georgia Institute of Technology)*; Matthew Gombolay (Georgia Institute of Technology) |

Virtual Session #3 |

| 95 |

Robust Multiple-Path Orienteering Problem: Securing Against Adversarial Attacks |

Guangyao Shi (University of Maryland)*; Pratap Tokekar (University of Maryland); Lifeng Zhou (Virginia Tech) |

Virtual Session #3 |

| 96 |

Eyes-Closed Safety Kernels: Safety of Autonomous Systems Under Loss of Observability |

Forrest Laine (UC Berkeley)*; Chih-Yuan Chiu (UC Berkeley); Claire Tomlin (UC Berkeley) |

Virtual Session #3 |

| 97 |

Explaining Multi-stage Tasks by Learning Temporal Logic Formulas from Suboptimal Demonstrations |

Glen Chou (University of Michigan)*; Necmiye Ozay (University of Michigan); Dmitry Berenson (U Michigan) |

Virtual Session #3 |

| 98 |

Nonlinear Model Predictive Control of Robotic Systems with Control Lyapunov Functions |

Ruben Grandia (ETH Zurich)*; Andrew Taylor (Caltech); Andrew Singletary (Caltech); Marco Hutter (ETHZ); Aaron Ames (Caltech) |

Virtual Session #3 |

| 99 |

Learning to Slide Unknown Objects with Differentiable Physics Simulations |

Changkyu Song (Rutgers University); Abdeslam Boularias (Rutgers University)* |

Virtual Session #3 |

| 100 |

Reachable Sets for Safe, Real-Time Manipulator Trajectory Design |

Patrick Holmes (University of Michigan); Shreyas Kousik (University of Michigan)*; Bohao Zhang (University of Michigan); Daphna Raz (University of Michigan); Corina Barbalata (Louisiana State University); Matthew Johnson Roberson (University of Michigan); Ram Vasudevan (University of Michigan) |

Virtual Session #3 |

| 101 |

Learning Task-Driven Control Policies via Information Bottlenecks |

Vincent Pacelli (Princeton University)*; Anirudha Majumdar (Princeton) |

Virtual Session #3 |

| 102 |

Simultaneously Learning Transferable Symbols and Language Groundings from Perceptual Data for Instruction Following |

Nakul Gopalan (Georgia Tech)*; Eric Rosen (Brown University); Stefanie Tellex (Brown University); George Konidaris (Brown) |

Virtual Session #3 |

| 103 |

A social robot mediator to foster collaboration and inclusion among children |

Sarah Gillet (Royal Institute of Technology)*; Wouter van den Bos (University of Amsterdam); Iolanda Leite (KTH) |

Virtual Session #3 |