Artificial intelligence must not be allowed to replace the imperfection of human empathy

Robotic swarm swims like a school of fish

By Leah Burrows / SEAS Communications

Schools of fish exhibit complex, synchronized behaviors that help them find food, migrate, and evade predators. No one fish or sub-group of fish coordinates these movements, nor do fish communicate with each other about what to do next. Rather, these collective behaviors emerge from so-called implicit coordination — individual fish making decisions based on what they see their neighbors doing.

This type of decentralized, autonomous self-organization and coordination has long fascinated scientists, especially in the field of robotics.

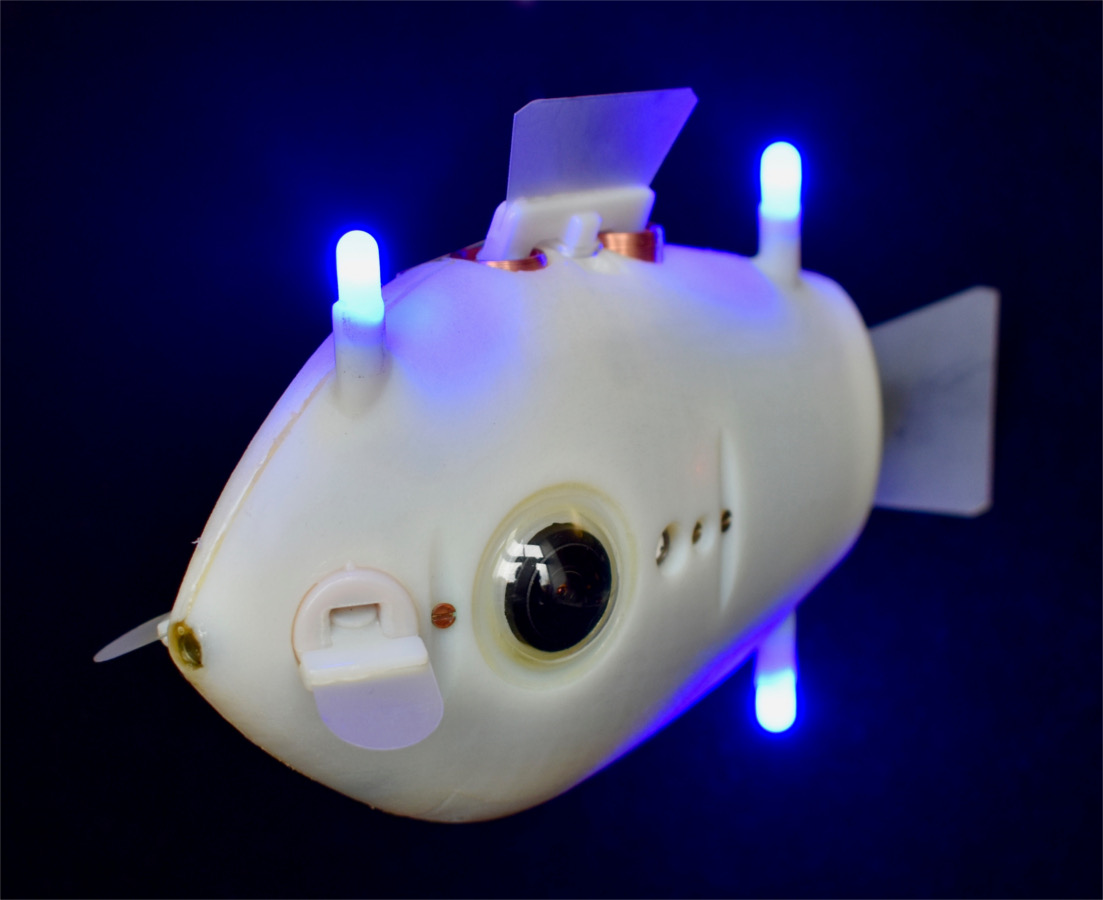

Now, a team of researchers at Harvard’s Wyss Institute and John A. Paulson School of Engineering and Applied Sciences (SEAS) have developed fish-inspired robots that can synchronize their movements like a real school of fish, without any external control. It is the first time researchers have demonstrated complex 3D collective behaviors with implicit coordination in underwater robots.

“Robots are often deployed in areas that are inaccessible or dangerous to humans, areas where human intervention might not even be possible,” said Florian Berlinger, a Ph.D. Candidate at the Wyss Institute and SEAS and first author of the paper. “In these situations, it really benefits you to have a highly autonomous robot swarm that is self-sufficient. By using implicit rules and 3D visual perception, we were able to create a system that has a high degree of autonomy and flexibility underwater where things like GPS and WiFi are not accessible.”

The research is published in Science Robotics.

The fish-inspired robotic swarm, dubbed Blueswarm, was created in the lab of Wyss Associate Faculty member Radhika Nagpal, Ph.D., who is also the Fred Kavli Professor of Computer Science at SEAS. Nagpal’s lab is a pioneer in self-organizing systems, from their 1,000 robot Kilobot swarm to their termite-inspired robotic construction crew.

However, most previous robotic swarms operated in two-dimensional space. Three-dimensional spaces, like air and water, pose significant challenges to sensing and locomotion.

To overcome these challenges, the researchers developed a vision-based coordination system in their fish robots based on blue LED lights. Each underwater robot, called a Bluebot, is equipped with two cameras and three LED lights. The on-board, fisheye-lens cameras detect the LEDs of neighboring Bluebots and use a custom algorithm to determine their distance, direction and heading. Based on the simple production and detection of LED light, the researchers demonstrated that the Blueswarm could exhibit complex self-organized behaviors, including aggregation, dispersion, and circle formation.

“Each Bluebot implicitly reacts to its neighbors’ positions,” said Berlinger. “So, if we want the robots to aggregate, then each Bluebot will calculate the position of each of its neighbors and move towards the center. If we want the robots to disperse, the Bluebots do the opposite. If we want them to swim as a school in a circle, they are programmed to follow lights directly in front of them in a clockwise direction.”

The researchers also simulated a simple search mission with a red light in the tank. Using the dispersion algorithm, the Bluebots spread out across the tank until one comes close enough to the light source to detect it. Once the robot detects the light, its LEDs begin to flash, which triggers the aggregation algorithm in the rest of the school. From there, all the Bluebots aggregate around the signaling robot.

Blueswarm, a Harvard Wyss- and SEAS-developed underwater robot collective, uses a 3D vision-based coordination system and 3D locomotion to coordinate the movements of its individual Bluebots autonomously, mimicking the behavior of schools of fish. Credit: Harvard SEAS

“Our results with Blueswarm represent a significant milestone in the investigation of underwater self-organized collective behaviors,” said Nagpal. “Insights from this research will help us develop future miniature underwater swarms that can perform environmental monitoring and search in visually-rich but fragile environments like coral reefs. This research also paves a way to better understand fish schools, by synthetically recreating their behavior.”

The research was co-authored by Melvin Gauci, Ph.D., a former Wyss Technology Development Fellow. It was supported in part by the Office of Naval Research, the Wyss Institute for Biologically Inspired Engineering, and an Amazon AWS Research Award.

How Unmanned Underwater Vehicles Could Become Easier to Detect – new research from Draper, MIT

What OEMs Look for in the Perfect Robot Supplier

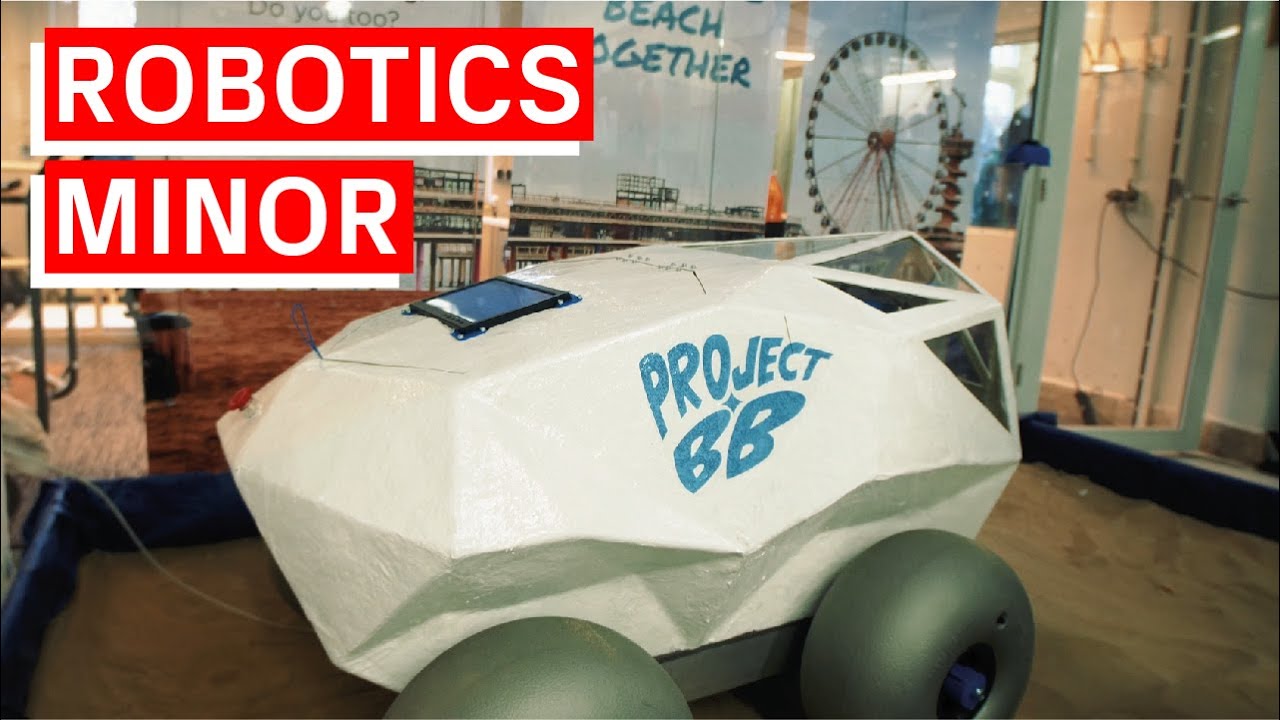

Demo Day: the most exciting day of the year (Jan 28th, 3pm CET)

Multidisciplinary teams of minor students have designed and built a functional robotic prototype for a project customer with a challenge to improve the lives of working people. Streaming live from RoboHouse on the TU Delft Campus, you can join a virtual celebration of ideas en technology with some of our communities finest talent.

How does it work?

On Thursday 28 January 3pm (CET) you can visit the streaming website that we have created for the occasion. There you will find live video streams for a programme with a project overview and demonstrations by each student team. This all happens between 3pm and 4:30pm.

Our hosts will be running around RoboHouse for you, going from robot to robot and from team to team, followed by highly mobile camera people, all with the purpose of giving you the most intimate and direct experience. We want you feel as if you are able to touch the robots yourself.

After toasting on another successful Demo Day, the programme will go into free flow. You are invited to plunge into the various projects and splash around with anyone you like during the Breakouts. These start around 4:40pm and can be joined via Zoom-links that are available on the website.

All this wouldn’t be possible without our fantastic project customers: AI for Retail AIR Lab + Alliander + Deltares + Accell Group + Torso Doc + TechTics + Odd.Bot.

And of course, most appreciation and respect go to the eight talented student teams, who this edition go by the illustrious names of: Krill + ARMS + D.I.R.T + BikeBotics + Oxillia + BoxBot + Shino + Althea.

Welcome to the future of work!

About the TU Delft Minor Robotics

The Minor Robotics is a 5-month educational program for third year BSc students of Mechanical Engineering, Industrial Design, Computer Science, and Electrical Engineering from Delft University of Technology. A team of students from all above disciplines (to make sure they cover all knowledge needed in robotics) are working on building a robot for a customer.

Students follow multidisciplinary courses, and work in multidisciplinary teams to design, build, and program robots for customers. During the first months of the minor the students will focus on taking courses outside their own discipline (for example, the Industrial Design student will learn about programming and electronics, while a Computer Science student will learn about statics and prototyping). But they will also work on the design of their robot in close collaboration with the customer.

The last months are dedicated full time on building the robot and ends with a demo together with all other teams from the Minor Robotics.

Here’s a video of last year’s TU Delft Minor Robotics event at RoboHouse.

The post Demo Day: the most exciting day of the year appeared first on RoboValley.

How the Vision Transformer (ViT) works in 10 minutes: an image is worth 16×16 words

IMTS Spark “Demo Days” Features Interactive Demonstrations, Offers Six Concentrated Learning Opportunities

‘Bleep-bloop-bleep! Say “cheese,” human’

#327: Computational Design, with Bernhard Thomaszewski

Lilly interviews Bernhard Thomaszewski, Professor of Computer Science at the University of Montréal and research scientist at ETH Zurich. Thomaszewski discusses his background in animation at Disney, his current work on mechanical metamaterials and digital fabrication, and how physics-based modeling has connected the dots.

Bernhard Thomaszewski

Bernhard Thomaszewski is an Assistant Professor in the Department of Computer Science and Operations Research at the University of Montréal. Until June 2017, he was a Research Scientist at Disney Research Zurich, heading the group on Computational Design and Digital Fabrication. Thomaszewski obtained his Master’s degree and PhD (Dr. rer. nat) in Computer Science from the University of Tübingen. Before joining Disney, he spent a year at the computer graphics group at ETH Zürich.

Links

- Download mp3 (14.7 MB)

- Subscribe to Robohub using iTunes, RSS, or Spotify

- Support us on Patreon