Designing customized “brains” for robots

By Daniel Ackerman

Contemporary robots can move quickly. “The motors are fast, and they’re powerful,” says Sabrina Neuman.

Yet in complex situations, like interactions with people, robots often don’t move quickly. “The hang-up is what’s going on in the robot’s head,” she adds.

Perceiving stimuli and calculating a response takes a “boatload of computation,” which limits reaction time, says Neuman, who recently graduated with a PhD from the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL). Neuman has found a way to fight this mismatch between a robot’s “mind” and body. The method, called robomorphic computing, uses a robot’s physical layout and intended applications to generate a customized computer chip that minimizes the robot’s response time.

The advance could fuel a variety of robotics applications, including, potentially, frontline medical care of contagious patients. “It would be fantastic if we could have robots that could help reduce risk for patients and hospital workers,” says Neuman.

Neuman will present the research at this April’s International Conference on Architectural Support for Programming Languages and Operating Systems. MIT co-authors include graduate student Thomas Bourgeat and Srini Devadas, the Edwin Sibley Webster Professor of Electrical Engineering and Neuman’s PhD advisor. Other co-authors include Brian Plancher, Thierry Tambe, and Vijay Janapa Reddi, all of Harvard University. Neuman is now a postdoctoral NSF Computing Innovation Fellow at Harvard’s School of Engineering and Applied Sciences.

There are three main steps in a robot’s operation, according to Neuman. The first is perception, which includes gathering data using sensors or cameras. The second is mapping and localization: “Based on what they’ve seen, they have to construct a map of the world around them and then localize themselves within that map,” says Neuman. The third step is motion planning and control — in other words, plotting a course of action.

These steps can take time and an awful lot of computing power. “For robots to be deployed into the field and safely operate in dynamic environments around humans, they need to be able to think and react very quickly,” says Plancher. “Current algorithms cannot be run on current CPU hardware fast enough.”

Neuman adds that researchers have been investigating better algorithms, but she thinks software improvements alone aren’t the answer. “What’s relatively new is the idea that you might also explore better hardware.” That means moving beyond the standard-issue CPU that serves as a robot’s brain, with the help of hardware acceleration.

Hardware acceleration refers to the use of a specialized hardware unit to perform certain computing tasks more efficiently. A commonly used hardware accelerator is the graphics processing unit (GPU), a chip specialized for parallel processing. These devices are handy for graphics because their parallel structure allows them to simultaneously process thousands of pixels. “A GPU is not the best at everything, but it’s the best at what it’s built for,” says Neuman. “You get higher performance for a particular application.” Most robots are designed with an intended set of applications and could therefore benefit from hardware acceleration. That’s why Neuman’s team developed robomorphic computing.

The system creates a customized hardware design to best serve a particular robot’s computing needs. The user inputs the parameters of a robot, like its limb layout and how its various joints can move. Neuman’s system translates these physical properties into mathematical matrices. These matrices are “sparse,” meaning they contain many zero values that roughly correspond to movements that are impossible given a robot’s particular anatomy. (Similarly, your arm’s movements are limited because it can only bend at certain joints — it’s not an infinitely pliable spaghetti noodle.)
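To make the idea of “sparse” matrices concrete, here is a minimal sketch in Python. It assumes a single revolute joint that rotates about its own z-axis and is offset by a fixed distance d along that axis; this joint model and the function names are illustrative choices, not taken from robomorphic computing itself. Because the joint cannot rotate about x or y, certain matrix entries stay zero whatever the joint angle is, so the zero pattern can be read off the robot’s structure before it ever moves.

```python
import math


def revolute_z_transform(theta, d):
    """4x4 homogeneous transform for a joint rotating by theta about its
    local z-axis, followed by a fixed offset d along z (illustrative model)."""
    c, s = math.cos(theta), math.sin(theta)
    return [
        [c, -s, 0.0, 0.0],
        [s, c, 0.0, 0.0],
        [0.0, 0.0, 1.0, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def sparsity_pattern(make_matrix, sample_angles, tol=1e-12):
    """Mark an entry True if it is non-zero for at least one sampled angle.
    Entries that stay zero for every angle are structural zeros set by the
    joint's geometry, not by its current position."""
    pattern = None
    for theta in sample_angles:
        nonzero = [[abs(v) > tol for v in row] for row in make_matrix(theta)]
        if pattern is None:
            pattern = nonzero
        else:
            # Combine with earlier samples: an entry counts as non-zero
            # if any sample produced a non-zero value there.
            pattern = [[p or n for p, n in zip(prow, nrow)]
                       for prow, nrow in zip(pattern, nonzero)]
    return pattern


pattern = sparsity_pattern(lambda t: revolute_z_transform(t, d=0.5),
                           sample_angles=[0.3, 1.1, 2.5])
for row in pattern:
    print("".join("X" if nz else "." for nz in row))
```

Running it prints `XX..`, `XX..`, `..XX`, `...X`: 9 of the 16 entries are structural zeros. Sampling angles is only a heuristic (an entry could vanish at every sampled angle by coincidence); a real tool would more plausibly derive the pattern symbolically from each joint’s type.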

The system then designs a hardware architecture specialized to run calculations only on the non-zero values in the matrices. The resulting chip design is therefore tailored to maximize efficiency for the robot’s computing needs. And that customization paid off in testing.
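The payoff of knowing the zero pattern ahead of time can be illustrated in software. The sketch below is a sequential analogue, not the team’s hardware generator: it assumes a joint that rotates about its local z-axis with a fixed offset d along that axis, records which entries can be non-zero, and builds a matrix-vector routine that multiplies only those entries. Names such as `make_specialized_matvec` are hypothetical.

```python
import math

# Structural sparsity pattern of an illustrative z-axis revolute joint
# transform: True marks an entry that can be non-zero.
PATTERN = [
    [True, True, False, False],
    [True, True, False, False],
    [False, False, True, True],
    [False, False, False, True],
]


def make_specialized_matvec(pattern):
    """Return a mat-vec routine that touches only the entries allowed by
    `pattern`, loosely mimicking a datapath with no multipliers for zeros."""
    nonzeros = [(i, j) for i, row in enumerate(pattern)
                for j, nz in enumerate(row) if nz]

    def matvec(m, v):
        out = [0.0] * len(pattern)
        for i, j in nonzeros:  # structural zeros are skipped entirely
            out[i] += m[i][j] * v[j]
        return out

    return matvec, len(nonzeros)


def dense_matvec(m, v):
    """Reference implementation: multiplies every entry, zeros included."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]


theta, d = 0.7, 0.5
c, s = math.cos(theta), math.sin(theta)
m = [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0], [0.0, 0.0, 1.0, d], [0.0, 0.0, 0.0, 1.0]]
point = [1.0, 2.0, 3.0, 1.0]  # a point in homogeneous coordinates

sparse_mv, mults = make_specialized_matvec(PATTERN)
print(sparse_mv(m, point))
print(f"{mults} multiplies instead of {4 * 4}")
```

Here the specialized routine uses 7 multiplications instead of 16 and returns the same result as the dense version. A custom chip can go further by building only the multipliers those 7 products need and running them in parallel, which is one plausible way a slower-clocked design can still come out ahead.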

Hardware architecture designed using this method for a particular application outperformed off-the-shelf CPUs and GPUs. While Neuman’s team didn’t fabricate a specialized chip from scratch, they programmed a customizable field-programmable gate array (FPGA) chip according to their system’s suggestions. Despite operating at a slower clock rate, that chip performed eight times faster than the CPU and 86 times faster than the GPU.

“I was thrilled with those results,” says Neuman. “Even though we were hamstrung by the lower clock speed, we made up for it by just being more efficient.”

Plancher sees widespread potential for robomorphic computing. “Ideally we can eventually fabricate a custom motion-planning chip for every robot, allowing them to quickly compute safe and efficient motions,” he says. “I wouldn’t be surprised if 20 years from now every robot had a handful of custom computer chips powering it, and this could be one of them.” Neuman adds that robomorphic computing might allow robots to relieve humans of risk in a range of settings, such as caring for COVID-19 patients or manipulating heavy objects.

“This work is exciting because it shows how specialized circuit designs can be used to accelerate a core component of robot control,” says Robin Deits, a robotics engineer at Boston Dynamics who was not involved in the research. “Software performance is crucial for robotics because the real world never waits around for the robot to finish thinking.” He adds that Neuman’s advance could enable robots to think faster, “unlocking exciting behaviors that previously would be too computationally difficult.”

Neuman next plans to automate the entire system of robomorphic computing. Users will simply drag and drop their robot’s parameters, and “out the other end comes the hardware description. I think that’s the thing that’ll push it over the edge and make it really useful.”

This research was funded by the National Science Foundation, the Computing Research Association, the CIFellows Project, and the Defense Advanced Research Projects Agency.

DOE’s E-ROBOT Prize targets robots for construction and built environment

Silicon Valley Robotics is pleased to announce that we are a Connector organization for the E-ROBOT Prize and other DOE competitions on the American-Made Network. There is $2 million USD available in up to ten prizes for Phase One of the E-ROBOT Prize, and $10 million USD available in Phase Two. Individuals or teams can sign up for the competition; the online platform offers opportunities to connect with potential team members, as do competition events organized by Connector organizations. Please cite Silicon Valley Robotics as your Connector organization when entering the competition.

Silicon Valley Robotics will be hosting E-ROBOT Prize information and connection events as part of our calendar of networking and Construction Robotics Network events. The first event will be on February 3rd at 7pm PST at our monthly robot show-and-tell event “Bots&Beer”, and you can register here. We’ll be announcing more Construction Robotics Network events very soon.

E-ROBOT stands for Envelope Retrofit Opportunities for Building Optimization Technologies. Phase One of the E-ROBOT Prize looks for solutions in sensing, inspection, mapping, or retrofitting of building envelopes, and the deadline is May 19, 2021. Phase Two will focus on holistic rather than individual solutions, i.e., bringing together the full stack of sensing, inspection, mapping, and retrofitting.

The overarching goal of E-ROBOT is to catalyze the development of minimally invasive, low-cost, and holistic building envelope retrofit solutions that make retrofits easier, faster, safer, and more accessible for workers. Successful competitors will deliver significant advances in robot technologies that move the energy efficiency retrofit industry forward, developing building envelope retrofit technologies that meet the following criteria:

- Holistic: The solution must include mapping, retrofit, sensing, and inspection.

- Low cost: The solution should reduce costs significantly compared to current state-of-the-art solutions. The target is a 50% reduction from the baseline cost of a fully implemented solution (not just hardware, software, or labor; the complete, fully implemented solution must be considered). If costs do not reach the 50% reduction level, the solution should instead achieve a significant energy efficiency gain.

- Minimally invasive: The solution must not require building occupants to vacate the premises or require envelope teardown or significant envelope damage.

- Utilizes long-lasting materials: Retrofit is done with safe, nonhazardous, and durable (30+ year lifespan) materials.

- Completes time-efficient, high-quality installations: The results of the retrofit must meet common industry quality standards and be completed in a reasonable timeframe.

- Provides opportunities to workers: The solution enables a net positive gain in terms of the workforce by bringing high-tech jobs to the industry, improving worker safety, enabling workers to be more efficient with their time, improving envelope accessibility for workers, and/or opening up new business opportunities or markets.
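The “fully implemented solution” rule in the low-cost criterion matters because savings in one category can be eaten by another. A minimal sketch with made-up numbers (the cost categories, figures, and function names are hypothetical, not DOE’s) computes the reduction on the total cost. The criteria do not quantify the fallback “significant energy efficiency gain”, so the sketch checks only the 50% target.

```python
from dataclasses import dataclass


@dataclass
class RetrofitCost:
    """Full cost of an implemented retrofit (illustrative categories)."""
    hardware: float
    software: float
    labor: float

    def total(self) -> float:
        return self.hardware + self.software + self.labor


def cost_reduction(baseline: RetrofitCost, proposed: RetrofitCost) -> float:
    """Fractional reduction of the full implemented cost versus the baseline."""
    return 1.0 - proposed.total() / baseline.total()


TARGET = 0.5  # the 50% reduction target stated in the criteria

# Hypothetical figures for illustration only.
baseline = RetrofitCost(hardware=20_000, software=5_000, labor=75_000)
proposed = RetrofitCost(hardware=30_000, software=10_000, labor=15_000)

r = cost_reduction(baseline, proposed)
print(f"reduction = {r:.0%}, meets target: {r >= TARGET}")
```

With these invented figures, labor falls sharply but hardware and software costs rise, so the total drops by only 45% and the target is missed.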

The E-ROBOT Prize provides a total of $5 million in funding, including $4 million in cash prizes for competitors and an additional $1 million in awards and support to network partners.

Through this prize, the U.S. Department of Energy (DOE) will stimulate technological innovation, create new opportunities for the buildings and construction workforce, reduce building retrofit costs, create a safer and faster retrofit process, ensure consistent, high-quality installations, enhance construction retrofit productivity, and improve overall energy savings of the built environment.

The E-ROBOT Prize is made up of two phases that will fast-track efforts to identify, develop, and validate disruptive solutions to meet building industry needs. Each phase will include a contest period when participants will work to rapidly advance their solutions. DOE invites anyone, individually or as a team, to compete to transform a conceptual solution into product reality.

The future of robotics research: Is there room for debate?

By Brian Wang, Sarah Tang, Jaime Fernandez Fisac, Felix von Drigalski, Lee Clement, Matthew Giamou, Sylvia Herbert, Jonathan Kelly, Valentin Peretroukhin, and Florian Shkurti

As the field of robotics matures, our community must grapple with the multifaceted impact of our research; in this article, we describe two previous workshops hosting robotics debates and advocate for formal debates to become an integral, standalone part of major international conferences, whether as a plenary session or as a parallel conference track.

As roboticists build increasingly complex systems for applications spanning manufacturing, personal assistive technologies, transportation, and others, we face not only technical challenges but also the need to critically assess how our work can advance societal good. Our rapidly growing and uniquely multidisciplinary field naturally cultivates diverse perspectives and informal dialogue about our impact, ethical responsibilities, and technologies. Indeed, such discussions have become a cornerstone of the conference experience, but there has been relatively little formal programming in this direction at major technical conferences like the IEEE International Conference on Robotics and Automation (ICRA) and the Robotics: Science and Systems (RSS) Conference.

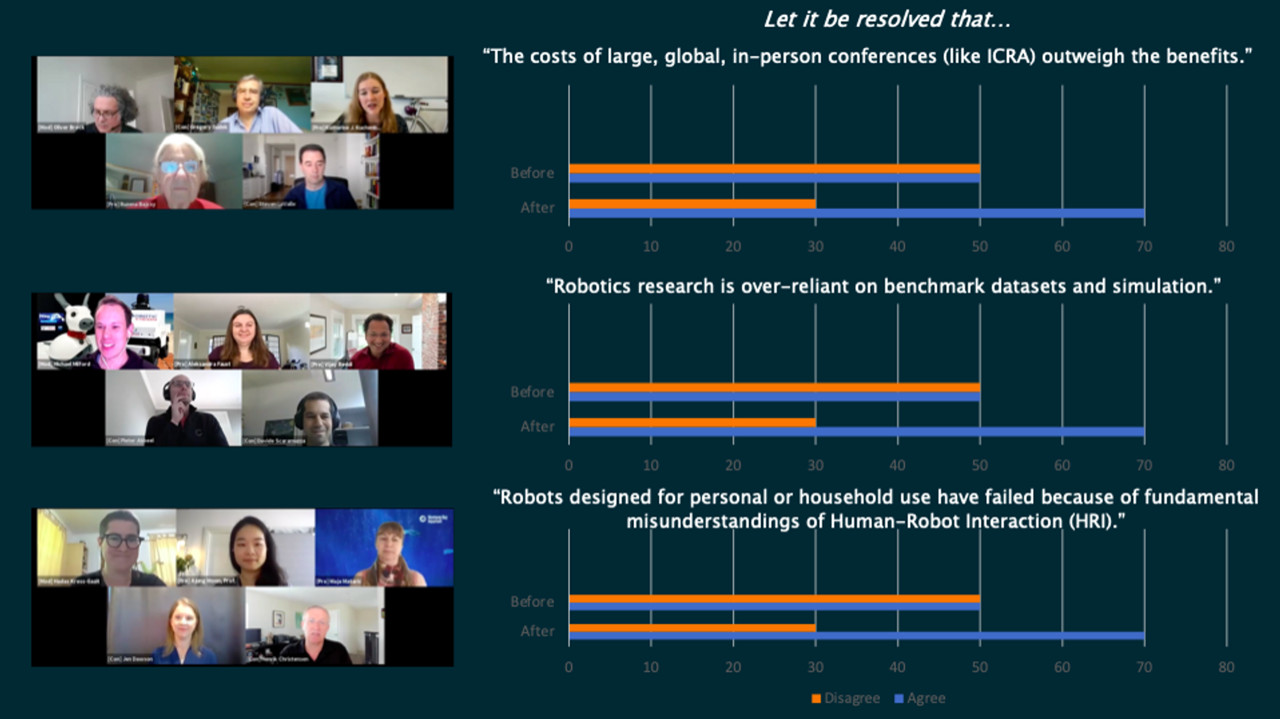

To fill this void, we organized two workshops entitled “Debates on the Future of Robotics Research” at ICRA 2019 and 2020, inspired by a similar workshop at the 2018 International Conference on Machine Learning (ICML). In these workshops, panellists from industry and academia debated key issues in a structured format, with groups of two arguing either “for” or “against” resolutions relating to these issues. The workshops featured three 80-minute sessions modelled roughly after the Oxford Union debate format, consisting of:

- An initial audience poll asking whether they “agree” or “disagree” with the proposed resolution

- Opening statements from all four panellists, alternating for and against

- Moderated discussion including audience questions

- Closing remarks

- A final audience poll

- Panel discussion and audience Q&A

The “Debates” workshops attracted approximately 400 attendees in 2019 and 1,100 in 2020 (ICRA 2020 was held virtually in response to the COVID-19 pandemic). In some instances, panellists took positions out of line with their personally held beliefs and nonetheless swayed audience members to their side, with audience polls displaying notable shifts in majority opinion. These results demonstrate the ability of debate to engage our community, elucidate complex issues, and challenge us to adopt new ways of thinking. Indeed, the format is gaining popularity within the robotics community, as other recent workshops have also adopted it (for instance, the 2nd Workshop on Closing the Reality Gap in Sim2Real Transfer for Robotics and the Soft Robotics Debates).

Given this positive community response, we argue that major robotics conferences should begin to organize structured debates as a core element of their programming. We believe a debate-style plenary session or parallel track would provide a number of benefits to conference attendees and the wider robotics community:

- Exposure to ideas: Attendees would have more opportunities to be exposed to unfamiliar ideas and perspectives in a well-attended plenary session, while minimizing overlap with technical sessions.

- Equity: Panellists and moderators no longer have to choose between accepting a debate invitation, accepting a workshop speaking engagement, supporting their students’ workshop presentations, and/or hosting a workshop of their own. Some of these conflicting responsibilities disproportionately affect early career researchers.

- Inclusion: Conference organizers have the discretion to provide travel support, enabling panellists and moderators from a wider range of countries, career stages, and backgrounds to participate.

- Impact: A notable Science Robotics article cited debates as a necessary driver of progress towards solving robotics’ grand challenges. By facilitating structured and critical self-reflection, a widely accessible debates plenary could help identify avenues for future work and move the field forward.

The driving force behind such an event should be a diverse debates committee responsible for inviting representatives within and outside academia, with different research interests, at different career stages, and from different parts of the world. The committee would also lead the selection of appropriate debate topics. Historically, our debate propositions revolved around three key questions: “what problems should we solve?”, “how should we solve them?”, and “how do we measure success and progress?”.

Recent high-profile shutterings of several prominent robotics companies testify to the importance of identifying the right problem. How do we transform our research into products that provide value to end-users, while keeping in mind our environmental and economic impact? How can we quickly introspect, learn from, and pivot in response to failures, as well as avoid repeating past mistakes? Our 2020 resolution, “robots designed for personal or household use have failed because of fundamental misunderstandings of Human-Robot Interaction (HRI),” provided an opportunity to discuss such questions.

As a multidisciplinary field drawing on advances in machine learning, computer science, mechanical design, control, optimization, biology, and more, robotics has a wealth of tools at its disposal. A central problem in robotics research is then to identify the right tool for the right problem. Our 2019 debate proposition, “The pervasiveness of deep learning in robotics research is an impediment to gaining scientific insights into robotics problems,” probed the ascendance of data-driven approaches to robotics problems and attracted a large audience.

In addition to identifying the right problems and the right tools to solve them, it is equally important to discuss how best to evaluate and compare proposed solutions. A central tension in robotics research is how to strike a balance between accessible, replicable benchmark datasets and real-world experiments. Our 2020 debate proposition, “Robotics research is over-reliant on benchmark datasets and simulation”, challenged the audience to consider how to rigorously and accessibly evaluate the performance of robotic systems without overfitting to a particular benchmark. Indeed, failures to adequately evaluate robotics algorithms have already led to tragic loss of life, underscoring the importance of establishing common standards for measuring the performance and safety of our technologies in the context of rapid commercialization and real-world deployments. These standards must be informed by regular and rigorous critical reflection on our ethical obligations as researchers, practitioners, and policymakers. The fact that our 2019 debate proposition, “Robotics needs a similar level of regulation and certification as other engineering disciplines (e.g., aviation), even if this results in slower technological innovation”, attracted and resonated with a significant audience is evidence of demand for structured discussion of these topics.

As robotics technologies continue to move from the research laboratory to the real world, we believe there should be room for debate at major robotics conferences. Enshrining debate as a core element of major robotics conferences will serve to create opportunities for self-reflection, establish institutional memory, and drive the field forward.

For more information, please see https://roboticsdebates.org/.