Two reputable research resources are reporting that the robotics industry is growing more rapidly than expected. BCG (Boston Consulting Group) is conservatively projecting that the market will reach $87 billion by 2025; Tractica, incorporating the robotic and AI elements of the emerging self-driving industry, is forecasting the market will reach $237 billion by 2022.

Both research firms acknowledge that yesterday's robots, which were blind, big, dangerous and difficult to program and maintain, are being replaced and supplemented with newer, more capable ones. Today's and tomorrow's robots will have voice and language recognition, access to super-fast communications, data and libraries of algorithms, learning capability, mobility, portability and dexterity. These new precision robots can sort and fill prescriptions, pick and pack warehouse orders, and sort, inspect, process and handle fruits and vegetables, along with myriad other industrial and non-industrial tasks. They do most of these tasks faster than humans, all while working safely alongside them.

Boston Consulting Group (BCG)

Gaining Robotic Advantage, June 2017, 13 pages, free

BCG suggests that business executives be aware of ways robots are changing the global business landscape and think and act now. They see robotics-fueled changes coming in retail, logistics, transportation, healthcare, food processing, mining and agriculture.

BCG cites the following drivers:

- Private investment in the robotics space has continued to amaze, with exponential year-over-year funding curves and sensational billion-dollar acquisitions.

- Prices continue to fall on robots, sensors, CPUs and communications while capabilities continue to increase.

- Robot programming is being transformed by easier interfaces, GUIs and ROS.

- The prospect of a self-driving vehicle industry disrupting transportation is propelling a talent grab and strategic acquisitions by competing international players with deep pockets.

- 40% of robotic startups have been in the consumer sector and will soon augment humans in high-touch fields such as health and elder care.

BCG also cites the following example of paying close attention to gain advantage:

“Amazon gained a first-mover advantage in 2012 when it bought Kiva Systems, which makes robots for warehouses. Once a Kiva customer, Amazon acquired the robot maker to improve the productivity and margins of its network of warehouses and fulfillment centers. The move helped Amazon maintain its low costs and expand its rapid delivery capabilities. It took five years for a Kiva alternative to hit the market. By then, Amazon had a jump on its rivals and had developed an experienced robotics team, giving the company a sustainable edge.”

Tractica

Robotics Market Forecast – June 2017, 26 pages, $4,200

Drones for Commercial Applications – June 2017, 196 pages, $4,200

AI for Automotive Applications – May 2017, 63 pages, $4,200

Consumer Robotics – May 2017, 130 pages, $4,200

The key story is that industrial robotics, the traditional pillar of the robotics market and long dominated by Japanese and European manufacturers, has given way to non-industrial robot categories like personal assistant robots, UAVs, and autonomous vehicles. The epicenter is shifting toward Silicon Valley, which is becoming a hotbed for artificial intelligence (AI), a set of technologies that is, in turn, driving many of the most significant advancements in robotics. Consequently, Tractica forecasts that the global robotics market will grow rapidly between 2016 and 2022, with revenue from unit sales of industrial and non-industrial robots rising from $31 billion in 2016 to $237.3 billion by 2022. The market intelligence firm anticipates that most of this growth will be driven by non-industrial robots.

Tractica is headquartered in Boulder and analyzes global market trends and applications for robotics and related automation technologies within consumer, enterprise, and industrial marketplaces and related industries.

General Research Reports

- Global autonomous mobile robots market, June 2017, 95 pages, TechNavio, $2,500

TechNavio forecasts that the global autonomous mobile robots market will grow at a CAGR of more than 14% through 2021.

- Global underwater exploration robots, June 2017, 70 pages, TechNavio, $3,500

TechNavio forecasts that the global underwater exploration robots market will grow at a CAGR of 13.92% during the period 2017-2021.

- Household vacuum cleaners market, March 2017, 134 pages, Global Market Insights, $4,500

Global Market Insights forecasts that household vacuum cleaners market size will surpass $17.5 billion by 2024 and global shipments are estimated to exceed 130 million units by 2024, albeit at a low 3.0% CAGR. Robotic vacuums show a slightly higher growth CAGR.

- Global unmanned surface vehicle market, June 2017, Value Market Research, $3,950

Value Market Research analyzed drivers (security and mapping) versus restraints such as AUVs and ROVs and made their forecasts for the period 2017-2023.

- Innovations in Robotics, Sensor Platforms, Block Chain, and Artificial Intelligence for Homeland Security, May 2017, Frost & Sullivan, $6,950

This Frost & Sullivan report covers recent developments such as co-bots for surveillance applications, airborne sensor platforms for border security, blockchain tech, AI as first responder, and tech for detecting nuclear threats.

- Top technologies in advanced manufacturing and automation, April 2017, Frost & Sullivan, $4,950

This Frost & Sullivan report focuses on exoskeletons, metal and nano 3D printing, co-bots and agile robots, all of which are in the top 10 technologies covered.

- Mobile robotics market, December 2016, 110 pages, Zion Market Research, $4,199

Global mobile robotics market will reach $18.8 billion by end of 2021, growing at a CAGR of slightly above 13.0% between 2017 and 2021.

- Unmanned surface vehicle (USV) market, May 2017, MarketsandMarkets, $5,650

MarketsandMarkets forecasts the unmanned surface vehicle (USV) market to grow from $470.1 million in 2017 to $938.5 million by 2022, at a CAGR of 14.83%.
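Most of the forecasts above are quoted as a compound annual growth rate (CAGR). For readers who want to sanity-check such figures, here is a minimal Python sketch of the standard CAGR formula, applied to the MarketsandMarkets USV numbers. The function names `cagr` and `project` are illustrative, not taken from any of the reports, and the sketch assumes five compounding years between 2017 and 2022; the reports may define their forecast periods differently.

```python
def cagr(start_value, end_value, years):
    """Return the compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value, rate, years):
    """Grow start_value at a constant annual rate for the given number of years."""
    return start_value * (1.0 + rate) ** years


# USV market figures quoted above, in millions of dollars.
# Assumption: 2017 -> 2022 is treated as 5 compounding years.
usv_rate = cagr(470.1, 938.5, 5)
print(f"Implied USV CAGR: {usv_rate:.2%}")

# Round trip: compounding the start value at that rate recovers the end value.
print(f"Projected 2022 value: ${project(470.1, usv_rate, 5):.1f} million")
```

Under that five-year assumption, the implied rate comes out at roughly 14.8%, consistent with the 14.83% that MarketsandMarkets cites.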

Agricultural Research Reports

- Global agricultural robots market, May 2017, 70 pages, TechNavio, $2,500

Forecasts the global agricultural robots market will grow steadily at a CAGR of close to 18% through 2021.

- Agriculture robots market, June 2017, TMR Research, $3,716

Robots are poised to replace agricultural hands. They can pluck fruits, sow and reap crops, and milk cows. They carry out the tasks much faster and with a great degree of accuracy. This, coupled with mandates on higher minimum pay being levied in most countries, has spelled good news for the global market for agriculture robots.

- Agricultural Robots, December 2016, 225 pages, Tractica, $4,200

Forecasts that shipments of agricultural robots will increase from 32,000 units in 2016 to 594,000 units annually in 2024 and that the market is expected to reach $74.1 billion in annual revenue by 2024. The report, done in conjunction with The Robot Report, profiles over 165 companies involved in developing robotics for the industry.

by Nicholas Charron

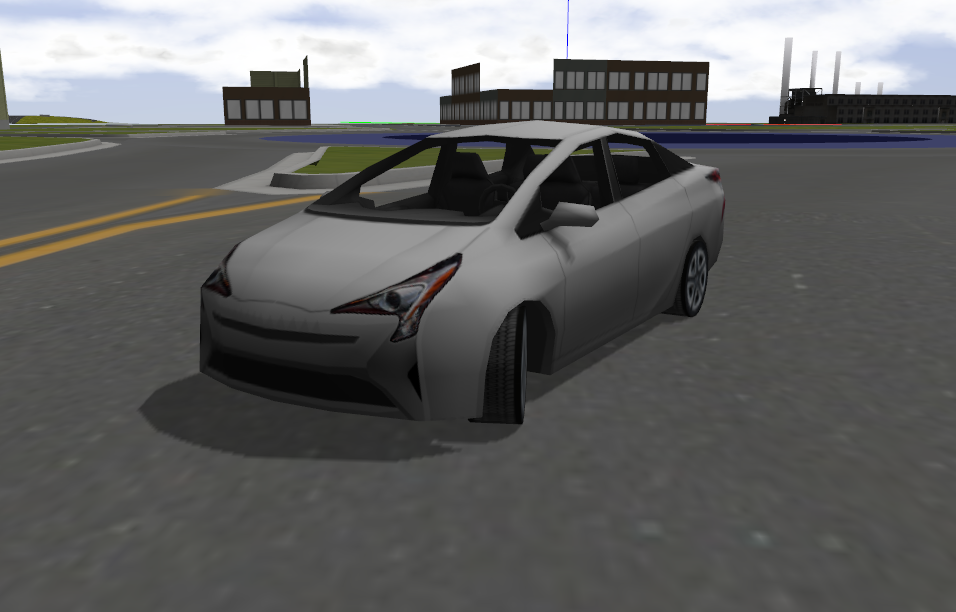

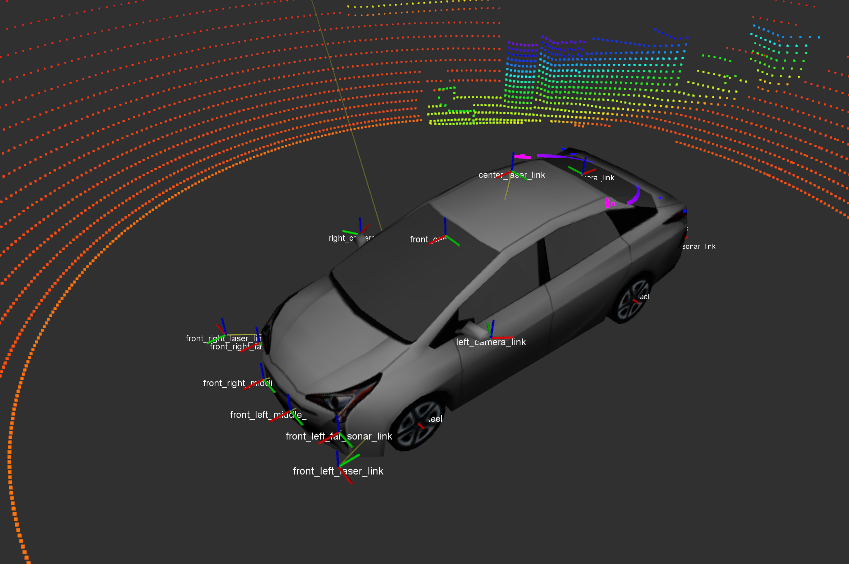

The Apollo platform consists of a core software stack, a number of cloud services, and self-driving vehicle hardware such as GPS, cameras, lidar, and radar.

China Network